What happens to the datacenter when a trillion devices embedded in every manner of product and facility are chatting away with each other, trying to optimize the world? There is a very good chance that the raw amount of computing needed to chew on that data at the edge, in the middle, and in a datacenter – yes, we will still have datacenters – will absolutely explode.

The supply chain for datacenters – including the ARM collective – is absolutely counting on exponential growth in sensors, which ARM Holdings’ top brass and its new owner, Japanese conglomerate SoftBank Group, spent a lot of time discussing in the opening keynote for ARM TechCon in Santa Clara. Because of the hegemony of Intel Xeon processors in the datacenters of the world, a lot of the hopeful talk about the massively computerized and telemetried future that they want to build is focused on the edges, where Intel does not hold sway (at least not yet). We think of all of that Internet of Things stuff as just another data source with an exponentially growing number of bits streaming in, and while we do think that there will be a need to do real-time and long-term analytics on this data, we don’t think it will be so much different in kind from the most sophisticated data analytics and machine learning systems that we have already created. It will just be a much, much bigger pipe. The issue is whether it will grow faster than Moore’s Law advances in computing, storage, and networking.

No one has an answer to that yet, but with some help from ARM executives, we are going to take an initial stab at it as a thought experiment. To do that, we first have to count the devices, and Masayoshi Son, CEO at SoftBank and now the boss of Simon Segars, the CEO at ARM Holdings, brought some numbers to help with that. His company paid $32 billion (a 40 percent premium) to acquire the upstart chip designer, for reasons that Son spent most of his time trying to explain. What the situation boils down to is the network effects that will magnify the value of putting computing into each and every thing. As Segars put it during the keynote, the world already consumes billions upon billions of microcontrollers every year, but very few of these have been connected to share data and processing. Similarly, while cars have hundreds of chips these days, for the most part they are not compute heavy (that will change when they are self-driving) and they are not connected to the network (which will also have to happen).

The ARM collective, which is focused on client devices like smartphones and tablets with a smattering of servers and a plethora of other devices from microcontrollers to cars, pushed around 15 billion chips by itself in 2015, said Segars, and that drove nearly $50 billion in aggregate revenues across that collective. That is five-sixths of an Intel, in terms of revenues, just to give you some perspective. ARM is more a shared chip design engineering department than a chip manufacturer, and its piece of that $50 billion action was about $1.2 billion, which stands to reason given the role – an important one, mind you – that it plays in the ecosystem in defining the architecture and instruction set and giving chip makers a running start if they need it. Designing specific chips for specific workloads, as ARM partners do, and then paying to manufacture the chips takes most of the money, as you would expect.
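To put those proportions in per-chip terms, here is a minimal back-of-the-envelope sketch. It uses only the rounded figures Segars cited (around 15 billion chips, nearly $50 billion in collective revenue, about $1.2 billion for ARM itself), so the results are approximations and the variable names are ours, purely for illustration.

```python
# Rounded 2015 figures quoted from the keynote; all are approximate.
chips_shipped = 15e9        # "around 15 billion chips"
ecosystem_revenue = 50e9    # "nearly $50 billion" across the ARM collective
arm_revenue = 1.2e9         # ARM's own piece, "about $1.2 billion"

# ARM's share of the money flowing through the ecosystem.
arm_share = arm_revenue / ecosystem_revenue

# Average revenue per chip for the collective, and ARM's cut of each chip.
ecosystem_revenue_per_chip = ecosystem_revenue / chips_shipped
arm_revenue_per_chip = arm_revenue / chips_shipped

print(f"ARM share of ecosystem revenue: {arm_share:.1%}")
print(f"Ecosystem revenue per chip:     ${ecosystem_revenue_per_chip:.2f}")
print(f"ARM revenue per chip:           ${arm_revenue_per_chip:.2f}")
```

On these rounded inputs, ARM keeps roughly 2.4 percent of the revenue its designs generate, or about eight cents on an average chip that brings in a little over three dollars for the collective.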

“We are seeing growth from lots of markets, and that is all coming from the innovation that all of us together are creating,” Segars explained. “What that innovation is doing is deploying intelligence, and what is really exciting for me is that there is really no stopping the demand for intelligent silicon, the demand for processor-based intelligent silicon is not going down any time soon, and that is going to drive enormous opportunities for everyone.”

Particularly, it seems, for Son, the founder and CEO at SoftBank, who committed to the multitudes attending TechCon that ARM would continue to provide neutrality to all of the partners in the ecosystem and provide the platform that everybody can rely on. “Don’t worry, I am going to make everybody happy,” Son declared with a bright smile that was absolutely genuine and expected from someone who studied economics and computer science at the University of California at Berkeley and who has amassed, lost, and re-amassed fortunes over the three and a half decades since he founded SoftBank, which owns the Sprint cellular carrier as well as ARM and a bunch of other companies.

Like many of us, Son has a view of distributed computing that is evolving and potentially massive by the standards of what we do today to store and process data. The way that Son sees it, we are on the verge of an IoT Explosion, where a diversity of types of devices will be necessary along with an absolutely immense number of them to provide intelligence all up and down the line from those devices at the edge of the network all the way back to the central datacenter.

We may have to start talking about something called the datanetwork, in fact, if we may be permitted to coin an ugly term. Maybe datanet for short, to contrast with the idea of a datacenter. This datanet will be a different kind of beast, with a workflow that spans across widely separate and perhaps very different types of compute up and down the network from the edge back to the datacenter, with many steps of compute and storage in the hierarchy.

As Jimmy Pike, an analyst at Moor Insights & Strategy and formerly the CTO for Dell’s Enterprise Solutions Group and before that the chief architect of the company’s Data Center Solutions hyperscale server and storage business, succinctly put it in chatting with The Next Platform: “Compute follows the data. If you put data in the cloud, compute has to follow it, for instance. It is not good to ask God for all of the answers – you need to be able to make some decisions on your own.”

This, it seems, is what a datanet, as we are envisioning it, will do.

This is precisely what Son sees happening in computing, and why SoftBank shelled out so much money to acquire ARM.

“The center of gravity of the Internet has migrated from the PC to mobile, and we saw this great evolution in the past several years,” Son explained. “But the next big shift is coming from mobile to IoT. And people ask me what is the synergy between SoftBank and ARM, and why now? Why did you pay a 40 percent premium and why did you pay $32 billion? This is the answer. This is the time, this is the Cambrian Explosion.”

Son was referring, of course, to the 80 million or so years starting around 540 million years ago when life on earth went from a few hundred single-celled species or relatively simple colonies of cells to tens of thousands of complex life forms that resemble the species that we see around us today. Back then, Son said, the trilobite was the first animal to get a sensor – in this case, a rudimentary eye – which allowed it to find prey and avoid being eaten, giving it a tremendous advantage over other animals. The array of sensors that animals eventually evolved gave them all kinds of data about themselves and their environment, and their ganglia and eventually brains evolved to recognize the world, learn from it, and draw inferences about new data as it came in – exactly the kind of thing we are teaching software to do with large datasets and machine learning algorithms as they suck in sensor data from the devices under their purview.

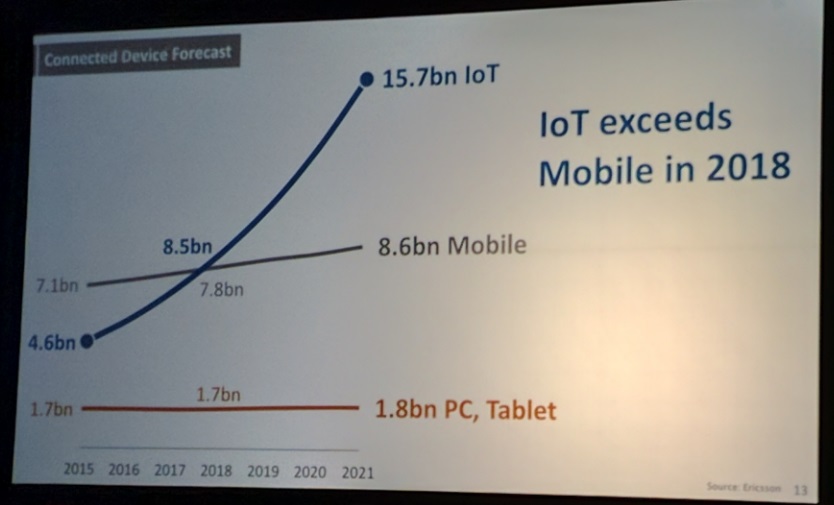

The new owner of ARM brought some data with him to make his case, looking at the installed base of PCs and tablets against smartphones and IoT devices. The first is a big device with heavy network use, the IoT devices are anywhere from tiny to small with bursty but relatively modest packets of data, and the smartphones are somewhere in between.

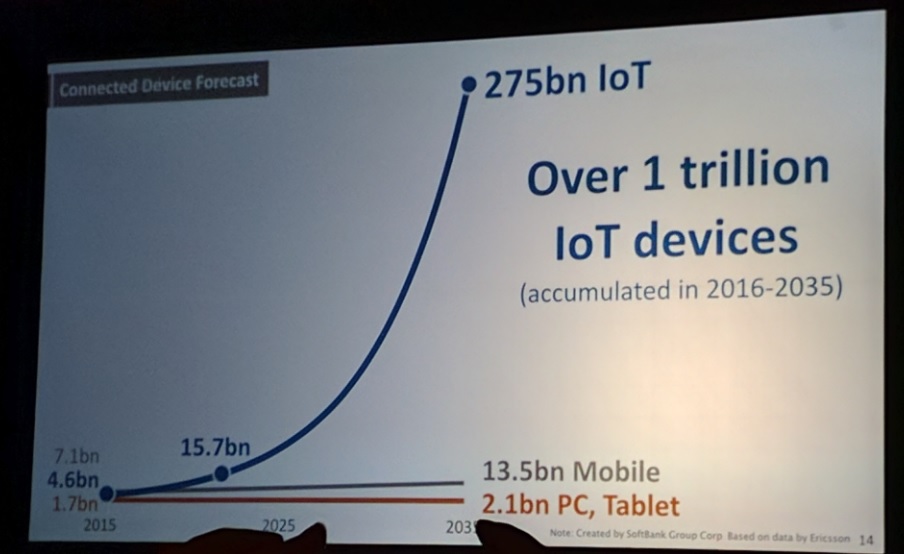

As you can see, the installed base of PCs and tablets is not going to grow much between 2015 and 2021, but in 2018 or so the number of IoT devices installed in the world is expected to exceed the number of smartphones. But that is not all there is to the story. IoT devices are going to keep on growing from there, much faster than the population of Earth, as intelligence is pushed into every little thing. Look at this pretty exponential curve:

These are all cumulative installed base numbers, not annual shipments, but it looks like whoever built this forgot that and summed the IoT figures again to get a cumulative installed base of devices between 2016 and 2035. From our interpretation of this data (and a rough reckoning against projections we could find out on the Internet), the installed base of IoT devices probably won’t hit one trillion until 2037 or so based on this curve. But Son’s point is nonetheless valid: The number of IoT devices out there in 2035, at 275 billion or so, is going to utterly dwarf the number of PCs, laptops, tablets, and smartphones all added together.

(Our thanks to compatriot Chris Williams, the San Francisco bureau chief at The Register, for snapping those pictures for us.)

Leaving aside Son’s contention that all of this telemetry and deep learning and analytics will give us a utopia and actually bring about The Singularity – that is a topic for another day, but we could probably call that The Last Platform if we correctly stayed away from the word “final” – what we want to know is how all of these IoT devices drive a need for compute in the datacenter and in the datanet as we are calling it.

No one seems to know, but the best guesses we could gather up at TechCon seemed to think it would be a lot.

For one thing, that IoT traffic is going to be immense, rising from a total of around 1 EB per year (that’s exabytes) to 2.3 ZB per year (that’s zettabytes) by 2035. That is a 2,450X increase in data traffic relating to IoT, streaming from the edges where devices live back to intermediate compute in the datanet (think of something like a baby Spark streaming cluster) and datacenter computing (which could end up being aggregates of processed data much like today’s data warehouses are). ARM processors can be used in every stage of the compute, from IoT endpoint to the datacenter.
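For readers who want to check the unit conversion, here is a small sketch of the arithmetic. It assumes decimal (SI) units, where one zettabyte is 1,000 exabytes, and it treats "around 1 EB" as exactly 1 EB, which is our rounding and not a figure from the presentation.

```python
# Decimal (SI) storage units, expressed in bytes.
EXABYTE = 10**18
ZETTABYTE = 10**21

# Figures from the presentation; the starting point is only "around" 1 EB.
start_traffic = 1.0 * EXABYTE     # assumed to be exactly 1 EB per year
end_traffic = 2.3 * ZETTABYTE     # 2.3 ZB per year by 2035

# Growth factor using the rounded starting point.
growth_factor = end_traffic / start_traffic
print(f"Growth with rounded figures: {growth_factor:,.0f}X")

# Back out the starting volume that the quoted 2,450X multiple would imply.
quoted_factor = 2450
implied_start_eb = end_traffic / quoted_factor / EXABYTE
print(f"Starting traffic implied by 2,450X: {implied_start_eb:.2f} EB per year")
```

With a starting point of exactly 1 EB the multiple comes out nearer 2,300X, so the 2,450X figure is consistent with a starting volume a bit under one exabyte, around 0.94 EB per year. Either way, the traffic grows by more than three orders of magnitude.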

Trying to figure out just how much computing this IoT explosion will drive, and where it will reside, is not a trivial task, since this market and its applications are so nascent. Like most things in life, we would expect that about 20 percent of the processing will occur back in the datacenter as we know it, with the other 80 percent being spread out across the datanet. All those zettabytes don’t get chewed and swallowed at once, because compute is nearly free but moving data is very, very expensive. You process where you can, and as efficiently as you can, when handling such mind-numbing amounts of data. So in a sense, the compute is going to break outside of the datacenter walls and become even more distributed and, equally importantly, more diverse. Like a Cambrian Explosion, in fact. We think Son has that much right. As for this enabling or causing The Singularity – we will return to that another day. Seriously. We will.

As for the amount of compute this IoT Explosion will drive, we asked ARM CTO Mike Muller what his thoughts were.

“I have done an off of the top of my head calculation,” Muller told us. “You have to think that it is maybe 10,000 IoT devices to a server, and if you say all of the servers in the world are going to take maybe 20 percent of all of the energy supplied, you end up figuring that each server has to run at about 15 watts. Which is doable. It is an order of magnitude better than where we are today. Is there a good business to be had in servers? Yes, I think there is. What is the ratio of IoT devices per server, is it 1 to 10,000 or 1 to 100,000? Who knows. But it will drive traffic data rates and the number of servers. It seems to kind of scale.”
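Muller's ratios are easy to turn into rough server counts. The sketch below is ours, not his: it applies his two device-to-server ratios and his 15 watt figure to the two device counts discussed in this article (275 billion in 2035 and the one trillion milestone). It does not attempt to model the 20 percent energy share he mentions, and the function names are hypothetical.

```python
def servers_needed(iot_devices: float, devices_per_server: float) -> float:
    """Servers implied by a fixed ratio of IoT devices to servers."""
    return iot_devices / devices_per_server


def fleet_power_gigawatts(servers: float, watts_per_server: float = 15.0) -> float:
    """Total draw of the server fleet in gigawatts (15 W is Muller's figure)."""
    return servers * watts_per_server / 1e9


# Device counts taken from the article; the labels are ours.
DEVICE_COUNTS = {
    "275 billion devices": 275e9,
    "1 trillion devices": 1e12,
}
# The two ratios Muller floated: 10,000 or 100,000 devices per server.
DEVICES_PER_SERVER = (10_000, 100_000)

for label, devices in DEVICE_COUNTS.items():
    for ratio in DEVICES_PER_SERVER:
        servers = servers_needed(devices, ratio)
        power = fleet_power_gigawatts(servers)
        print(f"{label}, {ratio:,} per server: "
              f"{servers / 1e6:,.2f} million servers, {power:.3f} GW")
```

At 10,000 devices per server, a trillion devices works out to 100 million servers, which would draw 1.5 gigawatts in aggregate at 15 watts apiece. Move the ratio to 100,000 and the fleet shrinks by a factor of ten, which is why that one unknown matters so much to any model.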

We are going to have a think on all of this for a while. We feel a spreadsheet model coming on. . . .

The problem with all IoT is that no one has a credible solution that solves the software/hardware security issue, and that is its Achilles heel. Just wait for one major disaster to happen and IoT will be dead because of the legislation bomb that will inevitably drop on it.

And if you think ARM TrustZone is the answer, please think again: there have been numerous reports of hacks of it on different vendor SoCs using it.

IoT software security is a reasonable fear, but it’s a solvable problem. Devices on the scale this article is talking about will not be general purpose computing devices but more like immutable hardware. Hackers exploit field-programmability. The IoT devices these guys are excited about have limited purposes and will have to cost pennies to manufacture so won’t have the computing capabilities that hackers require. The real opportunities for hackers will simply be the increased data volumes and server surface areas that the fatter pipes will present. But that’s nothing new; the financial services industry already contends with that kind of thing. The same kind of anti-IoT security arguments were made about cell phones in the late 80s prior to their Cambrian Explosion. When was the last time you heard about a “major disaster” security incident involving the cellular networks?

Actually security is a second order issue. An even more pressing issue is that no-one has a competent story in place for managing and MOVING personal compute clusters.

Today I have eight Bluetooth devices connected to my phone, which is not many when you consider personal items (scale, headphones), car items, home items, etc. And if I switch to a new phone, oh joy, I have to reconfigure every damn one of those items. This is a pain with eight items, it starts to get into the “no freaking way” territory when we’re at the 30 or 40 items point.

Yet this is where the wilder IoT proponents imagine we are headed soon. By 2020 we’re all supposed to be opening our houses with BT locks, switching lamps on and off with BT switches, controlling cameras and HVAC equipment via BT. Nowhere in all this grand futurizing does a SINGLE COMPANY seem to have even a ghost of a plan for how to make this stuff work properly!

By which I mean that it all needs to

– be able to connect to multiple devices simultaneously (my phone, her phone, the kids’ phones)

– be able to connect to multiple of MY devices simultaneously (my phone, my tablet) (this is a different problem — one involves different Google/Apple IDs, one involves different devices with the same Google/Apple ID)

– easily enroll a new device (I buy an iPad, and it IMMEDIATELY knows all my household IoT devices; I buy a smart toothbrush, connect it to my phone, and my tablet immediately knows about it)

– easily detach a device (I sell my iPhone, I sell my smart scale)

– easily upgrade a device (I replace my iPhone 8 with an iPhone 10)

Right now we are nothing close to this level of interop, and no-one seems to even be headed there.

Even Apple, who should be at the cutting edge, are not taking this seriously. Upgrading from one iPhone to another is a PITA, upgrading from one Apple Watch to another is unspeakable in terms of the hassle factor to move your configuration across. And none of these upgrades transfer BT paired information…

Right now it’s all skittles and laughs in IoT land because not enough people have yet had to go through this level of pain. I expect that to change over the next two to three years as a critical mass of IoT devices meets the natural turnover time to new phones…

If ARM Holdings (SoftBank) can get a wider order superscalar server market reference ARM core design with SMT abilities, then ARM Holdings will profit more directly in the server market by offering that IP to a larger customer base. If AMD’s custom K12 ARMv8A ISA running core has as wide an order superscalar design as its Zen x86 cousin has and if K12 has SMT ability then AMD will get more of some of that custom ARM core server pie for itself, unless some other ARM Holdings top tier licensees add SMT and at least a 6 issue custom core design. Apple’s A series starting with the A7 (Cyclone) custom ARMv8A running CPU micro-architecture is at least a 6 instruction issue design, but Apple’s custom cores lack SMT capabilities.

Adding SMT (Simultaneous Multi-Threading) to K12 and a wider order superscalar design (similar to Zen’s wider order cores with their new SMT capability) is what needs to be done to make any custom ARMv8A ISA running design look a little more like IBM’s Power8 RISC ISA designs, which support SMT8 and are very wide with 8 instruction decoders at 10 instructions issued per cycle. Even IBM’s Power9 SMT8, and SMT4 variant, still has the wide order superscalar resources.

SMT is what allows for better CPU core utilization metrics, and any CPU core without SMT is going to be at a competitive disadvantage in the server room, where the closer to 100% utilization of all pipeline cycles the better. Without SMT capabilities in the CPU core’s hardware, and the hardware thread context switching management that comes with them, there is simply not enough ability to keep a CPU’s execution pipelines supplied with productive work and get the most out of the core’s available execution pipeline cycles.

CPU cores that do not have SMT do not have the ability to make better use of their execution resources. If a single processor thread stalls on a single threaded(Non SMT enabled) CPU core, more execution pipeline resources will be wasted. The next step for any custom ARM cores for the server market is to get that SMT capability to compete with the x86, Intel, AMD(Zen), and Power/Power8s/9s, designs that all make use of SMT. SMT and a big back end to do the server workloads.

“If a single processor thread stalls on a single threaded(Non SMT enabled) CPU core, more execution pipeline resources will be wasted.”

This assumes that “execution pipeline” is the most important resource, the thing you want to use most aggressively. This is not necessarily a good assumption. It assumes either

– that the “execution” part of a core uses the most area OR that

– licensing issues require that an SMT core somehow be treated differently (cheaper) than the equivalent number of non-SMT cores.

The problem with SMT is that it requires sharing the cache (and other cache-like resources: branch prediction, TLB entries, maybe registers) and this sharing is not free, it dramatically reduces performance. If your core looks, essentially, like a small amount of execution logic attached to a sea of SRAMs (making up the L1 caches, the branch predictors etc), then from an engineering point of view, it makes far more sense to just duplicate the entire thing (cache and execution) so that each thread gets a full set of SRAMs to play with, not a half (or a quarter) set.

And this is basically what ARM is doing. The most logical way (IMHO) to look at the standard ARM design pattern is that the 4 A72’s (or A73’s or A57’s) making up a single compute cluster and sharing an L2 are, for all practical purposes, a 4-way SMT engine — the L2 unit is the “core” unit, and the details below that of how the L1 caches, registers, etc are split is an implementation detail.

A57, A72, A73 are so area-efficient that this is a perfectly reasonable viewpoint, only it gives you a 4-way SMT engine that scales vastly better than Intel, IBM, or Sun’s SMT scaling — at lower area.

20% of the Xeon E5 26xx market in 2020 is 12 to 15 million processing units.

Mike Bruzzone, Camp Marketing

Well, another reason why we definitely will not see the IoT explosion as everyone is predicting is the sheer number of batteries that would have to be churned out for this; conservative estimates put it at 9.1 million batteries a day.

Not going to happen folks! Unless the power requirements can be filled in a more sensible way

And yet more signs of ARM chips struggling in the server market with this latest news which could spell the end of Applied Micro’s X-Gene following Applied Micro’s acquisition by Macom http://www.eetimes.com/document.asp?doc_id=1330870&page_number=2 quote…

“By contrast, Applied’s X-Gene ARM server business “doesn’t fit in our portfolio even in presence of [its likely future] success,” Croteau said.

The microprocessor unit was making an estimated $55 million annual loss that represented 51% of Applied’s operating expenses while contributing just 1% of its revenues.”