With this summer’s announcement of China’s dramatic shattering of top supercomputing performance numbers using ten million relatively simple cores, there is a perceptible shift in how some are considering the future of the world’s fastest, largest systems. The shift is not happening because the architectural approach the Chinese chose is new or revolutionary; it is happening because an old idea is resurfacing under greater pressure to cut energy use and improve real-world application performance, and because it is now being demonstrated at scale.

One approach, which we will see in the pre-exascale machines at the national labs in the United States, is to build complex systems based on sophisticated cores, with a focus on balance in terms of memory. The Chinese approach with the top-ranked Sunway TaihuLight machine, which uses lighter weight, simple, and cheap components in volume, has proven itself successful both on the Linpack benchmark and on real-world applications, as shown by the Gordon Bell prize submissions and other application performance results the Chinese disclosed.

To be fair, as we highlighted here, while the Top 500 Linpack benchmark results were impressive, and the energy efficiency targets were also exceeded (TaihuLight came in second on the Green 500 rankings), there are some cracks in the façade, at least for now. Most notable was the relatively low ranking on HPCG, the newest benchmark and, for actual application owners at elite supercomputing sites, the more telling one. While TaihuLight does a remarkable job on sheer number crunching, figuring out how to turn that into a more balanced system profile is the real challenge ahead. At the core of that is memory and, as Micron’s Steve Pawlowski tells The Next Platform, when the system designers working on the Sunway system turn an eye toward taking their cheaper, more energy efficient design and tuning it to strike a better bandwidth balance, it will be a real threat, and a change in the narrative, for future exascale computing design.

“We should be focusing on the needs of applications in the core and make memory the focal point because it is the one thing that changes the least in the system in terms of technology and capability. We need to leverage that to build systems based on that premise of memory,” Pawlowski argues. “The Chinese have changed the narrative. They took relatively cheap devices, put them together, and built a high performance system for now. But we shouldn’t assume the next machine they build won’t start looking at solving the overall memory bandwidth and bandwidth per flops problem.”

Holding to Pawlowski’s view, an alternative course they might take is moving away from 260 cores per chip and instead putting 16 cores on each chip and simply using more chips. This would mean the same overall amount of memory while improving bytes per flop and bandwidth per core; there are still only 10 million cores, just spread across more chips, and the system becomes more balanced. This is not the only way to solve such a problem, but what China has figured out, and it is not necessarily new (consider BlueGene, among other examples), is that cheaper, more efficient chips can carry a top-tier system once they are balanced with memory that can scale across the system. That part is still in development, at least based on current HPCG numbers showing abysmal memory performance.
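The arithmetic behind that trade-off can be sketched in a few lines of Python. This is only an illustration, not a model of the actual Sunway hardware: the function name `per_core_bandwidth` is made up for this example, and the per-chip bandwidth of 100 GB/s is a placeholder chosen to show the ratio. The sketch assumes each chip keeps the same memory bandwidth no matter how many cores sit on it.

```python
import math

def per_core_bandwidth(total_cores, cores_per_chip, chip_bandwidth_gbs):
    """Return (chips needed, memory bandwidth per core in GB/s).

    Assumption: every chip has the same memory bandwidth regardless of
    how many cores it carries, so fewer cores per chip means a larger
    share of that bandwidth for each core.
    """
    chips = math.ceil(total_cores / cores_per_chip)
    return chips, chip_bandwidth_gbs / cores_per_chip

TOTAL_CORES = 10_000_000       # roughly the core count cited in the text
CHIP_BANDWIDTH_GBS = 100.0     # hypothetical placeholder, not a measured figure

dense_chips, dense_bw = per_core_bandwidth(TOTAL_CORES, 260, CHIP_BANDWIDTH_GBS)
sparse_chips, sparse_bw = per_core_bandwidth(TOTAL_CORES, 16, CHIP_BANDWIDTH_GBS)

# How much more bandwidth each core sees in the 16-core layout.
improvement = sparse_bw / dense_bw

print(f"260 cores/chip: {dense_chips:,} chips, {dense_bw:.3f} GB/s per core")
print(f" 16 cores/chip: {sparse_chips:,} chips, {sparse_bw:.3f} GB/s per core")
print(f"per-core bandwidth improvement: {improvement:.2f}x")
```

Whatever per-chip bandwidth is plugged in, the ratio between the two layouts is 260/16, or a little over 16x; the placeholder only sets the absolute values. The cost is the chip count, which rises by the same factor.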

It is natural to think that memory maker Micron’s VP of Advanced Computing would be invested in memory as the central component for future systems. But to be fair, Pawlowski is taking a wider view. He spent over 30 years at Intel, leaving as a senior fellow before leading extreme scale efforts at Micron. Looking back at how the paradigm has shifted for HPC centers, he recalls his experience at Intel when the company first launched its multicore strategy. Even then, he said, there were a number of folks who detested the move because before that, when a core was added, memory and I/O could be added alongside to keep the system in balance. “Those systems scaled with the number of cores; now we’re adding more cores but the memory and I/O are not scaling in parallel. Adding more cores per chip continues to amplify that trend.”

Pawlowski says that keeping up with that from a memory perspective is also a problem and “more boutique technologies like faster DRAM interfaces and using organic substrates to keep things close together is only a stop-gap solution.” He says that if we can get past the point of working with an industry standard interface, it is theoretically possible to get a terabyte per second inside the DRAM, but currently the real delivery is more like 8 gigabytes per second at best. “If we architected memory devices differently, we could theoretically bring 128 gigabytes per second of bandwidth out of each memory device. That should be the goal.”
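To put the three figures Pawlowski cites side by side, the short Python sketch below computes how far delivered bandwidth sits from the theoretical internal rate. It assumes decimal units (one terabyte per second read as 1,000 GB/s), and the variable names are illustrative only.

```python
# Bandwidth figures quoted in the text, in GB/s.
# Assumption: "a terabyte per second" is read as 1,000 GB/s (decimal units).
INTERNAL_GBS = 1000.0   # theoretical bandwidth available inside the DRAM
DELIVERED_GBS = 8.0     # what is actually delivered today, at best
TARGET_GBS = 128.0      # proposed goal per re-architected memory device

# Share of the internal bandwidth that reaches the outside of the device.
delivered_fraction = DELIVERED_GBS / INTERNAL_GBS
target_fraction = TARGET_GBS / INTERNAL_GBS

# Improvement the proposed goal represents over today's delivery.
target_gain = TARGET_GBS / DELIVERED_GBS

print(f"delivered today: {delivered_fraction:.1%} of internal bandwidth")
print(f"proposed goal:   {target_fraction:.1%} of internal bandwidth")
print(f"goal vs. today:  {target_gain:.0f}x")
```

On these numbers, even the 128 GB/s goal would expose only a fraction of what is theoretically available inside the device, while still being a sixteenfold step up from what is delivered now.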

This is where theoretical, big picture thinking turns toward how Micron itself is thinking about the problem, or at least about one variant among the potential solutions.

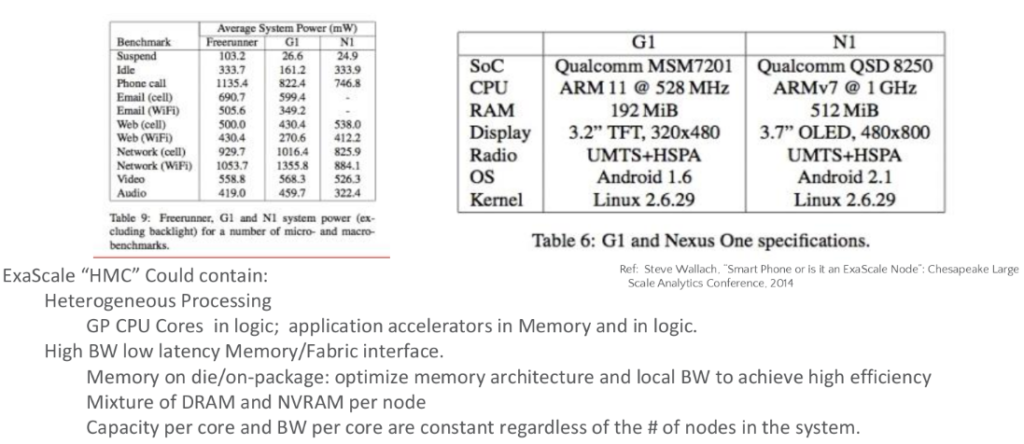

Pawlowski shared some details that put this into real system perspective, describing a conceptual optimized SoC that can “share” computing with a tightly coupled memory system, which could become a Hybrid Memory Cube-style base layer.

The appeal of an SoC with processing on board (ARM or x86) and with access to RAM and other capabilities baked in is that it is relatively cheap and can handle local processing; with memory and an interconnect, it becomes a “brick” from which a highly efficient server can be built. Imagine something like four cores with 8 GB of memory stacked on top and 160 GB/s of bandwidth: it would take little to build a 32-core server from 8 such “bricks” connected via an interconnect on the backside. The point is that, no matter how it is put together, instead of building a 32-core chip with 8 to 16 GB of memory, the memory stays intact. If someone wanted to take such a chip (let’s imagine one teraflop per chip) and string a million of them together, the memory would increase commensurate with the cores, and the capacity per core and bandwidth per core would be the same as with a single chip.
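The scaling property described above can be checked with a small Python sketch. The `Brick` and `System` classes are hypothetical names invented for this illustration; the per-brick figures come from the example in the text. The sketch assumes ideal aggregation, meaning it simply sums capacity and bandwidth across bricks and ignores any interconnect overhead.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Brick:
    """One SoC 'brick', using the example figures from the text."""
    cores: int = 4
    memory_gb: float = 8.0
    bandwidth_gbs: float = 160.0

@dataclass(frozen=True)
class System:
    """A machine built from identical bricks.

    Assumption: totals are plain sums; interconnect costs are ignored.
    """
    brick: Brick
    count: int

    @property
    def cores(self) -> int:
        return self.brick.cores * self.count

    @property
    def memory_gb(self) -> float:
        return self.brick.memory_gb * self.count

    @property
    def bandwidth_gbs(self) -> float:
        return self.brick.bandwidth_gbs * self.count

    @property
    def memory_per_core_gb(self) -> float:
        return self.memory_gb / self.cores

    @property
    def bandwidth_per_core_gbs(self) -> float:
        return self.bandwidth_gbs / self.cores

server = System(Brick(), count=8)            # the 32-core server
machine = System(Brick(), count=1_000_000)   # a million bricks

for name, s in (("server", server), ("machine", machine)):
    print(f"{name}: {s.cores:,} cores, {s.memory_gb:,.0f} GB, "
          f"{s.memory_per_core_gb} GB/core, {s.bandwidth_per_core_gbs} GB/s/core")

# A monolithic 32-core chip with 16 GB, the upper end of the range in the text.
monolithic_memory_per_core_gb = 16.0 / 32
```

Because every brick brings its own memory, the per-core capacity and bandwidth are identical for one brick, eight bricks, or a million. The monolithic 32-core chip, by comparison, ends up with a fraction of the memory per core that the brick-built server has.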

The idea is extensible. An energy efficient SoC with memory capacity and bandwidth at its heart can be the basis for building anything from a cellphone to an exascale system. The same concept scales.

So, the question is, why isn’t this the norm?

Well, this isn’t the way things are now, but as we look to the future, particularly with architectures like the future Knights Hill chips, we are getting closer. One could make the argument that we are in an in-between stage now, where we have only just figured out how to perfect stacking memory and keeping it in tight cahoots with the processor.

The fact is, as Pawlowski argues, we are stuck in a pattern of thinking about performance and efficiency that is missing the real point, at least for future exascale machines. And that point is balance. Vendors are all talking about building balanced systems with various frameworks and data-centric designs, but what is being argued here is more at the system level. The time hasn’t been right, and the stop gaps will start leaking, but there is hope on the horizon, even if the winners haven’t quite emerged yet. It might look like something Pawlowski proposes above, it might be a re-architecting or re-balancing of the Sunway system’s approach, or, most likely, it might look like what the future Aurora supercomputer will be when it finally emerges.
