No one expects setting up management tools for complex distributed computing frameworks to be easy, but there is always room for improvement, and always a chance to take out unnecessary steps and improve the automated deployment of such tools.

The hassle of setting up frameworks such as Hadoop for data analytics, OpenStack for virtualized infrastructure, or Kubernetes or Mesos for software container management is an inhibitor to the adoption of those new technologies. Working with raw open source software and weaving it together into a successful management control plane is not something all enterprises have the skills to do. If Linux, by far the most popular open source software stack yet deployed, teaches us anything, it is that only when Linux is packaged up and made easier to consume can it become widely adopted in the datacenter.

The Kubernetes container management system, which is inspired by the internal Borg and Omega cluster controllers created by Google to run its own infrastructure, is younger than either OpenStack or Mesos as an infrastructure control plane, but its popularity is growing fast and it is finding its place in the datacenter.

“Anything that comes along that can help people get Kubernetes up and running faster is good for the community,” explains Bob Wise, chief cloud technologist on the cloud native computing team at Samsung SDS Research America, which is a big backer of Kubernetes.

The Samsung Group conglomerate, a $305 billion behemoth that makes semiconductors, appliances, electronics, heavy equipment, and chemicals and also provides financial and other kinds of services, was one of the early adopters of OpenStack, and it has become one of the driving forces behind Kubernetes since Google open sourced it in the summer of 2014. The SDS arm of the Korean giant is an IT service provider that generated $7.2 billion of its own revenue and that does two different jobs: it acts as the research and development organization defining the next generation of datacenter infrastructure to support Samsung’s vast and diverse empire, and the 7,000 techies in this division sell their expertise to help others evolve their infrastructure as well.

A company with the breadth and depth of Samsung Group, which has 489,000 employees, is not easy to characterize when it comes to its own IT operations, but SDS is always looking out into the future to make sure Samsung can stay ahead on the technology curve.

“We are the scouts before the wagons,” Wise tells The Next Platform. “We are building out reference architectures that are on a roadmap for how we are going to move this stuff forward. Samsung has what I would call typical IT, in the sense of lots of different things–there’s OpenStack, there’s VMware, there’s bare metal, there’s some AWS. The goal is to have one way of doing it, which is containerized, that spans any infrastructure platform. This is a long term plan for us, and especially for any company of Samsung’s scale, it takes a while.”

The transition that Samsung is starting could take a decade or more, which is not at all surprising to those who have been around the IT industry for a long time. IBM mainframes are more than five decades old and they are still an important component in many large enterprises running transaction processing, even if they are utterly swamped in a sea of X86 servers doing most of the interesting web and analytics work around those transactions.

Building Expertise In Kubernetes

Like any other large organization, Samsung wants to simplify its IT infrastructure as much as possible without sacrificing flexibility. Wise has seen a lot of change in back-end infrastructure over his years in the industry, and there is no question that Seattle has become a hotbed for innovation in infrastructure control software. Amazon Web Services and Microsoft Azure do their infrastructure work there, and Joe Beda, the first engineer at Google to work on its Compute Engine public cloud, is from the area, too. The Kubernetes project launched by Google hails from Seattle as well. (Wise and Beda are co-chairs of the Kubernetes scaling group, which is steering how the container management system is being scaled to the kind of levels a company like Samsung will require.)

Wise was chief technology officer at a music streaming service called Melodeo, which was an early adopter of Amazon Web Services and was acquired by Hewlett-Packard back in June 2010. After moving over to HP, Richard Kaufmann became CTO of the HP Cloud public cloud and Wise was its vice president of engineering. When HPE decided to shut down its public cloud efforts, Kaufmann moved over to Samsung SDS and eventually convinced Wise to rejoin him to work on architecting future compute platforms for the conglomerate.

“Given that we had a lot of battle scars from OpenStack, I had been looking at what some of the alternatives were, and there had been all of this progress with Docker and then Mesos and Kubernetes, and I had come to the conclusion even before joining Samsung that the world had changed and that it was time to think about a new way of doing infrastructure management,” Wise explains. “We are very well experienced with OpenStack, and we don’t think that is the right pick. Even now, I would say that Docker Swarm is not really an operational tool, it is a developer tool, so it has never been a serious contender. So for us it came down to Mesos or Kubernetes, and the key part of our analysis was that Mesos, much like Docker, is vastly dominated by a single contributor, and that is Mesosphere, and the size and energy of the Kubernetes community, even when we were making this bet, was much bigger. So we felt we were making a bet for the future. Mesos is a decent choice, but we think Kubernetes is a better choice.”

That said, Samsung is a big company, and Wise expects that some parts of the organization may want to run OpenStack, or Spark or Hadoop on top of Mesos. No matter what, you don’t want to have dedicated clusters, so the issues are now about how you can deploy Mesos or OpenStack on top of Kubernetes. (You can also run Kubernetes on top of Mesos, which is how Mesosphere does it.)

Samsung has been working to help scale up Kubernetes, and early on, when initial benchmark tests were showing Kubernetes scaling to run across 100 nodes, Samsung was demonstrating how it could be pushed to 1,000 nodes. But scale is not the only issue for large enterprises. Making Kubernetes easier to deploy and use, and therefore helping to make it ubiquitous, is something that Samsung, like Google and the other members of the Cloud Native Computing Foundation that steers it, cares about just as much.

Full Speed Ahead, Cloud Pilot

Having established itself as a leader in the Kubernetes community, and having an interest in helping with the broader adoption of Kubernetes, Samsung SDS worked with Univa on a human factors test to figure out how much faster the Navops Launch implementation of Kubernetes created by Univa was to get up and running compared with going out and grabbing the open source components of the Kubernetes Community Version and standing them up.

The whole premise behind Navops Launch, which just debuted, is that it contains everything needed to create a basic Kubernetes container management stack and is quick and easy to set up. Navops Launch has a subset of Univa’s UniCloud provisioning tool, which was originally created to provision various cloud infrastructure so it can run the Grid Engine workload scheduler that Univa sells for enterprise HPC workloads. The portions of UniCloud that allow it to deploy on bare metal are mixed with Kubernetes, Puppet system configuration tools, Docker containers, and the minimalist Atomic Host Linux from Red Hat to create a complete container management environment. Navops Launch downloads in three containers. If you put it onto a Docker-ready machine, it installs itself and adds a Kubernetes master node and control plane plus the Kubernetes worker nodes running Fedora Atomic Host. At that point you are ready to start adding machines to the cluster to expand the Docker pods.

The work that has been done to make Navops Launch fast will undoubtedly feed back into the open source Kubernetes stack and make it better, and in the meantime, Univa will be offering Launch as a free download and providing support if desired. In addition, Navops Command, which adds advanced scheduling features and policy management capabilities to any Kubernetes distribution, will be available this summer.

“There is an art and science to this kind of work, and we tried to do this from the perspective of the typical skillset of the typical kind of person who would do this kind of work and have a certain amount of technical skills,” says Wise of the benchmarking that Samsung SDS did with Univa. “You have to assume that people have some basic system administration skills, but you don’t have to be a core Kubernetes developer, either.”

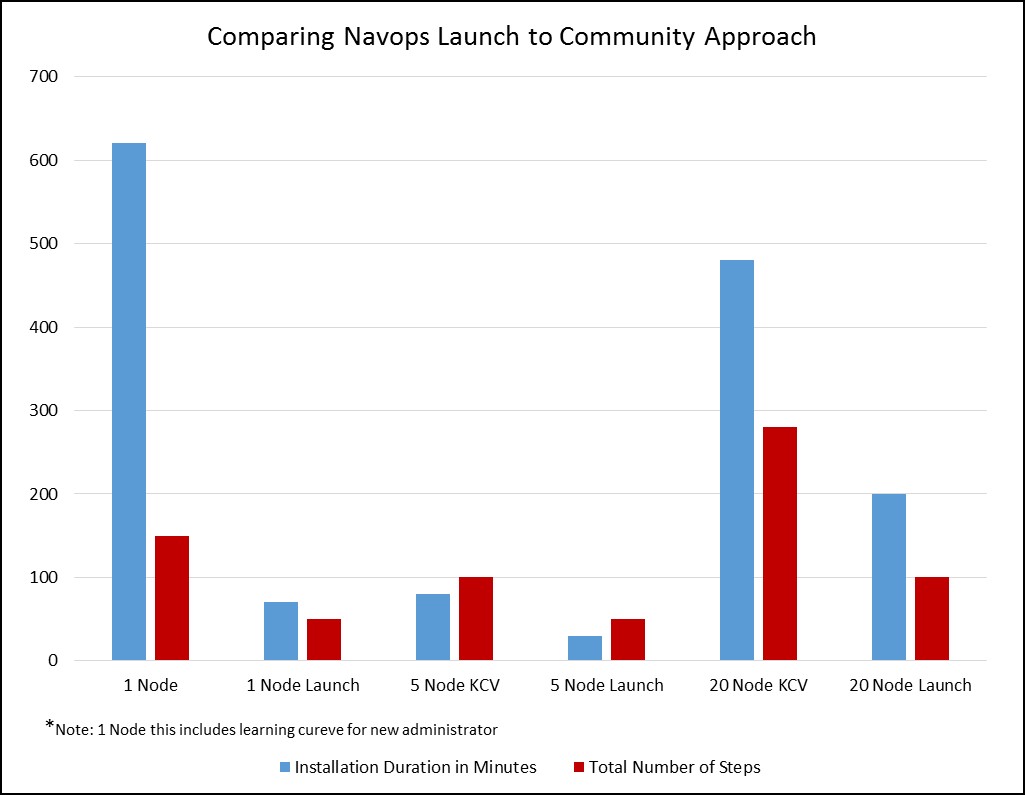

As part of the benchmarking, Samsung SDS looked at the time for the install as well as the number of steps in Web interfaces and command line interfaces that the two approaches took to install one, five, and twenty nodes running Kubernetes. Take a look:

As you can see, the time and step counts for the open source Kubernetes setup were significantly higher for the single node configuration than for the Navops Launch setup. The administrators who did the tests were installing the Kubernetes stacks for the first time, but had knowledge of Linux, Docker, and bare metal provisioning.

With the five-node Kubernetes setups, the system administrators who were subjects of the tests had learned a bit about how to do it, so it was relatively easier to add nodes, and the difference in the number of steps was not as significant as with the initial setups. This is expected. With the twenty-node clusters, the larger node count takes up more time, and Navops Launch still holds a significant advantage.

But both the Kubernetes Community version and Navops Launch scale proportionately to the size of the cluster. Importantly, bare metal provisioning represented 66.4 percent of the time on the Kubernetes Community version for the five-node cluster and 97.1 percent of the time for the twenty-node cluster, compared to 10 percent and 18.8 percent, respectively, for the Navops Launch clusters of the same size. This difference is important, and we think that the lessons learned from Navops Launch about simplifying the bare metal provisioning for Kubernetes will eventually make their way into the open source Kubernetes stack. Exactly how this might happen remains to be seen.
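For readers who want to run the same kind of comparison on their own installs, the share of time spent in a phase such as bare metal provisioning is simply that phase's time divided by the total install time. The Python sketch below shows the arithmetic. The function name and the timings are illustrative assumptions; they are placeholders and not figures from the Samsung SDS benchmark, which reported only the resulting percentages.

```python
def phase_share(phase_minutes: float, total_minutes: float) -> float:
    """Return the percentage of total install time spent in one phase."""
    if total_minutes <= 0:
        raise ValueError("total_minutes must be positive")
    if not 0 <= phase_minutes <= total_minutes:
        raise ValueError("phase_minutes must be between 0 and total_minutes")
    return 100.0 * phase_minutes / total_minutes


# Placeholder timings (hypothetical), NOT data from the Samsung SDS tests.
provisioning_minutes = 30.0
total_install_minutes = 120.0

share = phase_share(provisioning_minutes, total_install_minutes)
print(f"Bare metal provisioning: {share:.1f} percent of install time")
```

Timing each phase separately in this way makes it easier to see whether provisioning or the Kubernetes setup itself dominates as a cluster grows.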
