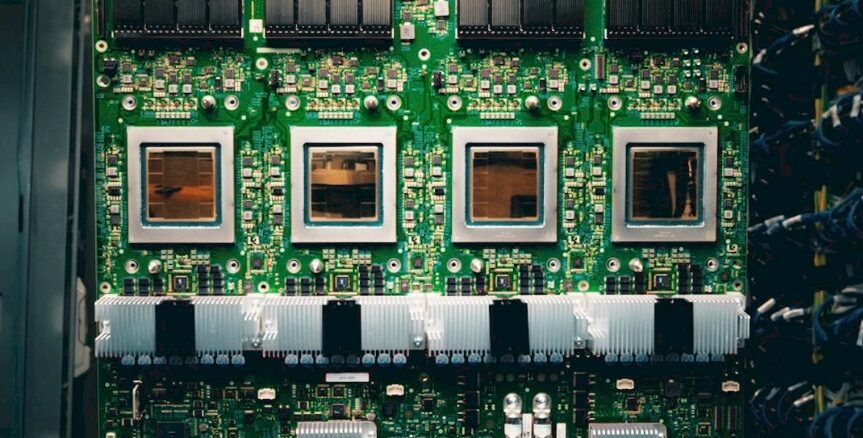

With “Ironwood” TPU, Google Pushes The AI Accelerator To The Floor

If you want to be a leader in supplying AI models and AI applications to the world, as well as the AI infrastructure to run them, it also helps to have a business that needs a lot of AI and can underwrite the development of homegrown infrastructure that can be sold side by side with the industry standard. …