If You Want To Sell AI To Enterprises, You Need To Sell Ethernet

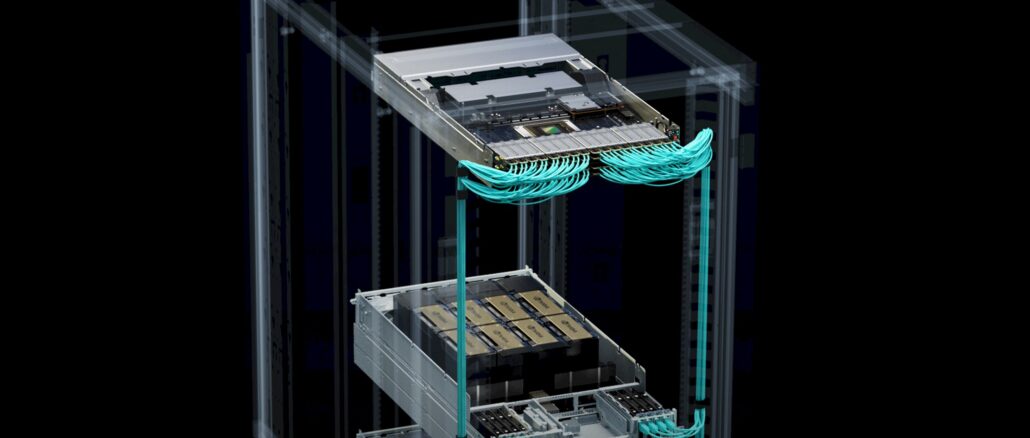

Server makers Dell, Hewlett Packard Enterprise, and Lenovo, the three largest original system manufacturers in the world (ranked in that order), are broadening the range of interconnects they offer to their enterprise customers. …