What Faster And Smarter HBM Memory Means For Systems

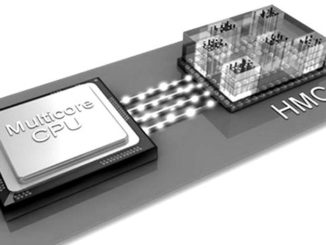

If the HPC and AI markets need anything right now, it is not more compute but rather more memory capacity at a very high bandwidth. …

We have nothing against disk drives. Seriously. And in fact, we are amazed at the amount of innovation that continues to go into the last electromechanical device still in use in computing, which from a commercial standpoint started out with the tabulating machines created by Herman Hollerith in 1884 and used to process the 1890 census in the United States, thus laying the foundation of International Business Machines. …

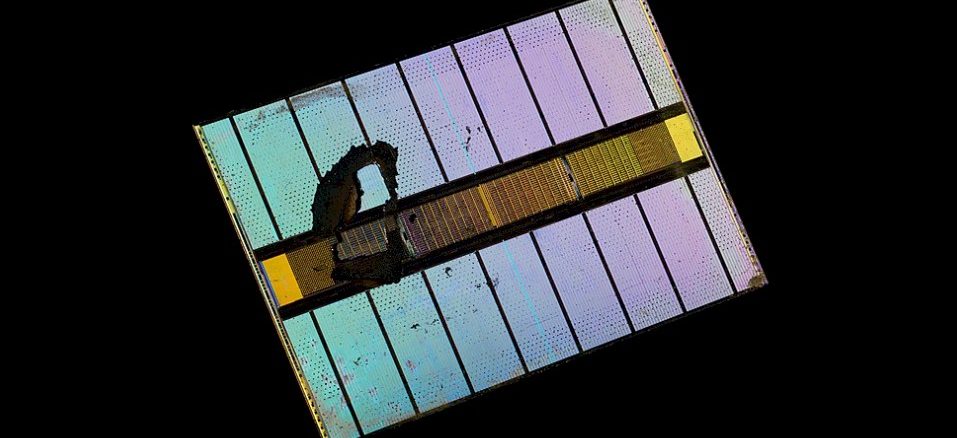

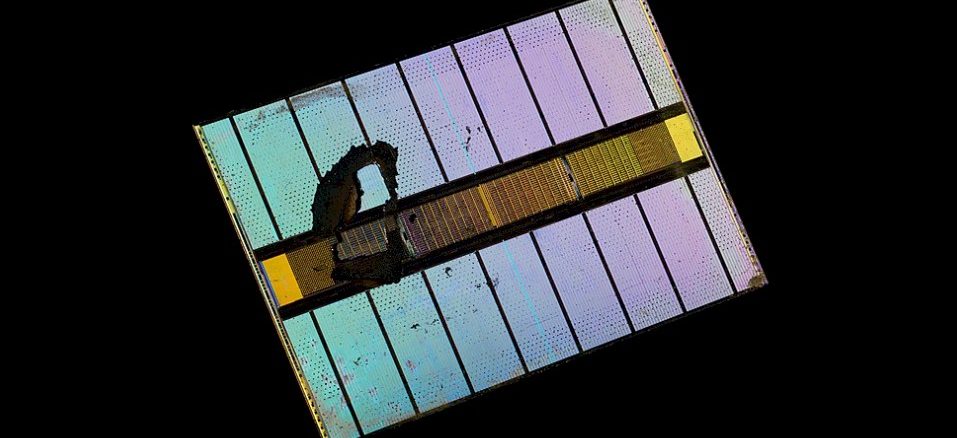

Micron has a habit of building interesting research prototypes that offer a vague hope of commercialization for the sheer purpose of learning how to make its own memory and storage subsystem approaches more tuned to next-generation applications. …

Astronomy is the oldest research arena, but the technologies required to process the massive amount of data created from radio telescope arrays represent some of the most bleeding-edge research in modern computer science. …

There may be a shortage in the supply of DRAM main memory and NAND flash memory that is having an adverse effect on the server and storage markets, but there is no shortage of vendors who are trying to push the envelope on clustered storage using a mix of these memories and others such as the impending 3D XPoint. …

Making the transition from disk storage to flash and other non-volatile media is perhaps more difficult for the makers of storage than it is for customers. …

With this summer’s announcement of China’s dramatic shattering of top supercomputing performance numbers using ten million relatively simple cores, there is a perceptible shift in how some are considering the future of the world’s fastest, largest systems. …

Compute is by far still the largest part of the hardware budget at most IT organizations, and even with the advance of technology, which allows more compute, memory, storage, and I/O to be crammed into a server node, we still seem to always want more. …

For most applications running in the datacenter, a clever distributed processing model or high availability clustering is enough to ensure that transaction processing or pushing data into a storage server will continue even if there is an error. …

All Content Copyright The Next Platform