AI

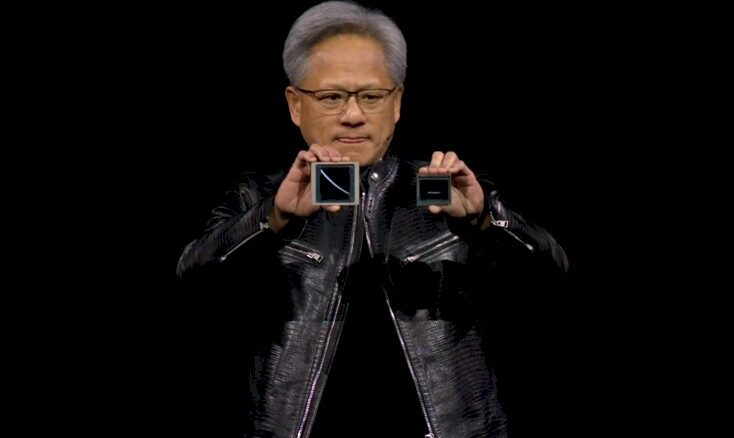

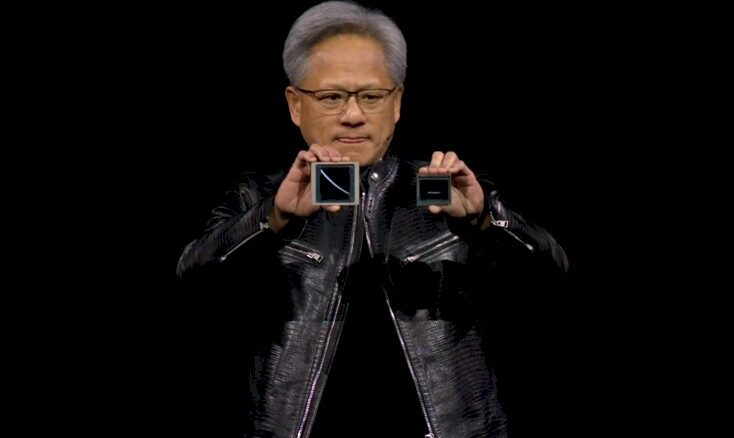

With Blackwell GPUs, AI Gets Cheaper And Easier, Competing With Nvidia Gets Harder

If you want to take on Nvidia on its home turf of AI processing, then you had better bring more than your A game. …

Heaven forbid that we take a few days of downtime. When we were not looking – and forcing ourselves to not look at any IT news because we have other things going on – that is the moment when Nvidia decides to put out a financial presentation that embeds a new product roadmap within it. …

All Content Copyright The Next Platform