AWS Plunks Down $10 Billion For Datacenters In North Carolina

When you drive around the major metropolitan areas of this great country of ours, and indeed in most of the developed countries at this point, you see two things. …

There is a bit of AI spending one-upmanship going on among the hyperscalers and cloud builders – and now the foundation model builders who are partnering with their new sugar daddies to be able to afford to build vast AI accelerator estates to push the state of the art in model capabilities and intelligence. …

If Amazon is going to make you pay for the custom AI advantage that it wants to build over rivals Google and Microsoft, then it needs to have the best models possible running on its homegrown accelerators. …

Think of it as the ultimate offload model.

One of the geniuses of the cloud – perhaps the central genius – is that a big company that would have a large IT budget, perhaps on the order of hundreds of millions of dollars per year, and that has a certain amount of expertise creates a much, much larger IT organization with billions of dollars – and with AI now tens of billions of dollars – in investments and rents out the vast majority of that capacity to third parties, who essentially allow that original cloud builder to get their own IT operations for close to free. …

Here’s a question for you: How much of the growth in cloud spending at Microsoft Azure, Amazon Web Services, and Google Cloud in the second quarter came from OpenAI and Anthropic spending money they got as investments out of the treasure chests of Microsoft, Amazon, and Google? …

Three years ago, thanks in part to competitive pressures as Microsoft Azure, Google Cloud, and others started giving Amazon Web Services a run for the cloud money, the growth rate in quarterly spending on cloud services was slowing. …

We have been tracking the financial results of the big players in the datacenter that are public companies for three and a half decades, but last year we started dicing and slicing the numbers for the largest IT suppliers of stuff that goes into datacenters so we can give you a better sense of what is and what is not happening out there. …

If Microsoft has the half of OpenAI that didn’t leave, then Amazon and its Amazon Web Services cloud division need the half of OpenAI that did leave – meaning Anthropic. …

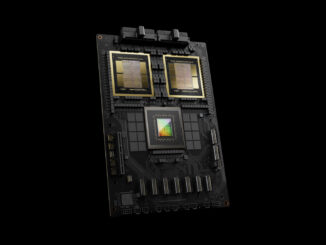

At his company’s GTC 2024 Technical Conference this week, Nvidia co-founder and chief executive officer Jensen Huang unveiled the chip maker’s massive Blackwell GPUs and accompanying NVLink networking systems, promising a future where hyperscale cloud providers, HPC centers, and other organizations of size and means can meet the rapidly increasing compute demands driven by the emergence of generative AI. …

Amazon Web Services may not be the first of the hyperscalers and cloud builders to create its own custom compute engines, but it has been hot on the heels of Google, which started using its homegrown TPU accelerators for AI workloads in 2015. …

All Content Copyright The Next Platform