Everybody knows that companies, particularly hyperscalers and cloud builders but now increasingly enterprises hoping to leverage generative AI, are spending giant round bales of money on AI accelerators and related chips to create AI training and inference clusters.

But just you try to figure out how much. We dare you. The numbers are all over the place. And that is not just because it is tough to draw the lines that separate AI chippery from the stuff surrounding it that comprises a system. Part of the problem with estimating AI market size is no one really knows what happens to a server once it is built and sold and what it is used for. How do you know, for instance, how much AI work or HPC work a machine loaded up with GPUs is really doing?

Back in December, as we were winding down for the holidays, we drilled down into the GenAI spending forecast from IDC, which was fascinating in that it broke out GenAI workloads separately from other kinds of AI and talked about hardware, software, and services spending to build GenAI systems. We, of course, made reference to the original and revised total addressable market forecasts that AMD chief executive officer Lisa Su made in 2022 and 2023 for datacenter AI accelerators of all kinds, including GPUs but also all other chippery.

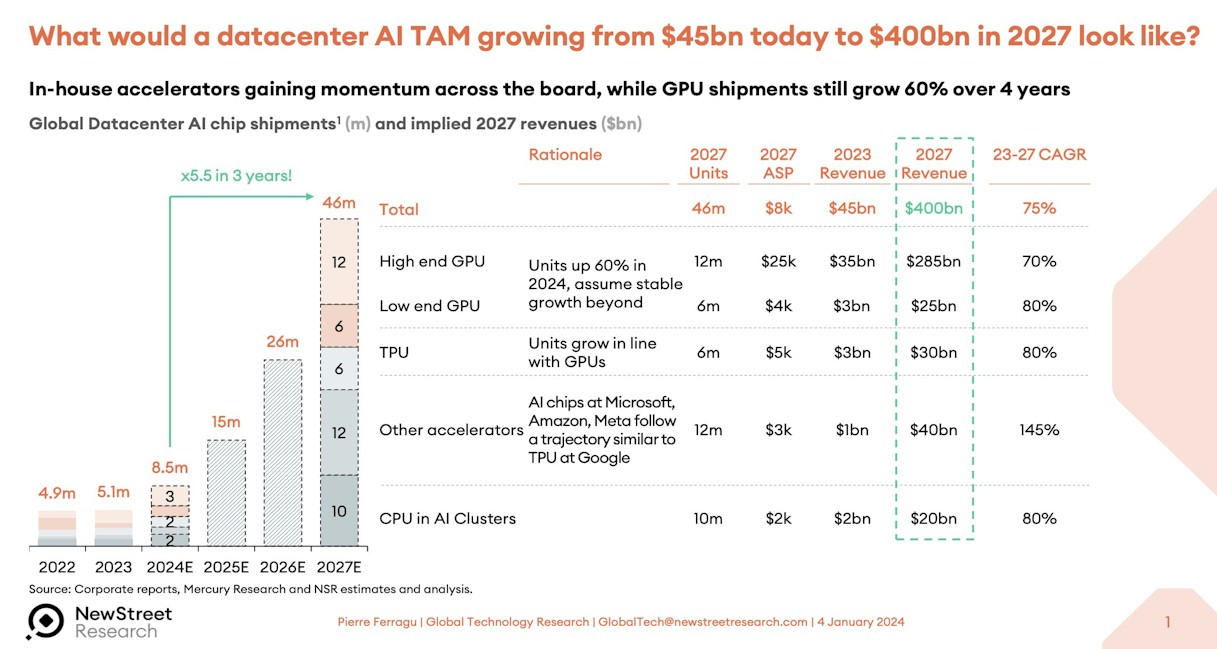

To remind you, Su said the total addressable market for datacenter AI accelerators was on the order of $30 billion in 2023 and would grow at around a 50 percent compound annual growth rate through the end of 2027 to more than $150 billion. But a year later, as the GenAI boom went sonic and the “Antares” Instinct MI300 series GPUs were launched in December, Su said that AMD was pegging the market for AI accelerators in the datacenter at $45 billion in 2023 and that it would grow at a more than 70 percent CAGR out through 2027 to reach more than $400 billion.
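The compounding behind those two TAM figures can be checked with a few lines of Python. This is a minimal sketch, not AMD's model: the function name `project` is ours, and we assume four compounding periods from a 2023 base to 2027 and use the round 50 percent and 70 percent rates as quoted.

```python
def project(base, annual_growth, years):
    """Compound a starting value forward at a constant annual growth rate."""
    return base * (1 + annual_growth) ** years

# Figures quoted above, in billions of US dollars.
# Assumption: 2023 is the base year, so 2023 -> 2027 is four periods.
original_tam = project(30.0, 0.50, 4)
revised_tam = project(45.0, 0.70, 4)

print(f"original forecast: ${original_tam:.1f} billion in 2027")
print(f"revised forecast:  ${revised_tam:.1f} billion in 2027")
```

At exactly 70 percent the revised figure lands a bit under $400 billion, which is consistent with Su describing the growth rate as "more than" 70 percent.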

That’s just for the accelerators – not the servers, the switches, the storage, and the software.

Pierre Ferragu of New Street Research, whose team does very smart work in the tech sector, had a stab at how this $400 billion datacenter accelerator TAM might break down, which they tweeted out:

We still think this is a very large number, which presumes somewhere around $1 trillion in AI server, storage, and switching sales at the end of the TAM forecast period.

To come back down to reality a little, as we started out 2024, we grabbed the GPU sales forecast from Aaron Rakers, managing director and technology analyst for Wells Fargo Equity Research and had some spreadsheet fun. That model covers GPU sales in the datacenter from 2015 through 2022 and had estimates to close out 2023 (which had not yet ended when the forecast was made) and stretched out to 2027. That Wells Fargo model also predated the revised forecasts that AMD has made in recent months, where it says it will make $4 billion on GPU sales in 2024. (We think it will be $5 billion.)

In any event, the Wells Fargo model shows $37.3 billion in GPU sales in 2023, driven by 5.49 million unit shipments for the year. Shipments almost doubled – and include all kinds of GPUs, not just the high-end ones. GPU revenues were up by a factor of 3.7X. The forecast is for 6.85 million datacenter GPU shipments in 2024, up 24.9 percent, and revenues of $48.7 billion, up 28 percent. The 2027 forecast is for 13.51 million GPU units shipped, driving $95.3 billion in datacenter GPU sales. In that model, Nvidia has 98 percent revenue market share in 2023 and only drops to 87 percent share in 2027.
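Dividing revenue by shipments gives a rough blended average selling price for each year in that model. The sketch below uses only the unit and revenue figures quoted above. The resulting ASPs are our own arithmetic, not numbers published by Wells Fargo, and they blend every kind of datacenter GPU, from inference cards to high-end training parts.

```python
# (units shipped in millions, revenue in billions of US dollars), as quoted above
forecast = {
    2023: (5.49, 37.3),
    2024: (6.85, 48.7),
    2027: (13.51, 95.3),
}

def average_selling_price(units_millions, revenue_billions):
    """Blended revenue per GPU in US dollars."""
    return (revenue_billions * 1e9) / (units_millions * 1e6)

asp = {year: average_selling_price(u, r) for year, (u, r) in forecast.items()}

for year, price in asp.items():
    print(f"{year}: roughly ${price:,.0f} per GPU")
```

The blended price stays in the neighborhood of $7,000 per unit across the forecast, which suggests the model expects revenue growth to come mostly from volume rather than from a richer mix.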

That brings us to now. Both Gartner and IDC have recently put out some stats and forecasts for AI semiconductor sales, and it is worth walking through them so we can try to reckon what the AI chip terrain looks like now and might look like some years hence. The publicly available reports from these companies always skimp on the data – they have to make a living, too – but usually say something of value that we can work with.

Let’s start with Gartner. Nearly a year ago, Gartner put out a market study on AI semiconductor sales in 2022 with forecasts for 2023 and 2027, and a few weeks ago it put out a revised study that had sales for 2023 with forecasts for 2024 and 2028. The market study for the second report also had a few stats in it, which we added to create the following table:

Compute Electronics is a category that we presume includes PCs and smartphones, but even Alan Priestley, the vice president and analyst at Gartner who built these models, knows that by 2026, all PC chips sold will be AI PC chips because all laptop and desktop CPUs will include neural network processors of some sort.

AI chips for accelerating servers are what we care about here at The Next Platform, and the revenue from these chips – we presume excluding the value of the HBM, GDDR, or DDR memory attached to them – was $14 billion in 2023 and is expected to grow by 50 percent in 2024 to reach $21 billion. But the compound annual growth rate for AI accelerators for servers is expected to be only about 12 percent between 2024 and 2028, reaching $32.8 billion in sales. Priestley says that custom AI accelerators like the TPU and the Trainium and Inferentia chips from Amazon Web Services (to just name two) only drove $400 million in revenue in 2023 and will only drive $4.2 billion in 2028.
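The growth rates implied by those Gartner endpoints can be backed out directly. This is a sketch using only the dollar figures quoted above; the helper name `cagr` is ours, and the custom accelerator growth rate and revenue shares are our arithmetic, not Gartner's published numbers.

```python
def cagr(start, end, years):
    """Compound annual growth rate implied by two endpoints."""
    return (end / start) ** (1 / years) - 1

# Revenue in billions of US dollars, as quoted above.
server_ai_2023, server_ai_2024, server_ai_2028 = 14.0, 21.0, 32.8
custom_2023, custom_2028 = 0.4, 4.2

growth_2024 = server_ai_2024 / server_ai_2023 - 1
server_cagr = cagr(server_ai_2024, server_ai_2028, 4)  # 2024 -> 2028
custom_cagr = cagr(custom_2023, custom_2028, 5)        # 2023 -> 2028

# Share of server AI accelerator revenue held by custom chips.
custom_share_2023 = custom_2023 / server_ai_2023
custom_share_2028 = custom_2028 / server_ai_2028

print(f"2024 growth:        {growth_2024:.1%}")
print(f"2024-2028 CAGR:     {server_cagr:.1%}")
print(f"custom chip CAGR:   {custom_cagr:.1%}")
print(f"custom share 2023:  {custom_share_2023:.1%}")
print(f"custom share 2028:  {custom_share_2028:.1%}")
```

On these numbers, custom accelerators grow far faster than the category as a whole but still end the forecast period as a small slice of it.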

If the AI chip represents half of the value of a compute engine, and a compute engine represents half of the cost of a system, then these relatively small numbers could add up to a fairly large amount of revenues from AI systems in the datacenter. Again, it depends on where Gartner is drawing the lines, and how you think they should be drawn.
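To put rough numbers on that: if the chip is half of the compute engine and the engine is half of the system, system revenue is four times chip revenue. The sketch below applies that multiplier to the Gartner figures. Both 50 percent ratios are the hypothetical from the paragraph above, not measured values, and the function name is ours.

```python
def implied_system_revenue(chip_revenue,
                           chip_share_of_engine=0.5,
                           engine_share_of_system=0.5):
    """Scale chip revenue up to system revenue under assumed cost shares."""
    return chip_revenue / (chip_share_of_engine * engine_share_of_system)

# Gartner server AI accelerator revenue in billions of US dollars, as quoted.
chip_revenue = {2023: 14.0, 2024: 21.0, 2028: 32.8}

system_revenue = {
    year: implied_system_revenue(chips) for year, chips in chip_revenue.items()
}

for year, systems in system_revenue.items():
    print(f"{year}: ${chip_revenue[year]:.1f}B in chips -> ~${systems:.1f}B in systems")
```

Changing either share moves the result a lot, which is the point of the caveat about where the lines are drawn.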

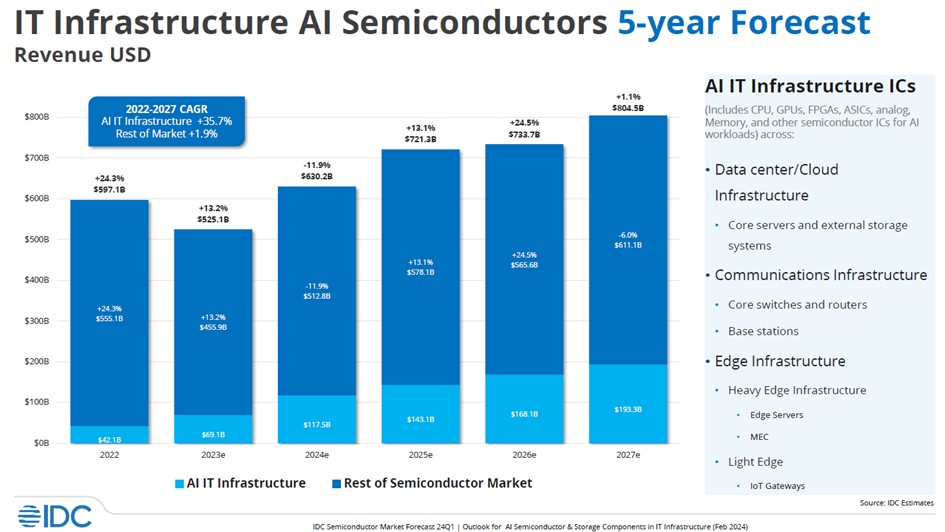

Now, let’s take a look at how IDC is thinking about the AI semiconductor and AI server markets. The company posted this interesting chart a few weeks ago:

In this chart, IDC is putting together all of the revenues from CPUs, GPUs, FPGAs, custom ASICs, analog devices, memory, and other chippery that is used in datacenter and edge environments. Then, it is taking out the compute, storage, switch, and other device revenues as these devices apply to AI training and AI inference systems. This is not the value of all of the systems, but of all of the chips in the systems; so it does not include chassis, power supplies, cooling, motherboards, riser cards, racks, systems software, and such. This chart has actual data for 2022 and is still estimating data for 2023 through 2027, as you can see.

This chart is a little hard to read, so click it to enlarge it if your eyes are strained like ours are. In this IDC analysis, the AI portion of the semiconductor pie grew from $42.1 billion in 2022 to an estimated $69.1 billion in 2023, which is a growth rate of 64.1 percent between 2022 and 2023. This year, IDC thinks that AI chip revenues – and that does not mean just XPU sales, but all chip content that goes into AI systems in the datacenter and at the edge – will rise by 70 percent to $117.5 billion. If you do the numbers between 2022 and 2027, IDC reckons the AI chip content in datacenter and edge systems will see total revenues on the bills of material grow at a compound annual growth rate of 28.9 percent to $193.3 billion in 2027.

The blog post where this chart came from was published at the end of May and is based on a report done in February. So take that time lag into account.

In that post, IDC added in some figures for server revenues, pulling out AI servers distinct from servers used for other workloads. We did some spreadsheet work on raw IDC server numbers to try to figure out AI server spending back in October 2023, but here is some real data.

In 2023, IDC reckons that the number of servers sold worldwide dropped by 19.4 percent to just under 12 million units, but thanks to the ASPs for AI servers being very high – our best guess is between 45X and 55X that of a generic server for supporting boring infrastructure applications – the revenues for AI servers (based on the estimate for 2022 AI server revenues we came up with last year of $9.8 billion) were up by a factor of 3.2X to $31.3 billion, which is 23 percent of the market. IDC is projecting that by 2027, AI servers will drive $49.1 billion in sales. IDC did not say what its most recent forecast for server revenues was for 2027, but at the end of 2023, that number stood at $189.14 billion as we showed here.

By the way, as IDC carves the server market up, machines that do AI on a CPU using the native matrix or vector engines on those CPUs are not considered accelerated and therefore are not considered “AI servers” in our lingo.

In any event, we think that forecast for AI server revenues for 2027 is too low or the forecast for overall server revenues for 2027 is too high – or a mixture of both. We still think that by 2027, AI servers with some sort of acceleration will comprise a little less than half of revenues and we assume there will be a lot of acceleration and generative AI going on in the datacenter.
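The mismatch we are pointing at shows up when the quoted IDC figures are put side by side. This is a back-of-the-envelope sketch: the 2023 market total is derived from the 23 percent share rather than taken from IDC, and the 2027 total is the older end-of-2023 forecast, so the two vintages may not line up exactly.

```python
# Figures quoted above, in billions of US dollars.
ai_servers_2023 = 31.3
ai_share_2023 = 0.23        # AI servers as a share of 2023 server revenue
ai_servers_2027 = 49.1
all_servers_2027 = 189.14   # IDC forecast as of the end of 2023

# Derived values (our arithmetic, not IDC's).
all_servers_2023 = ai_servers_2023 / ai_share_2023
ai_share_2027 = ai_servers_2027 / all_servers_2027
other_servers_2023 = all_servers_2023 - ai_servers_2023
other_servers_2027 = all_servers_2027 - ai_servers_2027

print(f"implied 2023 server market: ${all_servers_2023:.1f}B")
print(f"AI share of 2027 forecast:  {ai_share_2027:.1%}")
print(f"non-AI servers 2023 / 2027: ${other_servers_2023:.1f}B / ${other_servers_2027:.1f}B")
```

Taken together, the two forecasts have the AI share of server revenue moving only a few points between 2023 and 2027, which is well short of the near-half share we expect.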

But that is admittedly a hunch. We will be keeping an eye on this.

So when is the inevitable correction going to happen on all this AI hype? I’m sure there will be some smart applications to come out of this at some point, but the hallucinations you get out of some models I’ve played with have been laughable. If you know anything at all about the subject in question, you’d be horrified. It _sounds_ good, but it’s just not based on reality.

So even though Gartner, IDC and others show numbers going up up up, we all know it’s going to crash back down at some point. It’s just a matter of when the correction happens and how hard. I lean towards more severe, but that’s the pessimist or curmudgeonly instincts kicking in.

I have similar instincts. And selfish ones, too. We have land, can grow food, hunt and fish. I have planted orchards and have a massive raspberry and blackberry garden. We have chickens and the neighbors have beef and a need for some hands to mend fences. That is not an accident, but it is also how I want to live out the final two quarters of my life. I will write as long as it makes money and does something useful, and when it doesn’t, I will turn to politics I guess….?

That sounds lovely, Timothy, but what about the massive raspberry pending in the AI market? No prediction?

It’s hard to say. But I do not believe the high cost of AI is sustainable as it is currently being done. The use cases are limited and the revenue streams are small. I do not use this stuff, and I do not envision I will. I like to do my own thinking. Not everyone feels this way, but these tools have to do a better job for a lot less money. If they do, then I can see a few hundred billion being spent a year on it, maybe even a trillion. But this is not like online shopping destroying small towns and malls — at least not yet. That seemed more inevitable back in the late 1990s and was.

AI in the LLM sense and current state of the art is a data mining tool with a friendly front end. That’s my $0.02.

Bruzzone NVIDIA accelerator on channel supply on financial data;

A100 q1 2022 = 1,589,368 units

A100 q2 2022 = 1,348,612

A100 q3 2022 = 1,151,484

A100 q4 2022 = 948,259

H100 q1 2023 = 366,774

H800 q1 2023 = 103,449

H100 q2 2023 = 983,539

H800 q2 2023 = 245,885

H100 q3 2023 = 773,648

H800 q3 2023 = 190,355

L40_ q3 2023 = 296,223

H100 q4 2023 = 798,539

H800 q4 2023 = 196,479

L40_ q4 2023 = 305,753

GH200 q1 2024 = 168,819

H100 q1 2024 = 685,033

H800 q1 2024 = 225,059

L40_ q1 2024 = 557,067

2022 = 5,037,724 units of A100

2023 = 4,260,644 units here broken out,

H100 = 2,922,500

H800 = 736,168

L40_ = 601,976

I’m also working on consumer dGPU volume. Similar to the industry myth of 20 M so-called servers produced annually, which on the mass of 2017 through 2021 v2/v3/v4 volume pumped out as sales close [?] for their standard platform markets, the syndicate myth that conceals total Nvidia generation volume is about to be revealed.

Doing this exercise in due diligence, it has become apparent why Intel is continuing consumer dGPU field application validation relying on Arc priced at cost. Monopoly substitutes aim for 20% of the market they enter because that’s what it takes to sustain a presence. Intel would not be interested in expending development resources on consumer dGPU when the second player’s so-called annual volume averaged only 8.7 M units annually over the last 5 years.

Mike Bruzzone, Camp Marketing

Right the myth of 20 M servers, IDC says 12 M in 2023?

Intel DCG + NEX on channel AWP < COS / division revenue on a gross basis = 21,606,432 Xeon components

Intel DCG + NEX on channel AWP < COS < R&D < MG&A < restructuring on a net basis = 44,884,979 components

AMD Epyc net = 6,490,264 units, I've only scored AMD on net.

Total servers of all types on a net basis 72,941,675 components / 18 CPUs per rack = 4,052,315 racks composed of 36,470,837 2P sleds.

On a gross basis approximately 28,096,696 components because I score AMD on a net basis regardless, 1,560,928 18 CPU racks that is 14,048,348 2P sleds.

Then one has to ask why channel supply data has AMD and Intel head-to-head on current generation share and the answer is estimating on a net basis for keeping track of bundle deal sales close incentive.

Mike Bruzzone, Camp Marketing