The ProLiant server business is down in the dumps, and the storage business is in a slump. But petascale and exascale supercomputer deals based on the combination of AMD CPUs and GPUs have filled in a lot of the gap. And Hewlett Packard Enterprise is now waiting, like all OEMs and ODMs, to get increased allocations of GPUs so it can ride the generative AI wave into the next year and beyond.

HPE has also created a wave of its own with the Aruba edge networking business, which has been the growth story for the company for the past year and which accounts for nearly half of its operating income these days. Thus far, there has not been a lot of datacenter revenue from this business, but that could very well change in the future. Slingshot, of course, is the interconnect it will preferentially sell for HPC and AI systems.

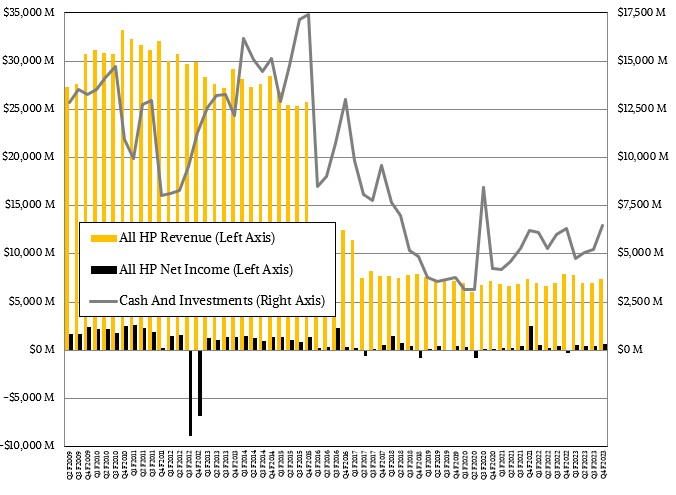

In the fourth quarter of fiscal 2023 ended in October, HPE brought in $7.35 billion in sales, down 6.6 percent year on year but up 5 percent sequentially from Q3 F2023, and the good news is that the company posted net income of $642 million, which compares very favorably indeed to the $304 million loss it had in the year ago period. And the company is sitting on $6.47 billion in cash and equivalents, about what it had this time last year, too.

As we have been saying for quite some time, there is a recession in the server market in terms of both revenues and shipments except in those portions of the market that are employing accelerators for either HPC or AI workloads, and the Compute division within HPE, which peddles those ProLiant machines around the world, is not immune from that recession. If anything, it has been hit pretty hard by it because no matter how it feels, generative AI has not really gone mainstream within enterprises – meaning they are not yet building out their GenAI infrastructure even if they are doing proofs of concept – and even if they tried to take it mainstream they would not be able to get the GPUs to do it. Not with the hyperscalers, cloud builders, and HPC centers of the world hogging all of the GPUs.

As best we can figure from the Omdia data released this week, HPE, Dell, and Lenovo are not even among the upper echelon of companies to get allocations of the “Hopper” H100 GPUs in 2023 as the top clouds and hyperscalers have pushed everyone else out of the Nvidia trough. No OEM or ODM is among the top twelve to get allocations in 2023, with Meta Platforms and Microsoft reportedly each getting 150,000 H100 units, with Google, Amazon Web Services, Oracle, and Tencent getting 50,000 units each, and the next tier down getting between 20,000 and 40,000 H100s this year. Tesla, at number twelve, is said to be getting 12,000 H100s this year. (The story cited above says Nvidia sold a half million units in Q3 of 2023, but the Omdia chart says it is for expected H100 shipments for all of calendar 2023.)

And so, it is no surprise to us that Antonio Neri, chief executive officer at HPE, is telling Wall Street that very little of its sales and orders for machines with “accelerated processing units,” which can be GPUs, CPUs and GPUs sharing a socket, or other kinds of accelerators, were driven by AI workloads per se, but rather for HPC systems sold under the Cray brand in the company’s HPC & AI division. HPE can still sell ProLiant servers based on prior “Ampere” A100 GPUs from Nvidia, but even still, Neri said a tiny portion of the Compute division revenues came from AI workloads.

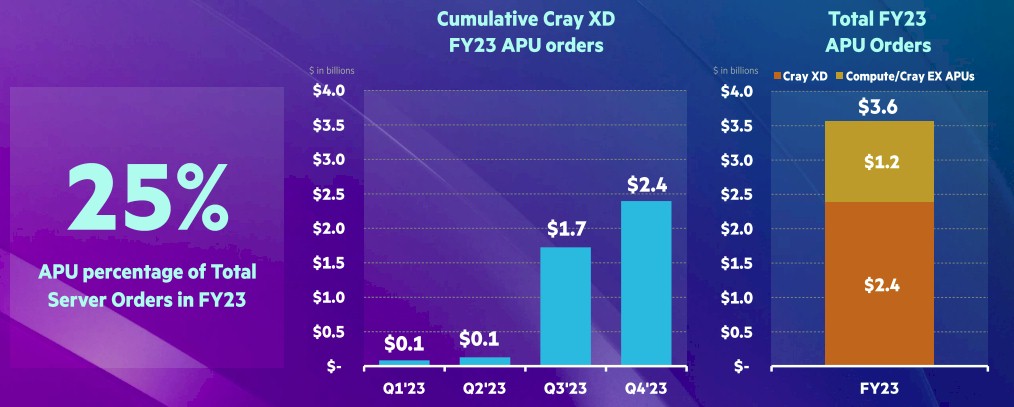

That said, HPE booked $600 million in incremental AI system orders in the last twelve days of the final quarter of fiscal 2023, which tells you how this business is ramping, and ended the year with $3.6 billion in cumulative orders for HPC and AI gear across its Compute and HPC & AI divisions.

The Cray XD line, which is not the “Shasta” Cray EX line of machines deployed for exascale systems in the United States and Europe, had $2.4 billion in cumulative orders in fiscal 2023, and it looks like very little of it has been fulfilled so far. (Orders fulfilled in fiscal 2023 were placed in fiscal 2022.) There was another $1.2 billion in orders for Cray EX systems or more generic ProLiant and Apollo platforms sold in HPE’s Compute division. Almost none of this AI action is coming from the Compute division, but that will change as HPE forges tighter relationships with an Nvidia that can double or triple its GPU volumes and as AMD ramps up its “Antares” MI300 series of GPUs. And even the APU action that is coming from the HPC & AI division is admittedly – and HPE is admitting this – for HPC systems that may also do double duty on AI training and inference workloads.

HPE may be capturing the AI demand, as the chart above suggests, but it has yet to fulfill it. Luckily, that AI order pipeline is just getting larger and larger, and so is the opportunity for HPE and its OEM competitors.

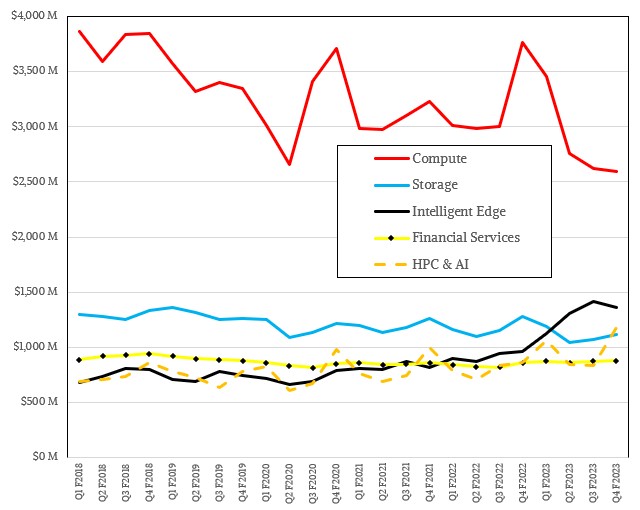

In the final quarter of fiscal 2023, the Compute division saw sales collapse by 31.1 percent to $2.6 billion, and operating income fell by an even more drastic 54.5 percent to $255 million. Sales of ProLiant machinery (and a smattering of networking gear not in the Aruba line) fell by 1.1 percent sequentially. For the full year, the Compute division posted sales of $11.44 billion, down 10.5 percent, and operating income was off 12.4 percent to $1.57 billion. Server makers can talk all they want about how this is just a digestion phase after eating a lot of servers in fiscal 2022, but we think that generative AI has caused companies to lock down their server spending almost as tightly as during the peak of the Great Recession back in 2009 so they can free up budgets to invest in AI on the cloud and eventually with their own on premises gear.

HPE said that unit shipments in the Compute division fell by 35 percent year on year and that average selling prices were down in the “low single digits” as commodity prices dropped and the revenue mix did, too. And that is, we think, because HPE is struggling to get GPU allocations from Nvidia.

“The compute segment is going through a cyclical period where customers are consuming prior investments, making this a price competitive market for now,” Neri explained on the call. “As we prepare to capture a greater share of AI linked inferencing opportunities, we saw overall demand improved moderately in the second half and are encouraged in our outlook for this segment.”

No kidding. If you can get a matrix math engine, you can sell it with a markup.

At $1.18 billion in sales, the HPC & AI division of HPE set its highest sales level in its history, and was nearly 1.6X that of the $724.7 million peak that the formerly independent Cray pulled in during 2015, when it only brought $27.5 million to the bottom line. We don’t have a net income for the HPC & AI division, but we can tell you that the operating income against that $1.18 billion was only $55 million, which means the net income is even lower, perhaps on the order of $28 million the way we play games with the operating and net income at the current HPE. So the supercomputing business, speaking broadly as both HPC and AI mushed together, is 1.6X as large and still only about as profitable at the net level as Cray was back in 2015.
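The two comparisons in that paragraph are simple ratios, and a quick sketch makes them easy to re-check. The figures are the ones quoted above, in millions of dollars; the variable and function names are our own and purely illustrative.

```python
# Figures quoted in the text, in millions of US dollars.
hpc_ai_q4_sales = 1180.0       # HPC & AI division sales, Q4 F2023
hpc_ai_q4_op_income = 55.0     # HPC & AI division operating income, Q4 F2023
cray_2015_sales = 724.7        # independent Cray's peak year
cray_2015_net_income = 27.5    # what Cray brought to the bottom line in 2015


def cents_on_dollar(income, sales):
    """Express income as cents earned per dollar of sales."""
    return 100.0 * income / sales


# How much bigger the Q4 HPC & AI quarter is than Cray's 2015 peak.
size_ratio = hpc_ai_q4_sales / cray_2015_sales

print(f"HPC & AI Q4 sales vs Cray 2015: {size_ratio:.1f}X")
print(f"HPC & AI Q4 operating margin: "
      f"{cents_on_dollar(hpc_ai_q4_op_income, hpc_ai_q4_sales):.1f} cents on the dollar")
print(f"Cray 2015 net margin: "
      f"{cents_on_dollar(cray_2015_net_income, cray_2015_sales):.1f} cents on the dollar")
```

Note that the HPE figure here is an operating margin and the Cray figure is a net margin, so the two are not strictly like for like.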

We have said it before, and we will say it again: Send HPE flowers, because it is doing HPC for love as much as it is doing it for money.

To be fair, as we like to be, the HPC & AI division, with $1.18 billion in sales, had growth of 37 percent in the quarter, and operating income was up 83.3 percent to that $55 million. The arrows are moving in the right directions. It is just that HPC is only moderately more profitable than being an ODM. (4.7 cents on the dollar compared to maybe 3 cents.) For the full year, which is the best gauge for the HPC business anyway, the HPC & AI division had sales of $3.91 billion, up 22.6 percent, and operating income was up by 4.3X to $47 million. That is only 1.7 cents on the dollar for the year in terms of operating profit and 1.3 cents on the dollar at the net income level. OK, that is worse than an ODM. Maybe send HPE some chocolates, too.

HPE might be excited about its Alletra file and object storage and the very good growth it is having, but the rest of the storage business is not doing so hot. Storage revenues in Q4 of fiscal 2023 were down 12.8 percent to $1.11 billion, and operating income was off 54.1 percent to $90 million. A couple more quarters like this and HPE will have to buy another storage company. Nutanix? (Market capitalization $10.8 billion.) Pure Storage? (Market capitalization $11.9 billion.) But no, HPE doesn’t want to keep doing this over and over. Alletra, with its significant software and services component, appears to be the right thing to sell alongside cloudy server slices a la GreenLake.

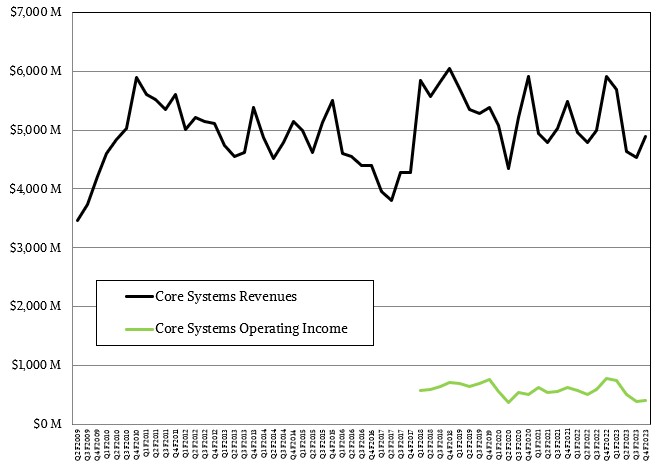

HPE has been through a lot in two and a half decades, but perhaps the best way to think about the company is to look at its core systems business and see how that has fluctuated with the times, as shown in the chart above. By our math, that core HPE systems business accounted for $4.89 billion in sales in Q4, down 17.2 percent, and had an operating profit of $400 million, down 49.1 percent. None of this is surprising in a server recession caused in large part by generative AI investments – particularly as companies shifted harder to generative AI investments as fiscal 2023 progressed.
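As a rough check on that math, the Q4 core systems figures match the sum of the Compute, HPC & AI, and Storage numbers quoted earlier. The sketch below assumes that three-division definition of "core systems", which the quoted numbers are consistent with but which is an inference on our part; the dictionary layout and names are illustrative only.

```python
# Q4 F2023 division figures quoted in the text, in millions of US dollars.
# Assumption: "core systems" = Compute + HPC & AI + Storage.
# Sales figures are rounded as quoted (Compute is given only as $2.6 billion).
q4_divisions = {
    "Compute":  {"sales": 2600.0, "op_income": 255.0},
    "HPC & AI": {"sales": 1180.0, "op_income": 55.0},
    "Storage":  {"sales": 1110.0, "op_income": 90.0},
}

# Roll the three divisions up into one core systems line.
core_sales = sum(d["sales"] for d in q4_divisions.values())
core_op_income = sum(d["op_income"] for d in q4_divisions.values())
core_margin_pct = 100.0 * core_op_income / core_sales

# Per-division operating margin, then the rolled-up total.
for name, d in q4_divisions.items():
    margin_pct = 100.0 * d["op_income"] / d["sales"]
    print(f"{name:9s} operating margin: {margin_pct:.1f} percent")

print(f"Core systems: ${core_sales / 1000:.2f} billion in sales, "
      f"${core_op_income:.0f} million operating income, "
      f"{core_margin_pct:.1f} percent margin")
```

On these rounded inputs the core margin works out to a little over 8 percent, with the HPC & AI division pulling the blended figure down.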

For the full 2023 fiscal year, that core systems business did better, with revenues of $19.76 billion, down 4.3 percent, and operating income of $2.05 billion, down 17.3 percent.

Looking ahead to fiscal 2024, we think there is every prospect that some AI training and a lot of AI inference will take off among the enterprise customers that are served by the OEMs and that GPU allocations will increase and those orders on the books that HPE showed above can be filled. Hopefully at a much higher profit margin in the Compute division than the HPC & AI division has been able to get. That core systems business is running operating income at somewhere around 8 percent of revenue right now, and it needs to get back to something above 13 percent.

Dear HPE-Cray,

Please accept this heartfelt bouquet of virtual flowers, and virtualized chocolate box, as tokens of my deep appreciation for your unparalleled dedication to HPC and related parallel computing! Your ceaselessly outstanding philosophy of engineering excellence is second to none and uniquely impressive with 67x performance improvements in just 10 years, going from #1 Titan (18 PF/s, 2012) to #1 Frontier (1200 PF/s, 2022)! May your Slingshot interconnect continue to inspire young Davids to successfully challenge the Goliaths whose only goal is to drain us of our earthly possessions and wallets! May the upcoming 10th Anniversary of your partnership be filled with joyful excitement and a harbinger of many more decades filled with the most impressive of HPC innovations!

Storagewise, might an innovative Rabbit be “magically” pulled out of a buccaneer’s hat? ( https://www.nextplatform.com/2023/06/07/talking-novel-architectures-and-el-capitan-with-lawrence-livermore/ )

Rabbit should be commercialized. No question.

Flowers and chocolates to HPE-Cray from me as well! I’ll take 2024’s expected perf of Aurora and El Capitan though (2 EF/s) to predict 222 EF/s from HPE-Cray by 2036! Coupled with MxP that’ll be 1 to 2 ZettaFlops of effective oomph … keep up the great work!

The main theme is not exaflops of power… efficiency is the main goal.

HP tried with the memristor and the work of HP scientist S. Williams, but it was a semi-fiasco. Intel, IBM, Fujitsu, and other tech goliaths also tried, with similar outcomes. I believe that without efficient memory tech, the effort to make the total digital system efficient will never succeed.