Because they are at the front of the line for acquiring Nvidia datacenter GPUs, the hyperscalers and cloud builders are going to benefit mightily from the shortage of matrix math engines that can train AI models and run inference against them. And it looks like Amazon Web Services, the cloud computing arm of the online retailer, entertainer, and advertiser, is going to be one of the big beneficiaries of the generative AI boom that is trying to sweep through the datacenters of the world.

The GenAI boom is different from the Dot Com boom in one important way. Back in the late 1990s and early 2000s with the Dot Com boom, the era-defining Web applications of the time, including search and online content and advertising and the very beginnings of social media, could all be run on an X86 server running Linux, which was without a doubt the cheapest data storage and compute platform the world had ever seen. With the GenAI boom, training the large language models that underpin chatbots requires the most expensive supercomputing nodes the world has ever seen, and for the biggest LLMs it can take tens of thousands of GPUs several months to chew through the corpus of text data from planet Earth to train those models.

The Web was democratic from the beginning thanks to its modest compute requirements. GenAI is elitist from the get-go because of the high cost and shortage of GPU compute and of the low-latency, high-bandwidth networking required to create and use LLMs. And while many cloud providers are talking about how customers prefer to use cloud GenAI rather than build their own systems, given the dearth of AI software and model expertise in the world and the even scarcer top-end GPU compute, customers really don’t have much of a choice but to go to a cloud and sign up for whatever GPU instance allocations and whatever tools they can get their hands on that give them a head start on building GenAI into their applications.

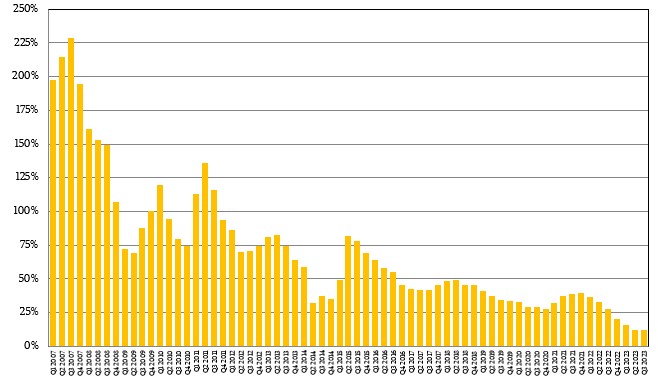

The shortages of AI skills and GPU compute engines is the best thing that could have happened for companies like Amazon and its AWS cloud. And the financial benefits that accrue to AWS – buy GPUs relatively low and rent them for very high – are starting to show up in the company’s financial results. It might even reverse the AWS growth rates, although it will probably never be in the 25-plus percent range again, much less the triple digits the company saw earlier in its life:

The GenAI uplift could not have come at a better time, with IT shops the world over being jumpy about the state of the global economy for more than a year as inflation went through the roof and war erupted between Ukraine and Russia. Since the middle of 2022, many AWS customers have been “optimizing” their spending on the AWS cloud, and indeed other cloud providers have seen the same thing happening among their customers. Like all online services, it is easy to start spending on AWS, but it is less easy to keep track of spending and even harder to figure out what to keep and what to shut down. But for the past five quarters now, this is precisely what AWS customers have done even as they think about new workloads like GenAI.

“The AWS year-over-year growth rate continued to stabilize in Q3,” Andy Jassy, chief executive officer at Amazon and formerly general manager of AWS, said on a conference call with Wall Street analysts going over the third quarter 2023 results for Amazon. “And while we still saw elevated cost optimization relative to a year ago, it has continued to attenuate as more companies transition to deploying net new workloads. Companies have moved more slowly in an uncertain economy in 2023 to complete deals. But we are seeing the pace and volume of closed deals pick up and we’re encouraged by the strong last couple of months of new deals signed. For perspective, we signed several new deals in September with an effective date in October that won’t show up in any GAAP reported number for Q3 but the collection of which is higher than our total reported deal volume for all of Q3. Deal signings are always lumpy and the revenue happens over several years, but we like the recent deal momentum we are seeing.”

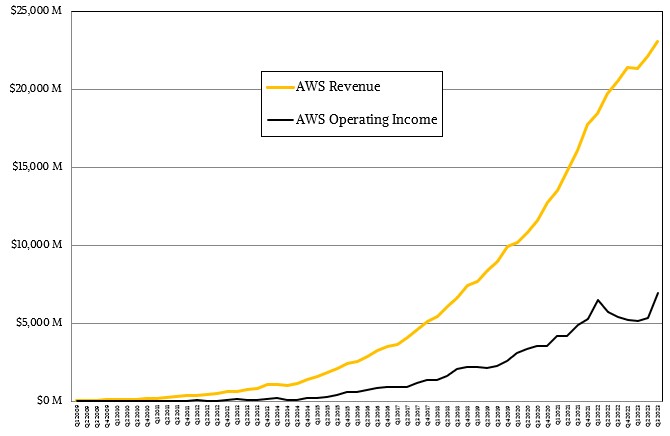

You can see the dip in spending as the rationalization and optimization of AWS capacity began in 2022, and you can see the consequent drop in operating income, too. And then you see the revenue rise again and eventually the operating income follows:

What you are very likely seeing is the generative AI bump on the revenue side, with GenAI costs as well as capacity optimizations pushing down profits on the operating income side – and then suddenly, the profits shoot up as the revenue continues to climb. Those P5 GPU instances based on the Nvidia “Hopper” H100 accelerators ain’t cheap, and we think this is a big reason why AWS profits are not only climbing, but have hit a new high in terms of absolute dollars and have tied the previous high as a percentage of AWS revenues, too. We expect GenAI to continue to drive revenues and profits.

In the quarter ended in September, AWS revenues were up by 12.3 percent to $23.06 billion, which is nearly the same growth rate that it had in Q2 2023. But operating profit, at 30.3 percent of revenues, was up 29.1 percent year on year. That is in the same ballpark as the operating income growth that AWS enjoyed from Q1 2020 through Q2 2022, but in Q3 2022 revenue growth started to slow and eventually profit levels started to decline. Don’t worry, AWS didn’t shift to losses, and operating income was roughly a quarter of revenues even during the optimization downturn. AWS sells hardware as software and commands a very high software-style premium for it, even when it is under pressure.
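To make the margin arithmetic concrete, here is a minimal back-of-the-envelope sketch that backs out the implied dollar figures from the four numbers quoted in this paragraph: $23.06 billion in revenue, 12.3 percent revenue growth, a 30.3 percent operating margin, and 29.1 percent operating income growth. The function name is our own invention, and the outputs are implied estimates derived from rounded percentages, not figures reported by Amazon.

```python
def implied_aws_q3_figures(revenue_bn: float, revenue_growth: float,
                           op_margin: float, op_income_growth: float) -> dict:
    """Back out implied dollar figures from the percentages quoted in the text.

    Hypothetical helper: growth rates and margins are fractions
    (0.123 means 12.3 percent); dollar amounts are in billions.
    """
    op_income = revenue_bn * op_margin                    # this quarter's operating income
    prior_revenue = revenue_bn / (1 + revenue_growth)     # implied year-ago revenue
    prior_op_income = op_income / (1 + op_income_growth)  # implied year-ago operating income
    prior_margin = prior_op_income / prior_revenue        # implied year-ago operating margin
    return {
        "op_income_bn": op_income,
        "prior_revenue_bn": prior_revenue,
        "prior_op_income_bn": prior_op_income,
        "prior_margin": prior_margin,
    }


if __name__ == "__main__":
    figures = implied_aws_q3_figures(23.06, 0.123, 0.303, 0.291)
    for name, value in figures.items():
        print(f"{name}: {value:.3f}")
```

Run as-is, the sketch implies operating income of roughly $6.99 billion this quarter against roughly $5.41 billion a year earlier, on year-ago revenue of about $20.53 billion. That puts the year-ago margin at about 26.4 percent, which squares with operating income being roughly a quarter of revenues during the downturn.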

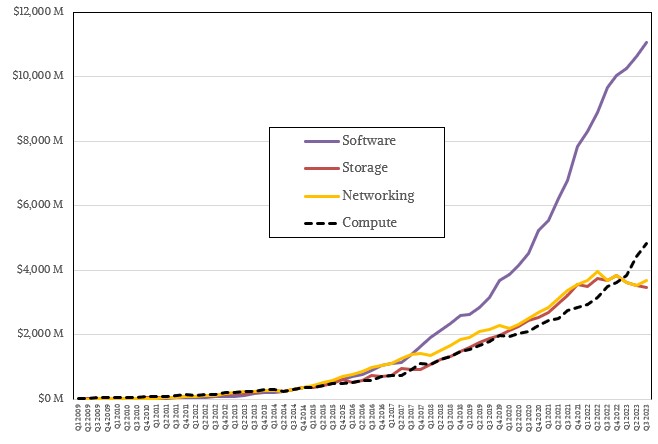

We think that the actual AWS software stack, which it sells as myriad services, is also wickedly profitable, but there is no way to prove it because Amazon doesn’t talk about it separately. But if it did, a breakdown of software, compute, networking, and storage services might look like this:

We think the Bedrock generative AI service, which brings together Amazon’s own LLMs as well as those from Anthropic and Meta Platforms, is going to make a lot more money than the custom Elastic MapReduce or hosted Cloudera Enterprise Hadoop data chunking and processing services ever did. Hadoop is a joke compared to LLMs. But Bedrock is the same kind of animal, to be sure, in that it seeks to take a complex thing and make it easier, for a premium.

We also think there is an uptick in compute revenues that is being driven by the GenAI boom, which AWS has been able to capitalize upon because it has been investing heavily in datacenters and gear even as it cuts back on fulfillment centers and other capital expenses in its other operations.

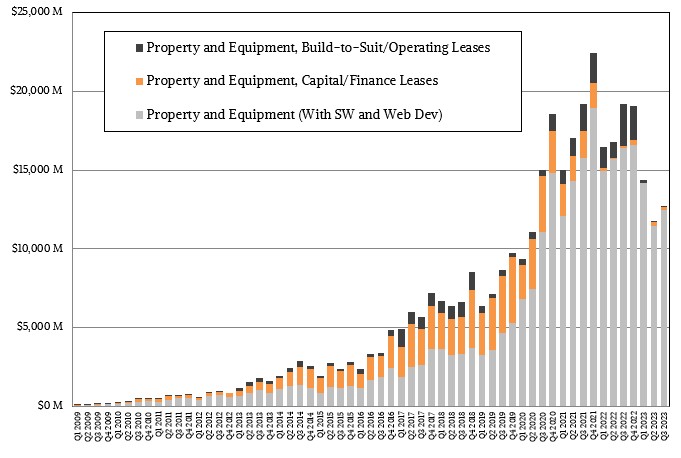

The capital expense bill at AWS has been going down pretty fast, and it is, as best as we can tell, not due to a slump in datacenter infrastructure spending. Here is how the Amazon capex per quarter is reported by the parent company:

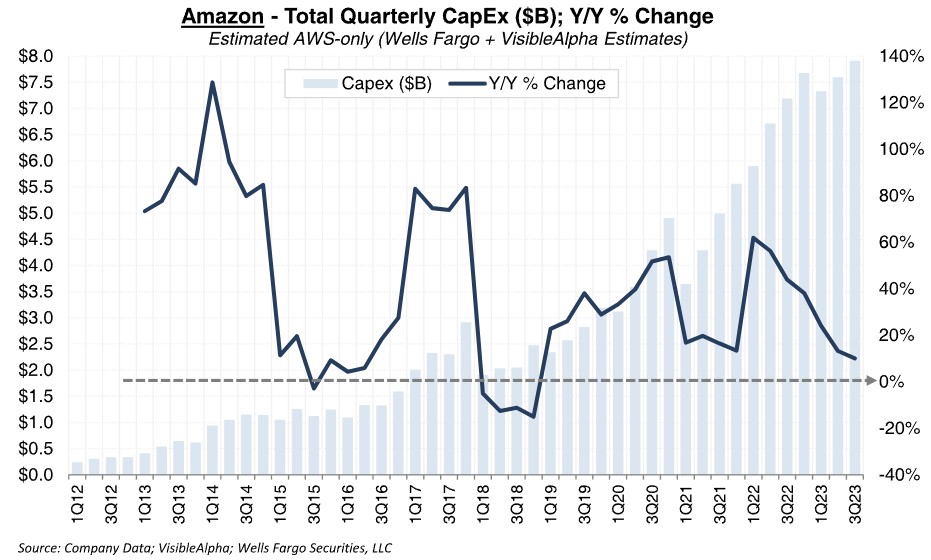

This chart has never been particularly useful to those of us analyzing AWS, but this one, created by Ken Gawrelsi at Wells Fargo Securities, is plenty useful since it shows estimated capex by AWS only:

As far as Gawrelski can tell, AWS capex was up 10 percent to around $7.9 billion, which works out to around 62 percent of total capex spending for Amazon, up considerably from the 43.9 percent of capex that AWS accounted for in the year-ago period. In recent years, AWS was around 40 percent of capex compared to 60 percent for transportation, fulfillment centers, and office space. The ratios seem to have flipped, and you can blame the high cost of Nvidia H100 GPUs and InfiniBand networking for that. We would not be surprised if Amazon spent $4 billion last quarter on AI systems, or about half of its total AWS capex. The significantly lower shipments of CPUs from Intel and AMD and the rise of homegrown Graviton Arm server CPUs certainly support such a hypothesis.
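The capex shares can be cross-checked the same way. This rough sketch takes the Wells Fargo estimates as inputs (about $7.9 billion of AWS capex, 10 percent growth, a 62 percent share of Amazon's total this quarter versus 43.9 percent a year ago) plus our own speculative $4 billion for AI systems. The helper name is hypothetical, and the outputs are implied totals, not reported numbers.

```python
def capex_cross_check(aws_capex_bn: float, aws_growth: float,
                      aws_share_now: float, aws_share_prior: float,
                      ai_spend_bn: float) -> dict:
    """Derive implied Amazon-wide capex totals from AWS capex estimates.

    Hypothetical helper: shares and growth rates are fractions,
    dollar amounts are in billions.
    """
    aws_capex_prior = aws_capex_bn / (1 + aws_growth)  # implied year-ago AWS capex
    total_now = aws_capex_bn / aws_share_now           # implied Amazon total this quarter
    total_prior = aws_capex_prior / aws_share_prior    # implied Amazon total a year ago
    return {
        "aws_capex_prior_bn": aws_capex_prior,
        "total_now_bn": total_now,
        "total_prior_bn": total_prior,
        "total_change": total_now / total_prior - 1,   # year-on-year change in total capex
        "ai_fraction": ai_spend_bn / aws_capex_bn,     # speculative AI share of AWS capex
    }


if __name__ == "__main__":
    result = capex_cross_check(7.9, 0.10, 0.62, 0.439, 4.0)
    for name, value in result.items():
        print(f"{name}: {value:.3f}")
```

With these inputs, the sketch implies total Amazon capex of about $12.7 billion this quarter against about $16.4 billion a year earlier, a drop of roughly 22 percent even as the AWS slice grew. The speculative $4 billion works out to about 51 percent of AWS capex, which is the "about half" above.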

We also think that at least some of the optimization going on at AWS customers is the shift from X86 instances to Graviton instances. That shift is possible where applications run atop Linux, and it is even easier if they are built on open source middleware and other tools and are homegrown, so they can be recompiled for Arm Linux, or if they are written in any of a number of interpreted languages and can run in that language’s virtual machine atop Arm Linux.

Jassy actually had something to say about this and the other optimizations that companies are doing.

“We are still seeing elevated customer optimization levels than we have seen in the last year or year before this, I should say,” Jassy conceded. “So if you look at the optimization, it’s more than a year ago but it’s meaningfully attenuating from where we’ve seen in the last few quarters. And if you look at the optimization too, what’s interesting is it’s not all customers deciding to shut down workloads. A very significant portion of the optimization are customers taking advantage of enhanced price/performance capabilities in AWS and making use of it. So for example, if you look at the growth of customers using EC2 instances that are Graviton-based, which is our custom chip that we built for generalized CPUs, the number of people and the percentage of instances launched that are Graviton-based as opposed to Intel- or AMD-based is very substantially higher than it was before. And one of the things that customers love about Graviton is that it provides 40 percent better price/performance than the leading X86 processors. So you see a lot of growth in Graviton. You also see a lot of customers who are moving from the hourly on demand rates for significant portions of their workloads to one-year to three-year commitments, which we call savings plans.”
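To see why a move to Graviton shows up as "optimization" on the AWS top line, here is a small sketch that assumes "40 percent better price/performance" means 1.4 times as much work per dollar. Under that assumption, the same work costs 1/1.4 of what it did, or roughly 29 percent less, and that difference is revenue AWS forgoes even though the customer never left. The function and the $100,000 monthly bill are hypothetical illustrations, not AWS pricing.

```python
def cost_after_migration(current_cost: float, price_perf_gain: float) -> float:
    """Cost of doing the same amount of work after a price/performance gain.

    Assumes price_perf_gain is the fractional increase in work per dollar
    (0.40 means 40 percent more work for each dollar spent).
    """
    return current_cost / (1 + price_perf_gain)


if __name__ == "__main__":
    monthly_x86_bill = 100_000.0  # hypothetical monthly spend on X86 instances
    graviton_bill = cost_after_migration(monthly_x86_bill, 0.40)
    savings = 1 - graviton_bill / monthly_x86_bill
    print(f"Graviton bill: ${graviton_bill:,.0f} ({savings:.1%} lower)")
```

Run as-is, this prints `Graviton bill: $71,429 (28.6% lower)`. Note that a 40 percent price/performance gain does not mean a 40 percent lower bill; the saving is 1 - 1/1.4.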

When it comes to GPUs, at this point all you can get is a layaway plan. . . . Unless, of course, you are one of the Super 8.
