When it comes to deploying Arm in the cloud, a lot of the talk of late has centered on things like efficiency, core density, or predictability of performance.

However, Amazon Web Services believes that its Arm chips can compete on performance and price/performance, and in the very picky HPC market at that, with its Graviton3E processors.

AWS showed off its third-gen Graviton processors in early 2022. The 55-billion-transistor part packed 64 Arm-compatible cores running at 2.6 GHz and fueled by 300 GB/sec of memory bandwidth courtesy of a move to speedier DDR5 memory. The cloud colossus followed that up in November 2022 with the Graviton3E, which was tuned for HPC and networking jobs with optimizations for floating point and vector math. Amazon says the chip is about 35 percent faster than the standard Graviton3 in both floating point and vector calculations, and about twice as fast in the Linpack benchmark.

Alongside the CPU, Amazon also showed off an updated Elastic Fabric Adapter (EFA) low-latency network interface for stitching multiple Graviton instances together.

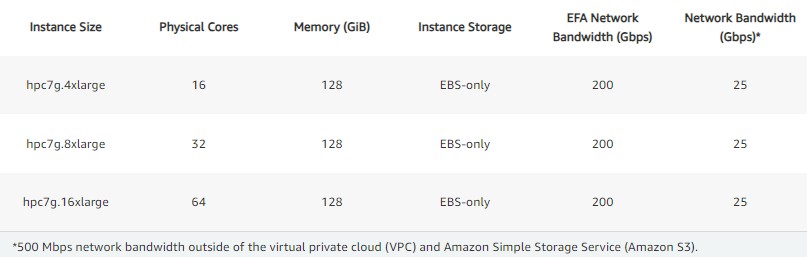

Both of these products are at the heart of AWS’s Hpc7g instances, which are available in three SKUs with your choice of 16, 32, or 64 Graviton3E cores. Beyond that, the instances are all pretty much the same, with support for Amazon’s Elastic Block Store service, 128 GB of DDR5 memory, and 200 Gb/sec networking by way of the aforementioned EFA.

According to Amazon, this sameness is intentional. The idea is that, by opting for lower core-count instances, customers can tune for a specific ratio of memory or network bandwidth per core, since the memory and networking stay fixed while the core count changes. These smaller instance sizes will probably be advantageous for those running software that is licensed per core, too.
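To make the per-core trade-off concrete, here is a minimal sketch that divides the fixed instance resources quoted above (128 GB of memory, 200 Gb/sec of EFA networking) by each core count. The function name `per_core_ratios` is purely illustrative, and the figures are simple divisions of the quoted specs, not measured bandwidth.

```python
# Fixed per-instance resources, as quoted in the article.
MEMORY_GB = 128       # DDR5 capacity per Hpc7g instance
NETWORK_GBPS = 200    # EFA network bandwidth, in gigabits per second


def per_core_ratios(cores):
    """Return (GB of memory per core, Gb/sec of network per core)."""
    return MEMORY_GB / cores, NETWORK_GBPS / cores


# The three Hpc7g sizes differ only in core count.
for cores in (16, 32, 64):
    mem, net = per_core_ratios(cores)
    print(f"{cores:>2} cores: {mem:.1f} GB/core, {net:.3f} Gb/sec/core")
```

On these numbers, the 16-core size works out to 8 GB and 12.5 Gb/sec per core, against 2 GB and 3.125 Gb/sec per core on the 64-core size.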

As with the cloud provider’s older AMD and Intel-based Hpc6 instances, the company expects customers to use these instances like nodes in a cluster, as opposed to individual VMs. In the launch release, the company described distributing workloads requiring “tens of thousands of cores” across its Hpc7g instances, so it appears Amazon is ready to support some decently large clusters.

Of course, Amazon is hardly the first to pit Arm cores against HPC workloads. For two years, the Fugaku supercomputer at RIKEN Lab in Japan held the top spot on the Top500 ranking with its 48-core Arm-based Fujitsu A64FX processor, which is crammed with great gobs of vector performance.

Amazon says it is now working with RIKEN to create a “virtual Fugaku” that runs on the cloud provider’s Hpc7g instances to support the institute’s growing demand for compute resources.

While live, Amazon’s Hpc7g instances are still somewhat limited. The company says clusters of instances are confined to single availability zones, and at the time of publishing, are only running in Amazon’s US East region in northern Virginia.

Does the Graviton3E also have the SVE vector width of the A64FX (for code compatibility with the Virtual Fugaku)?

I think by definition it has the Neoverse V1 vector engines, not the ones used in A64FX.

If I remember correctly, A64FX has 2x512b (2x64 bytes), Neoverse V1 (Graviton3) has 2x256b (2x32B), and V2 (Grace) has 4x128b (4x16B). The combined vector width of the Neoverse cores matches the common cache line size of 64B (A64FX’s SVE data would require 2 lines), and they should consume much less power than Fugaku’s SVE units (whose energy efficiency was not as good as that of some other HPC machines).
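As a quick check of that arithmetic, the sketch below multiplies the number of vector pipes by the width of each pipe, taking the commenter's recalled figures at face value. The table and the helper `bytes_per_cycle` are illustrative names, and the result is only the combined vector width per core, not a measure of delivered performance.

```python
# (number of vector pipes, width of each pipe in bits), as recalled
# in the comment above; these are not taken from vendor datasheets.
VECTOR_UNITS = {
    "A64FX": (2, 512),
    "Neoverse V1 (Graviton3)": (2, 256),
    "Neoverse V2 (Grace)": (4, 128),
}

CACHE_LINE_BYTES = 64  # the "common" cache line size the comment refers to


def bytes_per_cycle(pipes, width_bits):
    """Combined vector width across all pipes, in bytes."""
    return pipes * width_bits // 8


for name, (pipes, width_bits) in VECTOR_UNITS.items():
    total = bytes_per_cycle(pipes, width_bits)
    lines = total / CACHE_LINE_BYTES
    print(f"{name}: {total} bytes wide in total, {lines:g} x 64B line(s)")
```

Under those assumptions both Neoverse designs come out at 64 bytes in total, and A64FX at 128 bytes, or two 64-byte lines.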