In Nvidia’s decade-and-a-half push to make GPU acceleration core to all kinds of high performance computing, a key component has been the CUDA parallel computing platform, which made it easier for developers to create applications that can leverage graphics chips for general-purpose processing.

The GPU maker correctly foresaw that libraries and algorithms, together with a consistent programming model, needed to be in place if GPU compute was to become commonplace, even if not perfectly easy. Quantum computing will need the same approach if it is to succeed, though with a slightly different twist.

“In 2006, before the launch of CUDA, there were domain scientists leveraging GPUs to accelerate their work, but there were very few, and that’s because they had to program in graphics and shader APIs for GPU assembly in order to gain access to the disruptive performance provided by GPU acceleration,” Timothy Costa, director of Nvidia’s HPC and quantum computing product business, tells The Next Platform. “From this perspective, what Nvidia did by launching CUDA is usher in a revolution in accessibility to a disruptive compute technology – at that time, the GPU – to the typical domain scientist.”

In the ensuing sixteen years, GPU acceleration has become commonplace in supercomputers in particular and in all kinds of HPC systems in general, even more so with the rise of workloads such as AI training, AI inference and analytics, and it is fueling intense competition among Nvidia, Intel and AMD as well as the rise of other accelerators, from FPGAs to data processing units (DPUs). We’ve estimated that GPUs account for as much as 90 percent to 95 percent of the computational power in the datacenter servers in which they’re installed.

Eight of the top ten supercomputers on the Top500 list run accelerators.

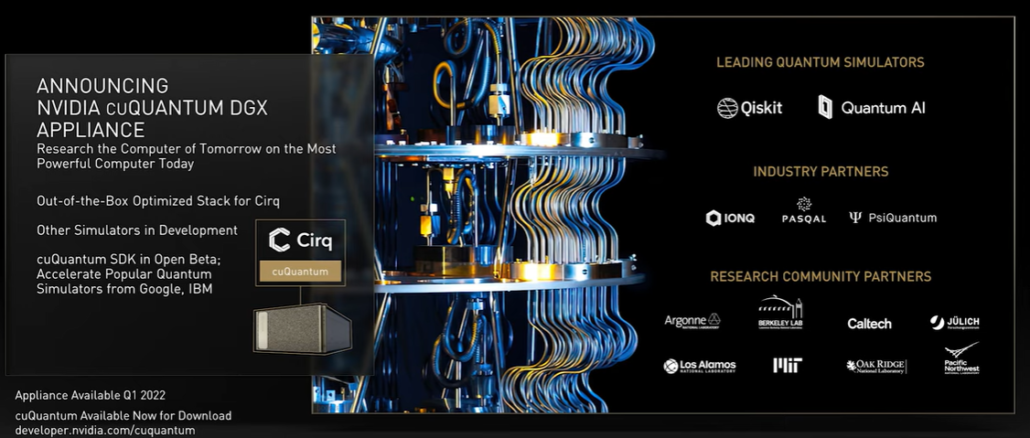

Now, as the industry steps deeper into a future with quantum computers, Nvidia sees the same need. A year ago, the company unveiled cuQuantum, a software development kit (SDK) for accelerating quantum workflows using the chip maker’s GPU Tensor Cores, complete with libraries and tools optimized for jobs such as quantum circuit simulation. cuQuantum illustrates what Costa calls a “fundamental tenet” of the vendor’s quantum strategy.
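State-vector simulation, one of the circuit-simulation approaches cuQuantum targets, boils down to updating a vector of 2^n complex amplitudes gate by gate. The pure-Python sketch below shows that update for a two-qubit Bell circuit. It illustrates the technique only; it is not cuQuantum’s API, and the function names `apply_1q` and `apply_cnot` are hypothetical.

```python
import math

def apply_1q(state, gate, target, n_qubits):
    """Apply a 2x2 gate to qubit `target` (qubit 0 is the most significant bit)."""
    stride = 1 << (n_qubits - 1 - target)
    new = list(state)
    for i in range(len(state)):
        if (i & stride) == 0:       # i has the target bit clear...
            j = i | stride          # ...and j is the same index with it set
            a, b = state[i], state[j]
            new[i] = gate[0][0] * a + gate[0][1] * b
            new[j] = gate[1][0] * a + gate[1][1] * b
    return new

def apply_cnot(state, control, target, n_qubits):
    """Flip `target` on every basis state whose `control` bit is set."""
    c = 1 << (n_qubits - 1 - control)
    t = 1 << (n_qubits - 1 - target)
    new = list(state)
    for i in range(len(state)):
        if i & c:
            new[i] = state[i ^ t]   # swap amplitudes of target = 0 and target = 1
    return new

n = 2
s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]               # Hadamard gate

state = [1 + 0j] + [0j] * (2**n - 1)  # start in |00>
state = apply_1q(state, H, 0, n)
state = apply_cnot(state, 0, 1, n)
probs = [abs(a) ** 2 for a in state]
print(probs)  # Bell state: |00> and |11> each with probability ~0.5
```

Each (i, j) pair in the loop is updated independently, so a GPU simulator can spread the work across many threads; and because the vector doubles in length with every added qubit, memory and bandwidth quickly become the limiting factors.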

“We aim to be a platform to advance the state of the art in quantum information science and partner with the entire ecosystem,” he says. “There are a lot of companies that are big names in computing that have adopted cuQuantum. The various kinds of adoption are different. We have quantum circuit simulation frameworks out there. We have supercomputing centers who are deploying cuQuantum for their users. We have institutes that are actually end users exploring the algorithms space, like AI for drug discovery.”

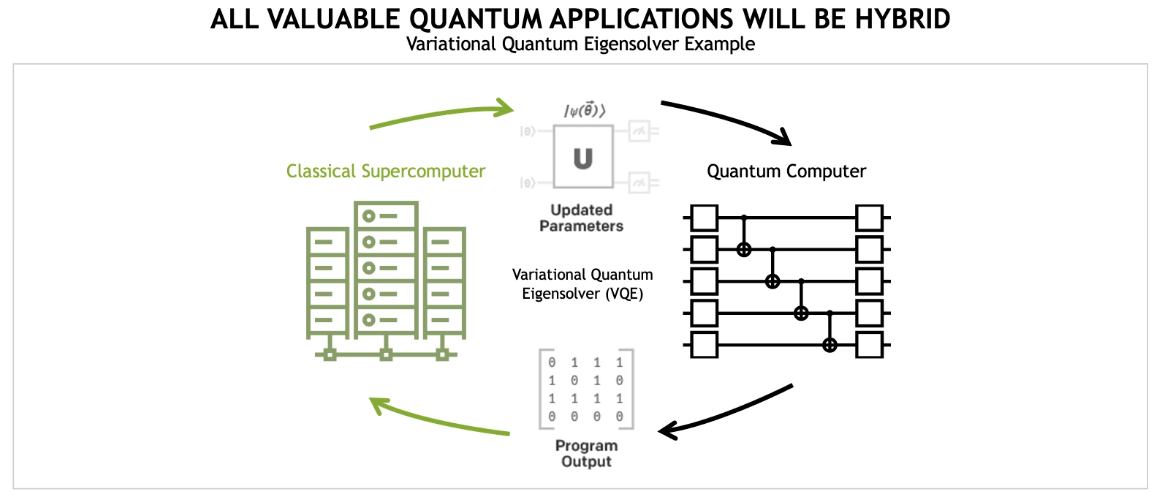

According to Costa, the fast-growing quantum space now faces a situation similar to the one in HPC that gave rise to CUDA. Applications accelerated by quantum processing units, or QPUs, will be hybrid workloads running on hybrid classical-quantum systems: classical supercomputing will handle large parts of an application while critical parts are accelerated by a quantum resource. One example is the variational quantum eigensolver, a hybrid algorithm.
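The variational quantum eigensolver shows the hybrid pattern in miniature: a quantum processor prepares a parameterized state and estimates its energy, and a classical optimizer updates the parameters and asks again. The sketch below assumes a toy one-qubit Hamiltonian H = Z + X and a single-rotation ansatz Ry(θ)|0⟩, and it simulates the quantum step exactly in plain Python. The function names are hypothetical and nothing here is Nvidia code.

```python
import math

def expectation(theta):
    """'Quantum' step: <psi(theta)| (Z + X) |psi(theta)> for psi = Ry(theta)|0>.

    Computed exactly here; on real hardware it would be estimated from shots.
    """
    a = math.cos(theta / 2)  # amplitude of |0>
    b = math.sin(theta / 2)  # amplitude of |1>
    z = a * a - b * b        # <Z>
    x = 2 * a * b            # <X>
    return z + x

def vqe(theta=0.1, lr=0.2, steps=200):
    """Classical step: gradient descent using the parameter-shift rule."""
    for _ in range(steps):
        # Parameter shift: two more 'quantum' evaluations give the exact gradient.
        grad = (expectation(theta + math.pi / 2) - expectation(theta - math.pi / 2)) / 2
        theta -= lr * grad
    return theta, expectation(theta)

theta, energy = vqe()
print(f"theta={theta:.4f}, energy={energy:.6f}, exact={-math.sqrt(2):.6f}")
```

Every iteration crosses the classical-quantum boundary three times, which is why the speed of that round trip, and not just the quantum hardware, shapes how well such workloads run.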

“But at the application scale, it gets really interesting,” he says. “If we consider applications rather than algorithms, the list of domains that are believed to be candidates for quantum advantage is large and it’s unknown. Regardless of which domain we look at, we take a lesson from the last 20 years of effort to GPU-accelerate scientific computing workloads. What we expect to see is the very same applications being used today incrementally accelerated by quantum computing as algorithms are developed and discovered and as hardware matures. This observation that today’s GPU-accelerated scientific computing applications are the candidates for future quantum-accelerated applications is more profound than it may seem at first glance and it raises some questions about what we need to build to push things forwards.”

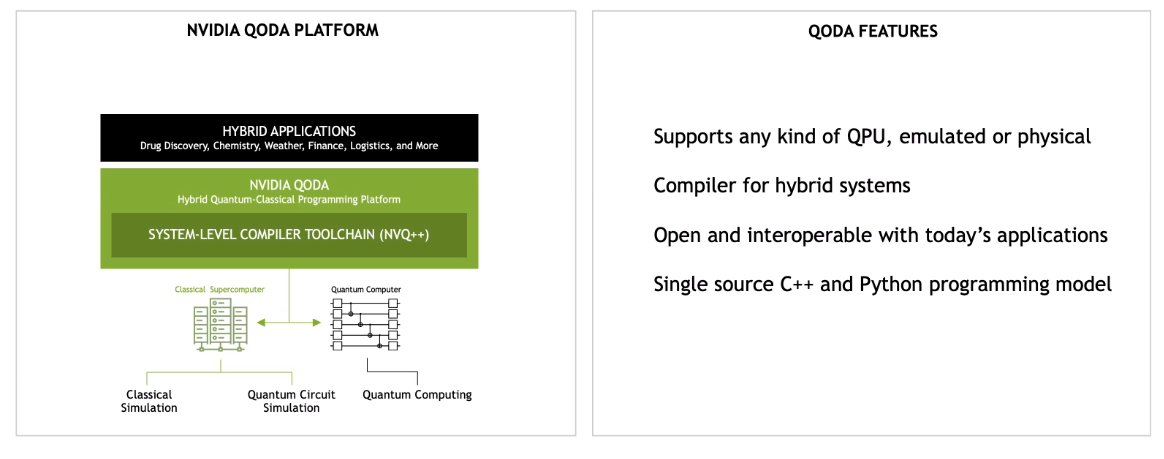

There is a gap between researchers experimenting with algorithms on various frameworks and the expectation that today’s GPU-accelerated scientific computing applications will become quantum-accelerated workloads, and that gap has to be closed as the work shifts from algorithms created by quantum physicists to applications developed by domain scientists. What’s needed is a “platform that’s built for hybrid quantum-classical computing, delivering high performance, interoperating with today’s applications and programming paradigms and one that is familiar and approachable to domain scientists,” Costa says, pointing to Nvidia’s experience with CUDA.

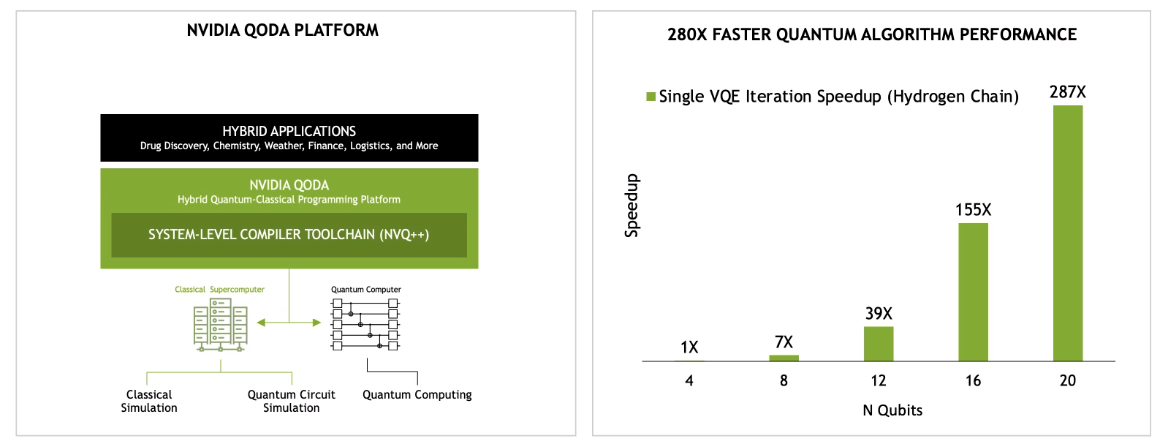

At the Q2B conference in Tokyo, Nvidia unveiled what it sees as that platform. The Quantum Optimized Device Architecture, or QODA, is designed for hybrid classical-quantum computing and for applications that will incorporate quantum acceleration, whether through emulation or a real quantum processor. It is, in effect, a CUDA for the quantum crowd. QODA offers a kernel-based programming model with both single-source C++ and Python implementations, along with a compiler toolchain for hybrid systems and a standard library of quantum primitives, he says. It will integrate with current high-performance applications and interoperate with modern parallel-programming techniques and software, allowing “domain scientists to quickly and easily move between running all or parts of their applications on the best classical computing resources, the best simulated quantum computing resources and the best real quantum computing resources,” Costa says.
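QODA’s actual C++ and Python syntax is not described here, so the following is only a hypothetical sketch of the general idea behind a kernel-based model paired with a compiler toolchain: the quantum kernel is written once as ordinary host-language code, recorded into an intermediate representation, and then lowered for whichever target will run it. The `Kernel` class and `to_openqasm2` function are invented for illustration, and OpenQASM 2.0 is used only as a familiar textual target; it is not a claim about what QODA emits.

```python
class Kernel:
    """Hypothetical quantum kernel builder that records gates as a tiny IR."""

    def __init__(self, n_qubits):
        self.n_qubits = n_qubits
        self.ops = []  # list of (gate_name, tuple_of_qubit_indices)

    def h(self, q):
        self.ops.append(("h", (q,)))
        return self

    def cx(self, control, target):
        self.ops.append(("cx", (control, target)))
        return self

    def measure_all(self):
        for q in range(self.n_qubits):
            self.ops.append(("measure", (q,)))
        return self


def to_openqasm2(kernel):
    """Lower the recorded ops to OpenQASM 2.0 text for a hardware-style target."""
    lines = [
        "OPENQASM 2.0;",
        'include "qelib1.inc";',
        f"qreg q[{kernel.n_qubits}];",
        f"creg c[{kernel.n_qubits}];",
    ]
    for name, qubits in kernel.ops:
        if name == "measure":
            (q,) = qubits
            lines.append(f"measure q[{q}] -> c[{q}];")
        else:
            args = ",".join(f"q[{q}]" for q in qubits)
            lines.append(f"{name} {args};")
    return "\n".join(lines)


bell = Kernel(2).h(0).cx(0, 1).measure_all()
print(to_openqasm2(bell))
```

Because the recorded kernel is plain data, the same object could equally be handed to a simulator backend instead, which is the property that lets an application move between simulated and real quantum resources without rewriting the kernel.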

QODA code is designed to be familiar to domain scientists who work on modern scientific computing workloads, part of Nvidia’s push for interoperability with current scientific computing applications, programming models and libraries. QODA interoperates with standard language parallelism as well as OpenMP, OpenACC and CUDA, and with an array of accelerated libraries, enabling scientists to incrementally add quantum acceleration to existing applications where they can. As an example, he points to the Quantum Imaginary Time Evolution (QITE) algorithm, an intrinsically hybrid algorithm used for computing energies in fields such as materials science, chemistry and physics.
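QITE builds on imaginary-time evolution: repeatedly applying e^{-HΔτ} to a state and renormalizing drives it toward the ground state of H, whose energy is the quantity of interest. On a quantum computer that step is not unitary, so QITE approximates it with unitaries chosen from measured expectation values, which is where the classical-quantum back-and-forth comes from. The sketch below skips the quantum part entirely and performs the target evolution classically on an arbitrary 2×2 Hamiltonian, using a first-order approximation of the exponential. It shows what QITE is trying to emulate, not how QITE or QODA implement it; the Hamiltonian values and function names are illustrative assumptions.

```python
import math

def matvec(h, v):
    return [sum(h[i][j] * v[j] for j in range(len(v))) for i in range(len(h))]

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def energy(h, v):
    """<v|H|v> for a normalized real vector v."""
    return sum(a * b for a, b in zip(v, matvec(h, v)))

def imaginary_time_evolution(h, psi0, dtau=0.1, steps=500):
    """Repeatedly apply (1 - H*dtau), a first-order stand-in for exp(-H*dtau).

    With dtau small enough that every factor (1 - E*dtau) stays positive,
    higher-energy components shrink faster than the ground state, so the
    normalized state converges to the ground state (given nonzero overlap).
    """
    psi = normalize(psi0)
    for _ in range(steps):
        hpsi = matvec(h, psi)
        psi = normalize([p - dtau * hp for p, hp in zip(psi, hpsi)])
    return psi, energy(h, psi)

H = [[0.5, 0.3],
     [0.3, -0.2]]
psi, e0 = imaginary_time_evolution(H, [1.0, 1.0])
print(f"ground-state energy ~ {e0:.6f}")
```

For a 2×2 matrix the answer can be checked in closed form; the point of QITE is to reach the same fixed point for Hamiltonians far too large to store classically.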

In a test running the Variational Quantum Eigensolver (VQE), an algorithm for quantum chemistry, on an Nvidia A100 GPU, the QODA software stack was up to 287 times faster than a Python framework.

Costa notes that QODA is open and QPU-agnostic, with partners ranging from hardware companies such as Quantinuum, Pasqal, Quantum Brilliance, IQM and Xanadu to algorithm developers Zapata and QC Ware, as well as supercomputing centers Oak Ridge National Laboratory, NERSC and Jülich.

As with everything in quantum computing, much of this is forward-looking, he says. No hybrid quantum-classical or purely quantum applications are in production today; the current effort is in developing algorithms that can be used more widely as the hardware matures. That said, a range of jobs fit well in the near term, such as the simulation of quantum systems, which Costa says is “very well past the sniff test.” Interest is also high in drug discovery, chemistry and biochemistry, financial services and large banks, and the list of candidates will grow as quantum hardware improves.

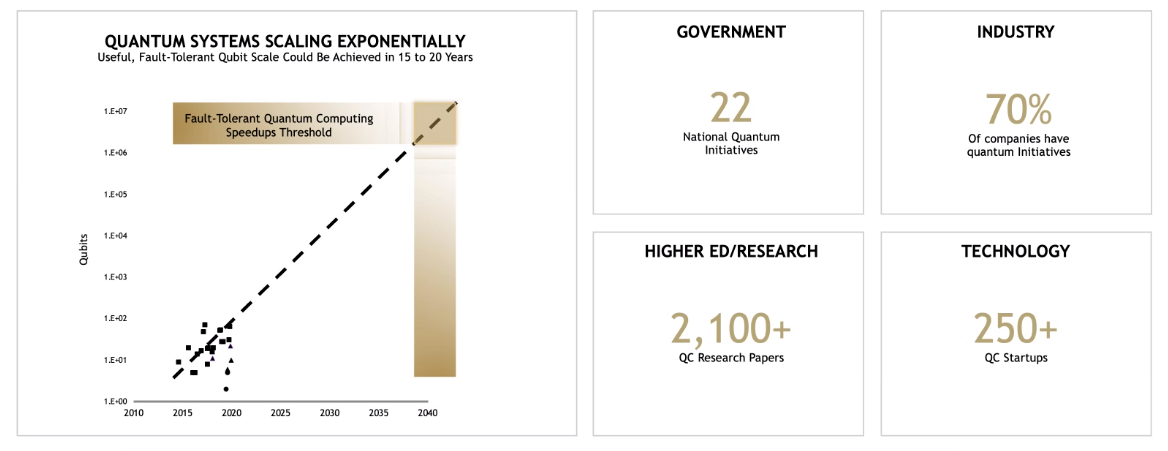

“The state of quantum computing is, we’re along a path from the discovery of the theoretical work to justify the attempt to build a quantum computer,” he says, adding that the industry has gone from “10 years ago, when they had one or two qubits that can actually be thought of as a quantum computer, to today, having 200 qubits available through the cloud, but they’re noisy. We’re looking forward to a future where we get millions of qubits that are error corrected down to thousands of logical qubits and provide fault-tolerant quantum computing that is very likely to have many, many productive applications. We’re along that journey, but along that journey, not only does the hardware need to mature, but the software and the algorithms also need to be developed and built. That’s what we’re about here.”
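Going from millions of physical qubits to thousands of logical qubits implies roughly a thousand physical qubits per logical qubit. Why error correction spends qubits so freely can be seen in the simplest possible model: a classical repetition code that stores one bit in d noisy copies and decodes by majority vote. This is a deliberately simplified assumption; real quantum codes such as the surface code must also handle phase errors and are far more involved. The shared idea is that when the physical error rate is below a threshold, adding physical qubits suppresses the logical error rate.

```python
from math import comb

def logical_error_rate(p, d):
    """Majority-vote failure probability for a distance-d repetition code.

    Assumes independent bit flips with probability p on each of d physical
    bits (d odd); decoding fails when more than half of the bits flip.
    """
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))

for d in (1, 3, 5, 7):
    print(f"d={d}: logical error rate {logical_error_rate(0.01, d):.3e}")
```

With a 1 percent physical error rate, three copies already cut the logical error rate to about 3 in 10,000, and each step up in distance buys further suppression at the cost of more physical qubits.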
