The people running Google Cloud can see the tides of HPC changing and know that, as we discussed only a few months ago, there is a fairly good chance that more HPC workloads will move to cloud builders over time as their sheer scale increasingly dictates future chip and system designs and the economics of processing.

Google also knows it needs to do more to steal more market share from its larger rivals — top dog Amazon Web Services and silver medalist Microsoft Azure — so the company has introduced a new open source toolkit that helps HPC shops construct clusters for simulation and modeling that are repeatable and yet flexible.

Called the Cloud HPC Toolkit (a name that likely saved Google Cloud’s marketing department some money), the system software has a modular design that lets users create everything from simple clusters to advanced ones that benefit from the cloud’s ability to easily slice and dice disaggregated resources based on ever-changing needs. This is what is called composability, and it is starting to get some traction in the HPC sector.

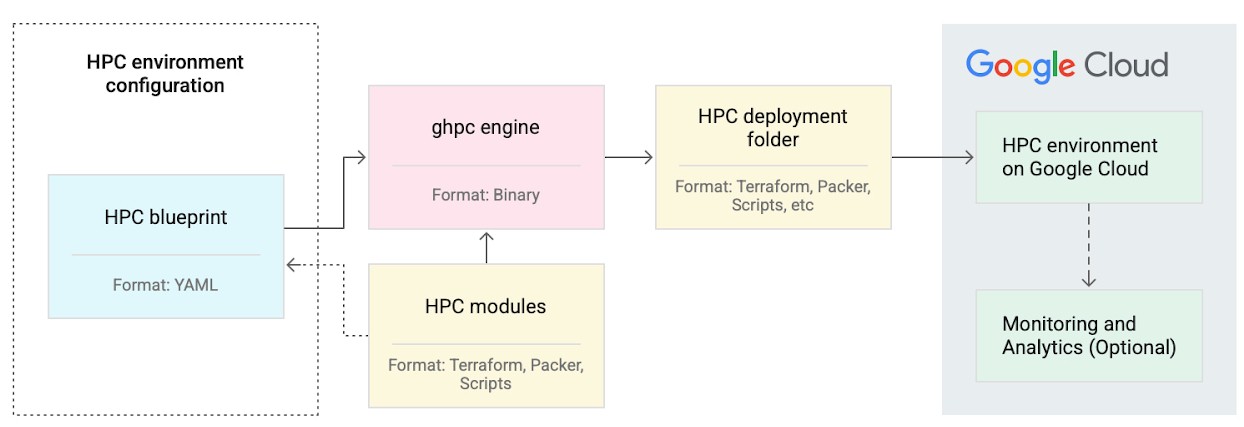

Here is what the components of the Cloud HPC Toolkit look like:

Google Cloud thinks that most users will want to get started with the toolkit’s several pre-defined blueprints for infrastructure and software configurations that are handy for HPC environments. But for those who have their own configuration preferences, these blueprints can be modified by changing a few lines of text in configuration files.
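To give a feel for what changing a few lines might mean in practice, here is a minimal Python sketch. It assumes a blueprint can be pictured as a nested mapping of settings; the field names (`compute`, `node_count`, and so on), the values, and the `customize` helper are all hypothetical illustrations and do not reproduce the toolkit’s actual blueprint format.

```python
import copy

# Hypothetical pre-defined blueprint. The field names and values are
# illustrative assumptions, not the Cloud HPC Toolkit's real schema.
BASE_BLUEPRINT = {
    "name": "example-hpc-cluster",
    "compute": {"machine_type": "example-machine", "node_count": 4},
    "storage": {"kind": "filestore"},
    "scheduler": "slurm",
}


def customize(blueprint, overrides):
    """Return a copy of `blueprint` with `overrides` merged in recursively."""
    result = copy.deepcopy(blueprint)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            # Merge nested sections so untouched settings keep their defaults.
            result[key] = customize(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# A user with their own preferences changes only the lines they care about.
custom = customize(
    BASE_BLUEPRINT,
    {"compute": {"node_count": 16}, "storage": {"kind": "lustre"}},
)
print(custom)
```

The point of the sketch is that the pre-defined configuration stays intact while a small set of overrides produces a repeatable variant, which is the repeatable-yet-flexible idea described above.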

These blueprints support a variety of building blocks needed to create an HPC environment, from the compute and the storage to the networking and schedulers. On the compute end, this includes all of Google Cloud’s virtual machines as well as its GPU-based instances and its HPC VM image, which is based on the CentOS variant of Red Hat Enterprise Linux. For storage, the toolkit supports Intel’s DAOS system and DDN’s Lustre-based EXAScaler system as well as Filestore, local SSDs, and persistent storage on the Google Cloud. Additionally, blueprints can be configured to run on a 100 Gb/sec network using Google Cloud’s placement policies to provide lower latency between VMs.

There is, however, only one choice of scheduler available in the toolkit for now: Slurm. Given that Google Cloud currently supports Altair’s PBS Pro and Grid Engine schedulers as well as IBM’s Spectrum LSF and Slurm, it seems reasonable that the Cloud HPC Toolkit will eventually add the others, too.

Both Intel and AMD have thrown their support behind the Cloud HPC Toolkit, but it’s the former — which is currently trying to catch up with the latter to make faster and better processors — that is especially eager to use Google Cloud’s latest HPC offering as a showcase for the semiconductor giant’s growing investments in software, particularly on the HPC side.

Among the blueprints in Google Cloud’s new toolkit is a pre-defined configuration of hardware and software for simulation and modeling workloads from Intel itself, which is promoted under the Intel Select Solutions brand. Whatever happened between Google Cloud and Intel behind the scenes, the cloud builder made sure to promote Intel’s simulation and modeling blueprint as the only detailed example in its blog post announcing the toolkit.

A key part of Intel’s simulation and modeling blueprint is the company’s oneAPI toolkit, the cross-platform parallel programming model that aims to simplify development across a broad range of compute engines, including ones from Intel’s rivals.

In a statement, Intel said access to oneAPI and its HPC-focused branch can help optimize performance for simulation and modeling workloads by improving compile times, speeding up results, and enabling users to take advantage of chips from Intel and competitors using SYCL, the royalty-free, cross-architecture programming abstraction layer that underpins oneAPI’s Data Parallel C++ language.

Intel and its rivals know the real gold in the semiconductor industry is in the cloud builders and hyperscalers, so we wouldn’t be surprised to see more HPC software announcements of this ilk in the cloud world, with Intel hawking oneAPI, AMD pushing its open ROCm platform, and Nvidia finding new ways to expand the software Hydra that is CUDA.
