When it comes to co-packaged optical interconnects, there are two camps: lasers in and lasers out. Intel, with the on-chip lasers and optical interconnects that it has been working on for decades, is the champion of the lasers in camp in the datacenter. Ayar Labs, which has deep roots in the US Defense Advanced Research Projects Agency (an agency that has spawned so much technology it is hard to keep track of it all) and which is run by a bunch of former Intel HPC executives, is emphatically in the lasers out camp.

To be fair, there are those who are lasers out now and hope to be lasers in at some point. (Switch ASIC chip maker Broadcom is a good example of this, and we are putting together a piece on its efforts with co-packaged optics, or CPO for short.) But the jury is still out on being fully lasers in because, without a lot of redundancy in the lasers and signaling paths coming off the chip, a failure of a laser – which can and will happen – would mean losing a compute engine. This sort of risk gives system architects and IT vendors the willies.

So there is an understandable level of skepticism, risk aversion, and hopeful enthusiasm when it comes to silicon photonics, as we talked about a few weeks ago with Andy Bechtolsheim, the chairman and chief development officer at Arista Networks. The statements that Bechtolsheim made to us related to the use of the CPO variant of silicon photonics in datacenter switching, not across all datacenter use cases. Charlie Wuischpard, who used to be general manager of the HPC Platform Group within Intel’s Data Center Group and then was tapped to be vice president of the Scalable Data Center Solutions Group at the chip maker, reached out to us in the wake of that conversation to have a chat about the distinction between CPO on switch ASICs and CPO with other kinds of system I/O.

Our conversation with Wuischpard happened as Ayar Labs was putting the finishing touches on its $130 million Series C funding round, bringing its total funding to date to $194.5 million, and in the wake of a partnership between Hewlett Packard Enterprise and Ayar Labs to work together to bring co-packaged optics to the Slingshot HPC-juiced Ethernet switches that are part of HPE’s Cray EX supercomputer line. Both HPE and Nvidia participated in this Series C funding, kicking in money along with Applied Ventures, GlobalFoundries, Intel Capital, and Lockheed Martin Ventures, who led the Series B round, and BlueSky Capital, Founders Fund, Playground Global, and TechU Venture Partners, who led the Series A round. A slew of other funds kicked in money for the Series C kitty, by the way. Suffice it to say, they all smell an opportunity to commercialize silicon photonics.

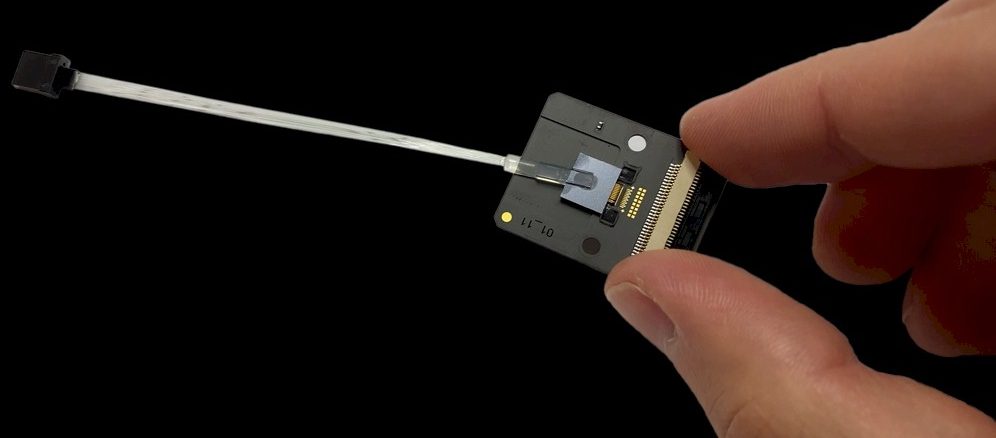

And that Series C funding round, says Wuischpard, was larger than expected, allowing for its CPO chips and TeraPHY optical transceiver chiplet to get qualified with various I/O standards and scaled to production this year. The company has already shipped its first products to partners and expects to ship “thousands of units” of its in-package optical interconnects to customers this year.

Timothy Prickett Morgan: To start with, I realize now, after that interview with Andy ran, that some people could conflate a pushing out of co-packaged optics for datacenter Ethernet switching ASICs with a pushing out of all use cases for co-packaged optics. So this conversation is about refining the observations in that story and adding some more that you have for other kinds of I/O.

Charlie Wuischpard: As far as pluggable transceivers being the way to go for datacenter Ethernet, I think Andy’s got a very lucid argument. But we are different, and I think this is a problem that has surfaced in the industry, where people are looking at the efforts of Facebook, Microsoft, and others, which are in the Ethernet market. We agree that for switch silicon, CPO is not ready, it’s not economical, and pluggable optics is the way to go for, say, the next five years or so.

TPM: Ayar Labs has emerged as a kind of counterbalance to what Intel is doing with its on-chip silicon photonics, which I find intellectually interesting.

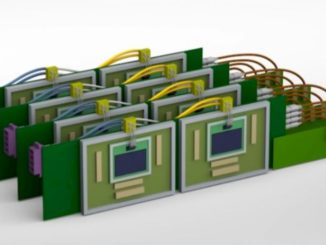

Charlie Wuischpard: The co-packaged optics that we are focused on is not Ethernet fabrics, but on other fabrics like CXL, NVLink, Infinity Fabric – the things used in the datacenter and particularly for HPC and AI workloads. CXL is a big deal for us because it is going to normalize linkages to a certain degree. Servers get bigger, and PCI-Express gets faster and now we have 32 Gb/sec with PCI-Express 5.0 and we will get 64 Gb/sec signaling with PCI-Express 6.0, and as servers get fatter and these other fabrics get larger in terms of capacity and scale, the actual Ethernet fabric might get a little leaner at the top.

TPM: Here is something that I have been wanting to ask you. We have PCI-Express 5.0 and within two years or so we will have PCI-Express 6.0. Assuming the PCI-Express roadmap stays regular, as we have plotted out, then the PCI-Express 7.0 spec should be done in 2023 with 128 Gb/sec signaling, and that could double every two years, reaching 1 Tb/sec signaling with the PCI-Express 10.0 spec in 2029. Who knows how we will get there, but engineers are clever. Anyway, is there anything about PCI-Express 7.0 or beyond that will require co-packaged optics, and specifically, the kind of lasers out CPO that you have created?
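For readers who want to check the arithmetic in that question, here is a minimal sketch in Python. It assumes nothing beyond what the question states: 128 Gb/sec signaling with PCI-Express 7.0 in 2023, and a doubling with each new generation every two years. The function name and its parameters are ours and purely illustrative, and the output is a projection under that assumption, not a published PCI-SIG schedule.

```python
def projected_roadmap(first_gen=7, first_year=2023, first_rate_gbps=128,
                      last_gen=10, cadence_years=2):
    """Project (generation, year, Gb/sec signaling) assuming the rate doubles
    with every generation and a new spec lands every `cadence_years` years.

    All defaults come from the figures quoted in the interview question;
    nothing here reflects an official schedule.
    """
    roadmap = []
    for step in range(last_gen - first_gen + 1):
        generation = first_gen + step
        year = first_year + step * cadence_years
        rate_gbps = first_rate_gbps * 2 ** step  # one doubling per generation
        roadmap.append((generation, year, rate_gbps))
    return roadmap


for generation, year, rate_gbps in projected_roadmap():
    print(f"PCI-Express {generation}.0  {year}  {rate_gbps} Gb/sec")
```

Under those assumptions the last entry works out to 1,024 Gb/sec in 2029, which is the roughly 1 Tb/sec figure cited for PCI-Express 10.0.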

Charlie Wuischpard: That’s an interesting question. We have not done much beyond PCI-Express 6.0 in detail, but we do have a roadmap through the rest of the decade that just keeps increasing the bandwidth, the bandwidth density, the power efficiency, and all of that sort of thing.

TPM: But is there a point where electrical signaling coming out of the PCI-Express bus no longer works because the bandwidth is too high and the wires get too short?

Charlie Wuischpard: There is a point where the curve starts to bend down, but it is probably another eight to ten years.

Everything right now is wide, parallel signaling and that means you can run a lot of lanes at a lot lower speed. But the serial links out of the switch create issues. One of our theses is that the Ethernet market tries to be backward compatible with so many prior generations. So wavelength grids, interface speeds, and all of this stuff are set by the IEEE. It’s our supposition that at some point you have to break with the past in order to go forward.

A lot of companies use serial feeds out of the switch chip like XSR SerDes, which is the current thing, but there have been people thinking about whether to go to a different interface there. And this whole UCI-Express thing, which you wrote about back in March, is interesting, especially for us because we have the AIB interface, which happens to be a precursor of UCI and is actually UCI compatible. The government was pushing that standard, and it’s a very power efficient interface, and in fact we provide more bandwidth density on the optical side than they can get out of the electrical side so we are somewhat limited by that interface. But the roadmap for UCI is very durable over time.

One of the interesting things for us is that if we don’t have UCI, then we have to spin up a different chip with a different electrical interface. At the moment, our roadmap has different electrical interfaces for different devices – Nvidia has NVLink, AMD has Infinity Fabric, Intel has UltraPath, and so on. But if they all collapse onto UCI, then theoretically we can build one chip to rule them all. That is the whole idea of UCI, that chiplets can be mixed and matched and plugged and played across the entire ecosystem.

TPM: Could the industry decide that, for instance, the PCI-Express bus goes optical before other parts of the datacenter, such as switch ASICs, do? Not just because electrical signaling runs out of gas, but for performance or latency reasons?

Charlie Wuischpard: That could be. But the point for us today is that co-packaged optics may not be suitable for the Ethernet gear, but what Ayar Labs is doing is ideal for other use cases.

What we are doing is a hard problem to solve. And if we can solve it – and we’ve got a bunch of brilliant people – then it does change things. That’s the task, and the reason we’ve been able to recruit in this competitive world is that it’s a hard problem. But if we can solve it, and I think we’re ahead of everybody else, then all sorts of fun things happen, and not just the monetary kind. The fun is the ability to actually change architecture.

Electrical interconnects have limitations that directly impact board layout and system power / cooling / provisioning designs. With each new PCIe signaling generation, the distance that an electrical signal can travel at a given power consumption declines. This is why system designers are moving to new low-loss high speed connectors (e.g., SFF-TA-1002), new low-loss board materials, new passive copper cables, increased use of retimers (finally, mostly standardized to enable interoperability and multiple suppliers), etc.

As for everything moving to UCI, IMO, at best, that is little more than a marketing pipe dream, and some might argue, another disingenuous attempt to slow customer adoption of a number of independent technologies already transparently delivered as the foundation of many solution infrastructures. As noted in multiple articles on this website, customers don’t want to spend resources on understanding and managing the underlying technologies used to deliver a solution stack. Hence, why should they care about UCI; heck, most are not even aware of which version of PCIe or DDR is being used; they only care about getting their work done at a reasonable price.

As for photonics, Andy has one of the best grasps on all of the issues in the industry. Photonics will continue to evolve and eventually replace off-package electrical signaling just as it has enclosure-to-enclosure, so it is really a question of “when” not “if”. That said, I cannot say how many times over the past 20 years I’ve heard silicon / VCSEL photonics architects say nirvana is just 2-3 years away.