The Pawsey Supercomputing Centre in Australia, which handles a large portion of Square Kilometre Array (SKA) workloads in addition to other scientific computing initiatives, is preparing to take on more capability than it has ever seen.

The forthcoming Setonix supercomputer, which we talked about last October before it had a name, will mark a 30X increase in compute power over Pawsey’s previous systems, which means preparations ranging from application readiness to rethinking storage requirements. On the latter, the center has carved out $7 million of an overall $70 million equipment refresh budget to add 130 petabytes of storage, split between disk and object stores.

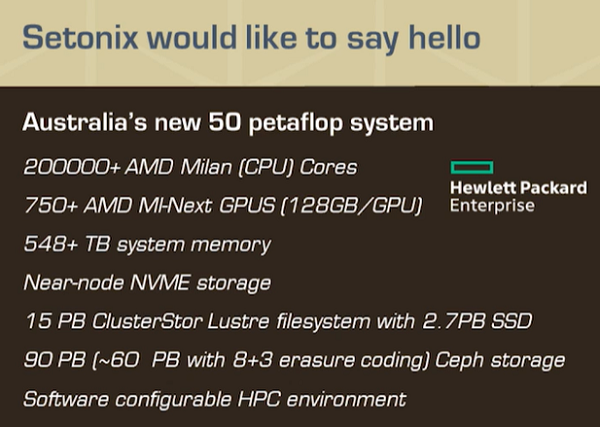

The multi-tiered storage investment will support Setonix, an HPE/Cray machine powered by AMD CPUs and GPUs with 50 petaflops of peak performance, focused on Square Kilometre Array workloads as well as climate and Covid research.

In addition to the Cray ClusterStor E1000 system, the Pawsey group selected Dell to provide 60 petabytes of disk, which has been tiered to support different data types. The center selected a fellow Australian company, Xenon, for the object storage piece, which will snap into Pawsey’s existing object stores. This includes two mirrored libraries, capable of handling 70 petabytes.

Pawsey selected Ceph as the storage backbone for the new system. Mark Gray, head of scientific platforms at Pawsey, says it was the only way to build a storage system so large on a budget. “An important part of this long-term storage upgrade was to demonstrate how it can be done in a financially scalable way. In a world of mega-science projects like the Square Kilometre Array, we need to develop more cost-effective ways of providing massive storage.”

Eventually, Pawsey’s storage environment for Setonix will include 60 petabytes of object storage built on Ceph and over 5,000 16TB disks, over 400 NVMe devices for handling metadata, and over 400GB/s of bandwidth across the system. Offline storage is also impressive, moving Pawsey away from Spectra TFinity tape libraries to the Versity file system (which we don’t see often) on the order of 5 petabytes and more tape, this time from IBM.
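As a rough sanity check on those figures, the sketch below multiplies out the disk count and compares it with the quoted object capacity. It treats "over 5000" as exactly 5,000 disks and uses decimal terabytes, both of which are assumptions, and the function names are purely illustrative. The article does not say which redundancy scheme Pawsey uses, so the resulting ratio should be read as a ballpark and not as a statement about the Ceph configuration.

```python
# Back-of-envelope capacity check using figures quoted in the article.
# "Over 5000" disks is treated as exactly 5000, so raw capacity is a lower bound.

TB_PER_PB = 1000  # decimal units, as drive vendors usually quote them (assumption)


def raw_capacity_pb(disk_count: int, disk_size_tb: float) -> float:
    """Return raw (pre-redundancy) capacity in petabytes."""
    return disk_count * disk_size_tb / TB_PER_PB


def usable_fraction(usable_pb: float, raw_pb: float) -> float:
    """Return the share of raw capacity that ends up usable."""
    return usable_pb / raw_pb


raw_pb = raw_capacity_pb(disk_count=5000, disk_size_tb=16)
fraction = usable_fraction(usable_pb=60, raw_pb=raw_pb)

print(f"raw capacity: {raw_pb:.0f} PB")   # raw capacity: 80 PB
print(f"usable share: {fraction:.0%}")    # usable share: 75%
```

Under these assumptions, roughly a quarter of the raw disk space would go to redundancy and headroom, which is in the range one would expect for erasure-coded object storage.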

The network piece is a huge improvement for Pawsey. The center will be moving from a monolithic single core router to a spine-leaf architecture with a 400 Gbps backbone and 100 Gbps links to host endpoints. “This will allow the Nimbus cluster, which was recently upgraded to 100 Gbps internally, to connect at that speed to the Pawsey network. The network has been designed to be easily expandable to support the Ceph based object storage platform being purchased as part of the Long-Term Storage procurement as well as integration with the PSS,” the team says. “This will allow all network endpoints (i.e. login nodes, visualisation servers, data mover nodes, etc.) to realise a ten-fold increase in bandwidth from moving from 10 Gbps to 100 Gbps ethernet.”
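To put the ten-fold endpoint upgrade in perspective, here is a minimal sketch of how long a bulk transfer would take at each link speed. The 1 petabyte dataset size is a hypothetical example, not a figure from Pawsey, and the calculation assumes the link runs at its full line rate with no protocol overhead or storage bottleneck, so real transfers would be slower.

```python
# Idealised transfer-time estimate: size in bytes, link speed in gigabits per second.
# Ignores protocol overhead, congestion, and disk or tape throughput limits.


def transfer_seconds(size_bytes: float, link_gbps: float) -> float:
    """Return the time in seconds to move size_bytes over a link_gbps link."""
    bits = size_bytes * 8
    return bits / (link_gbps * 1e9)


ONE_PB = 1e15  # hypothetical dataset size: 1 petabyte (decimal)

old = transfer_seconds(ONE_PB, link_gbps=10)
new = transfer_seconds(ONE_PB, link_gbps=100)

print(f"10 Gbps:  {old / 86400:.1f} days")   # 10 Gbps:  9.3 days
print(f"100 Gbps: {new / 3600:.1f} hours")   # 100 Gbps: 22.2 hours
```

At best, then, a transfer that would tie up a 10 Gbps endpoint for more than a week fits inside a single day at 100 Gbps.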

“When researchers want to make massive amounts of data available to users on the internet, any delay in accessing data is very hard to accommodate,” Gray adds. “Users and most web tools expect files to be immediately available and the time it takes for a tape system to retrieve data becomes a challenge.”

Pawsey had been expecting the first phase of Setonix in July, but supply chain and Covid-related delays are pushing delivery to the end of this month, with planned readiness by November. The second phase of the project will deliver the remaining compute and other components and should be ready to roll in time for Linpack runs for SC ’22, if Pawsey elects to run the benchmark.
