We took a look recently at the compute engines at the heart of the future – and as yet unnamed – Sunway exascale system that will be installed at the National Supercomputing Center in Wuxi, China. This exascale machine will be a follow-on to the current Sunway TaihuLight system, both of which were designed and built by the National Research Center of Parallel Computer Engineering and Technology (NRCPC).

As we explained in our original story, to reach 1 exaflops of peak 64-bit floating point performance, NRCPC seems to be using a much more advanced chip making process from Semiconductor Manufacturing International Corp to double up the compute elements on the Sunway processors, double the width of the vector engines on those compute elements to 512 bits, and then double the node count to more than 80,000 nodes. Those three doublings, an eightfold increase in all, get you from the 125 petaflops peak theoretical performance of the Sunway TaihuLight machine to 1 exaflops with the Sunway exascale machine.
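The scaling argument is simple multiplication, and a few lines of Python make it explicit. This is a back-of-the-envelope sketch that assumes each doubling scales peak FP64 performance linearly, that the current vector engines are 256 bits wide, and that the node count doubles exactly from TaihuLight's 40,960 nodes; none of those assumptions comes from NRCPC.

```python
# Back-of-the-envelope check of the three doublings described above.
# Assumptions: each doubling scales peak FP64 throughput linearly, and the
# node count doubles exactly from TaihuLight's 40,960 nodes.

TAIHULIGHT_PEAK_PF = 125      # petaflops, peak theoretical FP64
TAIHULIGHT_NODES = 40_960     # nodes in Sunway TaihuLight

scaling_factors = {
    "compute elements per chip": 2,
    "vector width (256 -> 512 bits)": 2,
    "node count": 2,
}


def projected_peak(base_pf: float, factors: dict) -> float:
    """Multiply the baseline peak by every scaling factor."""
    peak = base_pf
    for factor in factors.values():
        peak *= factor
    return peak


peak_pf = projected_peak(TAIHULIGHT_PEAK_PF, scaling_factors)
nodes = TAIHULIGHT_NODES * scaling_factors["node count"]

print(f"Projected peak: {peak_pf:,.0f} petaflops ({peak_pf / 1000:.1f} exaflops)")
print(f"Projected nodes: {nodes:,}")
# Projected peak: 1,000 petaflops (1.0 exaflops)
# Projected nodes: 81,920
```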

Some readers expressed dissatisfaction with this approach, hoping that the Chinese government would do more with the microarchitecture of the Sunway chip design, particularly given a process shrink from what we reckon was 28 nanometer manufacturing for the original Sunway SW26010 processor to either a 14 nanometer or a 10 nanometer (called N+1) process for the chip in the future exascale system, which we are calling the SW52020 (520 compute cores, second generation). We didn’t say that there could not be more tricks inside the next-generation Sunway chip architecture, but rather pointed out that they would not be required to reach exascale peak performance at 64-bit precision. No one said that performance could not be higher than 1 exaflops, and it very well could be. But we don’t think clock speeds can rise much above the 1.45 GHz of the current SW26010 chip, because each increase in clock speed generates disproportionately more heat per unit of performance gained. There could be other architectural improvements on the compute front. We shall see.
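To see why clock speed is an expensive lever, consider the textbook CMOS approximation for dynamic power, P ≈ C × V² × f, where raising the frequency usually also requires raising the supply voltage. The sketch below pairs each clock bump with a hypothetical voltage increase (these are illustrative numbers, not SW26010 measurements) and assumes performance scales linearly with clock.

```python
# Rough illustration of why higher clocks cost disproportionate heat,
# using the textbook CMOS dynamic-power approximation P ~ C * V^2 * f.
# The voltage increase paired with each clock increase is hypothetical.


def dynamic_power(capacitance: float, voltage: float, freq_ghz: float) -> float:
    """Relative dynamic power: switched capacitance x voltage squared x frequency."""
    return capacitance * voltage ** 2 * freq_ghz


BASE_CLOCK_GHZ = 1.45   # SW26010 clock speed cited in the text
BASE_VOLTAGE = 1.0      # normalized; only ratios matter here

base_power = dynamic_power(1.0, BASE_VOLTAGE, BASE_CLOCK_GHZ)

# Hypothetical operating points: (fractional clock increase, fractional voltage increase)
operating_points = [(0.10, 0.05), (0.20, 0.10), (0.30, 0.15)]

results = []
for clock_gain, volt_gain in operating_points:
    freq = BASE_CLOCK_GHZ * (1 + clock_gain)
    power = dynamic_power(1.0, BASE_VOLTAGE * (1 + volt_gain), freq)
    perf_ratio = freq / BASE_CLOCK_GHZ   # assumes performance tracks clock linearly
    power_ratio = power / base_power
    results.append((freq, perf_ratio, power_ratio))
    print(f"{freq:.2f} GHz: performance x{perf_ratio:.2f}, power x{power_ratio:.2f}, "
          f"power per unit of performance x{power_ratio / perf_ratio:.2f}")
```

Because power per unit of performance works out to the square of the voltage increase, every step up the clock ladder costs more heat for each extra flop, which is the trade-off the paragraph above points to.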

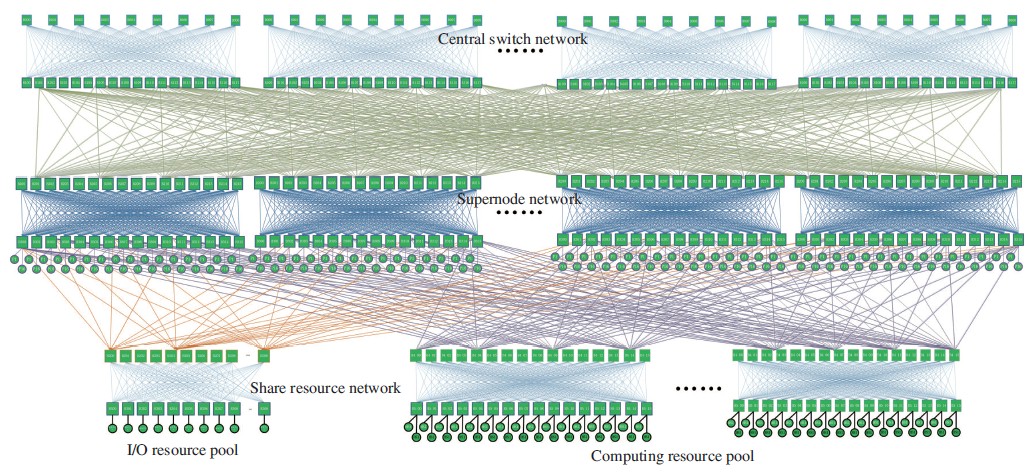

In this follow-up, we are looking at the networking and storage in the future Sunway exascale system, which was talked about some in the paper that NRCPC has put out about its architecture. The Sunway exascale system has what its designers call a “high-throughout, multi-track, pan-tree network,” which looks like this:

We think that NRCPC, like a number of other Chinese HPC centers, has licensed InfiniBand technology from Mellanox Technologies to create its own variant of a high speed, low latency interconnect. We further suspect that this pan-tree interconnect, which NRCPC calls a “self-developed network chipset,” is a superset of that original InfiniBand, one that has perhaps been forked without breaking backwards compatibility with the “proprietary” interconnect used in the TaihuLight machine.

With the Sunway exascale machine, NRCPC has created its own router chip, called the SWHRC, that implements a leaf/spine architecture like the one shown above, which is commonly used by hyperscalers and cloud builders in their large scale infrastructure. The difference, and here is where the “pan” comes in (it means “everything” or “all”), is that the next-generation Sunway interconnect is smarter and has some features that hyperscaler networks typically lack, such as adaptive routing and congestion control.
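A toy model helps show what adaptive routing buys on a leaf/spine fabric. In a two-level leaf/spine (fat tree) network, the spine a packet climbs to also fixes the downlink it descends on, so a static hash-based route keeps using a dead downlink, while a health-aware route can pick another spine. This is a hypothetical Python sketch; the class, method names, and routing policy are illustrative and are not the SWHRC’s actual routing logic.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LeafSpine:
    """Hypothetical two-level leaf/spine fabric: every leaf links to every spine."""
    num_spines: int
    failed_links: set = field(default_factory=set)  # {(node_a, node_b), ...}

    def link_ok(self, a: str, b: str) -> bool:
        # Links are bidirectional, so a failure recorded either way counts.
        return (a, b) not in self.failed_links and (b, a) not in self.failed_links

    def path_ok(self, path: list[str]) -> bool:
        return all(self.link_ok(a, b) for a, b in zip(path, path[1:]))

    def static_route(self, src: str, dst: str, flow_id: int) -> list[str]:
        # Hash-style routing: the flow id picks the spine (the uplink), and
        # that choice alone fixes the downlink. Failures are ignored.
        spine = f"spine{flow_id % self.num_spines}"
        return [src, spine, dst]

    def adaptive_route(self, src: str, dst: str, flow_id: int) -> Optional[list[str]]:
        # Start at the hashed spine but skip any spine whose uplink OR
        # downlink is down; return None if no healthy path remains.
        for offset in range(self.num_spines):
            spine = f"spine{(flow_id + offset) % self.num_spines}"
            if self.link_ok(src, spine) and self.link_ok(spine, dst):
                return [src, spine, dst]
        return None


# Example: the downlink from spine1 to leafB has failed.
fabric = LeafSpine(num_spines=4, failed_links={("spine1", "leafB")})
static = fabric.static_route("leafA", "leafB", flow_id=1)
adaptive = fabric.adaptive_route("leafA", "leafB", flow_id=1)
print(static, fabric.path_ok(static))      # ['leafA', 'spine1', 'leafB'] False
print(adaptive, fabric.path_ok(adaptive))  # ['leafA', 'spine2', 'leafB'] True
```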

“The network layer utilizes the redundant paths of the multi-track pan-tree network structure, combining adaptive routing policies, route reconfiguration policies and transport layer message retransmission techniques, to maintain uninterrupted message service for an application in the event of single port failure, single chip failure, or even a whole switch failure,” the researchers designing the Sunway exascale system write in the paper cited above. “The problem of downlink paths being determined by uplink paths in fat tree networks, which causes downlink path failures affecting node communication, is solved in the pan-tree structure.”

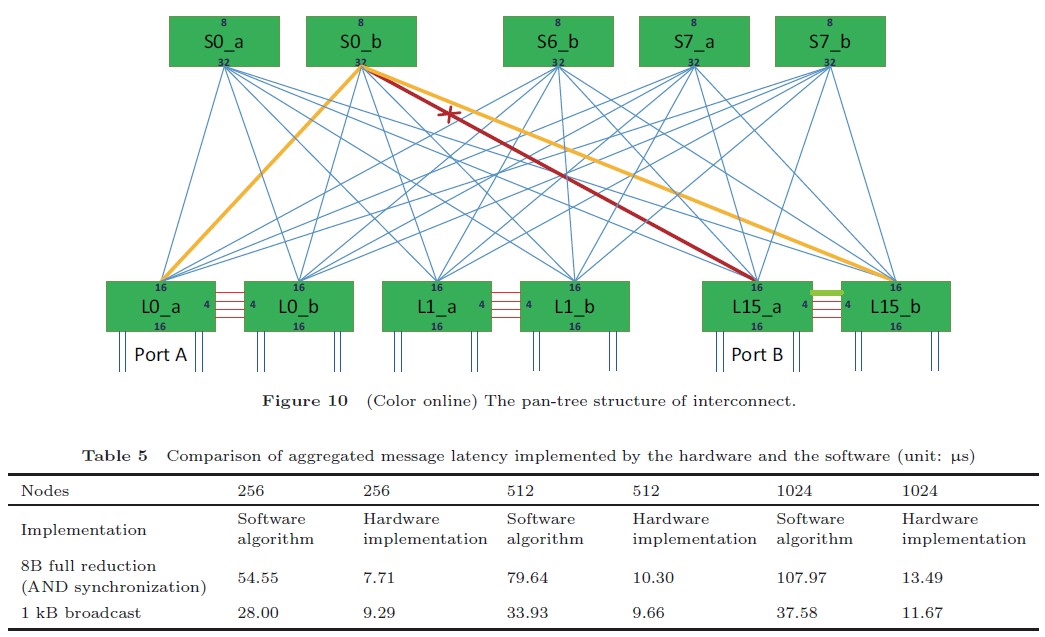

Like this:

This pan-tree network has a pair of ports on each node, and the network can select which ports link Node A to Node B based on whether a port is busy or idle. NRCPC says this pan-tree structure has better fault tolerance than a standard fat tree network. What is not obvious from the table above is that certain scatter-gather and other aggregation functions appear to have been offloaded from software running on the I/O processors in the SW processor nodes into the network hardware, and the table shows the effect of that move. The difference is a big deal, as you can see.
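The busy-or-idle port selection can be sketched in a few lines. This hypothetical model gives each node two ports, treats queued bytes as the measure of how busy a port is, and fails over to the surviving port when one goes down. The queue metric and tie-breaking rule are assumptions, since NRCPC’s description does not say how the network actually makes the choice.

```python
from dataclasses import dataclass


@dataclass
class Port:
    name: str
    queued_bytes: int = 0  # bytes waiting to go out: a stand-in for "busy"
    up: bool = True


class DualPortNode:
    """Hypothetical node with two network ports (one per track)."""

    def __init__(self, node_id: str):
        self.node_id = node_id
        self.ports = [Port(f"{node_id}.p0"), Port(f"{node_id}.p1")]

    def pick_port(self) -> Port:
        # Prefer the least-loaded port that is still up; ties go to p0.
        live = [p for p in self.ports if p.up]
        if not live:
            raise RuntimeError(f"{self.node_id}: both ports are down")
        return min(live, key=lambda p: p.queued_bytes)

    def send(self, dst: str, nbytes: int) -> str:
        # dst is carried for readability only; routing beyond the node's
        # own ports is not modeled here.
        port = self.pick_port()
        port.queued_bytes += nbytes
        return port.name


node = DualPortNode("A")
print(node.send("B", 4096))  # A.p0 (both idle, tie goes to p0)
print(node.send("B", 1024))  # A.p1 (p0 is busier)
node.ports[1].up = False     # simulate a port failure on track 1
print(node.send("B", 512))   # A.p0 (only live port)
```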

The pan-tree network is also more reliable, apparently. “The two-track pan-tree structure is used to increase the redundant ports,” the NRCPC researchers say. “Combined with the forward error verification codes, link degradation, link retransmission, credit recovery, path reconstruction and other reliability techniques, high reliability of the interconnect network is realized in large-scale systems at high link transmission rates.”
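Link retransmission, one of the reliability techniques NRCPC lists, is easy to illustrate in software even though real interconnects do it in hardware. In this hypothetical sketch, each frame carries a CRC32 checksum (computed with Python’s `zlib.crc32`), a simulated noisy link flips a random bit some fraction of the time, and the sender retries until a frame arrives intact. The frame format, error model, and retry limit are assumptions, and the sketch does not model forward error correction, which repairs errors rather than just detecting them.

```python
from __future__ import annotations

import random
import zlib


def make_frame(payload: bytes) -> bytes:
    """Append a 4-byte big-endian CRC32 of the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def check_frame(frame: bytes) -> bytes | None:
    """Return the payload if the CRC matches, else None (frame is dropped)."""
    payload, crc = frame[:-4], int.from_bytes(frame[-4:], "big")
    return payload if zlib.crc32(payload) == crc else None


def noisy_link(frame: bytes, rng: random.Random, error_rate: float) -> bytes:
    """With probability error_rate, flip one random bit in transit."""
    if rng.random() < error_rate:
        data = bytearray(frame)
        bit = rng.randrange(len(data) * 8)
        data[bit // 8] ^= 1 << (bit % 8)
        return bytes(data)
    return frame


def send_reliably(payload: bytes, rng: random.Random,
                  error_rate: float = 0.3, max_retries: int = 8) -> tuple[bytes, int]:
    """Resend the frame until it arrives intact; return (payload, attempts)."""
    frame = make_frame(payload)
    for attempt in range(1, max_retries + 1):
        received = check_frame(noisy_link(frame, rng, error_rate))
        if received is not None:
            return received, attempt
    # In a real fabric this is where path reconstruction would take over.
    raise RuntimeError("retries exhausted")


rng = random.Random(42)
data, attempts = send_reliably(b"halo exchange block", rng)
print(data, "delivered after", attempts, "attempt(s)")
```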

Each second-generation SW chip has two PCI-Express 4.0 x16 interfaces and four 56 Gb/sec network interfaces, supplying more than 400 Gb/sec of aggregate bandwidth into and out of the processor complex. That these network interfaces have not been bumped up to 100 Gb/sec or even 200 Gb/sec seems odd at first, but the four ports add up to an effective 224 Gb/sec in each direction (448 Gb/sec counting traffic into and out of the chip together), which is a little more than two 100 Gb/sec EDR InfiniBand ports, one 200 Gb/sec HDR InfiniBand port, or one 200 Gb/sec Slingshot Ethernet port.
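The port arithmetic is worth spelling out. This sketch assumes each 56 Gb/sec port is full duplex, meaning it carries 56 Gb/sec in and 56 Gb/sec out at the same time, which is how InfiniBand link speeds are normally quoted; that assumption is what reconciles the 224 Gb/sec and “more than 400 Gb/sec” figures.

```python
PORTS = 4
PORT_GBPS = 56   # per port, per direction (full-duplex assumption)

per_direction = PORTS * PORT_GBPS     # into the chip, or out of it
both_directions = 2 * per_direction   # in + out together

comparisons = {
    "two 100 Gb/sec EDR InfiniBand ports": 2 * 100,
    "one 200 Gb/sec HDR InfiniBand port": 200,
    "one 200 Gb/sec Slingshot port": 200,
}

print(f"{per_direction} Gb/sec each way, {both_directions} Gb/sec in both directions")
# 224 Gb/sec each way, 448 Gb/sec in both directions
for name, gbps in comparisons.items():
    print(f"vs {name}: {per_direction / gbps:.2f}x")   # 1.12x in each case
```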

The details on the storage for the Sunway exascale machine are a bit thin. There is a local storage system based on flash SSDs in the service nodes of the cluster (presumably one or more per rack and shared in some fashion across the nodes). The global storage looks to be made up of arrays of disk drives with a metadata server and a data server cluster driving that storage, which is what we usually call a parallel file system. It looks like those SSDs are grouped together into a kind of distributed burst buffer, and that the storage software stack knows how to migrate data automatically from SSD to disk. This system is also aware of hot spots and can replicate data as necessary to avoid them.
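The tiering behavior described above can be modeled in a few dozen lines. This is a hypothetical sketch, not NRCPC’s storage software: writes land on an SSD tier acting as a burst buffer, the least recently used blocks migrate to disk when the SSD fills, and blocks that are read often enough get extra replicas. The LRU eviction policy, the capacities, and the replication rule are all assumptions chosen for illustration.

```python
from collections import Counter, OrderedDict


class TieredStore:
    """Hypothetical two-tier store: an SSD burst buffer in front of disk."""

    def __init__(self, ssd_capacity: int, hot_threshold: int, max_replicas: int = 3):
        self.ssd = OrderedDict()              # key -> data, least recently used first
        self.disk = {}                        # key -> data
        self.ssd_capacity = ssd_capacity      # in blocks, not bytes
        self.hot_threshold = hot_threshold    # reads per extra replica
        self.max_replicas = max_replicas
        self.reads = Counter()
        self.replicas = {}                    # key -> replica count (default 1)

    def write(self, key: str, data: bytes) -> None:
        # New writes always land on the SSD tier, then cold blocks spill down.
        self.disk.pop(key, None)
        self.ssd[key] = data
        self.ssd.move_to_end(key)
        self._migrate_cold()

    def read(self, key: str) -> bytes:
        if key in self.ssd:
            self.ssd.move_to_end(key)   # refresh LRU position
            data = self.ssd[key]
        else:
            data = self.disk[key]       # KeyError if the block never existed
        self.reads[key] += 1
        # Hot-spot handling: one extra replica per hot_threshold reads, capped.
        self.replicas[key] = min(1 + self.reads[key] // self.hot_threshold,
                                 self.max_replicas)
        return data

    def replica_count(self, key: str) -> int:
        return self.replicas.get(key, 1)

    def _migrate_cold(self) -> None:
        # Evict least recently used blocks from SSD to disk until within capacity.
        while len(self.ssd) > self.ssd_capacity:
            key, data = self.ssd.popitem(last=False)
            self.disk[key] = data


store = TieredStore(ssd_capacity=2, hot_threshold=3)
for key in ("a", "b", "c"):
    store.write(key, key.encode())
print(sorted(store.ssd), sorted(store.disk))  # ['b', 'c'] ['a']
for _ in range(6):
    store.read("b")
print(store.replica_count("b"))               # 3
```

A fuller model would also promote hot blocks from disk back to flash; that step is left out to keep the sketch short.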
