When it comes to traditional HPC, it has taken a bit longer for cloud and AI to catch on. This is no surprise given the strict performance requirements and the often decades-old codes that span entire supercomputers. However, there are some surprising places to look for cues on how the balance among HPC, cloud, and AI is being struck.

The stickiest challenge in geospatial seems to be pulling together various aspects of HPC and AI, and that falls fairly low in the stack, at the orchestration level. This is because some parts can be easily parallelized and massively distributed, but the layer that adds value depends on rich, complex data that needs to be integrated. Meshing the two worlds of HPC and AI is its own challenge, but there are some bright spots, namely infrastructure flexibility in the form of containers and clouds.

Location-based data is finding its way into almost every field, and providing it at scale, quickly, and with the most depth possible is the differentiator for the handful of companies that have made this their mission. For those like Enview CEO San Gunawardana, who has an HPC background and a driving business need to integrate AI without the hassle and inflexibility of on-prem infrastructure, the experience has been eye-opening.

“When we think about how to make what we do computationally tractable, the only way to do it is by parallelizing as much as possible, which is the goal of most HPC. The problem is, we have some unique requirements and characteristics that mean our frameworks have to be different,” Gunawardana tells us. At the highest level, part of what makes their problems so difficult is that one part of their workflow consists of embarrassingly parallel jobs, but the results need to be meshed with multi-dimensional elements (weather, time, changing conditions, etc.). That meshing is not so simple, especially when machine learning is looped into the overall flow.

This is a dramatic oversimplification of Enview’s geospatial workflows, but the key point is that they need infrastructure and software frameworks that can seamlessly handle traditional HPC, machine learning, near real-time workloads, and very large models that encompass vast distances in addition to higher-resolution per-kilometer analyses. For our purposes here, we are focusing on what they built and run, and why, because it has more in common with large-scale multi-dimensional HPC than it might first appear and can showcase how HPC centers can, with some work and the right motivation, be more flexible with their infrastructure.

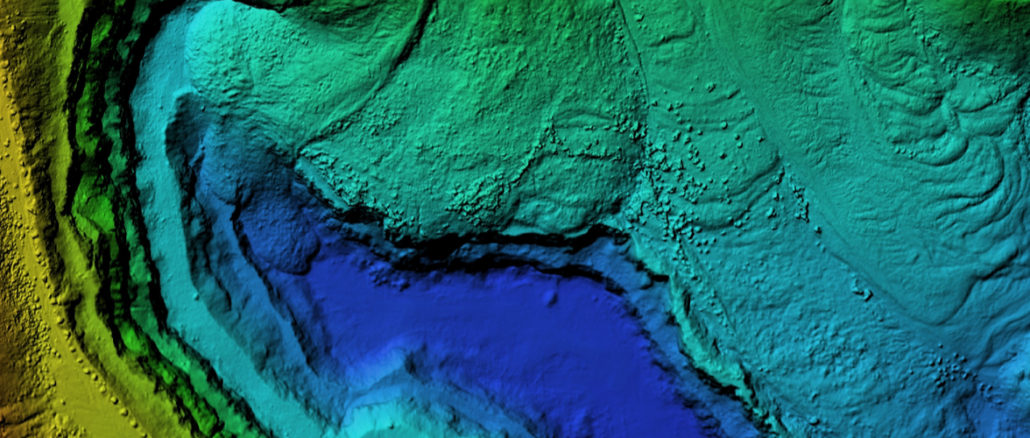

In transforming 3D data, fusing it with multiple datasets, and creating a “living” 3D model of the physical world at centimeter to national scale, Enview is working with some seriously large datasets. A single kilometer can host anywhere from 50 million to 500 million data points. Some of Enview’s customers are dealing with territories that span thousands to tens of thousands of kilometers. Further complicating things, some need output in near real-time, especially in emergency aid situations.
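To put those figures in perspective, here is a back-of-the-envelope calculation. It simply multiplies the per-kilometer point counts quoted above by the low and high ends of the territory sizes; the function name and the choice of 1,000 and 10,000 kilometers as the endpoints are illustrative assumptions, not Enview's numbers.

```python
def total_points(kilometers: int, points_per_km: int) -> int:
    """Rough point count for a territory, assuming uniform density."""
    return kilometers * points_per_km


# Low end: 1,000 km at 50 million points per kilometer.
low = total_points(1_000, 50_000_000)

# High end: 10,000 km at 500 million points per kilometer.
high = total_points(10_000, 500_000_000)

print(f"low estimate:  {low:.1e} points")
print(f"high estimate: {high:.1e} points")
```

Under these assumptions a single territory lands somewhere between tens of billions and trillions of points, before any weather or time-series data is fused in.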

When it comes to the AI piece, it might seem that this would be largely image based given the prevalence of satellite imagery, but this is just a small slice, Gunawardana says. LIDAR and other data collection points are also incredibly important, as are more project-specific data elements.

“Training and inference have to be done at massive scale and can’t be decoupled from the other operations that are more akin to HPC. Our first step is to use ML approaches to ingest and create a digital twin of the physical world. We have to extract insights from that 3D model, including real-time elements like weather. The deployment of that model is at high frequency and large scale.” He says that they don’t have to worry too much about constant retraining of their models; inference is where the high performance is needed.

What is notable is that despite the high performance requirements, they are doing everything on cloud infrastructure, popping in and out of the various IaaS clouds to get the CPU and GPU resources needed.

Gunawardana says Enview uses thousands of cloud-based nodes for most applications. “One of the nice things about some of our workloads is that unlike traditional HPC there are parts of this you can parallelize very efficiently. Here we can have tens of thousands of pieces, each with its own cluster to classify and segment with CPU, GPU, typically around 100-200 cores and 100 GPU cores. Each of those pieces represents a node point and there can be many thousands. This is the static part. Then, once you reassemble into the 3D model weather and other data points remove that parallel opportunity and that’s when we get to the next level of complexity.”
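The "static," embarrassingly parallel stage described here can be sketched in a few lines. This is a toy illustration, not Enview's pipeline: the tiling scheme, the `classify_tile` stand-in (a simple height threshold in place of a real ML model), and all names are hypothetical. It only shows the pattern of cutting a point cloud into independent pieces, processing each piece in isolation, and collecting the results for reassembly.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in meters
TileKey = Tuple[int, int]


def split_into_tiles(points: List[Point], tile_size: float) -> Dict[TileKey, List[Point]]:
    """Group points into square tiles keyed by integer grid coordinates."""
    tiles: Dict[TileKey, List[Point]] = {}
    for p in points:
        key = (int(p[0] // tile_size), int(p[1] // tile_size))
        tiles.setdefault(key, []).append(p)
    return tiles


def classify_tile(tile_points: List[Point]) -> List[str]:
    """Hypothetical stand-in for a model: label each point by height alone."""
    return ["ground" if z < 2.0 else "structure" for (_, _, z) in tile_points]


def classify_all(points: List[Point], tile_size: float = 100.0,
                 workers: int = 4) -> Dict[TileKey, List[str]]:
    """Classify every tile independently; no tile needs data from another."""
    tiles = split_into_tiles(points, tile_size)
    keys = sorted(tiles)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        labels = list(pool.map(classify_tile, (tiles[k] for k in keys)))
    return dict(zip(keys, labels))


sample = [(10.0, 10.0, 0.5), (150.0, 20.0, 5.0), (20.0, 30.0, 3.0)]
result = classify_all(sample)
print(result)
```

Because each tile is independent, the same pattern scales out to separate nodes or clusters. The harder part, as the quote notes, comes after reassembly, when weather and other cross-cutting data couple the tiles back together.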

There has been quite a learning curve in selecting the ideal architecture for balancing the capabilities and challenges of a workload that’s part HPC, AI, and cloud-based, especially when there are both real-time and long-haul, massive-scale demands for Enview’s geospatial insights. “We’ve learned a lot about the various combinations of compute clusters but orchestration—automatically ingesting these petabyte-scale datasets, cutting them up, then figuring out the optimal compute environment and where and how to distribute—all of that is challenging. It has to be automated,” he explains.
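As a rough illustration of what "cutting them up, then figuring out the optimal compute environment" might involve, the sketch below splits a dataset into fixed-size chunks and picks the cheapest eligible resource pool for each one. Everything here is an assumption made for the example: the `Pool` and `Chunk` types, the cost figures, and the cheapest-eligible rule are hypothetical and far simpler than a production orchestrator.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Pool:
    name: str            # hypothetical cloud resource pool
    has_gpu: bool
    cost_per_hour: float


@dataclass(frozen=True)
class Chunk:
    index: int
    size_bytes: int
    needs_gpu: bool


def cut_dataset(total_bytes: int, chunk_bytes: int, needs_gpu: bool) -> List[Chunk]:
    """Split a dataset into chunks of at most chunk_bytes each."""
    count = math.ceil(total_bytes / chunk_bytes)
    return [
        Chunk(i, min(chunk_bytes, total_bytes - i * chunk_bytes), needs_gpu)
        for i in range(count)
    ]


def choose_pool(chunk: Chunk, pools: List[Pool]) -> Pool:
    """Pick the cheapest pool that satisfies the chunk's GPU requirement."""
    eligible = [p for p in pools if p.has_gpu or not chunk.needs_gpu]
    if not eligible:
        raise ValueError(f"no pool can run chunk {chunk.index}")
    return min(eligible, key=lambda p: p.cost_per_hour)


pools = [Pool("cpu-a", False, 1.0), Pool("gpu-b", True, 3.0)]
chunks = cut_dataset(total_bytes=10, chunk_bytes=4, needs_gpu=True)
plan = [(c.index, c.size_bytes, choose_pool(c, pools).name) for c in chunks]
print(plan)
```

A real orchestrator would also have to weigh data locality, queue depth, and deadlines for near real-time jobs, which is where the automation Gunawardana describes becomes difficult.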

Enview’s infrastructure teams have found that orchestration tools native to the various clouds are useful, but not tailored enough for what they need, so they had to build their own orchestrator based on workload management ideas that have been around for years and the newer tools available with containerization. “A lot of what we do now is packed into Docker but we’re moving toward Kubernetes to diversify the environments we can move into. Each cloud system has some of its own tools we leverage but overall, those tools are set up for more traditional big data management problems, which leads to inefficiencies to our calculations,” Gunawardana explains. “We are in a position where a lot of geospatial companies are, we all have to build enhancements atop existing workflow tools available in an HPC environment. For instance, HPC is heavily dependent on a divide and conquer approach to parallel processing. It’s tricky for us because we have five dimensions for any geospatial data point and the tough part for us is that we can’t decouple those.”

Gunawardana’s background, by the way, is in strict HPC working with fluid simulations on NASA supercomputers. He sees a serious lack of tools that translate well from HPC to domains that need more complicated, multi-layered data integration. For now, he says, most of the geospatial companies like his have been stuck cobbling together a broad range of tools and tweaking them to make them efficient for the kind of depth of models they need. On that note, as traditional HPC looks for more ways to integrate deep learning into some of its workflows, even if it’s just as a strict offload for a certain part of the problem, these workflow management challenges will keep rising.

In other words, is it time for something approaching domain-specific workflow and large-scale data management offerings from the handful of companies that do this for HPC sites now?

“I remember doing my PhD work on NASA supercomputers and it’s amazing to think you can do that now on a commercial cloud at a fraction of the cost. Anyone can do that now,” he says, adding that the hardware architectures don’t need to change. What needs to change now are the architectures for data.

“What’s interesting and sensible is that a lot of HPC methodologies have naturally been driven by problems that generate revenue but there are implications: They end up being good at handling structured data, are well-tuned to business insights, and that describes geospatial. We are on the cusp of being able to build and maintain a model, a prediction, of how the world will change and not just in the long term; predicting wildfires, flooding, and so on. But right now the computational tools and architectures aren’t really set up for us to do that. Each research group is having to craft their own capabilities or build on top of things designed to track web clicks or interface with ad systems.”

“Our problems are about compute, software, and especially data architectures. These changes will have to happen everywhere that deals with the physical world, there will be a convergence.”

It is not unreasonable to see an opportunity here for providers of orchestration and data management tools, because what’s happening in geospatial is not so far off from what will be needed in other areas of research and enterprise. Geospatial happens to walk the line between scientific computing and commercial value, but if geospatial companies, like many others across domains, are stuck cobbling together their own tooling, couldn’t there be something to help manage HPC and AI and farm out that work efficiently on prem or in and across clouds?
