As is the case with any new technology, there is a lot of hype and misunderstanding that comes along with something that actually improves some aspect of the system. Containers are no exception, and one has to separate the reality from the exaggeration and confusion to figure out how to correctly deploy this very useful technology, particularly in an HPC setting.

Michael Jennings, a scientist at Los Alamos National Laboratory, has taken on this task and knows the real deal about containers. In addition to his work at LANL, Jennings was one of the co-founders of the Caos Foundation (which managed the CentOS distribution of Linux) and has been the lead developer on three separate Linux distributions. Jennings is also the vice president of the HPCXXL group for heavyweight supercomputer and storage installations. Earlier this year, Jennings gave a presentation at the HPC-AI Advisory Council conference, laying out a number of ‘myths’ and incorrect conventional wisdom about high performance Linux containers and then refuted, rebutted, and, in some cases, reinforced them.

The first thing Jennings did was define what a container is, which seems obvious but there seems to be quite a bit of confusion about this with everyone having a different definition. According to Jennings, the most correct definition is that a container is a process and all of its children that are grouped together to give a customized view of the machine. It is the process at runtime that is invoked by the container engine, such as the one created by Docker, and is the entry point of the containerized application.

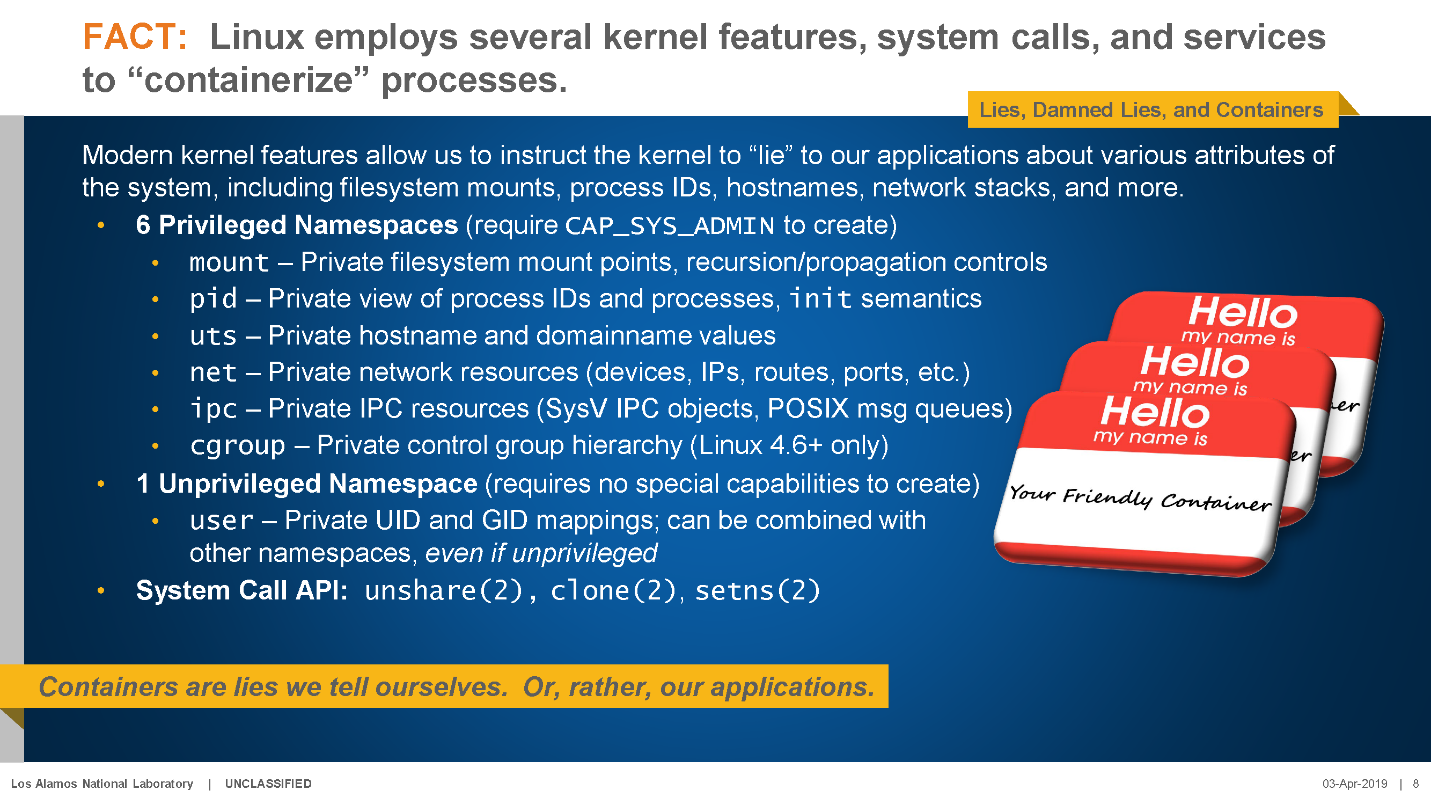

The modern Linux kernel already has several features that allow users to create containers – that is, to “lie” to applications about how and where they sit in the software stack and on the system. Here are a couple of slides from Jennings outlining these capabilities. Here is the first one:

And here is the next one:

Getting deeper into the mythbusting, Jennings next takes on the idea that containers are more than just lightweight and efficient virtual machines. With containers like Docker, it’s not about virtualizing machines as is done with a server virtualization hypervisor, it’s all about virtualizing applications. Docker containers are application environments, not machine-based virtual machines. The entry point of a Docker container tells Docker to run an application, not to boot up a virtual machine like you do with with VMware ESXi, Microsoft Hyper-V, Red Hat KVM, or Citrix Systems Xen.

If the goal is to virtualize an entire machine, Docker and its ilk are not the right tools, although they can be layered on top of virtual machines if that is desirable for security or workload isolation or other reasons. Jennings suggests that users check out LXD, a container manager which gives much of the VMware-like functionality (like live migration, for example) to containerized workloads.

“Containers Don’t Contain” is the next myth to be busted. It’s a myth because most people think the word “contain” implies a certain amount of, well, containing, in the sense that it will keep the code inside a virtual jail cell where it can’t get out and other code can’t get in. This simply isn’t the case. Containers are not inherently more secure than any other piece of code on a system. As Jennings says, “containers are more like buckets than prisons.”

There’s nothing in the Linux kernel that automatically acts as cell bars to keep a contained process from leaving its container. In fact, Linux explicitly allows containerized processes to cross in between namespaces via the “setns()” system call. Containers, and particularly privileged containers, need additional security measures in order to be “safe.”

If you set up an unprivileged container, then the Linux kernel applies mechanisms to ensure that the contained code can’t interact between namespaces. This has been the subject of a lot of research, development, and testing and has proven to be a secure mechanism to manage privilege boundaries between contained processes and the rest of the system.

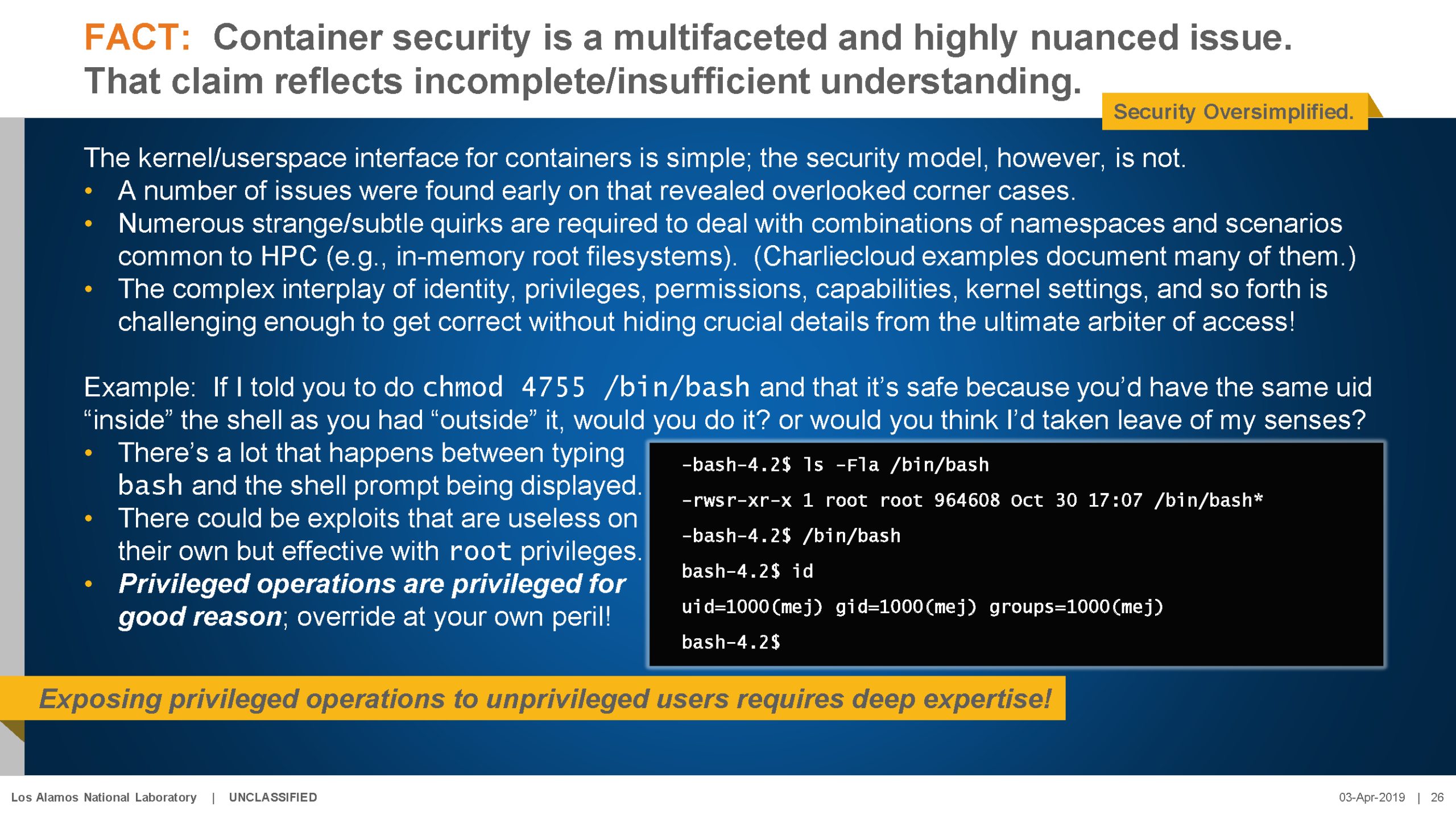

Container security is a very important issue and solving for it isn’t as easy as simply making sure your containers are unprivileged. As Jennings said in his presentation, “Privileged operations are privileged for good reason; override at your own peril!”

Once you give a container system privileges you open up a big can of highly complex worms from a security perspective. The interplay between namespaces and HPC application requirement scenarios is difficult to plan for and requires both art and science to navigate while keeping your container secure.

However Jennings argued convincingly against the myth “User namespaces are too new to be considered secure” by noting that user namespaces were introduced into Linux in 2015 and have remained essentially unchanged since 2015. Moreover, there haven’t been many CVE (Common Vulnerabilities & Exploits) associated with user namespaces since 2017. Finally, most experts working on end-user containers are using user namespaces and this kernel-based trust and security model seems to be working well.

Jennings also knocks down the myth that as long as the UID inside a container is the same as the UID outside a container, the container is secure.

As Jennings points out, there are a lot of machine operations that take place between commands like bash. And exposing privileged operations in containers is risky. In his opinion, unprivileged containers are the only way to go unless it’s absolutely vital to the applications. In this case, the best option is using the highly secured SELinux as the host to these containers.

Container Alternatives And Some History

Docker isn’t the only container out there, although it is orders of magnitude more popular than any other in terms of mindshare and real-world users. Charliecloud, Shifter and Singularity are Docker alternatives, albeit niche players currently. Most are built around or to leverage OCI standards.

Docker had a bit of myopia in the early days. They figured that their popularity meant that their vision was “the vision” for containers and discounted critics who brought up Docker security shortcomings. For example, Docker didn’t have a mechanism to prove container provenance, or container continuity, making it difficult to prove that the container was unaltered and created by an authorized source.

Docker’s inattention to security concerns motivated the creation of competing container technologies, which caused big container users to fear that container technology would become forked and fragmented between competing mechanisms. This was the reason behind the formation of the Open Container Initiative. The initial code base was donated by Docker, which ensured that the company remained relevant in the industry.

When it comes to containers, HPC customers have different needs than enterprises. Primarily, the Docker client/server architecture and root-only access model are nonstarters for HPC infrastructures. HPC customers, including Jennings, approached Docker with some ideas about making the tool more HPC-friendly, but were mostly rebuffed. Docker was much more interested in containerizing workloads like web applications and didn’t see the opportunity in the HPC market. This led to the creation of more HPC-centric Docker alternatives as on the slide below:

These days, Singularity is probably the most widely used container mechanism in HPC. It runs on every major Linux distro with the exception of RHEL 6 (it runs fine on other RHEL versions). The logo to the extreme right is something from RedHat called Podman, which was designed as a complete substitute for Docker, but with enhancements that will be attractive to HPC users; like the ability to run unprivileged containers. LANL is working to make Podman even more HPC-centric.

Containers Are Hard?

According to Jennings, basic containers are actually pretty easy. A container can be created with only three system calls, “unshare(2)” creates the namespace and moves the current process into it, “clone(2)” creates the new process/thread and setns(2) places the calling process/thread into the new namespace. Even better, recent versions of util-linux can reduce the number of commands to two, a bargain. This all sounds pretty simple to us, but Jennings cautions that the details are “gory” and “unless you can clearly articulate the technical rationale, don’t write your own (container)!”

Are Containers the Answer To Reproducibility in Science?

Many container advocates assert that containers are the answer to reproducing scientific or computational results. Makes sense on the face of it. Containers are the same every time you fire them up, right? They run the same instructions in the same order every time, so the results must be the same. Not so fast, according to Jennings.

There are many obstacles around reproducibility that are not solved, or even addressed by containers, Jennings says. There is no reason why build instructions and artifacts will remain the same over time – even over the course of days, not to mention years.

Reproducibility means a bit-by-bit, byte-by-byte reproduction of the original work with exactly the same results, nothing less. Today this is impossible, given software and hardware differences.

Containers make it clear how someone built the environment where the science is taking place. They give a roadmap to the components of that environment and how it was constructed. But if you’re building a house, your materials won’t be exactly the same and your nails will be placed in slightly different locations – meaning the house isn’t, and can’t be, a duplicate of another house, even if the detailed plans are the same. Containers are definitely the wave of the future for HPC sites as they allow researchers to run their unique applications while using the same hardware as everyone else. In the HPC world, the goal of this type of virtualization isn’t to get higher system utilization, as is the goal for the typical enterprise user. MPI and job schedulers can map workloads to compute cores pretty well across a cluster without resorting to any kind of server virtualization or containers. The real goal of containers in HPC is to provide researchers with their own custom-built environment to enable them to perform exactly what they need on any system in the on premises datacenter or in the public cloud. And probably both.

Be the first to comment