True to its name, Google’s famous Borg cluster controller has absorbed a lot of different ideas about how to manage server clusters and the applications that run atop them at the search engine and now cloud computing giant. And while the Kubernetes container controller that Google open sourced in June 2014 was certainly inspired by Borg, Kubernetes was really more of a kernel than a complete system, and the proof is that it took a long time for Kubernetes to become truly usable in the enterprise.

Oddly enough, Airship, a mashup of Kubernetes, the OpenStack cloud controller with bare metal extensions, and a slew of other open source projects spearheaded by AT&T – yes, the same Ma Bell whose Bell Labs created the Unix operating system back in 1969 and the C programming language a few years later, starting the open source and Unix revolutions – has surprisingly and, at least to some, quietly become a complete software stack that arguably rivals Borg and its extensions inside of Google.

This is a considerably different outcome than anyone might have predicted only four years ago, when we were all trying to figure out if OpenStack, Mesos, or Kubernetes was going to emerge as the ultimate cluster controller and application scheduler.

We wanted to make sure that what we were seeing unfolding with Airship made sense, so we reached out to Jonathan Bryce, executive director of the OpenStack Foundation, to make sure we were interpreting what AT&T was doing with systems software – and specifically with the Airship extensions to OpenStack and Kubernetes – correctly. And to be very precise, we asked if Airship was analogous to Borg and Omega at Google and Autopilot at Microsoft Azure, which control the clusters and overlaying layers of workload isolation, including virtual machines and containers, at these two hyperscalers.

The answer was a qualified probably, but Airship is more than Borg/Omega, which we covered here in detail more than four years ago, or Autopilot, which we discussed a few months later. We suspect Airship might better be called an “uberating system,” a conglomeration of runtimes, virtualization layers, and system management tools that can span an entire distributed computing system. Bryce, being a software developer – and one with open source keenly in mind – thinks of Airship as a framework more than an operating system.

“From what I know, Airship is similar in concept to Autopilot and Borg/Omega,” says Bryce. “The way that I think about it, Airship is really a lifecycle management framework for operating open source software. It’s focused on Kubernetes and OpenStack now, but down the road there, if there emerges a leading serverless framework or new AI tooling, these could plug in.”

The real issue that Airship is addressing, says Bryce, is not only creating an operating system that can span bare metal, virtual machines, and containers (or any mix of them) by wrapping around OpenStack and its bare metal extensions, such as Ironic or the MAAS layers added by Canonical, as well as the Kubernetes podding system for containers. It is also taking control of how all of this open source software, which innovates at different rates, is itself managed, including all of the release dependencies and the ability to roll back when some piece of the open source software stack doesn’t work out right.

“For me, Airship is not just about installing software and getting it up and running, but it’s how do you benefit from this continuous innovation that open source projects deliver. The concept of lifecycle is so critical and it’s really a core of Airship.”
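To make the lifecycle idea concrete, here is a minimal Python sketch of an upgrade with automatic rollback. It is not Airship code: `Component`, `upgrade_stack`, and the `healthy` callback are hypothetical names, and it assumes components are upgraded one at a time and that a single health check can judge the whole stack after each step.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Component:
    name: str
    version: str


def upgrade_stack(
    deployed: Dict[str, Component],
    targets: List[Component],
    healthy: Callable[[Dict[str, Component]], bool],
) -> Dict[str, Component]:
    """Apply target versions one at a time. If the health check fails after
    any step, undo every change made in this run, newest first, and return
    the stack as it was before the run started."""
    state = dict(deployed)  # work on a copy so the caller's view is untouched
    history: List[Tuple[str, Optional[Component]]] = []
    for target in targets:
        history.append((target.name, state.get(target.name)))
        state[target.name] = target
        if not healthy(state):
            for name, previous in reversed(history):
                if previous is None:
                    del state[name]  # component did not exist before this run
                else:
                    state[name] = previous
            return state
    return state
```

Walking the history backwards returns the stack to a combination of versions that was already known to work, rather than leaving an untested mix of old and new pieces in place.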

Flying Above The Clouds – Or Rather Below Them

Almost eight years ago, AT&T made a commitment to using and extending open source software to build out its global network, and to use whitebox hardware to run it, as a means of lowering the cost of its network services. This has been a huge transition for the company, but it is putting control back in its own hands rather than those of its equipment suppliers.

Airship is not to be confused with other open source efforts that AT&T has undertaken in recent years to provide orchestration and virtualization in its network. Airship has nothing to do with the Open Network Automation Platform, an orchestration and virtualization layer for network function virtualization that AT&T developed internally as ECOMP – short for Enhanced Control, Orchestration, Management and Policy – and merged with the competing Open Orchestrator (Open-O) project back in 2017. Airship also has nothing to do with the DANOS open source network operating system that AT&T created, enhanced, and preserved starting in 2017 in the wake of Brocade Communications buying routing NOS provider Vyatta in 2012 and then losing interest. ONAP and DANOS have both been moved under the Linux Foundation umbrella, like many open source projects. Airship is thus far a free-standing community that is administered under the OpenStack Foundation umbrella.

There are a lot of moving parts to Airship, but before getting into that, it is probably helpful to understand why AT&T created its own mashup of OpenStack and Kubernetes. Most large enterprises have a few to many datacenters, and the largest hyperscalers and cloud builders have maybe dozens of regions with a few datacenters in each region. AT&T has tens of thousands of datacenters, and deploying applications across these datacenters, which have a wide mix of sizes and types of equipment, is a very big hassle. What was needed, says Bryce, was a systems-level, pluggable approach to software, allowing for all kinds of open source projects to be snapped into Airship and maintained as a whole even though it is really a collection of pieces all being innovated at different rates. The other interesting thing about Airship is that it has a declarative approach to describing, deploying, and configuring software atop hardware. Airship is for deploying a datacenter, not a server or a switch or a storage server.
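The difference between declarative and imperative deployment is easiest to see in code. In this hypothetical Python sketch (the `plan` function and the host names are ours, not Airship’s), the operator only states what role each machine should play, and the tooling derives the create, update, and delete steps by comparing that declaration with what is actually running.

```python
from typing import Dict, List, Tuple


def plan(desired: Dict[str, str], actual: Dict[str, str]) -> List[Tuple[str, str]]:
    """Compare a declared site design (host -> role) with what is running
    and return the (action, host) steps needed to make them match."""
    steps: List[Tuple[str, str]] = []
    for host in sorted(desired):
        if host not in actual:
            steps.append(("create", host))
        elif actual[host] != desired[host]:
            steps.append(("update", host))
    for host in sorted(actual):
        if host not in desired:
            steps.append(("delete", host))
    return steps


desired = {"rack1-node1": "compute", "rack1-node2": "storage"}
running = {"rack1-node1": "compute", "rack1-node2": "compute", "rack1-node3": "compute"}
print(plan(desired, running))  # [('update', 'rack1-node2'), ('delete', 'rack1-node3')]
```

Because the steps are computed from the difference, applying the same declaration to a site that already matches it produces no actions, which is what makes it safe to reapply a site design again and again.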

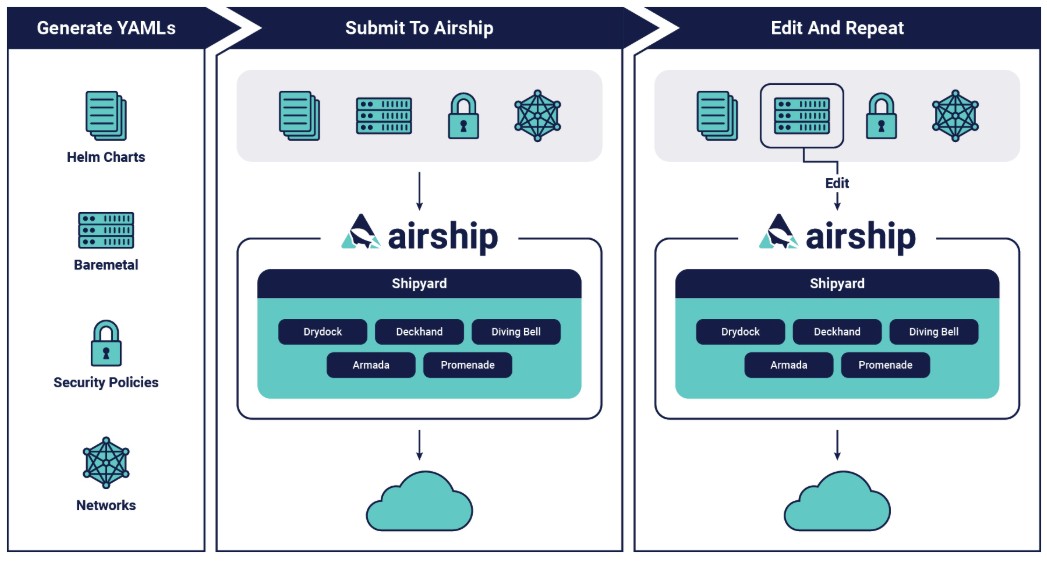

Google’s Borg is also declarative when it comes to setting up clusters, and uses the Borg Control Language, or BCL, which is itself a variant of the General Configuration Language that the search engine giant created to configure and deploy hardware and the applications that run on it. That Airship declarative language is YAML, a variant of XML that is used in conjunction with OpenStack Helm, a document-based package manager for OpenStack that has been extended to Kubernetes. Here is what the Airship workflow looks like:

That makes it look simple, we know. It takes a lot of software, all working in concert, to make anything this complex look that simple.
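Airship’s YAML documents, as we understand the Deckhand and Armada documentation, share a common envelope: a `schema` naming the document type, a `metadata` block carrying at least a `name`, and a `data` block holding the type-specific payload. The Python sketch below checks that envelope on a document already parsed into a dictionary, which is the form a YAML loader produces. The `envelope_errors` helper is hypothetical, and the Keystone chart values are illustrative rather than taken from a real site definition.

```python
from typing import Any, Dict, List


def envelope_errors(doc: Dict[str, Any]) -> List[str]:
    """Return a list of problems with a parsed document's envelope;
    an empty list means the envelope looks well formed."""
    errors = [
        f"missing top-level key '{key}'"
        for key in ("schema", "metadata", "data")
        if key not in doc
    ]
    metadata = doc.get("metadata")
    if not isinstance(metadata, dict) or "name" not in metadata:
        errors.append("metadata.name is required")
    schema = doc.get("schema")
    if isinstance(schema, str) and len(schema.split("/")) != 3:
        errors.append(f"schema {schema!r} is not of the form namespace/Kind/version")
    return errors


# An Armada chart declaration as a YAML loader would return it (values illustrative).
keystone_chart = {
    "schema": "armada/Chart/v1",
    "metadata": {"schema": "metadata/Document/v1", "name": "keystone"},
    "data": {"chart_name": "keystone", "release": "keystone", "namespace": "openstack"},
}
```

Checking the envelope before anything else means a malformed document is rejected up front instead of failing halfway through a deployment.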

Airship starts out with a minimalist Kubernetes environment that can in turn bootstrap OpenStack and other services. In fact, with Airship, OpenStack itself is containerized, running inside of Docker containers under the control of Kubernetes, with Helm serving as the package manager. OpenStack can thus be thought of as just another microservices application, one whose components can be tweaked or swapped out independently of the others in the collection of microservices that is called OpenStack.

Once OpenStack is up and running on Airship, you can, of course, deploy Kubernetes either on bare metal using Ironic or on virtual machines using Nova. In most cases, service providers like AT&T want exactly this kind of isolation, not just to keep the applications and data of different users separate, but also to keep users and administrators, both among AT&T’s IT staff and among the customers that use its network, away from the underlying Kubernetes and OpenStack layers that implement the environment.

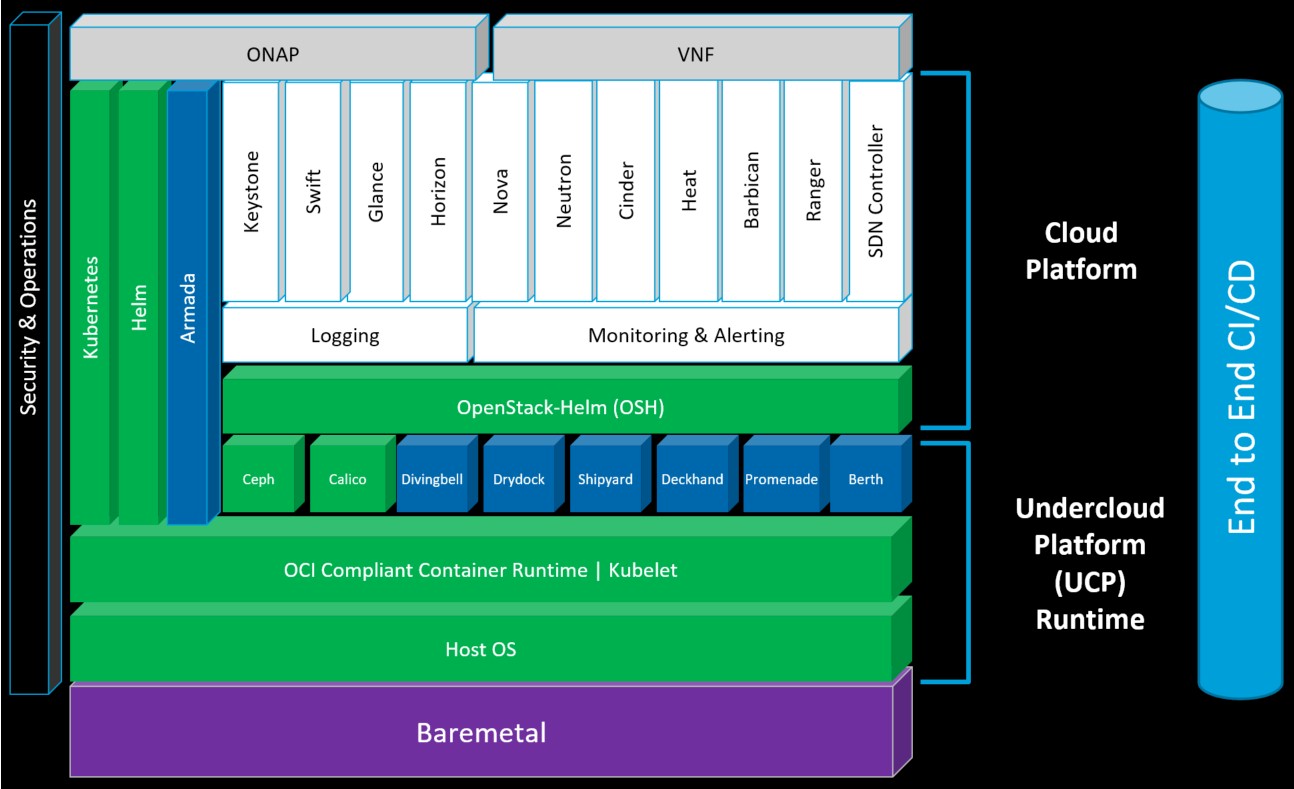

Here is how OpenStack, Kubernetes, and the unique code created by AT&T and then others who joined the Airship project last year, such as SK Telecom and Intel, that glues Airship all together:

The chunks that AT&T and the Airship team have been working on are in blue, and with the exception of one piece, they all sit in a layer of the complete system stack that AT&T calls the Under Cloud Platform, or UCP, runtime. This all starts with bare metal servers, on top of which runs a host operating system (in this case Linux), and on top of that a container runtime that is compliant with the Open Container Initiative specification, which means, in essence, Docker.

Airship then has containerized services that manage the configuration of the hybrid OpenStack/Kubernetes setup and that run underneath OpenStack and beside Kubernetes and Helm. OpenStack is the controller for virtual machine and bare metal provisioning, and Kubernetes is the controller for podded containers. Airship uses its own Ceph object store as the back-end for the Airship control plane, which is separate from the OpenStack or Kubernetes storage that will be used by applications. The stack also uses the Calico Layer 3 software-defined networking framework to provide routing functions for Airship that are separate from the networking stack used by OpenStack and Kubernetes applications. Sitting next to Kubernetes and Helm is a bit of orchestration software that AT&T created called Armada, which is where all that declaring with YAML gets done: Armada manifests describe which Helm packages, called charts, to deploy and how to configure them.
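One job that falls to a tool like Armada is deploying Helm charts in an order that respects their dependencies; an OpenStack service such as Keystone, for example, needs its database running first. The Python sketch below is not Armada’s implementation: `deployment_waves` and the chart dependencies are hypothetical, and it uses the standard library’s `graphlib` module (Python 3.9 and later) to group charts into waves, where every chart in a wave depends only on charts in earlier waves.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def deployment_waves(charts: Dict[str, Set[str]]) -> List[List[str]]:
    """Group charts into waves: every chart's dependencies sit in earlier
    waves, so charts within one wave could be deployed in parallel.
    Raises graphlib.CycleError if the dependencies form a cycle."""
    sorter = TopologicalSorter(charts)  # maps chart -> charts it depends on
    sorter.prepare()
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Illustrative dependencies, not taken from a real Airship manifest.
charts = {
    "mariadb": set(),
    "rabbitmq": set(),
    "keystone": {"mariadb"},
    "nova": {"keystone", "mariadb", "rabbitmq"},
}
print(deployment_waves(charts))  # [['mariadb', 'rabbitmq'], ['keystone'], ['nova']]
```

A dependency cycle makes `prepare()` raise `graphlib.CycleError`, so a contradictory manifest fails before any chart is touched.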

In the blue boxes underneath OpenStack Helm sit Promenade, Shipyard, Drydock, Deckhand, Divingbell, and Berth. Promenade, through a process AT&T calls “genesis” (another Star Trek reference, no doubt), takes a single host system, loads it up with the current stack of Kubernetes and OpenStack software, and uses all of the code and configuration on this initial host to build out the remainder of the Kubernetes/OpenStack cluster that is described in the Helm documents. Here is the important bit: The same process that creates the initial Airship host is the one that is used to update the hosts from that point forward.
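The property highlighted here, one routine for both the first build and every later update, can be sketched in a few lines of Python. This is a hypothetical model, not Promenade’s code: `converge` and the component versions are made up for illustration, and a host is reduced to a dictionary of installed component versions.

```python
from typing import Dict, List


def converge(installed: Dict[str, str], design: Dict[str, str]) -> List[str]:
    """Update `installed` (component -> version) in place so it matches
    `design`, and return a log of the changes that were made."""
    changes: List[str] = []
    for component, version in sorted(design.items()):
        current = installed.get(component)
        if current != version:
            changes.append(f"{component}: {current or 'absent'} -> {version}")
            installed[component] = version
    for component in sorted(set(installed) - set(design)):
        changes.append(f"{component}: {installed.pop(component)} -> removed")
    return changes


host: Dict[str, str] = {}
converge(host, {"kubernetes": "1.14", "ceph": "13.2"})  # genesis: build from nothing
converge(host, {"kubernetes": "1.15", "ceph": "13.2"})  # update: same routine
```

Calling `converge` on an empty host is the genesis case, calling it with a revised design is an update, and calling it again with an unchanged design does nothing. Keeping a single code path means the bootstrap logic gets exercised on every update rather than only once.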

Shipyard is the REST front-end to Airship, which allows it to be integrated with continuous integration/continuous delivery (CI/CD) platforms and to do audits and take various operational actions. Drydock provisions and configures additional nodes in the cluster once the genesis machine is created, including control plane hosts to run the Airship elements as well as compute and storage hosts for applications. This bare metal provisioning done by Drydock includes setting up BIOS and firmware, RAID drive configurations, operating systems, and network configurations on these host machines. Deckhand is a central repository for site designs and the changes made to them, as expressed in those YAML documents. Divingbell is a minimalist bare metal configuration manager that aligns with Kubernetes pods and is used to repair or otherwise tweak a setup that is running, much as a Navy specialist in a diving bell can work on a ship. Berth is a minimalist mechanism for running VMs inside containers that also aligns with Kubernetes pods and is used in very specific ways. Aligning to Kubernetes means if you kill the pods, you kill the bare metal or VM instances.
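Because Shipyard is a REST service, a CI/CD pipeline can drive a deployment with an ordinary HTTP call. The Python sketch below only builds the request with the standard library’s `urllib.request` and does not send it. The `/api/v1.0/actions` path, the `deploy_site` action name, and the Keystone-style `X-Auth-Token` header reflect our reading of the Shipyard API documentation and should be checked against the release in use; the host name and token are placeholders, and `shipyard_action_request` is a hypothetical helper.

```python
import json
import urllib.request


def shipyard_action_request(base_url: str, token: str, action: str) -> urllib.request.Request:
    """Build, but do not send, a POST asking Shipyard to start a named action."""
    body = json.dumps({"name": action}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v1.0/actions",
        data=body,
        headers={"Content-Type": "application/json", "X-Auth-Token": token},
        method="POST",
    )


# Placeholder endpoint and token; a real pipeline would obtain a Keystone token first.
req = shipyard_action_request("https://shipyard.example.com/", "PLACEHOLDER-TOKEN", "deploy_site")
```

Passing `req` to `urllib.request.urlopen` would submit it; a pipeline would then track the action until it finishes before moving on to its next stage.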

The interesting thing about Airship is that although software could be provisioned to run on bare metal, in a virtual machine, or inside of a container in this entire environment, it is assumed that all elements of Airship itself are only deployed using containers.

Airship v1.0 went live in May, and the project has just graduated to a top-level project at the OpenStack Foundation, a signal that the foundation considers it ready for prime-time deployments.

It will be interesting to see how this stands up in production against Google’s on-premises Anthos stack, which was formerly known as Cloud Services Platform and which is a containers-only environment for applications as well as for Kubernetes itself.

The other thing that needs to be resolved is how various job schedulers can plug into Airship. Presumably the schedulers that work with OpenStack, such as Qonos, or Kubernetes, such as Volcano, kube-scheduler, or Navops and GridEngine from Univa, can plug right in at a higher level here in the Airship stack. One of the key things about Borg was not just that it set up hardware and software for applications, but it figured out when to actually run a mix of batch and interactive workloads to maximize utilization across Google’s vast fleet of millions of servers. This is one of the trickier bits to manage, and even Google has had to make Borg pluggable and able to support different schedulers, including Omega and a slew of ones that exist but Google has not named outside of its own walls.
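The batch-versus-interactive trade-off can be illustrated with a deliberately simplified Python sketch. It is not Borg’s algorithm, which relies on priorities, quotas, and preemption; it is a greedy first-fit placement, with hypothetical `Task` and `place` names and made-up machine sizes, in which latency-sensitive tasks are placed first and batch tasks backfill whatever capacity remains.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Task:
    name: str
    cpus: int
    interactive: bool  # True for latency-sensitive serving, False for batch


def place(tasks: List[Task], machines: Dict[str, int]) -> Tuple[Dict[str, str], List[str]]:
    """Greedy first-fit: interactive tasks are placed before batch tasks, and
    batch tasks backfill whatever CPU capacity is left. Returns the
    task -> machine assignments and the names of tasks left pending."""
    free = dict(machines)  # remaining CPUs per machine
    placed: Dict[str, str] = {}
    pending: List[str] = []
    # `not t.interactive` is False for interactive tasks, so they sort first;
    # sorted() is stable, so submission order is kept within each class.
    for task in sorted(tasks, key=lambda t: not t.interactive):
        for machine in sorted(free):
            if free[machine] >= task.cpus:
                free[machine] -= task.cpus
                placed[task.name] = machine
                break
        else:  # no machine had room
            pending.append(task.name)
    return placed, pending


machines = {"m1": 8, "m2": 4}
tasks = [
    Task("batch-a", 6, interactive=False),
    Task("web", 6, interactive=True),
    Task("batch-b", 4, interactive=False),
    Task("batch-c", 4, interactive=False),
]
placed, pending = place(tasks, machines)
# placed == {"web": "m1", "batch-b": "m2"}, pending == ["batch-a", "batch-c"]
```

The interactive `web` task lands on `m1` even though it was submitted after `batch-a`; placing strictly in submission order would have let `batch-a` take `m1` and left the serving task with nowhere to run.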
