For the first decade that Amazon Web Services was in operation, its Elastic Compute Cloud (EC2) raw compute was available in precisely one flavor: Intel Xeon. But now, AWS is not only putting its own multi-core “Graviton” Arm processor out there for people to play around with, but is expanding its deployment of AMD’s first generation “Naples” Epyc 7000 processors.

While the Graviton chip is interesting, and lays the foundation for Amazon to be a credible vendor of processors – albeit one that builds its own machines and only uses them itself or rents them out to customers – the use of AMD’s X86 processors presents the Intel Xeons with real competition because applications written for the Xeon can run unchanged on the AMD Epycs.

To be fair, many applications will benefit from tuning specifically for either the Xeon or Epyc architectures, and under the covers they are different enough that you can’t just assume that similar Xeons and Epycs will deliver equivalent performance given the same core counts and clock speeds. This is why testing applications on the two architectures is so critical, and the advent of Epycs on AWS means that plenty of customers can rent time, do their own bakeoffs with their own applications, and figure out how to tune them or discover that tuning is not even necessary.

The first AMD instances appeared last November, and at its AWS Summit event in Santa Clara this week, the cloud juggernaut unveiled two more instance types that deploy a custom Epyc chip. The M5a Epyc instances are general purpose virtual machines that range from 2 to 96 virtual CPUs (threads) across a two-socket server, with 8 GB to 384 GB of main memory allocated to them. According to AWS, the M5a instances, like their Xeon M5 predecessors, are suitable for general purpose workloads such as web servers, application servers, development/test environments, gaming, logging, and media processing.

The R5a EC2 instances based on the Epycs are aimed at workloads with heavier memory requirements, and as such they have the same physical configuration, except that twice as much memory – from 16 GB to 768 GB – is configured for the instances. These are aimed at data mining, in-memory analytics, caching, and simulations, the same workloads that the R5 instances based on the “Skylake” Xeons are aimed at. All of the M5/M5a and R5/R5a instances.

Both types have dedicated bandwidth for Elastic Block Store (EBS) ranging from 2.1 Gb/sec to 10 Gb/sec and have external network bandwidth that ranges from 10 Gb/sec to 20 Gb/sec.

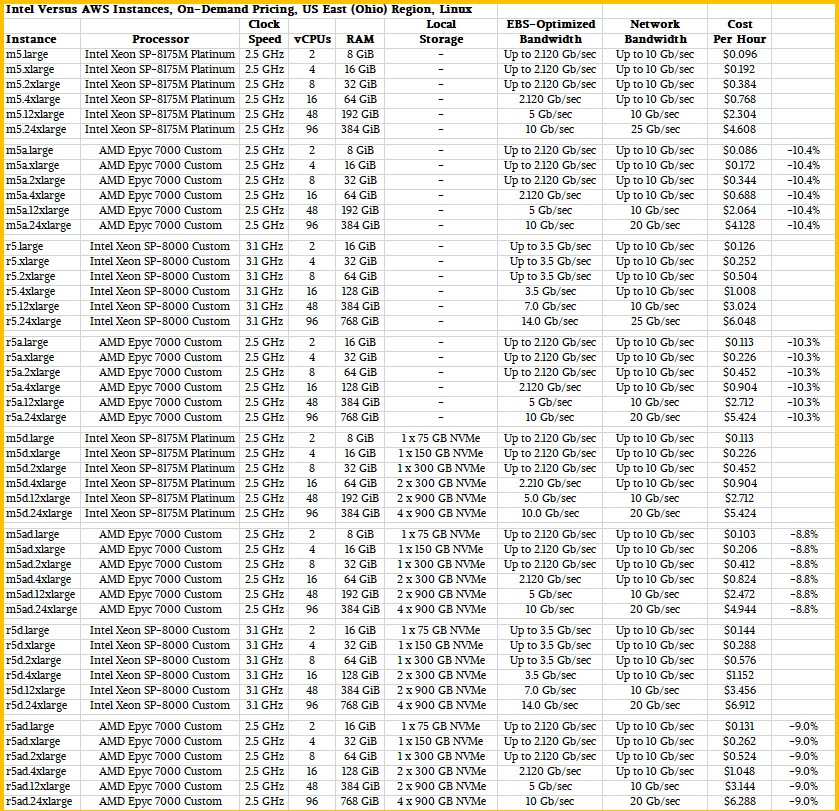

This week, AWS added local storage to these instances to create the M5ad and R5ad instances, which have local SSD storage, which has lower latency than the EBS storage that is also still available. (It looks like somebody has not deployed NVM-Express fabrics in their storage networks yet. . . . ) And to help make this all a little more obvious, we put together this handy table showing the Naples Epyc instances compared to their Skylake Xeon SP equivalents:

As you can see, the price difference between the Epyc and the comparable Xeon instances ranges from 8.8 percent to 10.4 percent, depending on the instance type. AWS has picked processors such that they have the same number of threads, and that means they are both 24-core processors. The M5d uses a modified Skylake Xeon SP-8175M running at 2.5 GHz, which AWS revealed, but for the other two Skylake instances in the table above, AWS only shows them running a custom chip in the Xeon SP-8000 series. (If you look at the commercial Skylake Xeon SPs announced back in July 2017, AWS could be using a slightly overclocked Xeon SP-8168 Platinum, which normally runs at a 2.7 GHz base clock speed, for the 3.1 GHz chips in this table.) AWS has not divulged the custom SKU it is using for the Epyc instances shown above. The AMD chips used in the EC2 service could be a slightly overclocked Epyc 7451, which runs at 2.3 GHz; it would not be a big deal to push that up to 2.5 GHz. (The commercial Epyc SKUs are shown here.)

Not that list price means much to a hyperscaler (it is a ceiling, certainly not a floor), but the Xeon SP-8168 lists for $5,980 a pop, while the Epyc 7451 lists for $2,400. There is a lot of room for the price cut in there, with the difference in price for a two-socket server being $7,160 for the processors alone. If you take the top bin Xeon M5d and Epyc M5ad instances and run them straight for three years, the Xeon machine costs $142,640 to rent from AWS and the Epyc machine costs $130,017 – the difference being $12,623. AMD is giving most of the savings and then some back to the Epyc buyers. (The math is the same if the machines are hosting multiple VMs from multiple customers, so long as the machines are bin packed with all 96 threads being used.)
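For anyone who wants to check that arithmetic or plug in different numbers, here is a minimal sketch in Python. It uses only the list prices and three-year rental totals quoted above; the function and constant names are our own, chosen for illustration, and nothing here reflects what AWS actually pays for its custom chips.

```python
# Figures quoted in the article; list prices are a ceiling, not what AWS pays.
XEON_SP_8168_LIST_USD = 5_980   # per processor
EPYC_7451_LIST_USD = 2_400      # per processor
SOCKETS_PER_SERVER = 2

XEON_M5D_3YR_RENT_USD = 142_640   # top bin M5d, run straight for three years
EPYC_M5AD_3YR_RENT_USD = 130_017  # top bin M5ad, run straight for three years


def processor_list_gap(xeon=XEON_SP_8168_LIST_USD,
                       epyc=EPYC_7451_LIST_USD,
                       sockets=SOCKETS_PER_SERVER):
    """List price difference for the processors in one server."""
    return (xeon - epyc) * sockets


def rental_gap(xeon=XEON_M5D_3YR_RENT_USD, epyc=EPYC_M5AD_3YR_RENT_USD):
    """Difference in three-year rental cost between the two instances."""
    return xeon - epyc


def rental_discount_pct(xeon=XEON_M5D_3YR_RENT_USD,
                        epyc=EPYC_M5AD_3YR_RENT_USD):
    """Epyc discount as a percentage of the Xeon rental cost."""
    return 100.0 * (xeon - epyc) / xeon


if __name__ == "__main__":
    print(f"Processor list price gap per server: ${processor_list_gap():,}")
    print(f"Three-year rental gap: ${rental_gap():,}")
    print(f"Rental discount: {rental_discount_pct():.1f} percent")
```

The rental gap comes out larger than the processor list price gap, which is the point of the comparison above.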

Here is the neat bit: The configurations of the Xeon M5d and Epyc M5ad instances are about as normalized as any two configurations on the cloud can be. These are perfect machines upon which to run bakeoffs. (There are some EBS and network bandwidth differences in some of the other Epyc/Xeon analogues.) So have some fun testing and tell us all about it.
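If you want a starting point for such a bakeoff, the sketch below times a workload several times, takes the median, and converts it into a cost per run. Everything in it is an assumption for illustration: `sample_workload` is a stand-in for your real application, and the hourly rate is a value you supply from your own AWS bill, not a quoted price. Run the same script on each instance type and compare the results.

```python
import statistics
import time


def time_workload(workload, repeats=5):
    """Run workload() `repeats` times; return the median wall-clock seconds."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        workload()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def cost_per_run(median_seconds, hourly_rate_usd):
    """Convert a run time into dollars at a given hourly instance rate."""
    return median_seconds / 3600.0 * hourly_rate_usd


def sample_workload():
    """Hypothetical stand-in; replace with a call into your own application."""
    return sum(i * i for i in range(200_000))


if __name__ == "__main__":
    # Placeholder rate: substitute the hourly price of the instance under test.
    hourly_rate_usd = 1.0
    median_seconds = time_workload(sample_workload)
    print(f"Median run time: {median_seconds:.4f} seconds")
    print(f"Cost per run: ${cost_per_run(median_seconds, hourly_rate_usd):.8f}")
```

Using the median rather than the mean keeps a single noisy run, which is common on shared infrastructure, from skewing the comparison.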

The Epyc instances are available in the US East (N. Virginia), US West (Oregon), US East (Ohio), and Asia Pacific (Singapore) regions of AWS and with On-Demand, Spot, and Reserved pricing.
