A major part of China’s several initiatives to build exascale-class supercomputers has been the country’s determination to rely mostly on homegrown technologies – from processors and accelerators to interconnects and software – rather than turn to vendors outside its borders, particularly those from the United States. The drive is part of a larger effort by China’s leaders to grow key industries inside the country, including its technology sector, to the point where they can compete with others across the globe.

Some of the fruits of the push for China-made technology components can be seen in the country’s Sunway TaihuLight supercomputer, the massive system that sat atop the Top500 list of the world’s fastest supercomputers, as ranked by the Linpack benchmark, until it was toppled in June by Summit. That system is based on technologies from IBM, Nvidia and Mellanox and is housed at Oak Ridge National Laboratory in the United States. TaihuLight, which delivers 93 petaflops of sustained Linpack performance, is powered by Sunway’s SW26010 processors, uses an interconnect technology from Sunway and runs the Sunway RaiseOS 2.0.5 operating system.

But as the country works toward its exascale system, engineers have to weigh such factors as how the systems will be used and the budgets available for developing the various components. The reliance on homegrown technologies is also raising its own challenges, including the need to develop an ecosystem to support them, according to Qian Depei of Beihang University and Sun Yat-sen University, where he is dean of the School of Data and Computer Science. Qian Depei spoke at this week’s SC18 supercomputing conference in Dallas.

Discussions about the ongoing competition between the United States and China in supercomputing and HPC tend to crop up around the ISC and SC supercomputing shows, and this week is no different. With the latest version of the Top500 list, much of the focus was not only on Sierra, another IBM-based supercomputer at Lawrence Livermore National Laboratory, muscling its way into the number-two spot and dropping TaihuLight into third place, but also on China growing its share of the 500 systems on the list to 227 – accounting for 45 percent – while the United States saw its number fall to 109 supercomputers, or 22 percent. However, the U.S. systems are on average more powerful, giving the country 38 percent of the aggregate system performance on the list. China had 31 percent.
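The system-count percentages above follow directly from the counts out of 500 entries. A minimal sketch of that arithmetic, using only the figures quoted from the list (the helper name `share_percent` is our own, for illustration):

```python
# Top500 share arithmetic using the system counts quoted above.
TOTAL_SYSTEMS = 500  # the Top500 list always has 500 entries

def share_percent(count: int, total: int = TOTAL_SYSTEMS) -> float:
    """Return count as a percentage of total (hypothetical helper)."""
    return 100.0 * count / total

china = share_percent(227)  # China's system count
us = share_percent(109)     # United States' system count
print(f"China: {china:.1f}%  United States: {us:.1f}%")
```

This gives 45.4 and 21.8 percent, which round to the 45 and 22 percent cited. The performance shares (38 and 31 percent) cannot be derived this way, because they depend on each system's Linpack result rather than on the raw count.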

The competition is not only about national pride. The leaders in supercomputing, HPC and particularly exascale computing – which is needed to run increasingly complex HPC workloads that more and more include big data analytics and artificial intelligence – will have an edge in everything from scientific research and the military to healthcare and the economy. The United States and China appear to be in a race to see which will get there first, though readers of The Next Platform know that the European Union is aggressively pursuing its own exascale initiatives, as is Japan.

During his address, Qian Depei told attendees that China has made high-performance computing a focus since 2002 and now has turned its efforts to building an exascale system.

“HPC has been identified as one of the priority areas in China since the early 1990s,” Qian Depei said. “In the last 15 years or so we have implemented three key projects. It was quite unusual [for a country] to continually support key projects in one area under the national high-performance program. That reflects the importance of the high-performance program. The result of the project was some petascale machines.”

The best known of those systems are TaihuLight and Tianhe-2, which went online in 2013 and held the top spot in the Top500 until TaihuLight knocked it off two years ago. The country’s supercomputing infrastructure, called China National Grid, now includes 200 petaflops of shared computing power and more than 160 PB of shared storage, hosting 400 applications and services that serve about 1,900 user groups. It includes two main sites, six national supercomputing centers, 10 ordinary sites and one operations center.

Now the country is in the midst of the project to build an exascale system, which starts with three prototype systems: Sugon, Tianhe and Sunway. Sugon will use traditional technologies like x86 processors and accelerators made by Chinese chip maker Hygon, a multi-level interconnect design and immersion cooling that will do away with the need for fans. The Tianhe prototype will use the new 16-nanometer MT-2000+ many-core processor from Matrix and a 3D butterfly network with a maximum of four hops across the whole system.

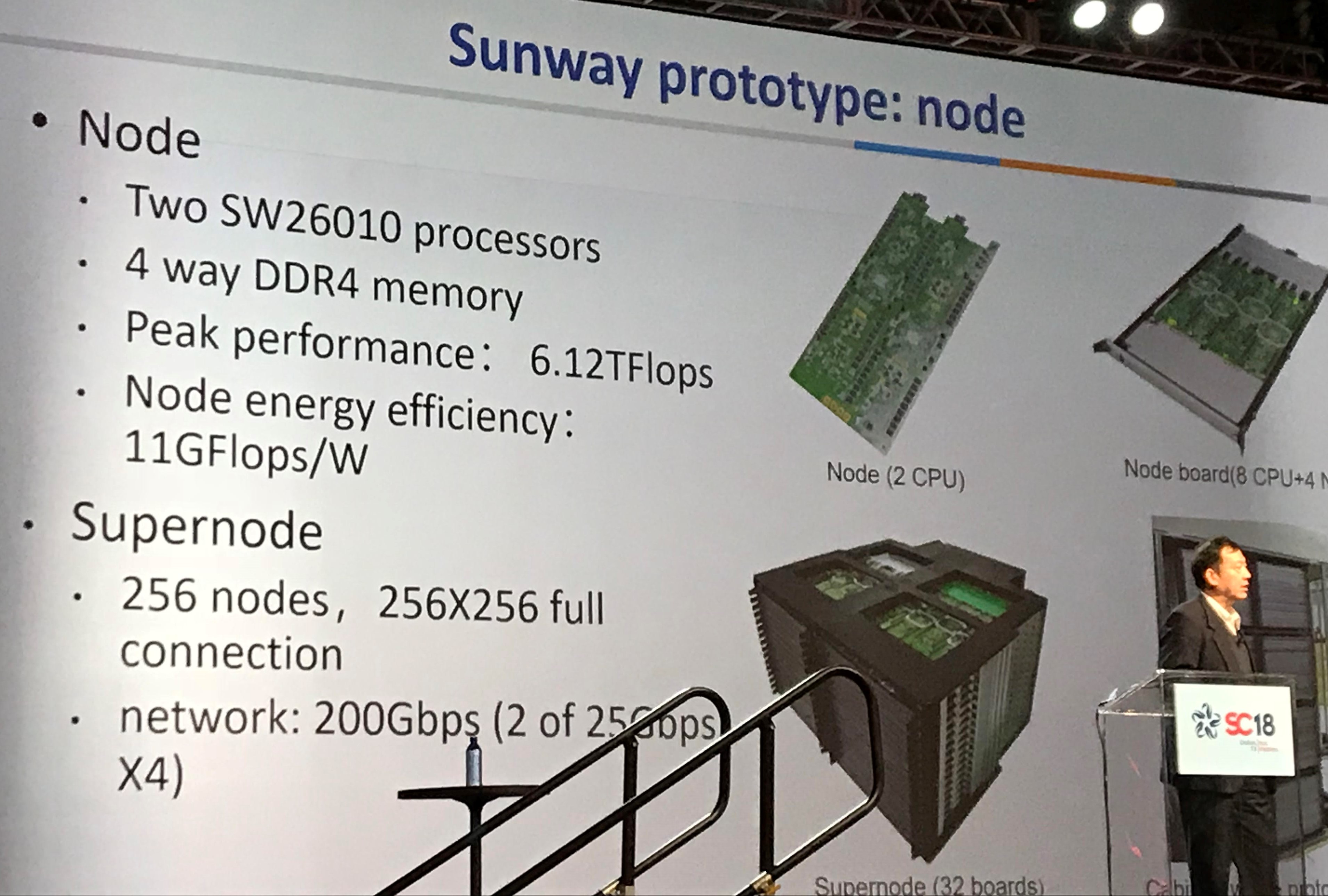

The Sunway prototype will use the SW26010 chips, a high-bandwidth, high-throughput network powered by a self-developed network chip, and a water-cooling system with an enhanced copper cold plate. A node will include two processors and four-way DDR4 memory, while a supernode will comprise 256 nodes with full 256 x 256 connectivity.
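To give a sense of scale, the node and supernode figures above can be multiplied out. This is a rough sketch, assuming the prototype uses the standard 260-core SW26010 (four core groups, each with one management core and 64 compute cores). That per-chip core count is a known property of the chip, not something stated in the talk, and the prototype's final configuration may differ:

```python
# Rough per-supernode counts for the Sunway prototype described above.
NODES_PER_SUPERNODE = 256   # from the talk
PROCESSORS_PER_NODE = 2     # from the talk
# Assumption: standard SW26010, 4 core groups x (1 management + 64 compute cores)
CORES_PER_SW26010 = 4 * (1 + 64)

processors = NODES_PER_SUPERNODE * PROCESSORS_PER_NODE
cores = processors * CORES_PER_SW26010
print(processors, cores)
```

Under those assumptions, a single supernode would hold 512 processors and 133,120 cores.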

Qian Depei said the challenges that need to be overcome include power consumption, application performance, programmability and resilience.

“The energy efficiency is the most challenging part of the project,” he said. “Without that limitation, I think it’s relatively easier to build an exascale system. So how can we balance the power consumption, performance and programmability? How can we support a wide range of applications while keeping high application efficiency, and how do we improve the resilience for long-term, nonstop applications?”

The engineers are weighing such questions as whether to develop a heterogeneous, accelerated system or one that leverages a many-core architecture. They’re focusing on hybrid memory that includes DRAM and non-volatile memory (NVM) and on putting the memory closer to the processor. They are also considering an optical interconnect and placing it closer to the chips by shrinking the size of the optical devices. As far as compute goes, the question is whether to go with a special-purpose or general-purpose processor.

“The number of exascale computing applications is small, so should we use a very efficient special-purpose architecture to support those applications?” he asked. “On the other hand, Chinese machines will be installed at general-purpose computing centers, so it’s impossible to support only a small number of applications. Our solution is combining general purpose plus special purpose.”

Work also is being done outside of the system itself. The country has upgraded the China National Grid, creating a service environment that includes a portal for users, growing it to 19 sites and improving the bandwidth. They’re creating an application development platform and another platform to drive HPC education and increase the country’s talent pool, as well as working to build an application ecosystem for its exascale system.

“Because the future exascale system will be implemented with our homegrown processor, the ecosystem has become a very crucial issue,” Qian Depei said. “We need the libraries, the compilers, the OS, the runtime to support the new processor, and we also need some binary dynamic translation to execute commercial software on our system. We need the tools to improve the performance and energy efficiency, and we know we also need the application development support. This is a very long-term job. We need the cooperation of the industry and also the end users.”
