The holy grail of deep learning has two handles. On one hand, there is the challenge of developing and scaling systems that can train ever-growing models. On the other, there is the need to cut inference latency while preserving the accuracy of trained models.

Doing both on the same system presents its own set of challenges. One group at IBM Research is betting that focusing on the compute-intensive training element will have a performance and efficiency trickle-down effect that speeds the entire deep learning workflow, from training to inference. This work, led at the T.J. Watson research facility by IBM Fellow Dr. Hillery Hunter, uses low-latency, high-bandwidth communication techniques borrowed from supercomputing. It also emphasizes tweaks to deep learning models (in one case, tuning ResNet to scale to 256 Pascal GPUs) to reach HPC-caliber performance and scalability.

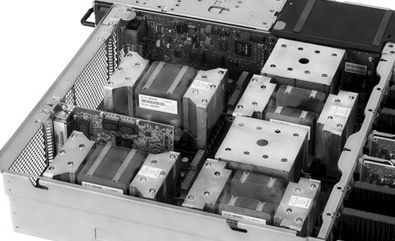

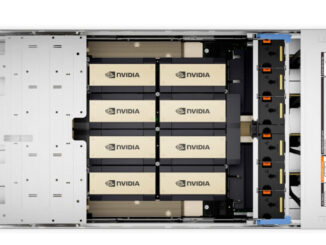

Granted, the systems Hunter’s team is working on are at the bleeding edge in both price and performance. But Hunter says that the highly iterative training step on increasingly large models requires supercomputer-class nodes like those that will appear in the forthcoming Summit supercomputer, built on the upcoming Power9/Volta GPU successors to the Minsky machines. Hunter’s group’s current results use the PowerAI framework (a bundled, optimized package of common deep learning frameworks) tuned for the Power8 processor, specifically on nodes with two Power8 chips, four Nvidia Pascal P100 GPUs, and doubled-up NVLink between the GPUs and back to the CPUs (each GPU has four NVLink connections).

“Our software does deep learning training fully synchronously with very low communication overhead. As a result, when we scaled to a large cluster with 100s of NVIDIA GPUs, it yielded record image recognition accuracy of 33.8% on 7.5M images from the ImageNet-22k dataset vs the previous best published result of 29.8% by Microsoft. A 4% increase in accuracy is a big leap forward; typical improvements in the past have been less than 1%. Our distributed deep learning (DDL) approach enabled us to not just improve accuracy, but also to train a ResNet-101 neural network model in just 7 hours, by leveraging the power of 10s of servers, equipped with 100s of NVIDIA GPUs; Microsoft took 10 days to train the same model. This achievement required we create the DDL code and algorithms to overcome issues inherent to scaling these otherwise powerful deep learning frameworks.”
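
To make “fully synchronous” training concrete, here is a minimal sketch of one synchronous data-parallel SGD step on a toy one-parameter model. It is not IBM’s DDL code: the model, data, learning rate, and the names `local_gradient` and `sync_sgd_step` are hypothetical, and the plain Python average stands in for the allreduce a real cluster would run. The point it shows is that every replica waits for every other replica’s gradient before anyone updates, so all replicas stay identical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair; hypothetical toy data format


def local_gradient(w: float, shard: List[Sample]) -> float:
    """d/dw of the mean squared error for the toy model y_hat = w * x on one data shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def sync_sgd_step(weights: List[float], shards: List[List[Sample]], lr: float) -> List[float]:
    """One fully synchronous step: each replica computes a gradient on its own shard,
    then all replicas apply the same averaged gradient."""
    grads = [local_gradient(w, s) for w, s in zip(weights, shards)]
    avg_grad = sum(grads) / len(grads)  # on a real cluster, an allreduce computes this average
    return [w - lr * avg_grad for w in weights]  # identical update keeps replicas in lockstep


if __name__ == "__main__":
    shards = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0), (4.0, 12.0)]]  # data drawn from y = 3x
    weights = [0.0, 0.0]  # one model replica per simulated GPU
    for _ in range(200):
        weights = sync_sgd_step(weights, shards, lr=0.01)
    print(weights)  # both replicas converge to ~3.0 and stay identical
```

With equal-sized shards, the averaged gradient equals the gradient over the whole batch, which is why synchronous training behaves like single-device training with a larger batch; the cost is that every step waits on communication.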

“The core algorithm is a multi-ring communication pattern that provides a good tradeoff between latency and bandwidth and adapts to a variety of system configurations. The communication algorithm is implemented as a library for easy use,” Hunter explains. This library has been integrated into TensorFlow, Caffe, and Torch. The team trained ResNet-101 on ImageNet-22K with 64 IBM Power8 S822LC servers (256 GPUs) in about 7 hours, reaching 33.8% validation accuracy. “Microsoft’s ADAM and Google’s DistBelief results did not reach 30% validation accuracy for ImageNet-22K. Compared to Facebook AI Research’s recent paper on 256-GPU training, we use a different communication algorithm, and our combined software and hardware system offers lower communication overhead for ResNet-50. A PowerAI DDL-enabled version of Torch completed 90 epochs of training on ResNet-50 for 1K classes in 50 minutes using 64 IBM Power8 S822LC servers (256 GPUs).”
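
IBM does not describe its multi-ring algorithm in detail here, so the following is only a sketch under stated assumptions. `ring_allreduce` simulates the generic ring allreduce (a reduce-scatter phase followed by an allgather phase) that ring-based libraries such as NCCL are known for. `multi_ring_allreduce` is a hypothetical multi-ring variant that splits the gradient into one segment per ring and reduces each segment over a ring with a different worker ordering; it illustrates the idea of using several rings at once and should not be read as IBM’s actual design.

```python
from typing import List, Optional, Sequence


def ring_allreduce(buffers: Sequence[Sequence[float]],
                   order: Optional[Sequence[int]] = None) -> List[List[float]]:
    """Sum-allreduce over a simulated ring of workers.

    buffers[w] is worker w's local gradient; order lists the workers in ring order.
    Returns one fully reduced copy of the gradient per worker.
    """
    n = len(buffers)
    ring = list(range(n)) if order is None else list(order)
    data = [list(b) for b in buffers]  # each worker's working copy
    length = len(data[0]) if n else 0
    bounds = [(k * length // n, (k + 1) * length // n) for k in range(n)]  # n chunks

    # Phase 1, reduce-scatter: after n-1 steps, ring position p owns the full sum of chunk p+1.
    for step in range(n - 1):
        msgs = []
        for p in range(n):
            lo, hi = bounds[(p - step) % n]
            # Snapshot every outgoing chunk before applying any, so all sends are "simultaneous".
            msgs.append((ring[(p + 1) % n], lo, data[ring[p]][lo:hi]))
        for dst, lo, payload in msgs:
            for j, v in enumerate(payload):
                data[dst][lo + j] += v

    # Phase 2, allgather: pass the reduced chunks around so every worker ends with all of them.
    for step in range(n - 1):
        msgs = []
        for p in range(n):
            lo, hi = bounds[(p + 1 - step) % n]
            msgs.append((ring[(p + 1) % n], lo, data[ring[p]][lo:hi]))
        for dst, lo, payload in msgs:
            data[dst][lo:lo + len(payload)] = payload
    return data


def multi_ring_allreduce(buffers: Sequence[Sequence[float]],
                         rings: Sequence[Sequence[int]]) -> List[List[float]]:
    """Hypothetical multi-ring variant: one gradient segment per ring,
    each ring using a different worker ordering."""
    n, length, r = len(buffers), len(buffers[0]), len(rings)
    result = [[0.0] * length for _ in range(n)]
    for k, ring in enumerate(rings):
        lo, hi = k * length // r, (k + 1) * length // r
        reduced = ring_allreduce([b[lo:hi] for b in buffers], ring)
        for w in range(n):
            result[w][lo:hi] = reduced[w]
    return result


if __name__ == "__main__":
    grads = [[float(10 * w + j) for j in range(7)] for w in range(4)]  # 4 workers, 7 values each
    out = multi_ring_allreduce(grads, rings=[[0, 1, 2, 3], [3, 1, 0, 2]])
    print(out[0])  # [60.0, 64.0, 68.0, 72.0, 76.0, 80.0, 84.0], identical on every worker
```

In a single ring each worker sends 2(N-1) chunks of size S/N, roughly twice its gradient size regardless of N, which is why rings are bandwidth-efficient; the 2(N-1) sequential steps are what make latency matter at large N, the tradeoff Hunter describes.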

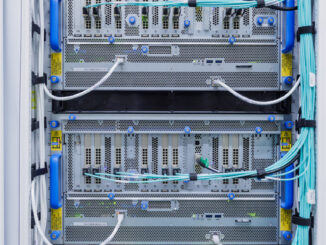

In terms of scaling across so many GPUs, Hunter says that methods already exist, including Nvidia’s NCCL, but what her team has done is optimize these approaches for Power8. “Our team focused on getting the wait times out of deep learning with this co-optimization between hardware and software. We have taken the approach of a deep learning framework with independent communication libraries with low latencies to scale neural networks.

“The best scaling for 256 GPUs shown before is by a team from Facebook AI Research (FAIR). FAIR used a smaller deep learning model, ResNet-50, on a smaller dataset, ImageNet-1K, which has about 1.3 million images, both of which reduce computational complexity. It used a larger batch size of 8192 and achieved 89% scaling efficiency on a 256-GPU NVIDIA P100 cluster using the Caffe2 deep learning software. For a ResNet-50 model and the same dataset as Facebook, the IBM Research DDL software achieved an efficiency of 95% using Caffe as shown in the chart below. This was run on a cluster of 64 “Minsky” Power S822LC systems, with four NVIDIA P100 GPUs each.”
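
The article does not define scaling efficiency, so this sketch assumes the conventional definition: measured N-GPU throughput divided by N times single-GPU throughput. Under that assumption, the 89% and 95% figures translate into how many “perfectly scaled” GPUs each 256-GPU cluster is worth. FAIR’s global batch of 8192 works out to 32 images per GPU; the article does not give IBM’s batch size, so no per-GPU figure is computed for it. The function names are illustrative.

```python
def scaling_efficiency(throughput_n: float, throughput_1: float, n: int) -> float:
    """Measured N-device throughput divided by ideal linear scaling (N x one device)."""
    return throughput_n / (n * throughput_1)


def effective_devices(efficiency: float, n: int) -> float:
    """How many perfectly scaled devices the cluster is 'worth' at this efficiency."""
    return efficiency * n


GPUS = 256
FAIR_GLOBAL_BATCH = 8192
per_gpu_batch = FAIR_GLOBAL_BATCH // GPUS  # 32 images per GPU per step in FAIR's run

if __name__ == "__main__":
    for label, eff in [("FAIR, Caffe2", 0.89), ("IBM DDL, Caffe", 0.95)]:
        print(f"{label}: ~{effective_devices(eff, GPUS):.1f} of {GPUS} GPUs' worth of throughput")
    # FAIR, Caffe2: ~227.8 of 256 GPUs' worth of throughput
    # IBM DDL, Caffe: ~243.2 of 256 GPUs' worth of throughput
```

Because the two runs used different frameworks and the article gives no batch size for IBM’s run, the comparison is indicative rather than apples to apples.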

“Ironically, this problem of orchestrating and optimizing a deep learning problem across many servers is made much more difficult as GPUs get faster. This has created a functional gap in deep learning systems that drove us to create a new class of DDL software to make it possible to run popular open source codes like TensorFlow, Caffe, Torch and Chainer over massive-scale neural networks and data sets with very high performance and very high accuracy,” Hunter explains.
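
The irony Hunter points to follows from simple arithmetic. In synchronous training, each step costs compute time plus whatever communication time cannot be hidden behind compute. If a faster GPU shrinks the compute part but the communication cost stays the same, the fraction of time spent computing drops. The sketch below uses hypothetical numbers (a 100 ms step, 10 ms of exposed communication) purely for illustration.

```python
def step_efficiency(compute_ms: float, exposed_comm_ms: float) -> float:
    """Fraction of each training step spent on useful compute, assuming the
    exposed (non-overlapped) communication time does not shrink with the GPU."""
    return compute_ms / (compute_ms + exposed_comm_ms)


if __name__ == "__main__":
    COMM_MS = 10.0  # hypothetical fixed communication cost per step
    for speedup in (1, 2, 4):
        compute = 100.0 / speedup  # hypothetical 100 ms step on today's GPU
        print(f"{speedup}x faster GPU: {step_efficiency(compute, COMM_MS):.1%} efficiency")
    # 1x faster GPU: 90.9% efficiency
    # 2x faster GPU: 83.3% efficiency
    # 4x faster GPU: 71.4% efficiency
```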

Hunter says that most systems today are designed with a fairly narrow pipe for data transfer over the PCIe links between the CPU and GPUs. “A lot of our focus in this work and going forward is on our system balance. As the GPUs and CPUs get faster it is more important to keep them fed, so for us, the future is about driving system designs that emphasize this fluid data movement,” she adds.
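
One way to see why link bandwidth matters is the widely used alpha-beta cost model for a ring allreduce: 2(N-1) message start-ups of latency α each, plus 2(N-1)/N of the gradient buffer pushed through the slowest link of bandwidth B. All inputs below are assumptions for illustration, not measurements from IBM: a gradient of about 25.6M fp32 parameters (roughly ResNet-50-sized), a hypothetical 5 µs per-message latency, and two example bandwidths, about 16 GB/s (roughly a PCIe 3.0 x16 link) and 40 GB/s (two first-generation NVLink links at 20 GB/s each).

```python
def ring_allreduce_seconds(n: int, size_bytes: float, bandwidth_bps: float, alpha_s: float) -> float:
    """Alpha-beta estimate for a ring allreduce over n workers.

    Latency term: 2(n-1) sequential message start-ups.
    Bandwidth term: each worker sends 2(n-1)/n of the buffer over the slowest link.
    """
    latency = 2 * (n - 1) * alpha_s
    bandwidth = 2 * (n - 1) / n * size_bytes / bandwidth_bps
    return latency + bandwidth


if __name__ == "__main__":
    GRAD_BYTES = 25.6e6 * 4  # assumption: ~25.6M fp32 parameters (ResNet-50-sized)
    ALPHA = 5e-6             # hypothetical per-message latency, in seconds
    for label, bw in [("16 GB/s link", 16e9), ("40 GB/s link", 40e9)]:
        t = ring_allreduce_seconds(256, GRAD_BYTES, bw, ALPHA)
        print(f"{label}: {t * 1e3:.2f} ms per allreduce")
    # 16 GB/s link: 15.30 ms per allreduce
    # 40 GB/s link: 7.65 ms per allreduce
```

In a real multi-node cluster the slowest link in the ring may be the inter-node network rather than PCIe or NVLink; the model simply charges the bandwidth term to whichever link is the bottleneck.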

For developers and data scientists, the IBM Research DDL software presents an API (application programming interface) that each deep learning framework can hook into to scale across multiple servers. A technical preview is available now in version 4 of the PowerAI enterprise deep learning software offering, making this cluster scaling feature available to any organization using deep learning to train its AI models.
