Not every organization that relies on supercomputers can replace a whole machine in one fell swoop. In fact, we would venture to generalize and say that it is a rare organization that can do such a thing. In a lot of cases that we know of, companies keep two and sometimes three or four generations of systems in their clusters as they squeeze all of the economic and technical life out of that iron before sending it to the crusher.

The National Oceanic and Atmospheric Administration, which does weather and climate forecasting on a regional, national, and global level, has just fired up a pair of shiny new Cray XC40 systems, dubbed “Luna” and “Surge,” located in the facilities of NOAA’s National Centers for Environmental Prediction (NCEP) in Reston, Virginia, and Orlando, Florida. NOAA has been testing the Cray machines alongside its existing clusters since last fall and accepted the XC40s in December. (Cray has been on a tear in recent years, winning deals at weather forecasting centers all over the globe.) NOAA made the announcement this week, and Ben Kyger, director of NCEP central operations, tells The Next Platform that the first operational weather forecasting models will be put on the Cray machines next month.

The most recent upgrade at NOAA’s NCEP facility is part of a ten-year, $502 million contract awarded to IBM in March 2011 to supply a pair of systems called the Weather and Climate Operational Supercomputing System, or WCOSS, and to upgrade them every few years. (Technically, the WCOSS contract ran for five years with a three-year extension and a two-year transition period in the event that NCEP decided to make a big change in system design or architecture.) Under that deal, IBM installed two different generations of its Xeon-based iDataPlex hyperscale-style systems and moved NCEP off of its Power-based systems, specifically the water-cooled, Power6-based machines known as the Power 575s.

The sale of IBM’s System x division to Lenovo in late 2014 complicated things a bit for the IBM contract with NCEP, but Big Blue worked out a deal to resell Cray’s XC40s since it no longer had an x86 server line of its own. (If Lenovo was counting on preserving this account as well as a similar supercomputing facility at the National Center for Atmospheric Research, which does weather and climate research and modeling but not the day-to-day forecasting done by the National Weather Service, then the company has been sorely disappointed.)

Supercomputing always has a nationalistic and political flavor, given that governments fund the development of these machines and are the largest buyers of these big, bad boxes, but what really matters to most of us is that weather forecasting gets better. The Luna and Surge systems are all about laying the foundation for that, and they are part of a much larger investment in numerical weather simulation that The Next Platform discussed in detail last summer.

“Luna and Surge are going to allow us to increase the resolution of all of our models, but especially the global model and the global ensemble model,” says Kyger, referring to the Global Forecast System (GFS) and the Global Ensemble Forecast System (GEFS).

The ensemble models are interesting in that they put some probability on the forecasts generated by the Weather Research and Forecasting (WRF) model that is at the heart of the applications used by NOAA. “You take the global model and you lower the resolution of it and then you run 20 versions of it at the same time with slightly different input conditions,” Kyger explains. “And by doing that, you see how quickly the forecasts diverge so you can get some kind of sense of the uncertainty of your forecasts. If they diverge quickly, that means it is very sensitive to the initial conditions, and it means you are more likely to be wrong. However, if all of the answers come out to be the same no matter how much you jiggle the initial conditions, your forecast is probably going to be right.”

With the additional capacity, the resulting cluster of clusters (two iDataPlex generations plus the XC40s) has a total of 5.78 petaflops of aggregate peak computing power, split evenly across the two centers at 2.89 petaflops each so that the workloads are redundant and highly available.

As the new XC40 machines are shaken down, various codes will be tweaked to take advantage of the extra computing oomph and, in some cases, of the “Aries” interconnect at the heart of the XC40 machines. NOAA plans to upgrade its High Resolution Rapid Refresh (HRRR) model, which is used to predict the timing and strength of winter snowstorms and, at other times of the year, severe thunderstorms. The Weather Research and Forecasting Hydrologic (WRF-Hydro) model will be expanded from 3,600 locations in the United States to 2.67 million river and stream locations across the country, forecasting flow, soil moisture, snow water equivalent, evapotranspiration, runoff, and other metrics; according to NOAA, this is a 700-fold increase in spatial density coverage. The extra computing from the two XC40 systems will also be used by a tweaked version of the Hurricane Weather Research and Forecasting (HWRF) model, in which the air, ocean, and wave parts of the simulation are linked for the first time to improve forecasts of hurricane strength and track. HWRF will also be boosted so it can track up to eight storms at the same time.

The upgraded systems at NOAA are, in part, thanks to Hurricane Sandy. The pair of XC40s cost $44.5 million, of which $19.5 million came from the operational contract with IBM and the other $25 million came from a one-time infusion under the Disaster Relief Appropriations Act of 2013, which paid for the damage from Hurricane Sandy. All weather centers and research institutions want more computing and better models to take advantage of it, and NOAA is understandably sensitive to the fact that the European Centre for Medium-Range Weather Forecasts (ECMWF) models predicted landfall for Hurricane Sandy early on when other models, such as HWRF, did not.

“Keep in mind, our global model sometimes outperforms the European model – they don’t win every day,” Kyger tells The Next Platform. “And in the case of Hurricane Sandy, they won day five when they said it was going to turn left and we said it was going to turn right. By day four, everyone said it was going to turn left, to give you a sense of the difference. It is pretty subtle, actually, but it was high profile enough that Congress didn’t like it. The only way we can do state-of-the-art science is if we have enough computing to do that. We needed a boost, and now we are pretty well positioned. We usually upgrade every three years or so and we usually have a 2.5X to 3X increase, and this was like an extra upgrade.”

The first model to make the jump to the new XC40s will be the High Resolution Window (HIRESW) forecast system, according to Kyger. This is an intermediate-scale model that allows forecasters to take an area of North America, say where there is a forest fire, and zoom in on it to model it at higher fidelity than the full national or global models allow, with atmospheric cells of 3 to 4 kilometers. By contrast, the North American Mesoscale forecast system used to model weather in the lower 48 states has 12-kilometer cells in its current incarnation.

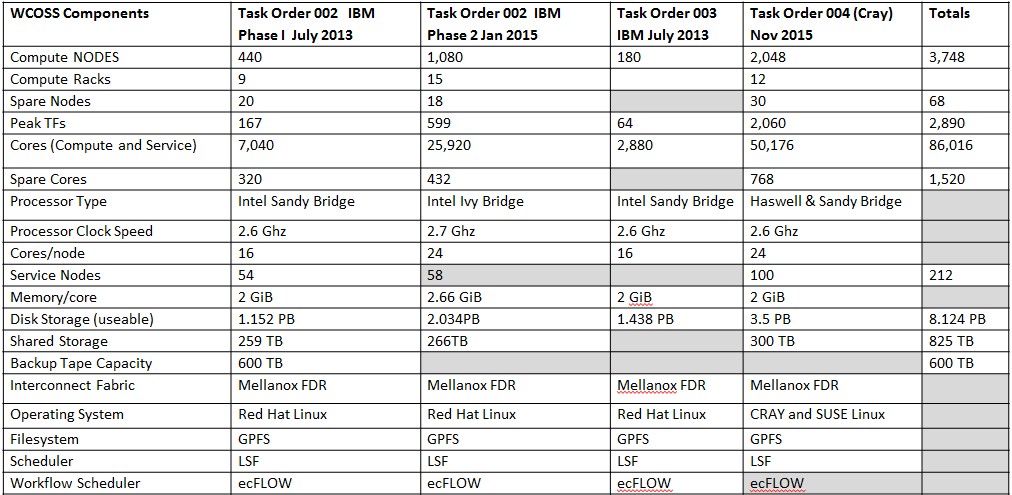

When IBM and Cray announced the Luna and Surge procurement this time last year, very little was known about the systems. Here are the feeds and speeds of the prior iDataPlex machines, which were nicknamed Tide and Gyre, and the Luna and Surge upgrades:

The first parts of the WCOSS clusters at NOAA, called Tide in the Virginia datacenter and Gyre in the Orlando backup center, processed 3.5 billion observations per day from various input devices used in weather forecasting and cranked out over 15 million simulations, models, reports, and other outputs per day. These machines were initially fired up in July 2013, and failover between the two active systems is tested regularly, with the goal of having at least 99.9 percent uptime for running models and 99 percent on-time generation of products kicked out from the models. These phase one machines were rated at 167 teraflops peak and were almost immediately upgraded with another 64 teraflops, as you can see in the procurement table.
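To put the availability target in perspective: assuming the 99.9 percent uptime goal is measured over a full year of 8,760 hours (an assumption; the article does not say what window NOAA uses), it leaves a budget of less than nine hours of downtime for running models:

$$
(1 - 0.999) \times 8{,}760\ \text{h} = 8.76\ \text{h of downtime per year}
$$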

In January 2015, as The Next Platform was being created, IBM added a bunch of iDataPlex nodes to Tide and Gyre, adding 599 teraflops to each system and bringing each to a total of 830 teraflops of compute. All of the nodes run Red Hat Enterprise Linux, link to each other using 56 Gb/sec FDR InfiniBand, and use IBM’s Platform Computing Load Sharing Facility (LSF) to schedule work on the two clusters.

With the Luna and Surge upgrades, NOAA is introducing a new network, the Aries interconnect, to its compute clusters (even though the chart above says FDR InfiniBand), and it is getting the big bump in performance it has been seeking to improve its forecasting models. Each XC40 system has 2,048 nodes using “Haswell” Xeon E5 processors and delivers 2.06 petaflops on its own. Add it all up, and the resulting NOAA machines have 2.89 petaflops of oomph at each center. The Cray nodes will run a modified version of SUSE Linux Enterprise Server, as all Cray XT and XC machines have for a long time, and will use LSF to schedule work on the cluster.
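The per-center and aggregate figures follow from the peak numbers quoted for each phase: the 167 teraflops of phase one, the 64 teraflop follow-on, the 599 teraflops added in January 2015, and the 2.06 petaflops of each XC40 (all values below in petaflops):

$$
\begin{aligned}
\text{Tide or Gyre: } & 0.167 + 0.064 + 0.599 = 0.830\ \text{PF} \\
\text{plus one XC40: } & 0.830 + 2.06 = 2.89\ \text{PF per center} \\
\text{both centers: } & 2 \times 2.89 = 5.78\ \text{PF}
\end{aligned}
$$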

Mixing different kinds of compute networks might sound like trouble, but Kyger says it is not a big deal, particularly since all parts of the cluster (with mixed iDataPlex and XC40 nodes) will be linked to the same 8.1 PB parallel file system, which runs IBM’s General Parallel File System (GPFS), software aimed at HPC and now at some analytics workloads as well.

“You keep them separate,” says Kyger, referring to the two different compute networks. “They share a file system but they don’t share the compute network. We made the business decision that when we run the production suite, we never run it across the seams. So the global model only runs on one of those architectures, and it always runs on a particular one and it never runs across two different ones.”
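Kyger’s rule that production runs never cross the seam between architectures amounts to a simple placement constraint: every node a job uses must belong to one partition, and a model pinned to a partition must run there. The sketch below checks that constraint. The node names, the partition labels, and the choice of which partition the global model is pinned to are all hypothetical, since the article names none of them; in practice such a rule would presumably be enforced through the scheduler’s own configuration rather than through code like this.

```python
from typing import Dict, Iterable


def validate_placement(model: str, nodes: Iterable[str],
                       partition_of: Dict[str, str],
                       pinned: Dict[str, str]) -> str:
    """Return the partition a production job will run on, or raise ValueError
    if its node list crosses the seam between architectures or misses the
    partition the model is pinned to."""
    used = {partition_of[node] for node in nodes}
    if len(used) != 1:
        raise ValueError(
            f"{model}: node list spans {sorted(used)}; never run across the seams")
    (partition,) = used
    if model in pinned and pinned[model] != partition:
        raise ValueError(f"{model}: must run on {pinned[model]}, not {partition}")
    return partition


if __name__ == "__main__":
    # Hypothetical node-to-partition map and model pinning, for illustration only.
    partition_of = {"ida001": "idataplex", "ida002": "idataplex",
                    "xc0001": "xc40", "xc0002": "xc40"}
    pinned = {"GFS": "xc40"}
    print(validate_placement("GFS", ["xc0001", "xc0002"], partition_of, pinned))
    try:
        validate_placement("GFS", ["ida001", "xc0001"], partition_of, pinned)
    except ValueError as err:
        print(err)
```

Rejecting a mixed node list outright, rather than trying to balance work across both networks, mirrors the business decision Kyger describes: the shared GPFS file system is the only thing the two sides have in common.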

The important thing is that NOAA doesn’t have to wrestle with too many different Xeon processor generations. “We don’t want to have more than three chip families in there – it is hard enough with three,” Kyger says with a laugh, which means the Sandy Bridge nodes will be unplugged when the next machine arrives in the NOAA datacenters some years hence. “The interconnect is pretty transparent to us, and we are still using Intel compilers on both types of machine. There are differences, but it is not anything like going from a proprietary system like IBM’s Power machines to a Linux machine – that was tough. This is relatively easy.”

Way back in the dawn of time, throughout the 1990s, the NCEP facilities at NOAA employed various generations of Cray Y-MP vector supercomputers to do their weather forecasting. In 2002, IBM won a deal to supply NCEP, and over the years various Power-based clusters using Power4 and Power5 processors were deployed in NOAA’s own datacenters in Maryland and West Virginia. The two final Power-based clusters, “Stratus” and “Cirrus,” went live in August 2009 and had 156 nodes of IBM’s Power 575 water-cooled machines linked by a 20 Gb/sec DDR InfiniBand network, with a total of 4,992 cores running at 4.7 GHz. These machines delivered a mere 73.1 teraflops. (Not too bad at the time, mind you.) In retrospect, the jump from Stratus/Cirrus to Luna/Surge over the past seven years is the truly dramatic number: a factor of 40X in performance.
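The 40X factor works out if the 73.1 teraflops of the Power6 era is set against the 2.89 petaflops now installed at each center (iDataPlex plus XC40), with both figures taken as peak ratings:

$$
\frac{2.89\ \text{PF}}{73.1\ \text{TF}} = \frac{2{,}890}{73.1} \approx 39.5 \approx 40\times
$$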

NOAA is four years into that ten-year deal with IBM to provide systems for weather forecasting. In year five, in 2017, NOAA can exercise a two- or three-year extension or go fishing for a new supplier. IBM could try to convince NOAA to go with a Power-GPU hybrid system, or it could continue to resell Cray machines to fulfill the contract. Cray may be able to win the NOAA account on its own, or other aggressive companies such as SGI, Hewlett-Packard Enterprise, or Dell may try to get their foot in the door. NCAR has over the years shifted from IBM Power machines with InfiniBand to IBM iDataPlex machines with faster InfiniBand networks, a path that NOAA sort of followed. With its most recent acquisition, announced this week, NCAR went with Xeon-based SGI ICE XA machines and even zippier 100 Gb/sec EDR InfiniBand networks rather than Cray XC40s and Aries.

NCAR and NOAA do not mirror each other, but they often make similar supercomputing choices. In this case, they are diverging a bit, but perhaps not as much as people might think, at least from what NOAA says about mixing different networks on the compute side. With so many options available in the coming years, with weather codes being adapted to run on hybrid CPU-GPU systems, and the competitive pressure rising in HPC, supercomputer centers like NCAR and NOAA will have some interesting decisions to make. We will be watching along with the rest of the HPC community.
