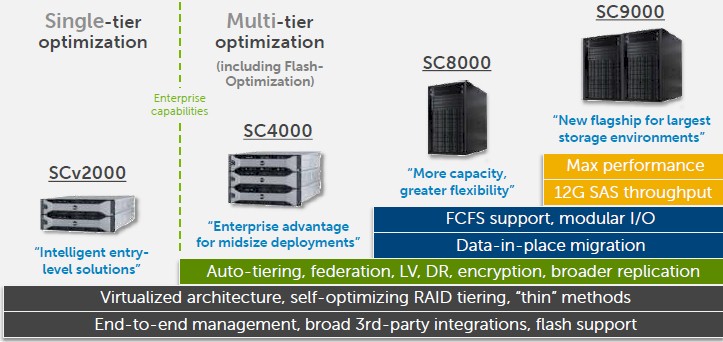

Five years ago, when flash was still an exotic thing in enterprise storage but had been a mainstay for accelerating databases and metadata at hyperscalers for a number of years, Dell and EMC were partners, but Dell's acquisition of Compellent Technologies for $960 million essentially broke up that relationship. The irony is that Dell is now trying to buy EMC for $67 billion, and it has to rationalize a slew of overlapping and complementary storage technologies that it will control.

In the meantime, everything has to progress as normal, and Dell has to sell its Compellent SC9000 arrays against disk, hybrid, and all-flash products from EMC and the swelling ranks of competitors that are trying to take a bite out of datacenter storage from many different angles.

“There is a definite trend in datacenters for two different approaches to infrastructure,” Travis Vigil, executive director of product management for Dell Storage, tells The Next Platform. “One is the current model, which is best-of-breed servers, storage, and networking, and the other is much more software-defined with servers as the building block. The common thread across both of these models is that customers want this technology to be simpler and easy to use.”

Not to split hairs, but to our eye, it is looking more like there is one kind of storage, or maybe 1.5 kinds if you want to get all fractal about it. Both models that Vigil referenced above are based on designs that use X86 servers (and let’s be honest here and say machines using Intel Xeon processors) as the controllers for the storage, whereas in the past companies created custom ASICs or maybe used a RISC processor as the main brains running the data storage and retrieval algorithms. Indeed, the big news this week for Dell is that it is moving the Compellent SC storage arrays up to its PowerEdge 13G server platform, which is based on Intel’s “Haswell” Xeon E5 v3 processors. (The prior SC8000 generation was based on the “Ivy Bridge” Xeon E5 v2 motors.)

If you look at a hyperconverged stack, the server node is the storage node, the SAN software runs across those nodes, and expansion enclosures are not used to expand disk or flash capacity. The SCOS storage operating system used in the Compellent SC arrays has its own scalability limits – in this case, it tops out at about 3 PB in a single array, and customers who need more than that can federate SC8000 and SC9000 arrays and move workloads and volumes across clustered arrays without impacting their snapshot or replication routines. The Nutanix hyperconverged software that Dell sells on its hyperscale-inspired XC series of storage servers has its own scalability limits, too, as all software does, and in this case, the underlying scalability of the management software that controls the hypervisor a customer chooses determines the scalability of the hyperconverged storage cluster. Generally speaking, hyperconverged storage scales CPU and storage in lockstep by adding nodes, but there is no technical reason why nodes could not be equipped with storage expansion enclosures. But the point of hyperconverged storage is that particular appliance configurations are made for specific workloads – general purpose server virtualization, virtual desktop infrastructure, databases, and so on – and then scaled out in a uniform fashion.

Our point is that the differences between traditional storage and hyperconverged are subtle, and we agree that vendors are tweaking servers to meet their different hardware requirements. But that is different from building two totally different architectures, as was the case for servers and storage before the hyperscalers ran out of storage scale a decade ago and started doing things differently.

How Does All-Flash At 65 Cents Per GB Grab You?

The battle to make flash-based storage ever less expensive continues and the goal is to get the price of all-flash arrays equipped with sophisticated wear leveling, compression, and possibly de-duplication software to be competitive with hybrid disk-flash and maybe even all-disk arrays. These are all moving targets, and everyone seems to have a different way of comparing themselves to each other. (Which makes this no different than any other segment of the IT market for the past few decades, we suppose.)

The flash fabs of the world can’t make enough non-volatile memory to replace disks – or so we hear from some in the industry – so we had better hope all-flash doesn’t take off much faster than it already is. But it is probably not a coincidence that Intel just announced this week that it will be spending $5.5 billion converting its 65 nanometer foundry in Dalian, China to making 3D NAND flash memory; initial production will start in the second half of 2016. (Intel has a fab in Lehi, Utah that it co-operates with memory maker Micron Technology, where it makes NAND flash and where it will be making the jointly developed 3D XPoint non-volatile memory that will roll out in SSDs next year and will eventually be part of the “Purley” server platform that sports Intel’s “Skylake” Xeon E5 v5 processors.) Intel has been keen on accelerating cloud rollouts to get companies to eat more of its Xeons, and maybe it is going to be pushing non-volatile memory hard to make up for a slumping PC business. (That’s what we would do, and the wonder is that more Intel capacity isn’t being converted given how fast its flash business is growing.)

The new SC9000 controllers have a pair of eight-core Xeon E5 v3 processors running at 3.2 GHz, and each array has a pair of controllers that run in an active/active mode to spread work across the pair of servers and to provide fault tolerance in the event one controller crashes. The SC9000 controller nodes top out at 256 GB of main memory each, four times that of the SC8000’s nodes, which used a pair of six-core Xeon E5 v2 processors running at 2.5 GHz. That’s about 70 percent more raw computing power in the controllers, and with the jump to 12 Gb/sec SAS ports on the backend out to disk drives (double the bandwidth of those used in the SC8000), it is not much of a surprise that this array can, at 385,000 IOPS with an average latency of under 1 millisecond for an all-flash setup, deliver about 40 percent more IOPS than the SC8000 that is its predecessor. The new SC9000 controller nodes cost a little bit more than those of the SC8000, Vigil says, based on the higher bandwidth and IOPS they deliver.
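The "about 70 percent" figure can be checked with back-of-the-envelope arithmetic. The sketch below is only a rough illustration: it assumes raw computing power is approximated by sockets × cores × clock speed, which ignores per-clock improvements between Ivy Bridge and Haswell, and the function name `aggregate_ghz` is ours, not Dell's. The SC8000 IOPS number it prints is merely implied by the 40 percent claim and was not supplied by Dell.

```python
def aggregate_ghz(sockets: int, cores_per_socket: int, clock_ghz: float) -> float:
    """Crude proxy for controller compute: total core-GHz per controller."""
    return sockets * cores_per_socket * clock_ghz


# Figures quoted in the article for one controller node.
sc9000 = aggregate_ghz(sockets=2, cores_per_socket=8, clock_ghz=3.2)
sc8000 = aggregate_ghz(sockets=2, cores_per_socket=6, clock_ghz=2.5)

# Relative uplift in core-GHz, as a percentage.
uplift_pct = (sc9000 / sc8000 - 1) * 100

# If 385,000 IOPS is "about 40 percent more" than the SC8000,
# the predecessor's figure is implied (not stated) to be roughly:
implied_sc8000_iops = 385_000 / 1.40

print(f"SC9000: {sc9000:.1f} core-GHz, SC8000: {sc8000:.1f} core-GHz")
print(f"Compute uplift: {uplift_pct:.0f} percent")
print(f"Implied SC8000 IOPS: {implied_sc8000_iops:,.0f}")
```

Under those assumptions the core-GHz ratio lands close to the article's 70 percent, while the IOPS gain is smaller, which suggests the controllers are not the only bottleneck.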

The price for an all-flash configuration is probably what is going to get Dell as much attention as the improved performance. With two SC9000 controllers, plus 336 read-intensive flash SSDs with 3.84 TB of capacity each (based on TLC 3D NAND) and including the SCOS 6.7 array software, installation fees, and three years of Dell Copilot support, Vigil says that Dell can get the price down to $1.42 per GB of raw capacity for that 1.3 PB of capacity. That price includes enough of the SC420 expansion enclosures to hold all of those SSDs (in this case, 14 of the units). (The SC420 enclosures support 24 2.5-inch drives while the SC400 supports a dozen 3.5-inch drives.) Turning on compression and assuming a little better than 2:1 compression on data, the price drops down to 65 cents per effective GB. That compression feature is new with the SCOS 6.7 software update that also comes out this week; prior to this, Dell could do compression but only for inactive snapshots on Tier 3 archival storage.
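The capacity, enclosure count, and compression ratio quoted here hang together arithmetically, as the short sketch below shows. It assumes decimal units (1 TB = 1,000 GB), and the total system price it prints is our own extrapolation from the per-GB figure, not a number Dell supplied.

```python
import math

# Figures quoted in the article.
DRIVES = 336
DRIVE_TB = 3.84
SLOTS_PER_SC420 = 24
RAW_PRICE_PER_GB = 1.42        # dollars per raw GB
EFFECTIVE_PRICE_PER_GB = 0.65  # dollars per GB after compression

# Raw capacity in TB and the number of SC420 enclosures needed.
raw_tb = DRIVES * DRIVE_TB
enclosures = math.ceil(DRIVES / SLOTS_PER_SC420)

# Compression ratio implied by the two per-GB prices.
implied_ratio = RAW_PRICE_PER_GB / EFFECTIVE_PRICE_PER_GB

# Extrapolated system price (assumption: decimal GB, price scales linearly).
implied_system_price = raw_tb * 1000 * RAW_PRICE_PER_GB

print(f"Raw capacity: {raw_tb:.2f} TB (~{raw_tb / 1000:.1f} PB)")
print(f"SC420 enclosures: {enclosures}")
print(f"Implied compression ratio: {implied_ratio:.2f}:1")
print(f"Implied system price: ${implied_system_price:,.0f}")
```

The implied ratio comes out slightly above 2:1, consistent with the "a little better than 2:1" assumption in the text.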

Based on Dell's own competitive analysis and that from Gartner, Vigil says that all-flash arrays from vendors assuming more aggressive 3:1, 4:1, or 5:1 de-dupe and compression ratios on their systems – not necessarily valid for all data types, obviously – cost anywhere from $1.60 to $2.00 per effective GB of capacity after that data reduction. Without being specific about who ranks where, Dell says it is comparing to all-flashies from EMC, Hitachi, Hewlett-Packard, IBM, NetApp, and Pure Storage.

Vigil says that Dell can get the pricing down a little lower per GB on the smaller SC4000 midrange array, which is probably not going to interest large scale enterprises.

The Compellent SC series is not just an all-flash product, of course, and customers can also deploy 7.2K RPM nearline SAS drives as well as regular 10K RPM and 15K RPM SAS drives to store data.

Dell doesn’t report financials any more, but Vigil says the SC series had 90 percent year-on-year growth in Dell’s last fiscal quarter, which ended in July, and this was driven primarily by all-flash and hybrid disk-flash configurations. Of the SC arrays that Dell sells, over 50 percent have flash as a component; of those, about 85 percent are hybrid and 15 percent are all-flash.

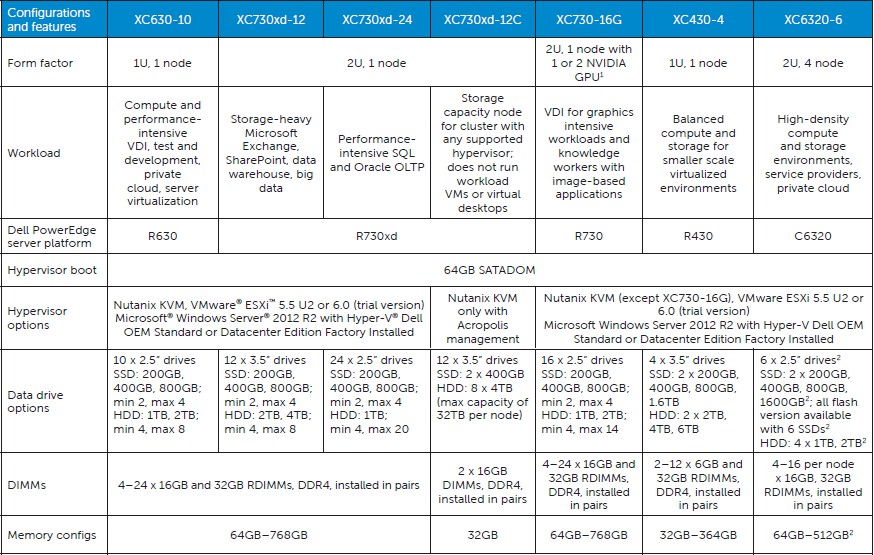

XC Is For Nutanix Server SAN Hybrids

On the hyperconverged side of the storage house, Dell launched its first machines licensed to run Nutanix server-SAN software a year ago at Dell World, and this year the systems are getting a refresh. Vigil says that Dell has shipped thousands of the XC boxes to hundreds of customers, and pointed out that the US Department of Justice and the Federal Bureau of Investigation have just inked a deal for $28 million to install 600 nodes to power a virtual desktop infrastructure (VDI) stack for the 55,000 employees who work for these two US government agencies.

The new XC appliances are based on the latest Haswell Xeon E5 v3 processors from Intel, and come in various form factors and sizes and with either one or two processors per node. And they are not just based on hyperscale machines, but also on various PowerEdge platforms, depending on the compute, memory, and storage capacity that companies want to bring to bear. So what makes these XC machines different from regular PowerEdge and PowerEdge-C machines? They are precisely configured to run Nutanix and for very precise workloads such as VDI, test and development, Exchange or SharePoint, SQL Server or Oracle databases, or a balanced configuration for private clouds.

The most interesting of the XC machines is the XC6320, an all-flash setup that puts four two-socket compute nodes into a 2U chassis along with two dozen 1.6 TB 2.5-inch flash SSDs. This is the highest density XC appliance Dell has shipped, and it will be available in November. The other configurations based on the PowerEdge R630, R730xd, R730, and R430 platforms are shipping now. Dell does not provide pricing on the XC appliances.

Which configuration has the 385,000 IOPS performance referenced in this article?

I hope it’s not the one with 336 SSDs as that’ll come to just 1,146 IOPS/SSD while (assuming actual SSDs are Samsung PM863 3.84TB – and those cost $2,000 i.e. $0.52/GB retail in small qtys) EACH of those 336 (or however many were included) SSDs is rated at 99,000 IOPS reads and 18,000 IOPS writes.

385,000 IOPS performance (mostly reads) is something I’d expect from a 1U server with 10 SSDs (or if RAID6 is required then 2U server with 24 SSD that can do more than that).

Hi Tim,

How did you calculate 385,000 IOPS? My experience with Dell Compellent is worse like nightmare. Dell Compellent runs like dog.

Can you elaborate how did you glorify Compellent?

Regards,

Raihan

I did not calculate it. Dell supplied that number.

I don’t agree that:

https://www.theregister.co.uk/2015/07/20/dell_arrays_drop_costs_with_threedimensional_flash_chippery/