Hewlett-Packard wants a bigger slice of the high performance computing and data analytics markets, and so does chip maker Intel. The two have formed a much tighter alliance than they have had in the past, with the goal of expanding their respective shares of the IT wallet in these areas. That alliance could make HP a much stronger competitor against supercomputer makers Cray and SGI.

The alliance between HP and Intel, announced at the ISC 2015 supercomputing conference in Frankfurt, Germany, comes at a time when the HPC and data analytics worlds are starting to converge. This convergence is one of the founding principles of The Next Platform, and it comes as no surprise to us that HP and Intel want to work more closely together to provide scalable systems that can address both traditional HPC and data analytics jobs. While such systems may include many of the same components – and if you squint, you would not be able to tell the difference between a Hadoop cluster chewing on data and a simulation using the Message Passing Interface (MPI) protocol – the way the components are woven together to reflect the data flowing into and out of the system can be very different.

There is much talk about the democratization of HPC out there in the vendor community and the customer base, to which we would say that these technologies have always been available to those who truly needed massively scalable systems that can chew on lots of data for analysis or simulation or a combination of both. What we believe has happened is more like the commoditization of HPC at precisely the same time that large enterprises – not just academic supercomputing centers, government agencies, oil and gas companies, and a few telcos and hyperscalers – are facing a step function (or two) increase in the data that they want to analyze and correlate. The technology has ridden Moore’s Law, providing them with compute and switching capacity at exactly the same time that Moore’s Law allowed them to collect and keep the data to chew on. That’s not democracy so much as the progress we have come to expect.

Whatever the cause, it is plain that every organization is handling more data and wants to do more and varied kinds of processing against it.

HP’s alliance with Intel mimics the very close relationship that Intel has had with supercomputer maker Cray for the past three years, and this is by design. The pendulum of datacenter computing is swinging away from the general-purpose architectures that have dominated for the past decade and a half and back toward more customized systems that tailor servers, storage arrays, and the switching that lashes them all together to specific systems software for running very particular applications.

The pendulum never swings completely one way or the other, and it is driven as much by economics as by technology. But the need for custom systems is without a doubt fueling Intel’s increasingly diverse portfolio of Atom, Xeon, and soon Xeon Phi processors, which include not only commercial chips that any OEM customer can buy for a server, storage array, or switch but also the invisible custom parts that Intel has been making for hyperscalers and certain OEM customers. Throw in a mix of networking options – Ethernet, InfiniBand, and soon Omni-Path – and alternative processor architectures, including Nvidia Tesla GPU coprocessors, ARM server chips from a half dozen makers eyeing the same opportunities, and FPGAs from Altera and Xilinx. Then add the breadth of main memory, non-volatile memory, various disk and tape storage hardware, and a slew of middleware for creating databases and datastores, for implementing block, file, or object stores, or for myriad application frameworks.

The component matrix is quite large, and it is getting harder for enterprises, HPC centers, and cloud service providers to figure out precisely what hardware and systems software they need for their complex workloads.

This is where HP wants to step up its game, explains Bill Mannel, who left supercomputer maker SGI last fall to become vice president and general manager of a combined HPC and Big Data group within the HP server organization. One of the biggest issues facing commercial HPC customers, who tend to use third-party applications, is that processors are not getting faster, so they have to add cores to get more performance and cannot reduce their software licensing costs. This is great for the ISVs who create the applications, since they tend to price their wares by core. But an even larger issue facing traditional HPC centers and enterprises alike is that traditional computing architectures are running out of gas.

“So people are willing to explore other things,” says Mannel, who remembers building systems with FPGAs more than a decade ago and was convinced then that they were too difficult to program and would never come back. With the caveats that a software ecosystem has to develop for ARM processors, that the chips have to get into the field, and that they have to provide a real advantage over X86 alternatives, Mannel believes that ARM processors will probably find a place in HP products for HPC and data analytics.

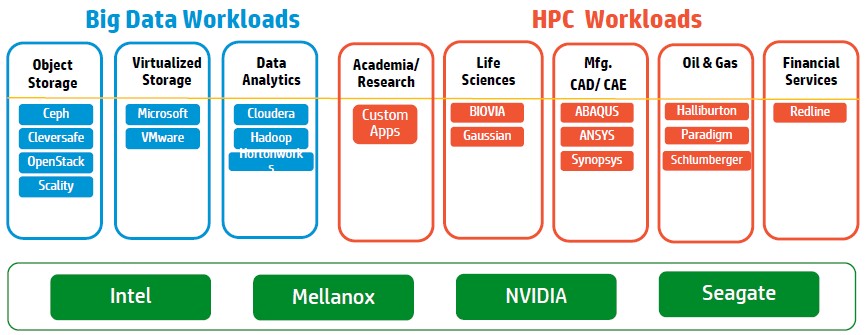

But for now, the big partnership is between HP and Intel when it comes to HPC and analytics. Here is how the bits stack up so far, conceptually:

By taking a market basket approach to Intel’s server, storage, and switching technologies, Cray and HP are no doubt getting price breaks on the technology that Intel is providing. The fact that they will be creating big compute solutions based largely on Intel technology will not preclude them, or any other vendor that adopts Intel’s Scalable Systems Framework, from adopting other technologies – such as ARM processors, Mellanox switches and adapters, or third-party storage and file systems. (We detailed this framework back in April.)

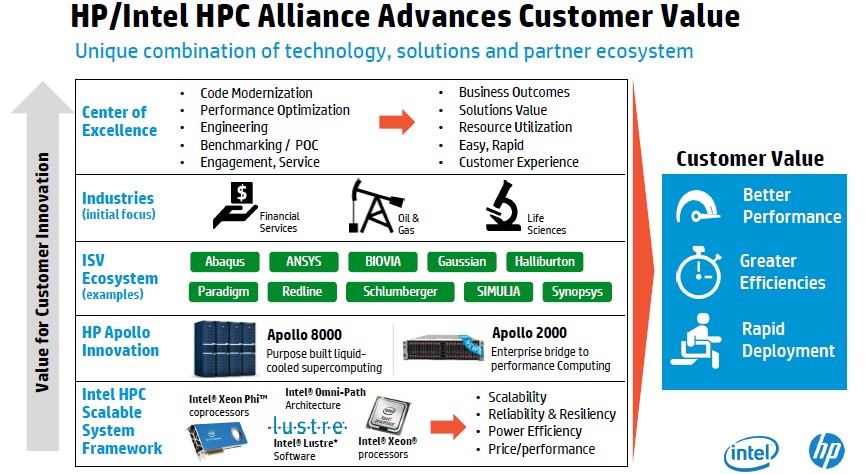

HP has set up two centers of excellence for HPC and data analytics – one in Grenoble, France, and the other in Houston, Texas – that will be staffed by engineering teams from both companies to build common roadmaps and systems optimized for specific workloads and customers. As part of the deal, HP will also be redistributing Intel’s implementation of the Lustre parallel file system.

“We are providing that base level of functionality as well as some expertise from the center of excellence, but there is a whole lot of work being done by HP to bring these solutions to life,” Charles Wuischpard, vice president and general manager of the Workstations and High Performance Computing division in Intel’s Data Center Group, tells The Next Platform in describing the HP-Intel alliance. Intel is the foundation and HP provides other elements on top of that, tuning the iron and systems software to run particular applications. In a sense, HP becomes an integrator of Intel technologies and an interface to the ISV community and to those customers who create their own code. “I think you will see more of this from the other partners over time,” adds Wuischpard, who reminded us that in April, Cray announced that its future “Shasta” systems will be based on the technologies in the Scalable Systems Framework, too.

So what makes the current alliance between HP and Intel different?

“What is different is that the level of engagement is significantly higher,” explains Mannel. “We are really looking for ways to create differentiated product. Before, we understood what Intel was doing, and we took it into HP and built what we felt like the customers wanted. Now we are much more engaged in a feedback loop. We show Intel what we have in mind, get its feedback, then get customers in that conversation, and do some benchmarking so we understand the opportunities. You could somewhat say that we accepted the Intel technology in the past and integrated it the way we thought it should be, but now we are much more engaged in a collaborative process in building new products.”

In the case of Cray, it was the continuing delays with the AMD Opteron processors on which it depended, and then Intel’s acquisition of Cray’s “Gemini” and “Aries” interconnect business, that got the two collaborating much more closely on supercomputer designs. Now they collaborate very tightly on interconnect, processing, and storage. But Cray is also doing research on ARM-based systems and their potential in HPC, and with Steve Scott, who managed the creation of the SeaStar, Gemini, and Aries interconnects, now back as CTO at Cray after brief stints at Nvidia and Google, there is speculation that Cray is interested in building its own interconnect again, too.

As for the HP-Intel alliance, Wuischpard says that it started out as a conversation between the CEOs at the companies – Meg Whitman and Brian Krzanich – as HP was beginning the process of splitting itself into an enterprise business and a PC and printer business.

“So we did a deeper dive with our counterparts at HP to see if there was a fit,” Wuischpard says. “HP is looking to double down on HPC. HP already does a lot, as you know, and they see a lot of opportunity in traditional HPC and big data. The idea was that if we develop all-Intel solutions and work closely with HP using the Scalable Systems Framework, both at the development level and at the go-to-market level, could we be more successful? We certainly think of HP as a big scale partner – the size of their sales organization and their channel reach is greater than ours.”

The companies are aligning their current product roadmaps and are working to develop the next three generations of high-end systems through 2020, according to Wuischpard. Both are kicking in R&D money to drive this common roadmap, which will provide differentiation on top of the Intel hardware.

The deal is non-exclusive for both parties, meaning HP can forge similar alliances with other component suppliers and Intel can do similar deals with HP’s competitors. Dell and Lenovo Group, which bought IBM’s System x server division last October, are the next two obvious candidates for an alliance with Intel, but perhaps others such as Supermicro, Fujitsu, Hitachi, and Bull will follow a similar path. They certainly have done so over the years when creating general-purpose systems from Intel processors, chipsets, and motherboards.

“It is quite attractive to all of our OEM partners, and each one can take a different tack,” says Wuischpard of the Scalable Systems Framework. “One of the critiques of our strategy has been whether we are taking up all of the territory and leaving nothing for anyone else. But as you can see from the pictures, there is a lot of room for differentiation on top of this framework. So we do look at this as being much more than us and HP.”

HP is interested in growing its HPC and affiliated businesses, plain and simple, and in filling what it sees as a vacuum created by IBM selling off its X86 server business and discontinuing development on BlueGene/Q and big SMP Power servers.

“For us, what drove the conversation was that HP is a heavily enterprise-driven company, and HPC was almost something that we did as a hobby,” explains Mannel. “Recently we decided to build a business unit dedicated to HPC and big data, and HP, with its enterprise chops, has an opportunity to take all of these HPC technologies and push it down into a broader channel.”

Depending on who you ask, the total addressable market is about a $9 billion to $10 billion business, and HP has somewhere north of a third of the market already. “Given the Lenovo-IBM situation – before, it used to be IBM and HP that were neck-and-neck for HPC sales – the closest competitors to us have less than half our revenues right now,” he says. “We feel like there is room to grow. We have focused on the bottom 250 of the Top 500, a lot of commercial accounts and not the heavy supercomputing centers, but as a part of my new business unit, we are going to attack that Top 500 list more broadly with optimized platforms.”

“HP has about a third of the systems on the biannual Top 500 supercomputer rankings, but among the Top 100 machines on the list, HP’s share is usually only around 8 percent or 9 percent,” according to Mannel. “That is a measure of the opportunity that we are looking at as we go forward.”

Ultimately, what the alliance between HP and Intel means is that Cray and SGI will see more competition from HP at the high end (and IBM to a certain extent as it pushes hybrid Power-GPU and Power-FPGA machines) and HP will try to take a bite out of the IBM cluster base in the middle and low end of the supercomputer market.

This alliance approach that Intel now has with both Cray and HP also allows Intel to be the prime contractor on big deals like the Aurora supercomputer being built for Argonne National Laboratory, which has Cray as the system integrator and Intel as the prime contractor.
