When we broke the news about the upcoming Intel and Cray contract for the Aurora supercomputer coming to Argonne National Laboratory in 2018, we talked in depth about the unique Knights Hill based architecture of the future machine and referenced a new paradigm for high-end HPC systems that Intel refers to as the HPC scalable system framework.

At the time, it was hard to grasp how the new memory, interconnect, and switching approaches fit into this overall framework. But during a conversation with Alan Gara, Intel Fellow and Chief Exascale Architect for Intel’s Technical Computing Group (not to mention one of the key architects of three of the previous BlueGene supercomputers from IBM), The Next Platform dug into more detail about how the HPC scalable system framework could allow for far more configurability and flexibility than contemporary supercomputers do, particularly across the new levels of memory, in the switching fabric, in the I/O and storage subsystems, and within the interconnect. In short, it is an effort to embed further intelligence at both the hardware and software levels so big supercomputing sites can tune for optimal performance depending on how they want to use the machine (that is, for compute-intensive versus data-intensive workloads, which emphasize different components).

When it comes to a large system like Aurora, Gara says that “There are many fabrics that run around in these machines to push performance (sometimes they are isolated for repeatability and reliability), but this is central.” On the software front, Intel has created a layer that serves as a sort of reference design for what the fabric management software should look like. Intel has also pushed some intelligence into the switches for these next-generation supercomputers. The switches carry quite a bit of complexity (and thus, configurability), which provides more fine-tuned ways to handle prioritization among different types of packets, congestion, and other aspects of the fabric that might change depending on how the system is being used.

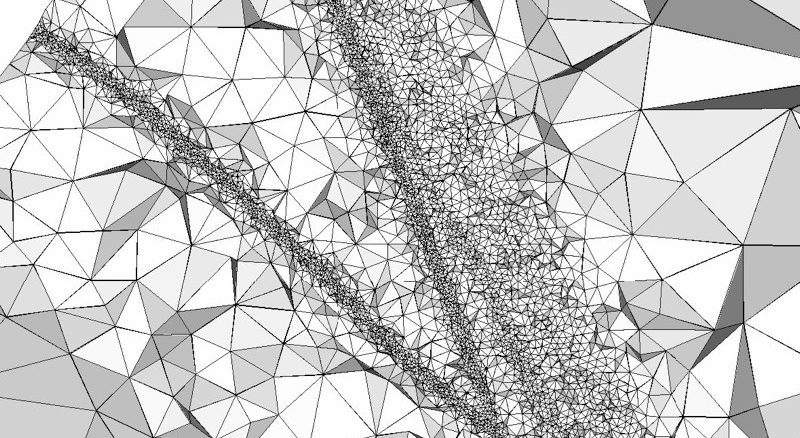

As we noted during the announcement of the new Aurora system, the machine will sport an Aries-like Dragonfly network topology, although this is but one of several possible future configurations. At the time, Gara described the HPC scalable system framework as a flexible approach enabled by a high-radix switching framework that allows for multiple topologies. “The radix of the switch is related to the number of hops or distance of the fabric, which has an impact on both bandwidth and latency,” he noted then. “It turns out to be a critical parameter and is one of the dimensions where we’ve worked hard. It’s not just the radix, it’s also how you do the routing and we’ve done a lot of that work with Cray in terms of minimizing congestion, [which] is a result of both hardware and software.” The system will be tied together with the forthcoming OmniPath-2 fabric, which will boast an aggregate bandwidth of around 2.5 petabytes per second at the system level, with a bisection bandwidth of around half a petabyte per second. But the real story here isn’t in the numbers; it’s in how this can be adapted, flexed, and bent for other systems based on the machine’s purpose.
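To make Gara’s point about radix and hop count concrete, here is a minimal sketch using textbook topology formulas rather than anything Intel or Cray has disclosed about Aurora. It assumes the standard folded-Clos (fat tree) capacity of 2·(k/2)^L endpoints for L levels of k-port switches, and the balanced Dragonfly sizing from the academic literature (a = 2p = 2h, with fully connected groups). The function names and the 50,000-endpoint target are hypothetical, chosen only for illustration.

```python
def fat_tree_levels(radix: int, endpoints: int) -> int:
    """Fewest fat-tree levels of `radix`-port switches that reach `endpoints`.

    Uses the textbook capacity 2 * (radix / 2) ** levels.
    """
    if radix < 4 or radix % 2:
        raise ValueError("radix must be an even number >= 4")
    levels = 1
    while 2 * (radix // 2) ** levels < endpoints:
        levels += 1
    return levels


def fat_tree_worst_case_switch_hops(levels: int) -> int:
    """Switches traversed going up to the top level and back down."""
    return 2 * levels - 1


def balanced_dragonfly(p: int) -> tuple[int, int, int]:
    """Return (router radix, groups, endpoints) for a balanced Dragonfly.

    p = endpoints per router, a = 2p routers per group,
    h = p global links per router (textbook balance, not Aurora's layout).
    """
    a, h = 2 * p, p
    radix = p + (a - 1) + h      # endpoint + local + global ports
    groups = a * h + 1           # one global link between each pair of groups
    return radix, groups, a * p * groups


if __name__ == "__main__":
    target = 50_000  # illustrative endpoint count, an assumption
    for radix in (16, 32, 64):
        levels = fat_tree_levels(radix, target)
        hops = fat_tree_worst_case_switch_hops(levels)
        print(f"fat tree, radix {radix}: {levels} levels, {hops} switch hops")
    print("balanced dragonfly (p=16):", balanced_dragonfly(16))
```

In this model, raising the radix shrinks the number of levels a fat tree needs, and with it the worst-case hop count, while a balanced Dragonfly keeps minimal routes to at most two local hops and one global hop between routers at any size the radix can support.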

The initial goal at the outset of creating this machine, Gara told us this week, was to create a “plug and play sort of environment for high-end machines” in which the fabric makes it possible to put new layers in the I/O and storage system as well as the compute system. The emphasis is on configurability, so it is possible to tune for high I/O or compute requirements based on application needs, and the HPC scalable system framework is aimed at providing both the hardware and software layers to do that.

There’s little doubt we will have to watch how all of this plays out on the Aurora system, but the real question is where Intel’s HPC scalable system framework will squeeze the bottleneck. All large systems are in a constant game of whack-a-mole when it comes to moving the strain around the system to achieve balance. The bottleneck buck is always being pushed around. So with so much memory bandwidth, such a high-speed interconnect, and one hell of a lot of cores, which mole is the one Intel will constantly be chasing around with its hammer? Is the memory bandwidth going to saturate the cores and network, will the network not be able to catch up, or are the cores going to be too slow?

Gara agrees that their hammers are still poised, but they’ve sought a better performance balance across the machine. The memory bandwidth limitations of previous machines are largely removed on Aurora, with bandwidth rates that are going to be 10x to 20x what we’ve seen on those machines. What’s left on the bottleneck front is more a matter of capacity and of managing job partitioning to actually take advantage of it. The same goes for the fabric, which has been the focus of big investment at Intel in preparation for these new bandwidth capabilities. The second bottleneck is going to fall outside of Intel and Cray lines for Aurora and similar systems, and right into the laps of application developers, who are going to have to thread their codes far more efficiently. A good place to start with that might be rewriting them altogether in some cases, as we’re starting to hear, and for all kinds of reasons (to take advantage of the new storage and I/O systems, among others).
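One common way to reason about this kind of bottleneck shifting is the roofline model, which we borrow here purely as an illustration; Gara did not reference it. The sketch below assumes a node’s attainable performance is the lesser of its peak compute rate and its memory bandwidth multiplied by the kernel’s arithmetic intensity (flops per byte moved). All of the numbers are hypothetical and are not Aurora or Knights Hill specifications.

```python
def attainable_flops(peak_flops: float, mem_bandwidth: float,
                     arithmetic_intensity: float) -> float:
    """Roofline bound: min(compute roof, bandwidth * flops-per-byte)."""
    return min(peak_flops, mem_bandwidth * arithmetic_intensity)


def limiter(peak_flops: float, mem_bandwidth: float,
            arithmetic_intensity: float) -> str:
    """Name the resource that caps performance for this kernel."""
    if mem_bandwidth * arithmetic_intensity < peak_flops:
        return "memory"
    return "compute"


if __name__ == "__main__":
    peak = 3e12          # hypothetical 3 teraflops per node
    old_bw = 1e11        # hypothetical 100 GB/s of memory bandwidth
    new_bw = 10 * old_bw # the low end of the 10x to 20x range in the text
    intensity = 4.0      # hypothetical kernel: 4 flops per byte

    for label, bw in (("old", old_bw), ("new", new_bw)):
        flops = attainable_flops(peak, bw, intensity)
        print(label, limiter(peak, bw, intensity), f"{flops:.2e} flops")
```

With these made-up figures, the same kernel is memory-bound on the older node and compute-bound once bandwidth rises tenfold, which is the whack-a-mole effect in miniature: relieving one constraint exposes the next.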

When the Aurora system and its wide range of peak floating point capability (between 180 and 460 petaflops) was announced, along with the number of nodes, some speculated that the Knights Hill cores must not be much of a jump from what Knights Landing offers. The performance, then, would have to be coming from somewhere else, which Gara said is indeed the case.

“The cores are formidable in this machine, but no one is claiming dramatic improvements in core speeds. The performance is coming from increased concurrency, not higher frequencies.”

This leads us back to the point we made a few weeks ago: the Knights Hill-based machine stands on the HPC scalable system framework far more than it does on the power of its compute, making this far more of a memory bandwidth machine than a high-test floating point monster. And there will likely be no complaints about this, especially since a dense-packed system with hot cores would be even more of a nightmare on the power and cooling fronts. It would simply be too expensive to run.

Since one can assume that applications will continue to be partitioned into ranks, with each job split into a number of these ranks and each rank using a number of cores that depends on how well it is threaded, it’s easy to see the impetus for the push on developers to bring their codes up to speed. From the Intel HPC scalable system framework point of view, the scaling of ranks across the machine is a fabric issue rather than one related to how powerful the cores are, especially since the communication is happening with ranks on the other side of the machine.

As Gara reminds us, “The performance is coming from increased concurrency, not through higher frequencies. As a result you need to exploit those higher levels of concurrency: identify them in your code, have an architecture and programming model that lets you exploit that by taking the same amount of work, having the many cores work on the same amount of work, but getting much more performance. Really, it’s about that bandwidth; it’s the real defining factor for both performance and scalability.”
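What Gara describes, many cores attacking a fixed amount of work, is usually called strong scaling, and Amdahl’s law is the standard back-of-the-envelope model for it. The sketch below is our illustration, not something Gara cited: it assumes a code with a fixed serial fraction and perfectly parallel remainder, and the serial fractions and core counts are hypothetical.

```python
def amdahl_speedup(serial_fraction: float, cores: int) -> float:
    """Speedup on `cores` cores for a fixed problem size (Amdahl's law)."""
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    parallel_fraction = 1.0 - serial_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical codes: 5% serial versus 0.5% serial after better threading.
    for serial in (0.05, 0.005):
        for cores in (16, 64, 256):
            speedup = amdahl_speedup(serial, cores)
            print(f"serial={serial:.3f} cores={cores:4d} speedup={speedup:6.1f}")
```

Under this model a code that is 5 percent serial can never run more than 20 times faster no matter how many cores it is given, which is why the burden of exposing concurrency lands on application developers as core counts climb.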

As Doug Black reported for The Next Platform earlier this week after a chat with Gara about the Aurora machine, “Aurora is not a final destination for Intel. It’s a very important step in a journey that’s much longer for us, an exciting beginning of a new future. It’s a new turning point, the first introduction of some of the new concepts that we envision for the incredible insights this new scalable system design is going to enable.”
