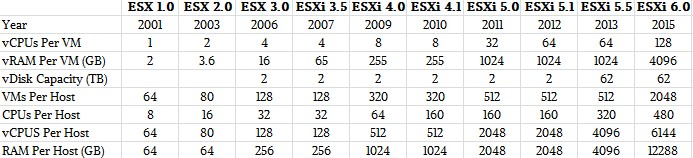

After a decade of expanding the various capacities of its server virtualization hypervisor, ESXi, and the virtual machines that run on it, you might think that VMware was pretty much done with boosting these two key components of its virtualization wares. But, as it turns out, customers keep hitting the upper limits, and they are pushing VMware’s coders to scale the software.

With over 500,000 customers and more than 45 million virtual machines in the datacenters of the world running on top of its software, VMware has to meet a very diverse set of customer needs. For a long time, the ESXi hypervisor and its virtual machines could host many relatively light workloads, but the heftiest databases and applications at large enterprises were too much to cram into a virtual machine. (The ESXi hypervisor is the key component of the vSphere server virtualization stack, which has various features of the hypervisor turned on or off depending on which version customers license.)

Over time, VMware has stretched ESXi over larger and larger host systems, allowing it to access more threads, cores, memory, network interfaces, and SCSI and SATA adapters and presenting larger virtual memory and disk capacity to virtual machines that in turn run operating systems and applications. But the workloads keep getting fatter, and that means VMware has to keep expanding the capacities.

In some cases, the capacities of the hypervisor and virtual machines are boosted to stay well ahead of customers, and in others VMware is just keeping pace. It all comes down to cases, explains Mark Chuang, senior director of product management for the software defined datacenter group at VMware.

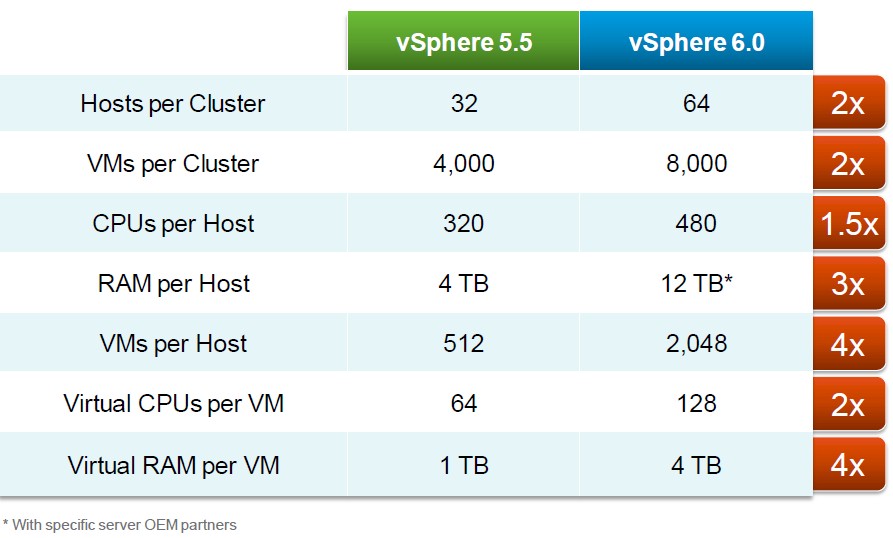

So, for instance, with ESXi 6.0, VMware is moving from 32 to 64 hosts per cluster. Most customers are not yet bumping up against the 32-host limit of ESXi 5.5, but some are and, in particular, customers using the Virtual SAN (vSAN) converged storage are asking for more scalability here.

“With other characteristics, such as 480 CPUs per host or 12 TB of physical RAM per host, we want to be two or three steps in front of customers and we really work out all of the kinks so that once they get there, it has had plenty of bake time,” explains Chuang. “As I have been talking to customers over the past 24 months, I definitely see that the curve is accelerating. So two years ago, I definitely saw that the sweet spot for a host was 128 GB of memory, and now, it is not uncommon for customers to tell me 384 GB or 512 GB or sometimes 1 TB.”

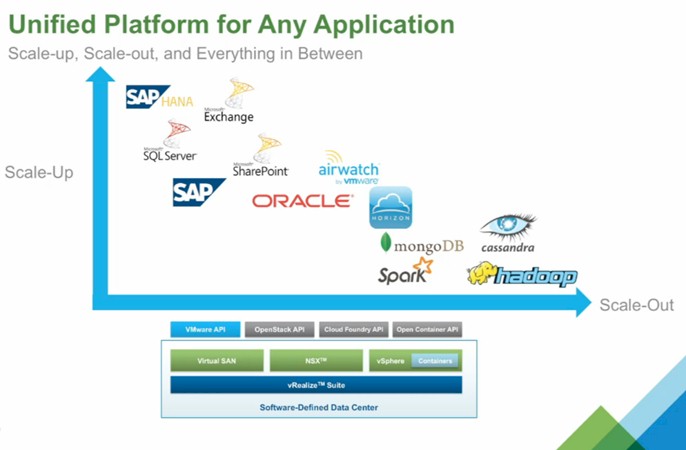

As for virtual machines, there is one big driver that is pushing up the virtual CPU and virtual memory capacities: in-memory databases. “If I took in-memory applications like SAP HANA out of the equation and we were looking at more second generation applications, then the sweet spot would be from 4 to maybe 16 virtual CPUs and from 32 GB to maybe 512 GB.”

VMware will not release the official configuration maximums document for the new vSphere 6.0 stack and its ESXi 6.0 hypervisor until the software is released sometime before the end of March, but the company did give out some of the key feeds and speeds for the capacities of the new hypervisor and its virtual machines:

Because of applications like SAP HANA, the number of virtual CPUs per VM is being doubled to 128 with ESXi 6.0 and the virtual memory is quadrupling to 4 TB compared to ESXi 5.5. That gives SAP’s in-memory database lots more headroom. With ESXi 5.5, SAP HANA was certified to run in a virtual machine that could scale up to 64 virtual CPUs and 1 TB of virtual RAM, the largest VM available; with the new ESXi 6.0 hypervisor, a HANA VM will be certified to scale up to 128 virtual CPUs and 4 TB of virtual memory, also the largest size of a VM with the latest release.
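To make the jump in per-VM limits concrete, here is a minimal Python sketch. It encodes only the two sets of per-VM maximums quoted above and checks whether a requested VM size fits under each release. The names `VM_MAXIMUMS` and `fits` are hypothetical and are not part of any VMware tool or API, and the figures are not a substitute for the official configuration maximums document.

```python
# Per-VM maximums as quoted in this article; not an official VMware data source.
VM_MAXIMUMS = {
    "ESXi 5.5": {"vcpus": 64, "memory_tb": 1},
    "ESXi 6.0": {"vcpus": 128, "memory_tb": 4},
}


def fits(release: str, vcpus: int, memory_tb: float) -> bool:
    """Return True if a VM of the requested size is within the release's per-VM limits."""
    limits = VM_MAXIMUMS[release]
    return vcpus <= limits["vcpus"] and memory_tb <= limits["memory_tb"]


if __name__ == "__main__":
    # A hypothetical 96 vCPU, 2 TB in-memory database VM.
    for release in VM_MAXIMUMS:
        print(release, fits(release, vcpus=96, memory_tb=2))
```

In this illustration, a 96 vCPU, 2 TB VM is too large for ESXi 5.5 but fits within the ESXi 6.0 limits.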

As you can see from the table above, the ESX hypervisor has come a long way in the past fourteen years in terms of its capacities. (ESX Server was the original software, which was then replaced by ESXi, the embedded and skinnier version of VMware’s bare metal hypervisor. We have ignored the type 2 or hosted version of VMware’s server hypervisor, which was called GSX Server and which predated ESX Server.) Generally speaking, VMware has kept a balance between the absolute maximums in terms of threads and memory on X86 servers and the largest practical machine that most enterprises will buy to run workloads in their clusters. There are always larger NUMA machines on the market, but generally speaking such machines have not been used as hosts for virtualized workloads. Such big iron tends to be used as database engines, and enterprise shops tend to have mirrored configurations with replication between the machines for high availability for their most critical transaction processing jobs.

That said, the advance in terms of core count, memory capacity, and network bandwidth in standard X86 servers with two, four, or eight sockets has meant that companies that might have otherwise needed a pair of large NUMA machines can get by with a much less hefty – and much less expensive – smaller NUMA machine to support their workloads. And with ESXi 6.0, VMware will have no trouble spanning such systems with its hypervisor and offering up hefty VM slices on them for the big jobs.

While VMware’s leading edge customers are pushing the thread and memory limits on VMs, it is important to understand that most workloads running in the enterprise are not so dense. VMware did a poll of customers who collectively had over 300,000 VMs running atop ESXi 5.5, and 93 percent of those VMs had four virtual CPUs or fewer to run their workloads.

This was an important statistic to have on hand as VMware was reworking the fault tolerant clustering feature for the ESXi hypervisor. The original FT feature used a record and play methodology, formally called vLockstep by VMware, that provided the synchronization of bits between two VMs running on two distinct physical servers for fault tolerance. This record and play method of doing FT had high overhead on both the physical processors and networks linking the machines, explains Chuang, and it did not scale well beyond a single virtual CPU and thus FT could only be provided for relatively small workloads. The new FT implementation uses a fast checkpointing technique to keep the two VMs synchronized and this method has a lot less overhead.

There are no technical limitations to the scalability of this new FT method, says Chuang, but VMware is being conservative and only allowing the version that comes out with ESXi 6.0 to scale to four virtual CPUs, even though it can and will almost certainly scale to 8 or even 16 virtual CPUs as VMware gets it into the field and customers push the limits again. If the scale-up of the Virtual SAN software, which has gone from 8 to 16 to 32 and now to 64 nodes in a single instance in a little less than a year, is any measure, then the FT feature should see its scalability ramp quite quickly. The assumption is that for key workloads, customers do want fault tolerance, just like they want scalable virtual storage area networks.

The key message that VMware is trying to convey with the vSphere 6 and related component announcements is that its server virtualization software is suitable for any application, whether it is a scale out application running atop a virtualized Hadoop cluster or it is a scale up application running on an SAP HANA in-memory database.

The VMware installed base is dominated by Windows-based applications that are used to run enterprises, but to be a platform means tuning up the VMware stack so it can wrap around new kinds of workloads. VMware has to create virtualization and management software that runs the old stuff well, but that can embrace and control the new stuff, too. To that end, VMware has commercialized its “Project Fargo” effort to provide instant cloning of images inside of virtual memory, and at the product launch for vSphere 6.0 Ben Fathi, the company’s CTO, said that this new capability was tested by setting up a virtualized Hadoop cluster and was able to fire up 64 virtual machines per ESXi 6.0 host server in under six seconds. Fathi was also touting the fact that on a 30 TB TeraSort benchmark test running on top of Hadoop, a 32-node cluster with four virtual machines per physical node was able to run the test 12 percent faster than the same machine in bare metal mode because the virtualized instances allowed the cluster to squeeze more work from the machinery. (The obvious question there is whether or not the extra cost of the VMware software justifies the extra performance gained, and the answer is probably no until you start quantifying the operational benefits that come from virtualization and entertain the possibility of running Hadoop alongside other and probably related workloads on the same cluster.)

For virtual desktop infrastructure workloads, the instant cloning feature allowed for a cluster to start 500 virtual desktops 13X faster than was possible using last year’s ESXi 5.5. So the usefulness of the Project Fargo instant cloning is not limited to scale out workloads like Hadoop.

Another platform play for VMware is the inclusion of the OpenStack cloud controller as an option for the vSphere stack. Rather than fight OpenStack directly in the marketplace, VMware decided last year to embrace it and to extend it. The VMware Integrated OpenStack, as the option for vSphere is called, is based on the “Icehouse” release of OpenStack, which is deployed as a virtual appliance inside of ESXi VMs with optimized drivers for the Nova compute, Neutron network, and various storage drivers in OpenStack.

The target customers, says Chuang, are those that already have large installations of vSphere virtualization for their production workloads, but that have developers who want to use OpenStack for their test and development environments or that want a subset of their applications to run in a manner that is compatible with OpenStack. The OpenStack distribution hooks into various VMware cloud management tools, including vRealize Operations, Log Insight, vRealize Automation, and vRealize Business.

The OpenStack appliance software is free to any customer who has vSphere Enterprise Plus or higher and if they want tech support from VMware for this OpenStack implementation, the fee is $200 per socket per year. At the vSphere 6.0 launch, VMware CEO Pat Gelsinger said that over half of the VMware customer base would have access to VMware Integrated OpenStack, which tells us how many shops are now running vSphere Enterprise Plus or higher. (That would be in excess of 250,000 companies.) Gelsinger cited one early customer, who was unnamed, that had 300 virtual machines running in an OpenStack development and test cloud that could now be absorbed into the vSphere private cloud for production applications, which had over 30,000 VMs. This is a story that VMware hopes to tell again and again, rather than the other option, which was hearing about VMware customers moving to free-standing OpenStack clouds.
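As a back-of-the-envelope illustration of the support pricing, the Python sketch below multiplies out the $200 per socket per year fee. It assumes, without confirmation from VMware, that every socket in every host must be covered; the function name `annual_support_cost` and the example cluster size are hypothetical.

```python
SUPPORT_FEE_PER_SOCKET_PER_YEAR = 200  # US dollars, as quoted in this article


def annual_support_cost(hosts: int, sockets_per_host: int) -> int:
    """Estimated annual support cost in US dollars.

    Assumes every socket in every host is covered, which is an
    assumption of this sketch rather than a stated licensing rule.
    """
    if hosts < 0 or sockets_per_host < 0:
        raise ValueError("hosts and sockets_per_host must be non-negative")
    return hosts * sockets_per_host * SUPPORT_FEE_PER_SOCKET_PER_YEAR


if __name__ == "__main__":
    # A hypothetical 64-host cluster of two-socket servers.
    print(annual_support_cost(hosts=64, sockets_per_host=2))  # prints 25600
```

Under those assumptions, a full 64-host cluster of two-socket machines would run $25,600 per year for support.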

One feature in the vSphere 6.0 stack that is worth mentioning is long distance vMotion live migration of virtual machines. This is something that will give VMware customers the kind of remote failover capabilities for virtualized infrastructure that the Googles of the world have created for themselves.

With regular vMotion live migration, a running virtual machine can flit from one physical machine and its hypervisor to another one, which is handy for workload management and disaster recovery. Up until now, that vMotion live migration was limited to a cluster operated by a single instance of the vCenter Server management console. VMware wanted to extend vMotion beyond a single vCenter Server instance, which it has done with vSphere 6.0, allowing for VMs to jump between clusters. But then the company went one step further and tweaked the vMotion code so that it could provide failover between geographically dispersed datacenters. So long as the host and target machines are on the order of 100 milliseconds apart on the round trip time (RTT in the lingo), then the long distance vMotion will work. For most network links, that will be something on the order of a trans-continental distance.
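The round trip budget can be related to distance with a rough sketch. The Python below assumes that signals travel through optical fiber at about 200 km per millisecond (roughly two-thirds the speed of light in a vacuum) and treats the 100 millisecond figure as a hard cutoff, even though it is described above only as "on the order of" that value. The function names are hypothetical, and real links add routing, switching, and queuing delay on top of this floor.

```python
FIBER_SPEED_KM_PER_MS = 200.0  # approximate propagation speed in optical fiber
MAX_RTT_MS = 100.0  # figure from this article, treated here as a hard cutoff


def min_rtt_ms(fiber_path_km: float) -> float:
    """Lower bound on round trip time for a given one-way fiber path length."""
    return 2 * fiber_path_km / FIBER_SPEED_KM_PER_MS


def within_rtt_budget(measured_rtt_ms: float) -> bool:
    """Return True if a measured round trip time is inside the assumed budget."""
    return measured_rtt_ms <= MAX_RTT_MS


if __name__ == "__main__":
    # A hypothetical 4,000 km fiber path between two datacenters.
    floor_ms = min_rtt_ms(4000)
    print(floor_ms, within_rtt_budget(floor_ms))
```

By this estimate the propagation floor alone allows a fiber path of up to about 10,000 km, and because real routes are indirect and add equipment delay, the practical reach is shorter, which is consistent with a trans-continental distance.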

vSphere 6.0 has been in use on VMware’s own test and development cloud since March 2014, when it was still in pre-alpha stage, and it was tested in alpha and beta stage by that cloud as well, according to Fathi. That cloud spins up and takes down as many as 30,000 VMs a day, and this “dogfooding” of the software was a key aspect of the development and testing cycle for vSphere 6.0. With over 650 new features and enhancements and the largest and deepest beta testing phase in the company’s history, vSphere 6.0 is being billed by Gelsinger as the largest and most important release in VMware’s history. VMware has not announced pricing for vSphere 6.0 as yet, but will when the product becomes generally available before the end of March.
