Virtualization has been an engine of efficiency in the IT industry over the past two decades, decoupling workloads from the underlying hardware and thus allowing multiple workloads to be consolidated into a single physical system as well as moved around relatively easily with live migration of virtual machines.

It is difficult to imagine how today’s hyperscale datacenters would have developed without server virtualization hypervisors and their encore, and sometimes add-on, software containers.

One aspect of virtualization that is often overlooked is I/O, or how to deal with hardware devices in a virtualized system. If there are multiple virtual machines running on a server, how do you let each one access the network, for example, when there may be only one physical network interface?

In the early days of X86 hypervisors and virtual machines, this was done via software emulation, presenting each virtual machine with its own virtual NIC while various software layers handled all the complexity. This approach proved to be a bottleneck to I/O performance, and it also stole CPU cycles from the host processor, which cut into the number of virtual machines the host could run.

One workaround to this overhead was Single Root I/O Virtualization, or SR-IOV, developed by Intel and taken over by the PCI-SIG, which defined extensions to the PCI-Express peripheral specification that enabled a PCI-Express hardware device to appear to be multiple separate PCI-Express devices. Each physical device can support up to 256 virtual functions, which means that in theory a network interface card (or NIC) on the PCI-Express bus could comprise up to 256 virtual NICs. In practice, each virtual function requires actual hardware resources in the silicon, and so the number of virtual functions implemented in production devices tends to be far fewer than this.

A more up-to-date approach, also developed by Intel, is Scalable I/O Virtualization. An initial technical specification for this was published back in 2018, but now Intel and Microsoft have contributed the finished SIOV specifications to the Open Compute Project, opening it up to the vendors that provide the equipment for the hyperscale datacenter operators.

This is significant because, as The Next Platform once calculated, OCP-compliant kit possibly represented about 25 percent or so of the 11.7 million server shipments in 2019, but also, where the hyperscalers go, the rest of the datacenter market tends to follow later.

As its name suggests, Scalable I/O Virtualization (SIOV) was designed to operate at greater scale than SR-IOV, to meet the demands of newly popular compute models such as software containers and serverless compute (also known as functions-as-a-service) which often call for hundreds or thousands of instances running on the same system, rather than a few dozen virtual machines.

According to Ronak Singhal, senior fellow and chief architect for the Xeon roadmap and technology at Intel, SIOV accomplishes this by moving much of the device logic (as defined in the SR-IOV specification) to the virtualization stack (on the host system). “Also, SIOV delivers greater composability of virtual device instances by software while preserving the performance benefits of direct access to device hardware,” Singhal said.

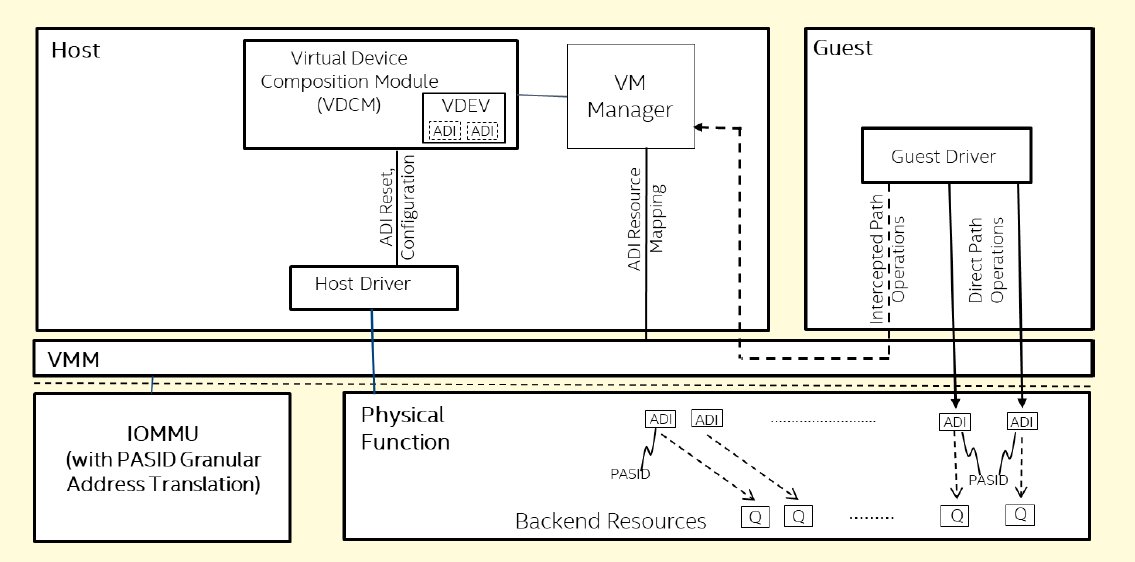

A look at the specifications shows that SIOV accomplishes this by separating out management and configuration functions into a Virtual Device Composition Module (VDCM) for greater flexibility. Accesses between a virtual machine and a hardware device are defined as either “direct path” or “intercepted path,” with the direct-path operations getting mapped directly to the underlying device hardware for higher performance. The intercepted-path operations are those handled by the VDCM.

Intercepted-path accesses will typically include management functions such as initialization, control, configuration, quality of service (QoS) handling, and error processing, whereas direct-path accesses will typically comprise data path operations.
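The split between the two paths can be pictured with a small Python sketch. This is only an illustration of the categories described above, not anything taken from the specification: the operation labels, the `Path` enum, and the `classify_access` function are all hypothetical names invented for this example.

```python
from enum import Enum


class Path(Enum):
    DIRECT = "direct"            # mapped straight to the device hardware
    INTERCEPTED = "intercepted"  # handled in software by the VDCM


# Hypothetical operation labels that mirror the categories named in the text.
INTERCEPTED_OPS = {
    "initialization",
    "control",
    "configuration",
    "qos",
    "error_processing",
}
# Assumed examples of data path operations; the article does not list any.
DIRECT_OPS = {"transmit", "receive"}


def classify_access(operation: str) -> Path:
    """Return the path a given (hypothetical) operation label would take."""
    if operation in INTERCEPTED_OPS:
        return Path.INTERCEPTED
    if operation in DIRECT_OPS:
        return Path.DIRECT
    raise ValueError(f"unknown operation: {operation}")
```

The point of the sketch is that the infrequent management operations are the ones that pay the cost of software mediation, while the frequent data path operations do not.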

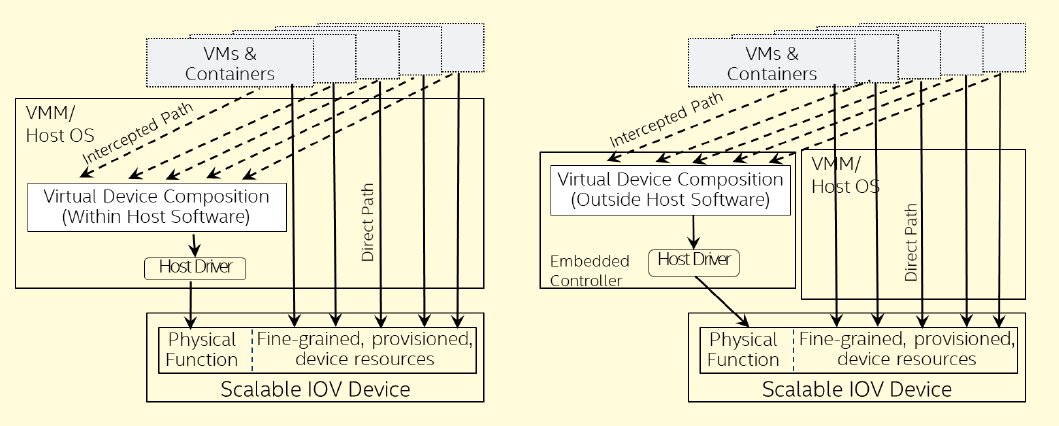

Interestingly, the specifications state that the VDCM function may be implemented either in the host operating system or a virtual machine manager (VMM), or else implemented as part of a discrete embedded controller deployed inside the platform.

In fact, the specifications also state that each operating system or VMM may require the VDCM to be implemented in a specific way, such as being packaged as user-space modules or libraries that are installed as part of the host software driver.

This separation of the VDCM from the hardware devices allows the virtualized functions on each device to be simpler, which enables increased scalability and flexibility with a lower hardware cost and complexity, according to Intel. The result should be near-native I/O performance delivered to each virtual machine or container or microservice that these virtualized functions support.

The specification defines an Assignable Device Interface (ADI) as being the set of resources on the hardware device that are configured and allocated together as a discrete unit to implement a virtualized function, making an ADI the unit of device sharing. Examples of ADIs might include the transmit and receive queues associated with a virtual NIC in the case of a network controller, or command submission and completion queues in the case of a storage controller.

Scalability is also aided by the use of Process Address Space Identifiers (PASIDs) in the SIOV standard. These 20-bit identifiers are used to distinguish different ADIs and to pinpoint the address space targeted by a transaction.
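To put the 20-bit figure in perspective, the arithmetic can be worked through in a few lines of Python. The constant and function names here are made up for illustration, and the size of the identifier space is only an upper bound on addressing: the number of ADIs a real device offers still depends on the resources its silicon provides.

```python
PASID_BITS = 20                 # identifier width stated in the text
PASID_VALUES = 1 << PASID_BITS  # 2**20 = 1,048,576 distinct identifiers
SRIOV_VF_LIMIT = 256            # per-device figure quoted earlier for SR-IOV


def is_valid_pasid(value: int) -> bool:
    """Check that a value fits in the 20-bit PASID range."""
    return 0 <= value < PASID_VALUES


# The identifier space is 4,096 times larger than the 256 virtual functions
# quoted for SR-IOV (1,048,576 / 256 = 4,096).
RATIO = PASID_VALUES // SRIOV_VF_LIMIT
```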

Meanwhile, a VDEV is a virtual device instance that gets exposed to a specific virtual machine, and this gets mapped by the VDCM to a specific ADI inside the physical device when the VDEV is configured.
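The relationship between VDEVs, ADIs, and the VDCM can be modeled as a simple lookup table. The following Python sketch is a toy model under stated assumptions: the `ToyVdcm` class, its method names, and the first-free allocation policy are hypothetical and are not drawn from the SIOV specification or any real driver.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyVdcm:
    """Toy stand-in for a VDCM that binds VDEVs to ADIs (illustrative only)."""

    free_adis: List[int]  # ADI indices the device still has available
    vdev_to_adi: Dict[str, int] = field(default_factory=dict)

    def configure_vdev(self, vdev_name: str) -> int:
        """Map a VDEV to an ADI at configuration time and return the ADI index."""
        if vdev_name in self.vdev_to_adi:
            # Already configured: keep the existing mapping.
            return self.vdev_to_adi[vdev_name]
        if not self.free_adis:
            raise RuntimeError("no free ADIs left on the device")
        adi = self.free_adis.pop(0)  # assumed policy: take the first free ADI
        self.vdev_to_adi[vdev_name] = adi
        return adi
```

For instance, a `ToyVdcm(free_adis=[0, 1])` would hand ADI 0 to the first VDEV configured and ADI 1 to the second, then raise an error for a third, which echoes the point that ADIs are backed by finite device resources.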

SIOV was built with the intention of supporting all current SR-IOV usages and more, and with increased ecosystem support, it has the potential to eventually supersede SR-IOV, according to Intel. For now, SIOV and SR-IOV can co-exist within a server, with some hardware devices using SR-IOV while others make use of SIOV. Having a single hardware device use both specifications is not recommended, but it is technically possible.

According to Intel, SIOV will be supported in its upcoming “Sapphire Rapids” Xeon SPs, which are currently expected in the second half of this year, as well as by Intel’s own Ethernet 800-series network controllers and future PCI-Express and Compute Express Link (CXL) devices and accelerators.

Intel also said that work on upstreaming Linux kernel support is underway with anticipated integration due to come later in 2022.

But support from other vendors will also be essential if SIOV is to get off the ground. We asked Intel how many vendors or devices it was aware of that are either in production or coming soon, but the firm responded only with “we are aware of third parties who are pursuing adoption. As part of the OCP contribution, we encourage vendors who support SIOV to sign-on as SIOV OCP suppliers and publicize their support to the ecosystem.”

However, once SIOV is supported in Intel Xeon processors, it is likely only a matter of time before support diffuses out into the rest of the server hardware ecosystem. If Intel can be believed, this will provide a pathway to enabling high-performance I/O and acceleration for advanced AI, analytics and other demanding virtual workloads that are increasingly common in datacenters.
