Nvidia co-founder and chief executive officer Jensen Huang paced the stage at the company’s GTC Washington DC event dressed in his standard black leather jacket over a black T-shirt, with black pants and black sneakers, but the message he delivered during his keynote was decidedly red, white, and blue.

Like a stone skipping across the water, Huang touched on a range of areas where Nvidia and its partners are leaving their mark, from AI and quantum computing to telecommunications, supercomputers, robotics, and cybersecurity – via a partnership with security firm CrowdStrike – and all in the name of returning the United States to the pole position in the increasingly AI-based IT industry.

The way to do that is to bring technology development and manufacturing back to the country, and one place to start is telecommunications.

Huang said Nvidia is partnering with Nokia to drive AI deeper into wireless networking infrastructure, a move that he said will reduce America’s reliance on foreign technology in an industry that is central to the country’s economic and national security interests.

“Ever since the beginning of wireless, where we defined the technology, we defined a global standard, we exported American technology all around the world so that the world can build on top of American technology and standards,” he said. “It has been a long time since this happened. Wireless technology around the world largely today [is] deployed on foreign technologies. Our fundamental communication fabric, built on foreign technologies. That has to stop. We have an opportunity to do that.”

That will come in a few ways with the help of Nokia, a Finnish company in which Nvidia is investing $1 billion. Nokia will incorporate Nvidia’s commercial-grade AI-RAN products into its own RAN portfolio, paving the way for a range of communications services providers to run their AI-native 5G and 6G networks on Nvidia technology and giving Nvidia a bigger presence in a multi-trillion-dollar global market.

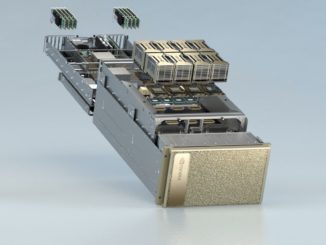

Building on that, Nvidia also unveiled its Aerial RAN Computer Pro (ARC-Pro), an accelerated computing platform for 6G that includes connectivity, computing, and sensing capabilities and gives telcos a software-upgrade path from 5G-Advanced to 6G.

“ARC is built from three fundamental new technologies: the Grace CPU, the Blackwell GPU, and our Mellanox ConnectX networking designed for this application,” Huang said. “All of that makes it possible for us to run this CUDA-X library called Aerial. Aerial is essentially a wireless communication system running on top of CUDA-X. We’re going to create, for the first time, a software-defined programmable computer that’s able to communicate wirelessly and do AI processing at the same time. . . . and Nokia is going to work with us to integrate our technology [and] rewrite their stack.”

Huang said the global telecom market sits at about $3 trillion and includes hundreds of billions of dollars in infrastructure and millions of base stations, adding that “if we could partner, we could build on top of this incredible new technology, fundamentally based on accelerated computing and AI, and for United States, for America, to be at the center of the next revolution in 6G.”

He also sees this as opening the edge further to AI, creating opportunities to build on a more powerful AI-based wireless network, much as Amazon Web Services (AWS) and other cloud providers built their businesses atop the internet, which began as a communications system. In this vision, cloud computing will leverage base stations worldwide – rather than datacenters – to reach the edge.

In quantum computing, Nvidia unveiled NVQLink, an interconnect that links quantum processors with Nvidia’s GPUs. An ongoing challenge in quantum computing is error correction, a technique that enables errors made by the systems to be detected and corrected without disturbing the fragile qubits at the heart of the computers. Huang said the answer is to add extra entangled qubits whose measurement yields the information needed to locate errors without damaging the qubits doing the computation. Working out where the errors are from those measurements, however, calls for conventional computing, in this case GPU accelerators.

This is where NVQLink comes in.

It’s “a new interconnect architecture that directly connects quantum processors with Nvidia GPUs,” he said. “Quantum error correction requires reading out information from qubits, calculating where errors occur, and sending data back to correct them. NVQLink is capable of moving terabytes of data to and from quantum hardware the thousands of times every second needed for quantum error correction.”
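To make the read-out, decode, and correct loop Huang describes a little more concrete, here is a toy classical sketch of the simplest textbook scheme, the three-qubit bit-flip repetition code. It assumes only bit-flip errors and models qubits as plain bits: the two parity checks stand in for the extra entangled qubits that get measured, and the lookup-table decoder stands in for the classical step Nvidia wants to run on GPUs. All function names are hypothetical, and none of this is NVQLink or CUDA-Q code.

```python
import random

# Toy classical model of the 3-qubit bit-flip repetition code.
# Assumption: only bit-flip (X) errors; qubits are modeled as plain bits.
# Illustrates the read-out / decode / correct loop, not NVQLink or CUDA-Q.

# Syndrome -> index of the data bit to flip (None means no error detected).
DECODER_TABLE = {
    (0, 0): None,
    (1, 0): 0,
    (1, 1): 1,
    (0, 1): 2,
}


def encode(logical_bit):
    """Encode one logical bit into three physical bits."""
    return [logical_bit] * 3


def apply_noise(bits, p, rng):
    """Flip each bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


def measure_syndrome(bits):
    """Parity checks on pairs (0,1) and (1,2). On real hardware, ancilla
    qubits measure these parities without reading the data bits directly."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def decode(syndrome):
    """Classical decoding step: the part that gets offloaded to GPUs at scale."""
    return DECODER_TABLE[syndrome]


def correct(bits, flip_index):
    """Send the correction back by flipping the identified bit."""
    fixed = list(bits)
    if flip_index is not None:
        fixed[flip_index] ^= 1
    return fixed


def qec_round(bits):
    """One full cycle: read out the syndrome, decode it, apply the fix."""
    return correct(bits, decode(measure_syndrome(bits)))


if __name__ == "__main__":
    rng = random.Random(0)
    trials, p = 10_000, 0.05
    failures = sum(
        qec_round(apply_noise(encode(1), p, rng)) != [1, 1, 1]
        for _ in range(trials)
    )
    print(f"physical error rate {p}, logical error rate ~{failures / trials:.4f}")
```

Any single flip is repaired; two or more flips fool the decoder, which is why the logical error rate ends up well below the physical one for small p. Production machines use much larger codes, such as surface codes, where decoding is a latency-sensitive problem that has to be solved over and over every second, and that is the traffic NVQLink is meant to carry.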

Foundational to NVQLink is CUDA-Q, Nvidia’s quantum-classical computing platform. Using the two together will let researchers go beyond error correction and orchestrate quantum devices and AI supercomputers to run quantum-GPU calculations, with Huang adding that “quantum computing won’t replace classical systems. They will work together, fused into one accelerated quantum supercomputing platform.”

In addition, 17 quantum computing companies are supporting NVQLink, and eight supercomputing labs – Brookhaven National Laboratory, Fermi National Accelerator Laboratory, Lawrence Berkeley National Laboratory, Los Alamos National Laboratory, MIT Lincoln Laboratory, Oak Ridge National Laboratory, Pacific Northwest National Laboratory and Sandia National Laboratories – will use it.

Nvidia also will work with Oracle to build “Solstice,” which will be the US Department of Energy’s largest AI supercomputer, powered by 100,000 Blackwell GPUs. It’s the largest of seven AI supercomputers Nvidia will build for the DOE. Solstice and “Equinox,” another of the seven, with 10,000 Blackwells, will be housed at Argonne National Laboratory and will deliver a combined 2,200 exaflops of AI performance.
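A quick sanity check on the Argonne numbers: spreading the combined figure across all 110,000 Blackwell GPUs implies roughly 20 petaflops per GPU, far beyond any FP64 rating and consistent with a low-precision AI number. This sketch assumes the 2,200 exaflops is an aggregate across both machines and is divided evenly per GPU; the variable names are ours.

```python
# Back-of-envelope check on the DOE figures quoted for Solstice and Equinox.
# Assumption: the 2,200 exaflops is a low-precision AI aggregate spread
# evenly across every GPU in both systems.

EXA = 10**18
PETA = 10**15

solstice_gpus = 100_000
equinox_gpus = 10_000
combined_exaflops = 2_200

total_gpus = solstice_gpus + equinox_gpus
# Convert the aggregate to flops, split it per GPU, express in petaflops.
per_gpu_petaflops = combined_exaflops * EXA / total_gpus / PETA

print(f"{total_gpus:,} GPUs -> ~{per_gpu_petaflops:.0f} petaflops per GPU")
```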

Huang gave kudos to the Trump Administration for enabling these large systems. An ongoing concern about the rapid adoption of AI is the enormous amount of power and water the systems consume. Huang applauded Trump for his administration’s energy policies and Energy Secretary Chris Wright for bringing “a surge of energy, a surge of passion, to make sure that America leads science again.”

Two of the seven systems will be HPE supercomputers based on the OEM’s upcoming GX5000, the successor to the EX4000 architecture that was the foundation for the vendor’s three exascale systems, El Capitan, Frontier, and Aurora. HPE announced this week that the GX5000 will be the architecture for “Discovery,” the successor to Frontier that will be housed at Oak Ridge National Lab.

At GTC Washington, the systems maker announced “Mission” and “Vision,” two systems bound for Los Alamos National Lab that will feature Nvidia’s upcoming “Vera” Arm server CPUs and “Rubin” GPUs combined into superchips, which Huang showed off onstage. Mission will be used to manage the U.S. nuclear stockpile and will go online in 2027. Vision will build on the AI research and national security work done on the Venado supercomputer.

Huang said Vera Rubin is the result of what Nvidia calls extreme co-design, the idea of designing and engineering all layers of a computing stack simultaneously to create highly optimized AI systems and AI factories.

“We’re preparing Rubin to be in production this time next year, maybe slightly earlier,” Huang said. “So every single year, we are going to come up with the most extreme co-design system so that we can keep driving up performance and keep driving down the token-generation cost. This is just an incredibly beautiful computer. This is amazing. This is 100 petaflops.”

Right now, demand for Nvidia’s AI chips is only accelerating, which isn’t surprising given the rapid enterprise adoption of AI, he said. Sales of Grace Blackwell GPUs illustrate where things are headed.

Showing a chart, he noted that with Hopper, Nvidia sold four million GPUs. With Blackwell – each one contains two GPUs in a single large package – and Rubin orders thus far, there are 20 million GPUs on the books.

“It’s driven by two exponentials,” Huang said. “We now have visibility. I think we’re probably the first technology company in history to have visibility into half a trillion dollars of cumulative Blackwell and early ramps of Rubin through 2026. As you know, 2025 is not over yet and 2026 hasn’t started. This is how much business is on the books. Half a trillion dollars worth, so far. We’ve already shipped 6 million of the Blackwells in the first several quarters. . . . We still have one more quarter to go for 2025 and then we have four quarters [referring to 2026]. So the next five quarters, there’s $500 billion, half a trillion dollars. That’s five times the growth rate of Hopper.”

As best we can figure, Huang is correctly counting (according to his own naming scheme for 2026 and beyond) GPU chiplets rather than sockets, so some of this increase is due to putting two reticle-limited chiplets in each socket. Five times the money is five times the chiplets, more or less. That means Nvidia is not commanding much of a price premium for a Blackwell GPU chiplet versus Hopper, very likely because sales are heavily concentrated among hyperscalers, cloud builders, and model builders, who command deeper discounts than large enterprises buying in much smaller volumes can ask for. As more enterprises start deploying their own GenAI, revenue per GPU chiplet will rise and, we believe, so will profits.
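The chiplet argument can be checked with a few lines of arithmetic. This sketch takes the 4 million and 20 million figures from Huang’s chart, assumes one chiplet per Hopper socket and two per Blackwell or Rubin socket, and uses our five times revenue reading rather than any Nvidia-reported ratio. The average-revenue line further assumes the $500 billion maps onto the same 20 million chiplets and is all GPU revenue, when in reality systems and networking are mixed in, so treat it as a rough ceiling. Variable names are ours.

```python
# Back-of-envelope reading of the Hopper vs. Blackwell/Rubin chart.
# Assumptions (labeled; not Nvidia data beyond the quoted figures):
#   - chart "GPUs" are reticle-limited chiplets: 1 per Hopper socket,
#     2 per Blackwell or Rubin socket
#   - revenue is roughly 5x Hopper's (our reading, not a reported ratio)
#   - the $500B covers the same 20M chiplets and is all GPU revenue (a ceiling)

hopper_chiplets = 4_000_000
blackwell_rubin_chiplets = 20_000_000
chiplets_per_socket = {"hopper": 1, "blackwell_rubin": 2}
revenue_ratio = 5.0
blackwell_rubin_revenue = 500e9  # dollars

chiplet_ratio = blackwell_rubin_chiplets / hopper_chiplets
hopper_sockets = hopper_chiplets / chiplets_per_socket["hopper"]
br_sockets = blackwell_rubin_chiplets / chiplets_per_socket["blackwell_rubin"]
socket_ratio = br_sockets / hopper_sockets

# If revenue grows as fast as units, price per unit is flat.
price_ratio_per_chiplet = revenue_ratio / chiplet_ratio
price_ratio_per_socket = revenue_ratio / socket_ratio
avg_revenue_per_chiplet = blackwell_rubin_revenue / blackwell_rubin_chiplets

print(f"chiplets: {chiplet_ratio:.1f}x, sockets: {socket_ratio:.1f}x")
print(f"revenue per chiplet vs Hopper: {price_ratio_per_chiplet:.1f}x")
print(f"revenue per socket vs Hopper: {price_ratio_per_socket:.1f}x")
print(f"ceiling on average revenue per chiplet: ${avg_revenue_per_chiplet:,.0f}")
```

Under those assumptions, revenue per chiplet is flat against Hopper while revenue per socket roughly doubles, and half of the headline 5X unit growth comes from doubling the chiplets in each package.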

The other thing to note about this chart is that it seems to include all of the Blackwell and Rubin GPUs, including eight-way NVL8 server nodes and custom architectures with only four GPUs per node, like the many systems installed at HPC centers in the US and Europe, even though the chart refers to NVL72 systems at the top.
