When Tim Sears ran complex portfolio risk analysis on Wall Street in 2004, an eight-hour, overnight run was the best that could be expected, even on high-end hardware. That calculation time is now down to under three minutes, at least on some systems.

After twenty years in finance, including a nearly decade-long stint at Morgan Stanley, and a long run building Target’s AI team from scratch, Sears now runs the software and applications business at AI chip startup Groq. He says the speedups in complex risk calculations such as Value-at-Risk (VaR) are revolutionary for the financial sector, allowing hedging, insurance, and general trading decisions to happen in real time.

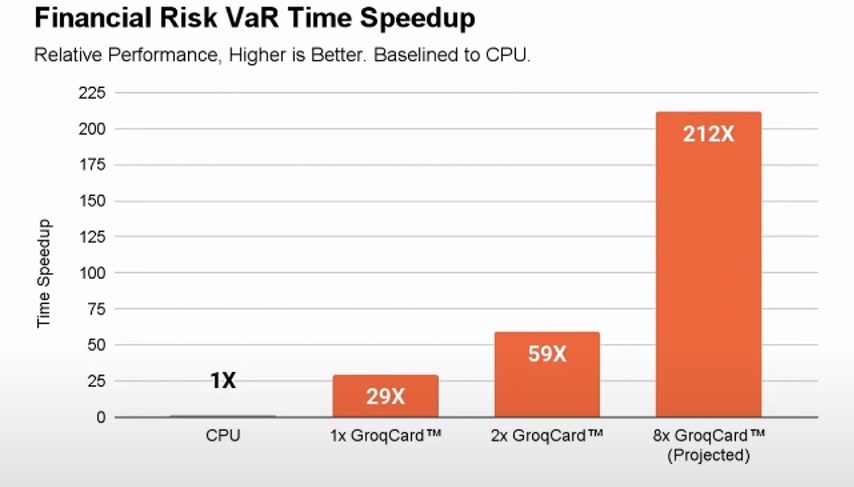

At the AI Summit in NY recently, Sears walked through a VaR proof of concept Groq put together that showed impressive speedups against commodity CPU systems. Time to result matters in this space, not just because decisions need to be made quickly, but because finishing faster means more simulations can be run in a day. More simulations lead to richer distributions and thus, in theory, better results.

In essence, VaR estimates the maximum loss a portfolio of securities or other financial exposures should suffer over a given horizon at a fixed confidence level. It helps firms pinpoint how much they might lose on a particularly bad day, for example. VaR is computed by pricing the portfolio under many different scenarios and reading off the profit or loss at the chosen percentile of the resulting distribution.
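As a minimal sketch of that last step, assume the portfolio’s profit or loss has already been computed for every scenario. The helper name `historical_var` is hypothetical, and the way it picks the tail scenario is one common convention; other implementations interpolate between neighboring scenarios and can give slightly different numbers.

```python
import math


def historical_var(pnl, confidence=0.99):
    """Scenario-based VaR: the loss exceeded in at most (1 - confidence) of scenarios.

    pnl: portfolio profit/loss under each scenario (losses are negative).
    Returns the VaR as a positive loss amount.
    """
    if not pnl:
        raise ValueError("need at least one scenario")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    ordered = sorted(pnl)  # worst outcome first
    # Number of scenarios allowed to be worse than the VaR level; the epsilon
    # guards against float error such as (1 - 0.9) * 100 = 9.999...
    tail = math.floor((1.0 - confidence) * len(ordered) + 1e-9)
    return -ordered[tail]


# 100 evenly spaced outcomes from -50 to 49: at 95% confidence, five scenarios
# are allowed to be worse, so the VaR is the sixth-worst loss.
print(historical_var(list(range(-50, 50)), confidence=0.95))  # 45
```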

It takes many simulations to capture the general shape of the profit-and-loss distribution, the curve of probabilities across all the scenarios. Calculating VaR is both compute-intensive and data-intensive. It requires systems to quickly define scenarios, collect market data about the portfolio, model and price positions, and then isolate only the risk metrics needed.
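To see why the scenario count matters, the sketch below estimates a 99% VaR from increasing numbers of simulated scenarios and compares it with the exact answer. It assumes, purely for illustration, that the portfolio’s P&L is standard normal, which real portfolios rarely are; that assumption is what makes the exact value available from Python’s `statistics.NormalDist`. The helper `sampled_var` is hypothetical. Because only about 1% of scenarios land in the tail, small runs leave the estimate noisy, and it settles as the count grows.

```python
import math
import random
from statistics import NormalDist


def sampled_var(n_scenarios, confidence=0.99, seed=0):
    """Estimate VaR from n simulated scenarios of a hypothetical standard-normal P&L."""
    rng = random.Random(seed)
    pnl = sorted(rng.gauss(0.0, 1.0) for _ in range(n_scenarios))
    # Tail scenario index; the epsilon guards against float error in (1 - c) * n.
    tail = math.floor((1.0 - confidence) * n_scenarios + 1e-9)
    return -pnl[tail]


# For a standard-normal P&L the exact 99% VaR is known in closed form (about 2.326).
exact = -NormalDist().inv_cdf(1.0 - 0.99)

for n in (100, 1_000, 10_000, 100_000):
    est = sampled_var(n)
    print(f"{n:>7} scenarios: VaR estimate {est:.3f} (exact {exact:.3f})")
```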

Sears and his team at Groq wanted to put the GroqCard to the VaR test, building a four-GroqCard unit for demos and a full single-node server deployment with eight GroqCards. The initial portfolio included 1,000 simulated interest rate swaps, 1,280 scenarios generated from historical data, and a simulated market data feed, with new trades added periodically and VaR recalculated on each new market tick and trade. This is a traditional Monte Carlo run rather than a sophisticated AI-driven risk calculation, but it shows Groq’s hardware can handle general-purpose workloads as well.
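A minimal pure-Python sketch of a portfolio along these lines follows. It is not Groq’s implementation: the `Swap` record, the flat continuously compounded rate curve, annual fixed payments, single-curve valuation, and the random parallel rate shifts standing in for the historical scenarios are all simplifying assumptions for illustration. Each scenario reprices every swap, and the VaR is read off the sorted P&L.

```python
import itertools
import math
import random
from dataclasses import dataclass


@dataclass
class Swap:
    """Hypothetical plain-vanilla interest rate swap with annual fixed payments."""
    notional: float
    fixed_rate: float
    maturity: int    # whole years
    pay_fixed: bool  # True = pay fixed / receive floating


def portfolio_value(rate, swaps):
    """Mark the whole portfolio to market under one flat, continuously compounded rate."""
    max_t = max(s.maturity for s in swaps)
    # Discount factors for years 1..max_t, computed once per scenario and shared by all swaps.
    dfs = [math.exp(-rate * t) for t in range(1, max_t + 1)]
    # annuities[T - 1] = sum of discount factors on the fixed-leg dates of a T-year swap.
    annuities = list(itertools.accumulate(dfs))
    total = 0.0
    for s in swaps:
        # Payer value: floating leg worth N * (1 - DF(T)), fixed leg worth N * K * annuity.
        v = s.notional * (1.0 - dfs[s.maturity - 1] - s.fixed_rate * annuities[s.maturity - 1])
        total += v if s.pay_fixed else -v
    return total


def scenario_var(base_rate, shifts, swaps, confidence=0.99):
    """Reprice under each rate shift, then read VaR off the sorted P&L distribution."""
    base = portfolio_value(base_rate, swaps)
    pnl = sorted(portfolio_value(base_rate + d, swaps) - base for d in shifts)
    # Tail scenario index; the epsilon guards against float error in (1 - c) * n.
    tail = math.floor((1.0 - confidence) * len(pnl) + 1e-9)
    return -pnl[tail]  # report the loss as a positive number


# Usage with synthetic data: 1,000 random swaps and 1,280 random parallel shifts
# (normal, 10 bp standard deviation) standing in for historical scenarios.
rng = random.Random(42)
book = [
    Swap(rng.uniform(1e6, 1e7), rng.uniform(0.01, 0.05), rng.randint(1, 10), rng.random() < 0.5)
    for _ in range(1_000)
]
shifts = [rng.gauss(0.0, 0.001) for _ in range(1_280)]
print(f"99% VaR: {scenario_var(0.03, shifts, book):,.0f}")
```

Sharing one set of discount factors per scenario across all swaps keeps the inner loop cheap; a production system would use a full yield curve and real scenario data instead of a single flat rate.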

Comparisons were made against an AMD Ryzen 9 3900X 12-core CPU. That may not be the hardware an actual trading shop would use, but it provides at least a baseline for the 212X speedup Groq reports for an eight-GroqCard node.

“This proof of concept was similar to one of my old jobs on Wall Street in a risk management role with a similar scale of positions and scenarios,” Sears says. “It took about eight hours overnight, but results down to three minutes would have meant a dramatically different world.”

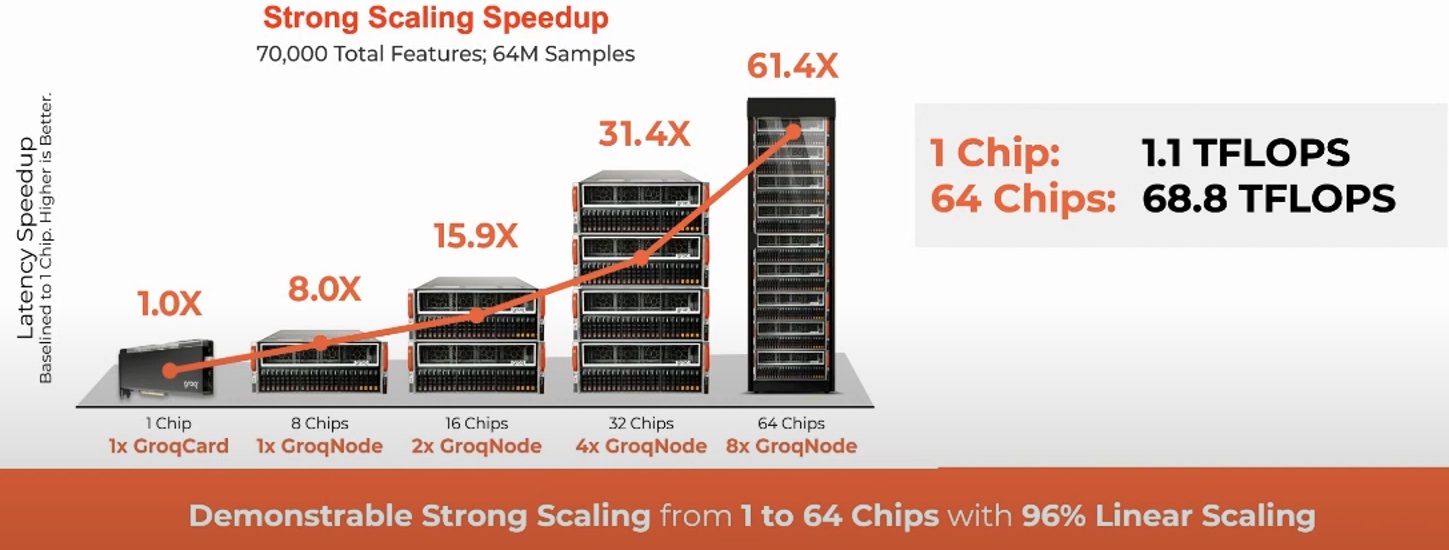

This VaR calculation example shows off the GroqCard in a more practical workload context, but the hardware is also aimed at large-scale AI. The story Groq has been telling since the beginning is one of strong scaling: a fixed-size problem finishing proportionally faster as more chips are added.

The results above come from a large statistical learning workload Groq developed with a partner. It relies heavily on matrix multiplications and scales well as more hardware is added. “It’s normal for many solutions in this world to stop scaling but the GroqChip is different. The ideal number we’d like to see is 64X at rackscale. That means a process that would have taken an hour can now take less than a minute,” Sears adds.
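The arithmetic behind the “hour to under a minute” remark is the definition of strong scaling: for a fixed problem, the ideal runtime is the single-device time divided by the device count, and measured efficiency is the achieved speedup as a fraction of that ideal. The sketch below assumes that 64X corresponds to 64 chips in a rack, which the quote implies but does not state; the function names are hypothetical.

```python
def ideal_strong_scaling_time(t_single, n_devices):
    """Runtime if a fixed-size job scaled perfectly across n devices."""
    return t_single / n_devices


def scaling_efficiency(t_single, t_parallel, n_devices):
    """Measured speedup, and that speedup as a fraction of the ideal n-fold speedup."""
    speedup = t_single / t_parallel
    return speedup, speedup / n_devices


# A one-hour job at the ideal 64X: 3,600 s / 64 = 56.25 s, i.e. under a minute.
print(ideal_strong_scaling_time(3_600, 64))  # 56.25
```

An efficiency near 1.0 is what “keeps scaling” means in practice; a job that reached only 32X on 64 chips would report an efficiency of 0.5.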
