Intel is still placing bets on neuromorphic computing with its Loihi devices. While the datacenter case for the architecture may take a back seat to embedded and edge use cases, at least for now, its second-generation device shows a commitment to the concept, as does a new open-source software stack meant to support neuromorphic computing more generally.

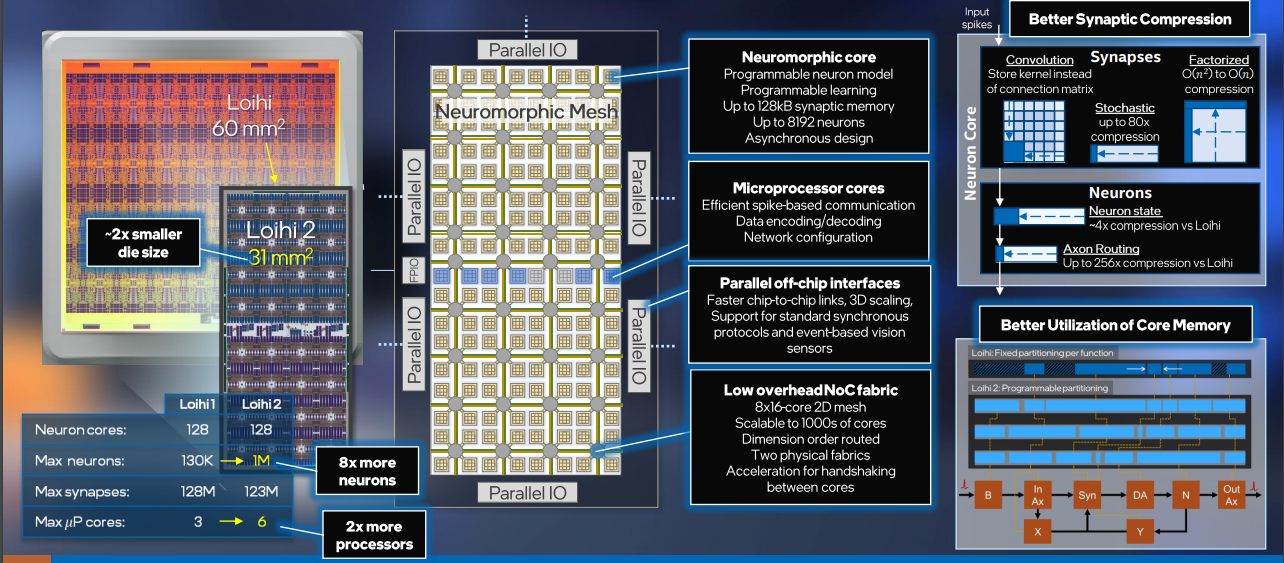

Garrick Orchard, a research scientist at Intel Labs focused on Loihi, tells us that the improvements in the pre-production, next-generation devices come largely from the Intel 4 process and its use of EUV lithography. “This has simplified the layout design rules compared to past process technologies and that made it possible for us to rapidly develop Loihi 2 in the new process.” The switch also brings significant scaling advantages: each neuron core on the chip will be half the size of a core in the first-generation Loihi devices.

“We will see performance improvements due to the architecture and asynchronous design methodology in addition to the process itself, with speeds up to 10X faster and resource density up to 15X greater.” In practical terms, this means up to one million neurons per chip, up from 128,000 in the prior device.

As in any tale of chip architecture, design changes in the new devices were prompted by the first runs from early users and research teams. One common theme in those early use cases was memory, specifically running out of the limited SRAM native to each core. With core area reduced and density up thanks to the Intel 4 process, the team had some room to rethink the memory shortage. Adding more memory was less pressing than unifying it. Perhaps more interesting, the shared memory on Loihi 2 isn’t just about rebalancing memory ratios; it’s also about memory reclamation.

In the first-generation Loihi, all SRAM was internal to the core, for a combined 208KB per core. In Loihi 2, total memory per core has actually been reduced slightly, to 192KB.

Because the first generation used discrete memories, there were fixed allocations for neuron state and synapse state. Early users were finding, for instance, that synapse memory was only half full while neuron-state memory was completely full, which limited what was possible. In Loihi 2, all of that data sits in a single memory that can be partitioned flexibly without major software complexity (users still see what look like separate memories), so the split can be tuned to each workload.

“In Loihi, learning requires memory and fixed allocation of memory. But if you have a model that doesn’t need to learn, then memory is dead anyway; it’s a dedicated feature you aren’t using. But in Loihi 2, because of this flexible allocation, any feature you’re not using you can reclaim and put toward more neurons or synapses,” Orchard explains.
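To make the partitioning trade-off concrete, here is a minimal Python sketch comparing a fixed split with a single shared pool. It is a toy model, not Loihi's actual memory layout: the `Workload` type, the helper functions and the region sizes (a hypothetical 32/128/48 KB split of the 208KB first-generation budget) are invented for illustration; only the 208KB and 192KB totals come from the article.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Per-core memory a hypothetical workload needs, in KB."""
    neuron_kb: int
    synapse_kb: int
    learning_kb: int  # 0 for models that do not learn on chip


def fits_fixed(w: Workload, neuron_cap: int, synapse_cap: int, learning_cap: int) -> bool:
    """Fixed allocation: each kind of state must fit in its own dedicated region."""
    return (w.neuron_kb <= neuron_cap
            and w.synapse_kb <= synapse_cap
            and w.learning_kb <= learning_cap)


def fits_unified(w: Workload, total_cap: int) -> bool:
    """Shared pool: only the combined footprint has to fit."""
    return w.neuron_kb + w.synapse_kb + w.learning_kb <= total_cap


def reclaimable_kb(w: Workload, total_cap: int) -> int:
    """Memory left over in a shared pool, free for more neurons or synapses."""
    return total_cap - (w.neuron_kb + w.synapse_kb + w.learning_kb)


# Hypothetical non-learning, neuron-heavy workload.
w = Workload(neuron_kb=64, synapse_kb=64, learning_kb=0)
print(fits_fixed(w, neuron_cap=32, synapse_cap=128, learning_cap=48))  # False
print(fits_unified(w, total_cap=192))                                  # True
print(reclaimable_kb(w, total_cap=192))                                # 64
```

The fixed layout rejects this workload because neuron state overflows its region while synapse and learning memory sit partly idle; the shared pool accepts it even with the smaller 192KB total.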

Intel has found a few other ways to make Loihi more flexible and tunable. For instance, it provides better compression for certain features, such as synaptic connectivity, including optimizations for convolutional connections. One of the most important changes is to the neuron program itself. The first generation offered a single, monolithic neuron model; now individual neuron behavior can be programmed, so users can define their own custom neuron models. That means more control over neurons and more room to tailor the chip to specific workloads.
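Conceptually, a programmable neuron model means the per-step update rule is something the user supplies rather than a fixed circuit. The Python sketch below illustrates only that idea; on the chip itself, neuron programs run on the core hardware, not as Python functions. The function names, the 0.9 leak factor and the adaptive-threshold variant are hypothetical choices made for illustration.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, float]
# A neuron model maps (state, input current) to (new state, spiked?).
NeuronModel = Callable[[State, float], Tuple[State, bool]]


def lif(state: State, current: float) -> Tuple[State, bool]:
    """Leaky integrate-and-fire: voltage keeps 90% per step, fires at 1.0 and resets."""
    v = 0.9 * state["v"] + current
    if v >= 1.0:
        return {"v": 0.0}, True
    return {"v": v}, False


def adaptive_lif(state: State, current: float) -> Tuple[State, bool]:
    """Custom variant: each spike raises the threshold, which then relaxes toward 1.0."""
    v = 0.9 * state["v"] + current
    th = 1.0 + 0.8 * (state["th"] - 1.0)  # threshold decays back toward 1.0
    if v >= th:
        return {"v": 0.0, "th": th + 0.5}, True
    return {"v": v, "th": th}, False


def simulate(model: NeuronModel, init: State, inputs: List[float]) -> List[int]:
    """Run one neuron over an input sequence and return the time steps at which it spiked."""
    state: State = dict(init)
    spikes: List[int] = []
    for t, current in enumerate(inputs):
        state, fired = model(state, current)
        if fired:
            spikes.append(t)
    return spikes


inputs = [0.5] * 10
print(simulate(lif, {"v": 0.0}, inputs))                      # [2, 5, 8]
print(simulate(adaptive_lif, {"v": 0.0, "th": 1.0}, inputs))  # [2, 5, 9]
```

With the same constant input, the standard model fires every third step, while the custom model's rising threshold delays its third spike; the simulation loop does not change, only the supplied update rule does.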

Although Loihi devices do not operate like traditional neural networks (they typically learn on the fly from small numbers of samples), it would be useful to have something like the graded, non-binary values that DNN neurons pass between layers. Loihi 2 makes this possible by letting spiking neurons attach an integer-valued “payload” to a spike, so a spike can carry a graded value for more nuanced outcomes rather than signal a simple on/off event.
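A minimal sketch of the difference, assuming a simple weighted-sum synapse model: a binary spike contributes only its synaptic weight, while a graded spike scales that weight by its payload. The function, the payloads and the weights are hypothetical, and real Loihi 2 synaptic processing is more involved than this.

```python
from typing import List, Optional


def synaptic_input(spikes: List[Optional[int]], weights: List[int], graded: bool) -> int:
    """Weighted input to one postsynaptic neuron for a single time step.

    spikes[i] is None if presynaptic neuron i did not spike, otherwise its
    integer payload. With graded=False the payload is ignored and every
    spike counts as 1, as with a conventional binary spike.
    """
    total = 0
    for payload, weight in zip(spikes, weights):
        if payload is None:  # no spike from this presynaptic neuron
            continue
        total += weight * (payload if graded else 1)
    return total


spikes = [3, None, 1, 5]  # hypothetical payloads; None means no spike this step
weights = [2, 7, -1, 1]   # hypothetical synaptic weights
print(synaptic_input(spikes, weights, graded=False))  # 2
print(synaptic_input(spikes, weights, graded=True))   # 10
```

With binary spikes, one common workaround is to encode a value such as 5 as a count of spikes spread over several time steps; a payload conveys it in a single event.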

Hardware aside, central to Intel’s ambition of making neuromorphic computing a broader technology, at least in certain areas, is an effort to bring a community of early users and programmers on board. Orchard says one reason the neuromorphic field as a whole has been held back is the lack of a single open-source framework that everyone can get behind. The new “Lava” framework will not be owned by Intel and, most interesting, it can run on GPUs, CPUs or even other neuromorphic devices. “Others are free to write their own compilers to provide support for whatever neuromorphic platform they have,” he adds.
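One way such hardware portability can work is to describe a computation once and register separate implementations of it per backend, choosing one at run time. The Python sketch below shows only that general pattern. Lava's real API is different and is not reproduced here; every name below (`REGISTRY`, `implements`, `run`, the `"threshold"` process and the `"other_chip"` backend) is hypothetical.

```python
from typing import Callable, Dict, List

# Signature shared by every implementation of the "threshold" process.
Impl = Callable[[List[float], float], List[int]]

# Hypothetical registry: process name -> {backend name -> implementation}.
REGISTRY: Dict[str, Dict[str, Impl]] = {}


def implements(process: str, backend: str) -> Callable[[Impl], Impl]:
    """Decorator registering one backend-specific implementation of a named process."""
    def register(fn: Impl) -> Impl:
        REGISTRY.setdefault(process, {})[backend] = fn
        return fn
    return register


@implements("threshold", "cpu")
def threshold_cpu(values: List[float], vth: float) -> List[int]:
    """Reference implementation: emit 1 wherever the input reaches the threshold."""
    return [1 if v >= vth else 0 for v in values]


@implements("threshold", "other_chip")
def threshold_other_chip(values: List[float], vth: float) -> List[int]:
    """Stand-in for a third-party backend; here it simply mimics the reference."""
    # A real backend would compile the process for its own hardware instead.
    return [int(v >= vth) for v in values]


def run(process: str, backend: str, values: List[float], vth: float) -> List[int]:
    """Run the same process description on whichever backend is requested."""
    impls = REGISTRY.get(process, {})
    if backend not in impls:
        raise KeyError(f"no {backend!r} implementation registered for {process!r}")
    return impls[backend](values, vth)


x = [0.2, 1.5, 1.0]
print(run("threshold", "cpu", x, vth=1.0))         # [0, 1, 1]
print(run("threshold", "other_chip", x, vth=1.0))  # [0, 1, 1]
```

In this pattern, supporting a new platform amounts to registering another implementation without touching the model description, which is the kind of extension point Orchard's remark about third-party compilers points to.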

The community around the Loihi devices numbers roughly 150 members, balanced among academia, government labs and commercial entities, including Raytheon and Ford Motor.

European rail giant Deutsche Bahn AG has been exploring neuromorphic techniques to route trains and optimize schedules. According to Jörg Blechschmidt, head of Technology Trend Management at DB Systel, the IT subsidiary of Deutsche Bahn AG, the architecture shows promise for specific optimization problems.

“We identified Intel’s first-generation neuromorphic chip Loihi as a promising approach for optimization problems such as route planning for our railway network. In first evaluations we observed significantly lower energy consumption and a fast response time on a proof-of-concept problem. Advanced new features such as flexible choice of neuron models and non-binary communication between spiking neurons in Loihi 2 show promise for scaling up to real-world constrained optimization problems, some of which challenge the state-of-the-art approaches in computing.”

Dr. Gerd J. Kunde, a staff scientist at Los Alamos National Laboratory, and his team have been exploring the interplay between quantum and neuromorphic computing, also for specific optimization problems.

“This research has shown some exciting equivalences between spiking neural networks and quantum annealing approaches for solving hard optimization problems. We have also demonstrated that the backpropagation algorithm, a foundational building block for training neural networks and previously believed not to be implementable on neuromorphic architectures, can be realized efficiently on Loihi. Our team is excited to continue this research with the second-generation Loihi 2 chip,” Kunde says.
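Quantum annealers natively minimize objectives written in Ising form or, equivalently, as a QUBO (quadratic unconstrained binary optimization) problem, so a toy QUBO helps show what kind of hard optimization problem is meant. The sketch below encodes a made-up constrained choice, picking exactly one of three candidate routes at the lowest cost, by adding the penalty term penalty * (sum(x) - 1)**2, and then solves it by exhaustive search. The costs, the penalty weight and the helper names are hypothetical, and brute force is a plain stand-in for an annealer or spiking solver; this is not the method used by Los Alamos or Deutsche Bahn.

```python
from itertools import product
from typing import Dict, List, Tuple

# QUBO: minimize E(x) = sum over (i, j) of Q[i, j] * x_i * x_j, with every x_i in {0, 1}.
Qubo = Dict[Tuple[int, int], float]


def one_hot_qubo(costs: List[float], penalty: float) -> Qubo:
    """Encode 'pick exactly one option at minimum cost' as a QUBO.

    The penalty term penalty * (sum(x) - 1)**2 expands, using x_i**2 == x_i,
    to -penalty on each diagonal term and 2 * penalty on each pair, plus a
    constant that does not affect the minimizer and is dropped.
    """
    n = len(costs)
    q: Qubo = {(i, i): costs[i] - penalty for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            q[(i, j)] = 2 * penalty
    return q


def energy(q: Qubo, x: Tuple[int, ...]) -> float:
    """Evaluate the QUBO objective for one binary assignment."""
    return sum(c * x[i] * x[j] for (i, j), c in q.items())


def brute_force(q: Qubo, n: int) -> Tuple[Tuple[int, ...], float]:
    """Exhaustively search all 2**n assignments (only viable for tiny n)."""
    best = min(product((0, 1), repeat=n), key=lambda x: energy(q, x))
    return best, energy(q, best)


costs = [3, 1, 2]  # hypothetical costs of three candidate routes
q = one_hot_qubo(costs, penalty=10)
print(brute_force(q, n=len(costs)))  # ((0, 1, 0), -9)
```

The exhaustive search doubles in cost with every added variable, which is why hardware that can search such energy landscapes heuristically is of interest for realistically sized problems.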
