For much of the three decades that Rambus has existed, it has largely focused on its patent portfolio and licensing its designs of memory interface technologies for a broad array of computer products. For a while it also was known for high-profile legal battles with the likes of Micron Technology, Samsung, Hynix, Hewlett Packard, Nvidia, and others, claiming infringement on its patents for DDR and SDRAM memory.

Over the past five or six years, while continuing to focus on the challenge of moving data quickly – particularly between processors and memory – Rambus has been making its own products, something the company was speaking about as far back as 2017. It has a lineup of buffer chipsets for DIMM modules, logic controllers and a range of hardware-based security offerings, such as protocol engines and crypto accelerator cores. The goal is for Rambus to reach a point where half of its revenues are coming from products and the other half from patents, something that the company is closing in on, according to Steve Woo, Fellow and distinguished inventor for Rambus.

The ongoing rapid and much-talked-about changes happening in the IT industry given its increasingly distributed nature and the exponential growth of data – and the work that Rambus had been doing for decades around moving data quickly and securely – helped convince Rambus that there was opportunity in getting into the product area, Woo tells The Next Platform. The transition from on-premises datacenters to the cloud, the drive for more processing at the edge, the growing adoption of artificial intelligence and machine learning technologies, and the need for faster connectivity and greater security all seemed to be moving the industry to where Rambus was.

At the same time, customers and partners were telling Rambus that it was time for the vendor to start pushing into various product areas.

“If you think about what’s going on in the datacenter, what our customers tell us is obviously what we see as well in memory and really I/O bandwidth,” Woo says. “Data movement is the thing that’s now limiting performance. The kinds of technologies that we have had historically and that we continue to build on are extremely relevant and becoming more relevant in the near future. The other thing that … is an important part of it as well is that – especially in the datacenter – there’s a lot of need for hardware security, both to help secure the data but almost more importantly, that infrastructure as well.”

It’s a company with some muscle behind it. It has about 600 employees with headquarters in California and offices in Europe, Asia and India, more than 3,000 patents and applications, $185.5 million in cash from operations and 41 percent year-over-year growth in 2020. More than 75 percent of its product revenues come from offerings for datacenters and edge deployments.

The company has been building up its product-making capabilities for a while. In 2011, Rambus bought Cryptography Research, forming the basis for its hardware-based security division. Rambus bought Inphi’s memory interconnect business in 2016 and the same year acquired Semtech’s Snowbush IP to bolster its SerDes capabilities. It got into controllers in 2019 when it bought Northwest Logic, giving enterprises a single place for PHY and controllers. In late 2019, it bolstered its security offerings with its Inside Secure acquisition.

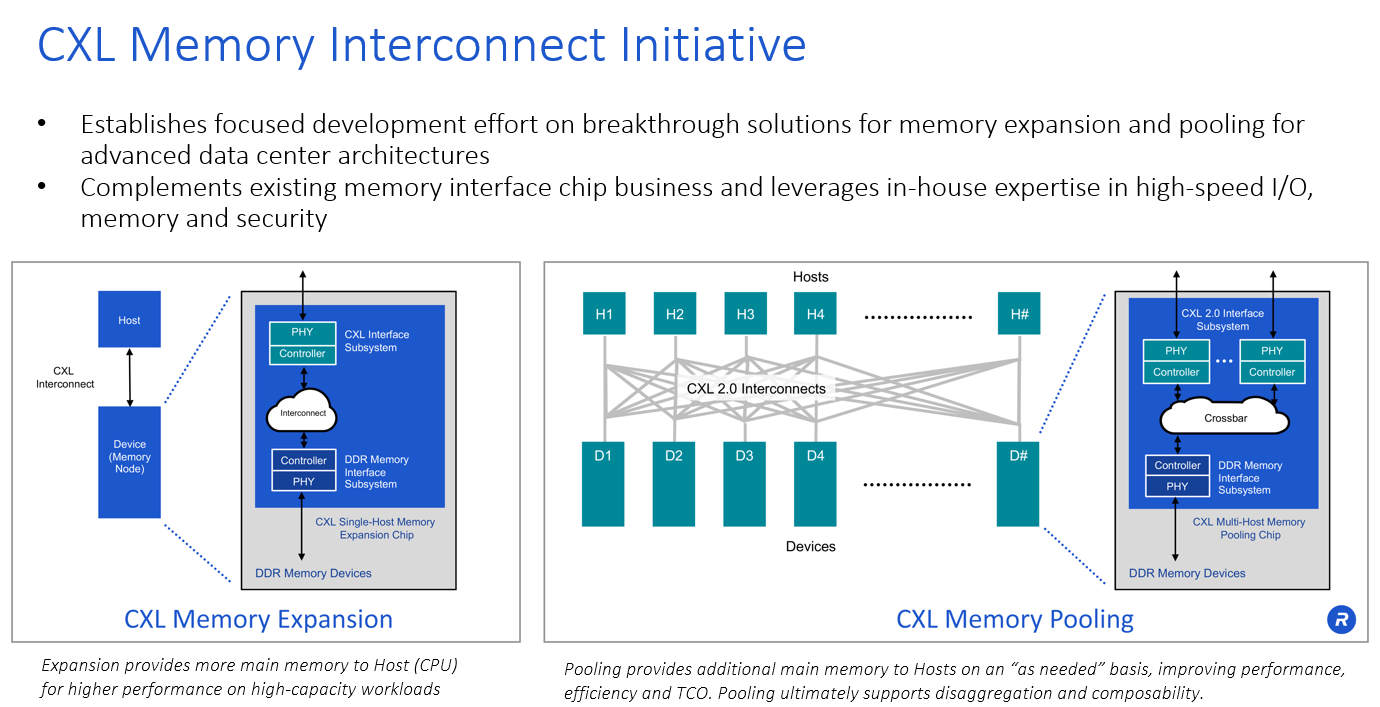

Most recently, Rambus has turned its focus to Compute Express Link (CXL), an open-standard interconnect designed to drive high-speed connectivity between memory and a range of processors used in datacenters and the cloud, including CPUs, GPUs, data processing units (DPUs) and Tensor Processing Units (TPUs) – created by Google for AI and machine learning workloads – as well as NICs and storage. CXL offers low latency and memory coherence and, being an open technology that builds on PCIe, can reduce the need to rely on proprietary memory interconnects.

CXL also has a broad range of support, from chip makers like Intel, AMD, Ampere, Nvidia and Arm to hardware manufacturers like Dell EMC, Cisco Systems, Hewlett Packard Enterprise, Oracle and IBM. Cloud-based companies like Google, Facebook, Alibaba and Microsoft also are on board.

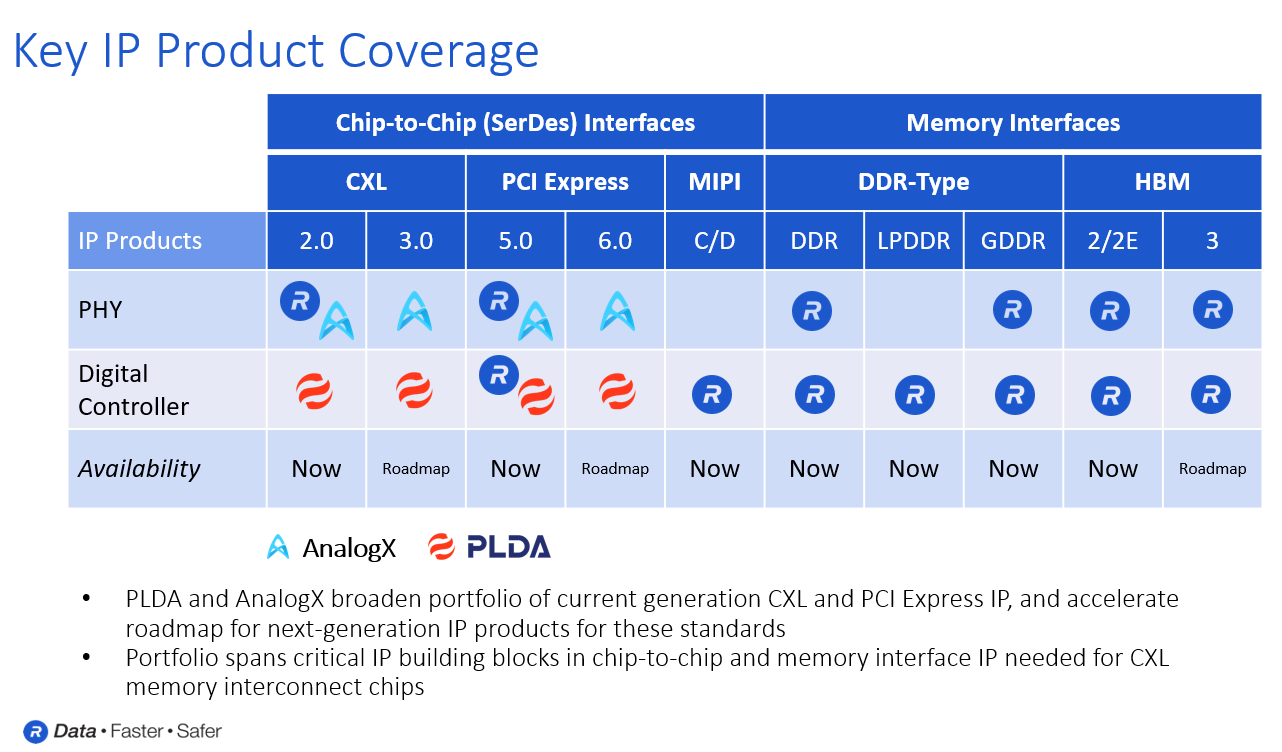

Rambus this year announced two acquisitions aimed at bolstering its CXL strategy. On June 16, the company announced it was buying PLDA for its CXL and PCIe controller and switch IP and acquiring AnalogX for its PCIe 5.0 and SerDes technologies. The same day, Rambus announced the creation of the CXL Memory Interconnect Initiative to accelerate the drive toward CXL. The PLDA and AnalogX acquisitions are part of Rambus’ initial research focus on semiconductor solutions for memory expansion and pooling use cases.

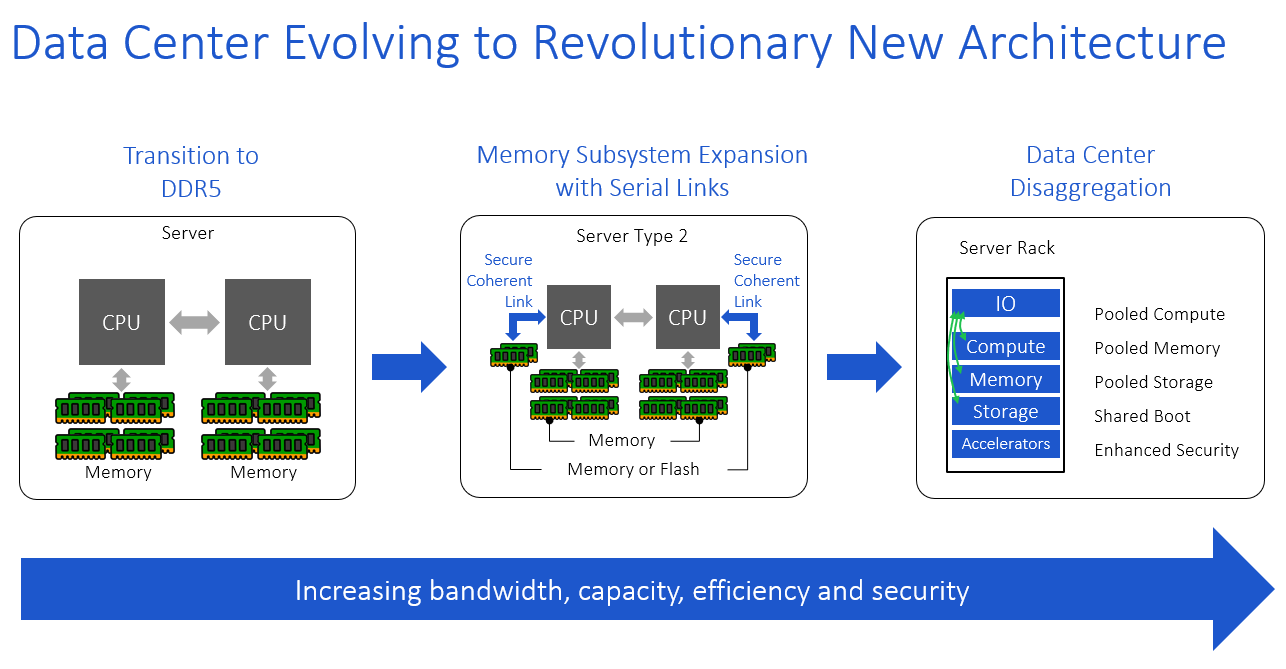

Rambus sees a multi-step process as the industry moves toward a more disaggregated datacenter environment, Woo says.

“We’re also seeing this desire to be able to add memory in auxiliary channels, something that’s outside of a DIMM socket,” he says. “It would be really nice to expand the memory capability of an individual server. Then further out, people have talked about this disaggregation thing, where they want that ability to move data outside of the server chassis to put it into a pool and be able to really provision it as needed and return it to the pool when they’re done. The feeling is you can run much bigger workloads, get better efficiencies and really get more out of your datacenter. That’s something that’s in the interest of everyone. Those are the things that are driving the way we think about this and the way we evaluate the opportunities.”

The first step is the transition to DDR5 memory, which Rambus has been working on for several years and which will be supported in Intel’s next-generation “Sapphire Rapids” Xeon server chips. After a delay, those chips are now expected to hit production in the first quarter of next year. Intel will also debut CXL links in Sapphire Rapids.

“For the first time, what we’ll have is these platforms that you can actually add memory not only in directly-connected DIMM sockets, but also off of these serial link-based channels,” he says, adding that companies will use PCIe 5.0 to start. “It is leveraging a technology that now allows you to add memory beyond just the DIMM sockets. Further out down the line is this discussion about disaggregation. Once you have the CXL interconnects enabled in a platform, like what is going to happen with Sapphire Rapids, it makes it much easier to do something like disaggregation. It’s a two-step process to get there.”

Below is a look at the product coverage areas enabled by both Rambus’ in-house innovation and the acquisitions of PLDA and AnalogX:

Matt Jones, general manager of IP cores at Rambus, tells The Next Platform that the first deployments will be around DDR, but with a CXL-enabled buffer to handle the types of chores managed by DIMMs today.

“Where we see the acquisitions playing is, from an IP standpoint, giving us key building blocks for building a chip like this, for example, by enabling others in the industry to build similar chips with our IP, so having the ability to both internally and externally sell that device or that IP as we so choose,” Jones says. “You see again here fundamentally on the upstream side, the host interface being CXL and the fine control over PHY, in this case coming from AnalogX or a controller from PLDA. On the downstream side, again, the controller in PHY, those DDR assets that we talked about having historically at Rambus.”

It gets more complicated with memory interfaces, with the expected disaggregation in the datacenter, he says.

“Once you’ve got these CXL interconnects in place, there’s a whole lot you can take advantage of based on their high-bandwidth, low-latency characteristics to interconnect hosts and resources in the system,” Jones says. “We see the device evolving here into one that supports multiple hosts on the upstream side and being able to share efficiently a pool of memory on the downstream side so that you can assign multiple hosts to efficiently share that memory. The key building blocks are here from an IP standpoint that tie back to the acquisitions we made on the PHY and the controller on both sides. It gets a little more complex than that in the pooling space of managing the cross bar and the sharing, but with the cache coherency and some of the other hooks that are in CXL, it does make this possible with some of the know-how that Rambus brings to it.”

What’s driving all this are the ongoing changes in the datacenter, and CXL is the enabling technology for the disaggregated future. How that disaggregated future plays out isn’t easy to predict, Woo says. The industry knows which platforms are being released next year, and later in that year there will be platforms with CXL and the ability to add modules.

“Given how people are talking about it, they’d like in a couple to a few years to start seeing solutions where memory is getting disaggregated,” he says. “Some of that will depend on the infrastructure that gets put in place on those first platforms, but certainly the timeframe people are talking about is in the couple to few years.”
