It seems like a question a child would ask: “Why are things the way they are?”

It is tempting to answer, “because that’s the way things have always been.” But that would be a mistake. Every tool, system, and practice we encounter was designed at some point in time. They were made in particular ways for particular reasons. And those designs often persist like relics long after the rationale behind them has disappeared. They live on – sometimes for better, sometimes for worse.

A famous example is the QWERTY keyboard, devised by inventor Christopher Latham Sholes in the 1870s. According to the common account, Sholes’s intent with the QWERTY layout was not to make typists faster but to slow them down, as the levers in early typewriters were prone to jam. In a way it was an optimization. A slower typist who never jammed would produce more than a faster one who did.

New generations of typewriters soon eliminated the jamming that plagued earlier models. But the old QWERTY layout remained dominant over the years despite the efforts of countless would-be reformers.

It’s a classic example of a network effect at work. Once sufficient numbers of people adopted QWERTY, their habits reinforced themselves. Typists expected QWERTY, and manufacturers made more QWERTY keyboards to fulfill the demand. The more QWERTY keyboards manufacturers created, the more people learned to type on a QWERTY keyboard and the stronger the network effect became.

Psychology also played a role. We’re primed to like familiar things. Sayings like “better the devil you know” and “If it ain’t broke, don’t fix it,” reflect a principle called the Mere Exposure effect, which states that we tend to gravitate to things we’ve experienced before simply because we’ve experienced them. Researchers have found this principle extends to all aspects of life: the shapes we find attractive, the speech we find pleasant, the geography we find comfortable. The keyboard we like to type on.

To that list I would add the software designs we use to build applications. Software is flexible. It ought to evolve with the times. But it doesn’t always. We are still designing infrastructure for the hardware that existed decades ago, and in some places the strain is starting to show.

The Rise And Fall Of Hadoop

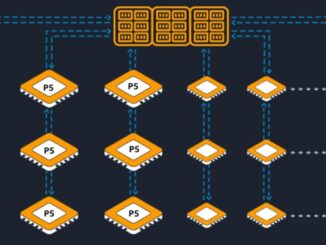

Hadoop offers a good example of how this process plays out. Hadoop, you may recall, is an open-source framework for distributed computing based on white papers published by Google in the early 2000s. At the time, RAM was relatively expensive, magnetic disks were the main storage medium, network bandwidth was limited, files and datasets were large and it was more efficient to bring compute to the data than the other way around. On top of that, Hadoop expected servers to live in a certain place – in a particular rack or data center.

A key innovation of Hadoop was the use of commodity hardware rather than specialized, enterprise-grade servers. That remains the rule today. But between the time Hadoop was designed and the time it was deployed in real-world applications, other ‘facts on the ground’ changed. Spinning disks gave way to SSD flash memory. The price of RAM decreased and RAM capacity increased exponentially. Dedicated servers were replaced with virtualized instances. Network throughput expanded. Software began moving to the cloud.

To give some idea of the pace of change, in 2003 a typical server would have boasted 2 GB of RAM and a 50 GB hard drive operating at 100 MB/sec, and the network connection could transfer 1 Gb/sec. By 2013, when Hadoop came to market, the server would have 32 GB of RAM, a 2 TB hard drive transferring data at 150 MB/sec, and a network that could move 10 Gb/sec.
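As a rough illustration of what those figures imply, the sketch below works out how long a full sequential read of each disk would take and how network throughput compares with disk throughput. The `Server` class and its method names are made up for this example, the numbers are the ones quoted above, and decimal units (1 GB = 1,000 MB, 8 bits per byte) are assumed.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical container for the hardware figures quoted in the text."""
    year: int
    ram_gb: float
    disk_gb: float
    disk_mb_per_s: float
    net_gbit_per_s: float

    def full_scan_seconds(self) -> float:
        # Time to read the entire disk sequentially at its rated throughput.
        return self.disk_gb * 1000 / self.disk_mb_per_s

    def net_mb_per_s(self) -> float:
        # Convert gigabits per second to megabytes per second.
        return self.net_gbit_per_s * 1000 / 8

    def net_to_disk_ratio(self) -> float:
        # Values above 1 mean the network can move data faster than one disk can supply it.
        return self.net_mb_per_s() / self.disk_mb_per_s


SERVER_2003 = Server(year=2003, ram_gb=2, disk_gb=50, disk_mb_per_s=100, net_gbit_per_s=1)
SERVER_2013 = Server(year=2013, ram_gb=32, disk_gb=2000, disk_mb_per_s=150, net_gbit_per_s=10)

for s in (SERVER_2003, SERVER_2013):
    print(
        f"{s.year}: full disk scan {s.full_scan_seconds():,.0f} s, "
        f"network/disk throughput ratio {s.net_to_disk_ratio():.2f}"
    )
```

Under these assumptions, disk capacity grew 40-fold while disk throughput grew only 1.5-fold, so a full scan went from 500 seconds to more than three and a half hours. Over the same period the network went from roughly matching a single disk (125 MB/sec against 100 MB/sec) to outrunning it about eightfold.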

Hadoop was built for a world that no longer existed, and its architecture was already deprecated by the time it came to market. Developers quickly left it behind and moved to Spark (2009), Impala (2013), and Presto (2013) instead. In that short time, Hadoop spawned several public companies and received breathless press. It made a substantial – albeit brief – impact on the tech industry even though by the time it was most famous, it was already obsolete.

Hadoop was conceived, developed, and abandoned within a decade as hardware evolved out from under it. So it might seem incredible that software could last fifty years without significant change, and that a design conceived in the era of mainframes and green-screen monitors could still be with us today. Yet that’s exactly what we see with relational databases.

The Uncanny Persistence Of RDBMS

The design in question is the Relational Database Management System, or RDBMS for short. By technological standards, RDBMS design is quite old, much older than Hadoop, originating in the 1970s and 1980s. The relational database predates the Internet. It comes from a time before widespread networking, before cheap storage, before the ability to spread workloads across multiple machines, before widespread use of virtual machines, and before the cloud.

To put the age of RDBMS in perspective, the popular open source Postgres is older than the CD-ROM, originally released in 1995. And Postgres is built on top of a project that started in 1986, roughly. So this design is really old. The ideas behind it made sense at the time, but many things have changed since then, including the hardware, the use cases and the very topology of the network.

Here again, the core design of RDBMS assumes that throughput is low, RAM is expensive, and large disks are cost-prohibitive and slow.

Given those factors, RDBMS designers came to certain conclusions. They decided storage and compute should be concentrated in one place with specialized hardware and a great deal of RAM. They also realized it would be more efficient for the client to communicate with a remote server than to store and process results locally.

RDBMS architectures today still embody these old assumptions about the underlying hardware. The trouble is those assumptions aren’t true anymore. RAM is cheaper than anyone in the 1960s could have imagined. Flash SSDs are inexpensive and incredibly responsive, with latency of around 50 microseconds, compared with roughly 10 milliseconds for the old spinning disks. Network latency hasn’t changed as much – still around 1 millisecond – but bandwidth is 100 times greater.

The result is that even now, in the age of containers, microservices, and the cloud, most RDBMS architectures treat the cloud as a virtual datacenter. And that’s not just a charming reminder of the past. It has serious implications for database cost and performance. Both are much worse than they need to be because they are subject to design decisions made 50 years ago in the mainframe era.

Obsolete Assumption: Databases Need Reliable Storage

One of the reasons relational databases are slower than their NoSQL counterparts is that they invest heavily in keeping data safe. For instance, they avoid caching on the disk layer and employ ACID semantics, writing to disk immediately and holding other requests until the current request has finished. The underlying assumption is that with these precautions in place, if problems crop up, the administrator can always take the disk to forensics and recover the missing data.

But there’s little need for that now – at least with databases operating in the cloud. Take Amazon Web Services as an example. Its standard Elastic Block Store system makes backups automatically and replicates freely. Traditional RDBMS architectures assume they are running on a single server with a single point of storage failure, so they go to great lengths to ensure data is stored correctly. But when you’re running multiple servers in the cloud – as you do – if there’s a problem with one you just fail over to one of the healthy servers.

RDBMSs go to great lengths to support data durability. But with the modern preference for instant failover, all that effort is wasted. These days you’ll fail over to a replicated server instead of waiting a day to bring the one that crashed back online. Yet RDBMS persists in putting redundancy on top of redundancy. Business and technical requirements often demand this capability even though it’s no longer needed – a good example of how practices and expectations can reinforce obsolete design patterns.

Obsolete Assumption: Your Storage Is Slower Than Your Network

The client/server model made a lot of sense in the pre-cloud era. If your network was relatively fast (which it was) and your disk was relatively slow (which it also was), it was better to run hot data on a tricked-out, specialized server that received queries from remote clients.

For that reason, relational databases originally assumed they had reliable physical disks attached. But once this equation changed, and local SSDs could find data faster than it could be moved over the network, it made more sense for applications to read data locally. But at the moment we can’t do this because it’s not how databases work.

This makes it very difficult to scale RDBMS, even with relatively small datasets, and makes performance with large data sets much worse than it would be with local drives. This in turn makes solutions more complex and expensive, for instance by requiring a caching layer to deliver the speed that could be obtained cheaper and easier with fast local storage.
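A back-of-the-envelope calculation makes the shift concrete. The sketch below uses the latency figures quoted earlier in the article (about 50 microseconds for flash, 10 milliseconds for spinning disk, 1 millisecond for the network) and a deliberately simplified model in which a remote read costs one network round trip plus one storage access. The function names are invented for this example, and the model ignores transfer time, queueing, and server-side processing.

```python
# Latency figures quoted in the article, expressed in seconds.
SSD_READ_S = 50e-6       # ~50 microseconds per flash read
HDD_READ_S = 10e-3       # ~10 milliseconds per spinning-disk read
NETWORK_RTT_S = 1e-3     # ~1 millisecond network latency


def remote_read_seconds(storage_latency_s: float,
                        network_latency_s: float = NETWORK_RTT_S) -> float:
    """Simplified cost of reading from a remote server: network plus storage."""
    return network_latency_s + storage_latency_s


def remote_penalty(storage_latency_s: float) -> float:
    """How many times slower a remote read is than a local read of the same medium."""
    return remote_read_seconds(storage_latency_s) / storage_latency_s


for name, latency in (("spinning disk", HDD_READ_S), ("flash SSD", SSD_READ_S)):
    print(f"{name}: remote read is {remote_penalty(latency):.1f}x a local read")
```

With spinning disks, going over the network adds about 10 percent to a read, which is easy to ignore. With flash, the same round trip makes the read roughly 21 times slower than a local one, so under this model the network, not the disk, becomes the dominant cost.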

Obsolete Assumption: RAM Is Scarce

RAM used to be very expensive. Only specialized servers had lots of it, so that is what databases ran on. Much of classic RDBMS design revolved around moving data between disk and RAM.

But here again, the cloud makes that a moot point. AWS gives you tremendous amounts of RAM for a pittance. But most people running traditional databases can’t actually use it. It’s not uncommon to see application servers with 8 GB of RAM, while the software running on them can only access 1 GB, which means roughly 90 percent of the capacity is wasted.

That matters because there’s a lot you can do with RAM. Databases don’t only store data. They also do processing jobs. If you have a lot of RAM on the client, you can use it for caching, or you can use it to hold replicas, which can do a lot of the processing normally done on the server side. But you don’t do any of that right now because it violates the design of RDBMS.
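The client-side caching idea can be sketched in a few lines. This is a minimal illustration rather than a recommended design: `ReadThroughCache` and `fake_database_lookup` are hypothetical names, the lookup function merely stands in for a network round trip to a database server, and expiry is a simple time-to-live with no attempt at invalidation when the server-side data changes.

```python
import time
from typing import Callable, Dict, Tuple


class ReadThroughCache:
    """Keep recently read values in client RAM and go to the server only on a miss."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch            # function that performs the remote read
        self._ttl = ttl_seconds        # how long a cached value is trusted
        self._clock = clock            # injectable clock, useful for testing
        self._entries: Dict[str, Tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            self.hits += 1             # served from local memory
            return entry[1]
        self.misses += 1               # absent or expired: ask the server
        value = self._fetch(key)
        self._entries[key] = (now, value)
        return value


def fake_database_lookup(key: str) -> str:
    """Hypothetical stand-in for a query sent to a remote database."""
    return f"row-for-{key}"


cache = ReadThroughCache(fake_database_lookup)
cache.get("user:1")   # miss: goes to the "server"
cache.get("user:1")   # hit: answered from client RAM
print(f"hits={cache.hits} misses={cache.misses}")
```

The catch is staleness: a cached value can be out of date for up to `ttl_seconds`, so a sketch like this trades consistency for speed, and how much staleness is tolerable depends on the application.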

How (And Why) To Smash A Relic

Saving energy takes energy. But software developers often choose not to spend it. After all, as the inventor of Perl liked to say, laziness is one of the great virtues of a programmer. We’d rather build on top of existing knowledge than invent new systems from scratch.

But there is a cost to taking design principles for granted, even if it is not a technology as foundational as RDBMS. We like to think that technology always advances. RDBMS reminds us some patterns persist because of inertia. They become so familiar we don’t see them anymore. They are relics hiding in plain sight.

Once you do spot them, the question is what to do about them. Some things persist for a reason. Maturity does matter. You need to put on your accountant’s hat and do a hard-headed ROI analysis. If your design is based on outdated assumptions, is it holding you back? Is it costing you more money than it would take to modernize? Could you actually achieve a positive return?

It’s a real possibility. Amazon created a whole new product – the Aurora database – by rethinking the core assumptions behind RDBMS storage abstraction.

You might not go that far. But where there’s at least a prospect of positive ROI, it’s a good sign that change is strategic. And that’s your best sign that tearing down your own design is worth the cost of building something new in its place.

Avishai Ish-Shalom is developer advocate at ScyllaDB.

“Postgres is older than the CD-ROM, originally released in 1995.” Well, actually the CD-ROM was announced in 1984 and rolled out by Sony and Denon in late 1985. When I read an article and find facts that I know quoted incorrectly I lose faith in the rest of the information given, some of which is new to me. How do I know that data is accurate?

Database designs continue because they give a good model of abstraction. They provide a good model of implementing a reasonably strict schema and an abstract language, SQL, to retrieve and manipulate data in it.

The reason we continue to use them is because it was a good abstraction and they work. If used appropriately they can scale to volumes needed. Actually ACID is quite important and Postgres gives you a lot of capability of tuning what you consider durable. Happy to consider it committed if replicated to another database but not persisted on disk? Just set your config appropriately. God help you when you try and recover and discover that your replicas have different ideas of how far any transaction got. There is a reason that operators of these systems want to commit things to disk.

The reason Hadoop got bricked was because it solved a bunch of problems that most people didn’t have, it got such hype because the consultants in the industry found a new shiny tool to sell consultancy services around everyone adopting. For most people using Hadoop was like using a hammer to drive in a screw. Actually more like using a sledge hammer.

Hi, but do you think databases will still be useful in 2050?

Snowflake is a complete rethink with no legacy code.

I do not really think this article shows much understanding of why people use RDBMSs.

There are a lot of cases where you need atomicity and consistency and integrity guarantees. The classic simple example is the bank transfer one everyone knows, but there are lots of things you need to happen inside a transaction you can roll back.

The design makes developers’ lives easier, and it makes software more reliable. If the DB guarantees consistency, that the correct type is stored, that required fields are stored, etc. it means less work for developers and bugs that are caught earlier and are easier to debug – it’s a lot easier to replicate an error that happens when data is saved than when it is retrieved.

There are also lots of things that will run on a single big server – and cheap powerful hardware means a lot more will run on a single big server – but RDBMSs have run on clusters for a long time – before AWS was around.

The article misses the point that the RDBMS concept was/is wholly about data integrity and abstraction. It has no relationship to the physical realisation of the data model.

A centralized RDBMS has decades of development and theoretical underpinnings to it, and countless people-hours of experience with it. In short, it is a proven, stable solution. The author’s point is valid that since the theoretical model for the RDBMS was defined, much has changed. However, these newer options do not all have the proven reliability and transactional consistency that RDBMSs have. That’s important to many organizations that have their own and their customers’ money on the line, with legal and financial ramifications.

Try using a NoSQL solution in production and you will run into tons of problems that have long since been solved in an SQL DB. I have used Neo4j, MongoDB, and InterSystems Caché in production, and they all threw up horrible problems in deployment, replication, consistency, and scalability.

POSTGRES or MySQL are so much easier to rely on. Problems rarely occur and have well known solutions, nosql often has weird problems that you could not have imagined would exist. I see them as useful for exploring complex data in unique ways but to run any service you pick sql every time or you will experience pain after a year or two.

There is the tremendously large assumption that RDBMS designs have not changed in 50 years, which is absolutely incorrect. Oracle Grid, RAM persistence, removal of caching, using NVMe have already been implemented. The internal structures have changed, how they are accessed, etc…

The assumptions may be true for Open Source databases that haven’t adapted, but for RDBMS technology in general these assumptions are false. Just look up Exadata or the latest Oracle database features and implementations. Another hole in the assumptions made is that RDBMS databases have always been defined to utilize the maximum amount of memory, either for caching or execution. In the end all that truly matters is if the database focuses on Consistency or performance (eventual Consistency). The fundamental problems that drove ACID did not change with the advances in technology.

*Sigh* Where do I even begin?

I was tempted to stop reading after the first few sentences already. Did you even bother to check your story about QWERTY? The main reason for its design is in fact that it makes typing faster, because alternating hands between commonly used together keys is faster. There were some modifications to ensure letters often used together were not adjacent; and those were indeed made to reduce jamming. But the overall design was for typing speed.

So if you didn’t bother to check this fact, what about the rest?

Well, it didn’t take long for me to reach the next verifiably false claim. “The relational database predates the Internet”. Actually, the Internet started as ARPANET, in the late 1960s. The first relational databases were from the early 1970s. All facts that are incredibly easy to find, if you bother to check your facts.

It gets only worse when you start talking about databases. It seems to me that you decided to rant on a topic you are not really familiar with. So let’s check some of your claims, right?

“For instance, they avoid caching on the disk layer and employ ACID semantics, writing to disk immediately and holding other requests until the current request has finished”

No. Various databases use various techniques to prevent data loss. But writing to disk immediately and holding other requests until the current request has finished is not one of them. Even relatively low end database systems such as MySQL or Access support concurrency.

“The underlying assumption is that with these precautions in place, if problems crop up, the administrator can always take the disk to forensics and recover the missing data.”

BWAHAHAHA!!!

Ever heard of a thing called “backups”? Most database administrators use them, you know.

If problems crop up, we simply restore from backup. Many of the high end database products have mechanisms that effectively boil down to continuous backing up, meaning we can restore to the millisecond before you dropped your coffee on the SSDs in the SAN.

And if we know that outage is expensive, we prepare by setting up a standby failover. Again, techniques are different between vendors and evolve over time, but functionally you could describe it as a spare system that is constantly in the state that a new system would be in after restoring the backup.

“Yet RDBMS persists in putting redundancy on top of redundancy. Business and technical requirements often demand this capability even though it’s no longer needed”

Perhaps true for some. Those businesses can use other solutions (though a relational database might still bring other advantages).

Make sure to have them sign at the dotted line that they can’t sue you if your solution fails and they lose data or availability.

Most of MY customers do tend to care about not losing their business critical data. And many companies in the world lose millions if their systems are down for just an hour or so.

Your next two paragraphs are harder to debunk. Not because they are true, but because they are mere assertions without any evidence or argumentation.

In both cases, your key point appears to be that it would be better to decentralize processing (true in some cases, false in others), and then you assert that “it’s not how databases work” and “it violates the design of RDBMS.”

And that brings me to the conclusion. The overarching arguments of your post appear to be: (1) relational databases are an old technology, there’s new stuff available, so why still use the old; and (2) there are many advancements in hardware and relational databases are still using old assumptions about hardware.

As to the first argument: Kitchen knives are old technology too. Much older than relational databases. And yet we still use them. Why? Because they are still the best tool for the job of cutting foods. There have been innovations in the past decades and they have found their own use for some specific cases, but as an overall tool for generic “we need to cut food”, a knife is still the best solution. Perhaps one day a new tool will be developed that’s better than knives. But until then, we’ll always have them in our kitchen.

Same with relational databases. There are more and more innovative non-relational solutions that are a much better fit for some specific scenarios, and I would always recommend using those specific solutions for those cases. But as a general-purpose tool for large-scale data storage and retrieval, relational databases are still the best idea available so far.

And the second argument is simply bollocks. You seem to think that because the basic idea of relational databases stems from the 1970s, the technology has not changed. Well, I’ve got news for you. Major relational database users are not running their database on Oracle v4 or SQL Server 7.0. They are on Oracle 18c or higher, SQL Server 2017 or higher. And those new versions of relational databases have all been changed to make use of hardware advancements.

Your rant is poorly researched. Your knowledge of how modern databases work seems to be limited or outdated. You also seem to have little grasp on the difference between the (indeed mostly unchanged, like my kitchen knife) basic principles of the relational model on the one hand, and the actual implementations in RDBMS’s on the other hand.

It’s as if you’re criticizing modern car manufacturers and your main argument is that the original T-Ford was designed for a world with limited paved roads and mostly horseback traffic.

Perhaps there were also some valuable observations in your article. If so, then they got lost between all the fallacies.

@hugo;

There’s so much confusion on display in the original article that it makes it difficult to respond coherently. You touched on a lot of the points I think are important, so thanks for taking the time and effort.

@hugo kornelis.

Having read your comment, I was inspired to re-read the article, looking for accuracies:

Close to the end: “Amazon created a whole new product – the Aurora database – by rethinking the core assumptions behind RDBMS storage abstraction.”

Looking at the product page it seems Aurora is an RDBMS that is Postgres/MySQL compatible.

…and so whilst that bit of the article is also inaccurate, I learnt something and will give Aurora a closer look.

There is a kernel of truth in the article: the Relational Model has outlived many technologies that were predicted to replace it, most recently Hadoop, which is indeed a legacy technology. Hadoop still survives in the form of HDFS, the Hadoop Distributed File System that underpins many of the technologies that replaced Map/Reduce. HDFS is also a legacy (always was – GFS was always at the OS level and distributed file systems have been standard in HPC for decades). HDFS is difficult to replace because persistent storage is difficult to replace.

The relational model has evolved to include in-memory databases, column-store structures and typed blob storage (Objects, then XML, then JSON) and even Graph and block-chain. In most cases the distinction between “NoSQL” databases and RDBMS is a cost equation rather than functionality.

Globally distributed databases with eventual consistency are the current area of change, which is already seeing overlap with block-chain concepts of a non-updatable ledger as trusted block-chain technology evolves.

The big renascence of relational technology will come with GPGPU optimised table/index scan that will render the current generation of NoSQL databases obsolete. Expect to see more query-engine functions move to block storage devices, when ICL’s 1970s idea of the Content Addressable File Store (CAFS) finally comes of age.

Read the article. Read the comments. Re-read the article.

The core assertion here seems to be that ACID is no longer relevant to databases, or I missed something. You seem to be lobbying for more of a database that functions as an air traffic controller allowing data to fly in and out with most critical processes being carried out at destination points rather than the origination point by arguing that it is so much cheaper now to have tons of distributed computing (which I think is also the reason you asserted Hadoop was scrapped?).

If you scale back your argument a bit and decide to target only data consuming applications like a BI platform where data changes on a predictable time schedule (daily/hourly/etc) I could support offloading some of the calculation work onto a client machine, but that might also bring up some data security issues.

A lot of these relational databases are also moving to web platforms where you gain access through a web browser and I don’t believe that those have anywhere near the technical focus on optimizing my database level transactions that the SQL/Oracle teams do.

Yes, the ever-changing relative performance has a lot to do with database design. But I wonder whether you might have wanted to attack this issue by starting off with the concept of a Transaction and all that that implies. And under that is the notion of ACID … Atomicity, Consistency, Isolation, and Durability. This further implies support of massive concurrency and durability over all sorts of failures. Various databases play around with each of these, but whether folks think about it or not, they are all expecting that these attributes are met. We all individually believe that we are the one and only user of a database and that whatever we do with the database, when we are done, everything that we did will remain indefinitely. We believe that, but the reality is very different than that, and all sorts of DB architecture exists to hide all that reality. We don’t want to know that there could be thousands of others just like us concurrently mucking with the same data. Sure, we can speed up where and how the data becomes persistent, but a heck of a lot of that architecture comes from the basic fact that our processors are tied to a memory that has no real persistence and the persistence is available outside of that processor complex, out in IO space. The latency involved in that distinction has a way of limiting a DBMS’ throughput and from there the response time at high throughput. If what we are after is a major change in DBMS architecture, it would seem to require starting with a way that persistence becomes very close to the processor, if not directly accessible to and addressable by the processors.

As a related thought, all that a database really is is persistent data, no matter the format, which follows the normal rules of Transactions. As you likely know that data need not be rows and columns. It could be exactly as complex as any data structure that any program can create. The only difference is that whatever addressing it uses must also be capable of being understood within the persistent storage. It’s still got to make sense no matter the number of failures or power cycling. (Addressing cannot be the usual process-local addressing that all our programs tend to use, but there are all sorts of alternatives.) That is part of the database design as well, and there are a lot of different ways that that data could be represented. So, yes, it strikes me that database designs have been changing, merely because there are so many ways to represent persistent data, data which is accessed via Transactions, all the while maintaining ACID. Still, hardware architecture continues to change as well, and database design will be following that.

We still use RDBMS because they are like bits or integers or Turing Machines – they don’t just go away.

They are changing, and most support new physical and logical data formats, write-only styles, columnar storage, and other quirky things that pre-relational systems had but relational bypassed for good reasons or bad reasons.

Or the alphabet, we still use this old relic.