As server racks become more thermally dense, the costs and logistics of conventional cooling become ever more challenging. These difficulties are compounded in high performance computing, where systems can be two to three times as computationally dense as standard datacenter machinery. While that has encouraged a number of HPC customers to switch from air cooling to hot and warm water cooling, the more dramatic shift to liquid immersion cooling offers some interesting possibilities.

In a nutshell, with immersion cooling, or IC, you dunk the servers in a large tank that contains either mineral oil or some other inert liquid. The idea is that since all the components are completely surrounded by the liquid, temperatures cannot rise too much, typically no higher than 50 degrees Celsius, or about 122 degrees Fahrenheit. That’s well within optimal operating temperatures for processors and memory components. Pumps are used to keep the temperature relatively even throughout the tank, but there are no fans, so there is very little noise to disturb people in adjoining rooms.

Diarmuid Daltún at Submer, a Barcelona-based supplier of turnkey IC systems, recently laid out some of the more compelling advantages of this technology, as well as some of its downsides. Daltún also brought in Daniele Rispoli, an HPC specialist and engineering manager at ClusterVision, to discuss his experiences in setting up immersive-cooled systems.

To put the problem in perspective, we estimate that average rack power densities in datacenters these days range from 7 to 10 kW. For HPC systems, that average is a good deal higher, around 16 kW, estimated Rispoli, with some systems trending into 30 kW territory. Keep in mind, these high performance servers tend to use higher-bin CPUs than those in generic datacenter hardware. And with the increasing use of 200-plus watt GPU accelerators and manycore CPUs in HPC, compute densities are accelerating. As a consequence of the thermal load of these systems, they often use dual cooling, employing both computer room air conditioning (CRAC) and rear door heat exchangers (RDHX).

Daltún says that’s what they are using to cool the MareNostrum 4 supercomputer at the Barcelona Supercomputing Centre. As we wrote last June, that system uses Lenovo’s RDHX units on the racks, which can drive power usage effectiveness (PUE) down to 1.2 to 1.4, compared to 1.5 to 2.0 on systems using only air cooling. As we also noted, a more advanced warm water cooling system from Lenovo, now installed as part of the SuperMUC-NG supercomputer at the Leibniz Supercomputing Centre (LRZ) in Germany, is said to save around 45 percent of cooling costs compared to an air-cooled machine.

According to Daltún, Submer’s “SmartPod” gear can do a good deal better than that, driving the PUE all the way down to 1.03. Thus, cooling costs are reduced by 96 percent compared to an air-cooled setup. Moreover, datacenter space is reduced by about 75 percent since you can pack everything a lot tighter.
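The 96 percent figure follows from how PUE is defined: total facility power divided by IT power, so anything above 1.0 is overhead. A rough sketch of that arithmetic in Python is below. It assumes, for simplicity, that all of the non-IT overhead is cooling, and the function names are ours for illustration rather than anything supplied by Submer.

```python
def overhead_fraction(pue: float) -> float:
    """Non-IT power drawn per unit of IT power, as implied by a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return pue - 1.0


def overhead_reduction(pue_baseline: float, pue_new: float) -> float:
    """Fractional cut in overhead power when moving from one PUE to another.

    Assumption: all overhead is treated as cooling, which overstates
    cooling's share in a real facility (lighting, power conversion, etc.).
    """
    baseline = overhead_fraction(pue_baseline)
    if baseline == 0.0:
        raise ValueError("baseline PUE of 1.0 has no overhead to reduce")
    return 1.0 - overhead_fraction(pue_new) / baseline


if __name__ == "__main__":
    # Air-cooled PUE range quoted in the article, plus its midpoint.
    for pue_air in (1.5, 1.75, 2.0):
        cut = overhead_reduction(pue_air, 1.03)
        print(f"air-cooled PUE {pue_air:.2f} -> overhead cut {cut:.0%}")
```

Against the air-cooled PUE range of 1.5 to 2.0, this works out to a reduction of roughly 94 to 97 percent, with 96 percent corresponding to a baseline PUE of about 1.75.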

To drive home the point, Daltún conjured up a 10 MW theoretical supercomputer powered by 2,200 generic CPUs and 17,600 Nvidia T4 GPUs as an example of a machine delivering 1.1 peak exaflops – FP16/FP32 tensor flops, that is. He chose the T4s because they are datacenter-friendly in the sense that they top out at 75 to 100 watts. With immersive cooling, that system could fit in 233 square meters (2,508 square feet), or about the size of a large suburban home.
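The headline numbers for this hypothetical machine can be sanity-checked with a few lines of Python. The sketch below assumes a peak of 65 teraflops of mixed-precision tensor throughput per T4, which is Nvidia's published spec rather than a figure from Daltún's presentation, and it takes the 10 MW as the total system power.

```python
# Figures from the article
GPUS = 17_600
CPUS = 2_200
SYSTEM_POWER_KW = 10_000.0   # 10 MW
FLOOR_AREA_M2 = 233.0

# Assumptions (not from the article)
T4_TENSOR_TFLOPS = 65.0      # Nvidia's published FP16/FP32 tensor peak per T4
SQFT_PER_M2 = 10.7639        # standard conversion factor

peak_exaflops = GPUS * T4_TENSOR_TFLOPS * 1e12 / 1e18
gpus_per_cpu = GPUS / CPUS
area_sqft = FLOOR_AREA_M2 * SQFT_PER_M2
power_density_kw_per_m2 = SYSTEM_POWER_KW / FLOOR_AREA_M2

if __name__ == "__main__":
    print(f"peak tensor performance: {peak_exaflops:.2f} exaflops")
    print(f"GPUs per CPU:            {gpus_per_cpu:.0f}")
    print(f"floor area:              {area_sqft:,.0f} square feet")
    print(f"power density:           {power_density_kw_per_m2:.1f} kW per square meter")
```

Under those assumptions the peak comes to a little over 1.1 exaflops with eight GPUs per CPU, and the floor would have to dissipate roughly 43 kW per square meter.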

By his calculation, you could save about €12.3 million ($9.9 million) per year in energy costs alone for such a system. When you take into account the upfront cost of constructing a new datacenter, additional savings are possible since you’ve compressed the infrastructure into 25 percent of the space of an air-cooled facility. Daltún estimated just the land would amount to a savings of between €1.18 million and €1.73 million, depending on real estate prices. An additional €2.9 million to €3.3 million could be saved in building costs. That money can be applied to better hardware or simply more nodes, said Daltún.
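How an annual energy saving of that order arises can be sketched from the PUE figures alone. The Python below is a back-of-the-envelope model, not Daltún's calculation: it assumes the machine draws its full 10 MW of IT load around the clock, takes an air-cooled baseline PUE of 1.75 (the midpoint of the range cited earlier), and uses a placeholder electricity price that is purely hypothetical.

```python
HOURS_PER_YEAR = 8760


def annual_energy_saved_mwh(it_load_mw: float,
                            pue_baseline: float,
                            pue_new: float) -> float:
    """Facility energy saved per year (MWh) for a constant IT load."""
    return it_load_mw * (pue_baseline - pue_new) * HOURS_PER_YEAR


def annual_cost_saved(it_load_mw: float,
                      pue_baseline: float,
                      pue_new: float,
                      price_per_mwh: float) -> float:
    """Energy cost saved per year, in whatever currency the price uses."""
    saved = annual_energy_saved_mwh(it_load_mw, pue_baseline, pue_new)
    return saved * price_per_mwh


# Assumptions: constant 10 MW load, baseline PUE of 1.75, and a
# hypothetical price of 150 euros per MWh chosen only for illustration.
HYPOTHETICAL_PRICE_EUR_PER_MWH = 150.0

saved_mwh = annual_energy_saved_mwh(10.0, 1.75, 1.03)
saved_eur = annual_cost_saved(10.0, 1.75, 1.03, HYPOTHETICAL_PRICE_EUR_PER_MWH)

if __name__ == "__main__":
    print(f"energy saved: {saved_mwh:,.0f} MWh per year")
    print(f"cost saved:   {saved_eur:,.0f} euros per year")
```

With these inputs the energy saved is about 63,000 MWh per year. The monetary figure scales linearly with both the electricity price and the gap between the two PUE values, so the result is only as good as those two assumptions.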

Not everything gets built from scratch. Rispoli said the customer often wants to reclaim an existing space at a facility and turn it into a machine room. “There are people in small research centers that happen to have a room that’s not used for anything and they just end up fitting their equipment in there,” he explained, “which of course creates all kinds of headaches because the room is not meant to be hosting machines.”

In Rispoli’s experience, these reclaimed rooms are often close to offices where people work, so the noise from all the fans becomes a problem. And since the air is not filtered, they sometimes will just take a normal office-supply air conditioner and “crank it up to 11 and pretend that’s good enough to cool these machines.”

Potentially, retrofitting a reclaimed space for immersive cooling is fairly simple. An IC-based cluster that ClusterVision installed in the UK was placed in an unused lab at a customer’s center. According to Rispoli, it was much easier to place a couple of tanks in there with submerged nodes than to retrofit the entire room with raised floors, cable trays on the ceiling, and CRAC units everywhere.

Keeping all the server componentry at 50C or less means the lifetime of the hardware can be extended. Plus, it often becomes feasible to overclock processors to squeeze another 20 or 30 percent of performance out of them. Even without overclocking, IC enables these chips to be run at their top frequencies for much longer periods of time.

“A lot of people think that with immersive cooling, your processors and your GPUs will be running hotter than they would in air, because we talk about a higher set point,” said Daltún. “However this is not the case.” Under 100 percent load in an air-cooled datacenter, processor temperatures can easily run into the mid-70s, causing CPUs and GPUs to throttle back.

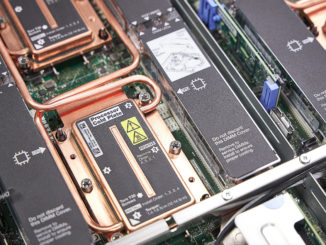

Not everything is sunshine and roses, however. Rispoli admitted that if you have to do maintenance work on the hardware, it’s a bit more complex in an IC setup. For example, to replace a memory module on a standard air-cooled server, you just slide out the box, snap in a new DIMM, and you’re done. But for submerged servers, you have to pull the server out of the tank and let the liquid drip off before doing anything, which costs extra time and clean-up.

Also, the servers can’t have any moving parts in them, such as spinning disks, which limits your local storage choices significantly. Plus, the thermal paste used on processors tends to dissolve in the oil or synthetic fluid used in these systems. Submer replaces the paste with indium foil, but according to Daltún, some manufacturers have developed a special thermal paste that doesn’t diffuse into these fluids.

Because of these kinds of special requirements, not all server suppliers are on board with IC. Some will void their warranty if you even remove the servers from the cabinet. Daltún admits a number of the traditional OEMs can be difficult to work with, but pointed to IBM, Lenovo, Inspur, and Gigabyte as examples of vendors who are now offering IC-compatible products. For those lagging behind, he encouraged customers to pressure their suppliers, reminding them that when you order multi-million-dollar machines, you have some leverage with system vendors.

That said, Rispoli thinks the biggest challenge right now is that customers are just not familiar with the technology and its advantages. From his perspective, increasing the use of immersive cooling will require educating people on what it can offer and then how to operate it. When you’re sinking a million or more dollars into a system, people tend to get pretty conservative with their investment, but as Rispoli noted, “submerging servers is not the end of the world.”

Hi, nice article! CGG has been using oil immersion cooling (Green Revolution gear) in its Houston data center for maybe 7+ years now. While the learning curve was painful, it is quite stable and energy efficient today. There are many advantages to the technology, several of which are covered in the article. The disadvantages can and have been dealt with, at least for our use case, high throughput computing (HTC). Remarkably, one of our current hurdles to further exploiting the technology is lack of commodity server systems with high enough thermal density! Thanks for covering this topic – Ted Barragy

Impressive advances on cooling technology, but all these complications are quite disappointing, bearable though.

“these days range between 7 to 10 KW. For HPC systems, that average is a good deal higher — around 16 KW”. really? I’ve been a long time in the FZJ “Jülich Super Computing Center”. There was BlueGeneQ system with 10 KW/rack idle power, with load 60-80KW/Rack. The entire system was cooled by water! Inlet Temp was 15-20°C Outlet 30-40°C. Think “we can cool systems with 16KW ‘backdoors'” is not a new thing, it’s a step back.