The need to host, process, and transmit more data, in less time and more securely, is putting huge strain on existing datacenter network, server, and storage architectures, with the demands of specific applications like artificial intelligence, machine learning, image recognition, and data analytics exacerbating the problem.

When they add new platforms, many organizations now prefer to buy software-defined object, block, and file storage from vendors such as Red Hat, or to use open source distributions like OpenStack, sometimes underpinned by or running side-by-side with hyperconverged infrastructure (HCI) storage stacks from the likes of Nutanix, Dell EMC, Hewlett Packard Enterprise, and Cisco Systems. Moreover, spinning disk is rapidly being replaced by more efficient all-flash solutions, with Gartner predicting that by 2021, 50 percent of all datacenters will use solid state drives (SSDs) for high performance workloads. All of which means that current storage area networks (SANs) just cannot cut it any more.

Fibre Channel Falling Behind

That is particularly true of Fibre Channel SANs, the demise of which has been predicted for decades, though a huge install base, ease of management, and isolation of block storage traffic appear to ensure their short-term relevancy. The problem for Fibre Channel now is that another of its historical advantages, a regularly updated roadmap offering a well-defined upgrade path, looks like it is petering out, and the technology appears to have peaked in terms of future performance enhancements. Similarly, Fibre Channel no longer holds the performance advantage, as Ethernet speeds have recently surpassed Fibre Channel speeds.

Ethernet Storage Fabric And RoCE

One of Fibre Channel's key advantages over Ethernet looks to have been eroded further by the emergence of what Mellanox has dubbed the Ethernet Storage Fabric (ESF). ESF supports storage-aware features such as intelligent networking and storage offloads, including TCP, RDMA, NVMe over Fabrics (NVMe-oF), erasure coding, T10 DIF, and encryption, to deliver the highest levels of performance and the lowest latency in a lossless fabric. Mellanox switches also feature zero packet loss (no avoidable packet loss, for example due to traffic microbursts) and deliver that performance fairly and consistently. This answers customers' growing desire for Ethernet-based storage, which can support block, file, and object storage while simultaneously handling compute and storage traffic.

ESF, which supports the same type of remote direct memory access (RDMA) as InfiniBand, can use RDMA to bypass the TCP/IP stack and enable direct server-to-server and server-to-storage data transfers between application memories without loading the processing overhead onto the CPU. This allows ESF to deliver significant performance and efficiency gains. RDMA is now being deployed in cloud, storage, and enterprise Ethernet networks in the form of RDMA over Converged Ethernet (RoCE, pronounced "Rocky," and currently found in Microsoft's Azure cloud and other hyperscaler clouds) to reduce latency and server CPU/IO bottlenecks, for example. Best of all, ESF supports RoCE on either lossy or lossless networks and can simultaneously support TCP/IP traffic on the same fabric.

And using RDMA alongside the NVMe-oF storage protocol for data transfers between the host and attached storage systems can bring latencies down even further, from milliseconds to microseconds. Though the technology is just beginning to be adopted by the enterprise market, it has been deployed by hyperscale datacenters for years. It is envisaged that NVMe interfaces will continue to replace existing technologies like SAS and SATA to support the full line rates needed by machine learning (ML) and artificial intelligence (AI) applications, and to further exploit the adoption of RDMA.

Intelligent NICs, or SmartNICs, provide hardware offloads to reduce latency and free up CPU on the storage initiator and target, so both servers and storage have more CPU power for running applications or storage features. Other advanced functions are available, like support for Open vSwitch (OVS) virtual switches and overlay networks such as VXLAN, which potentially save owners and operators money on additional network switch hardware elsewhere. SmartNICs also offer faster, more efficient security via embedded firewalls and the offloading of encryption from the server onto the SmartNIC itself.

InfiniBand Marches On

With Fibre Channel out of the running, it now looks like a straight fight between InfiniBand and Ethernet to provide the high-speed storage fabrics that datacenters want in order to future-proof their operations. And unlike Fibre Channel, both technologies have ambitious roadmaps.

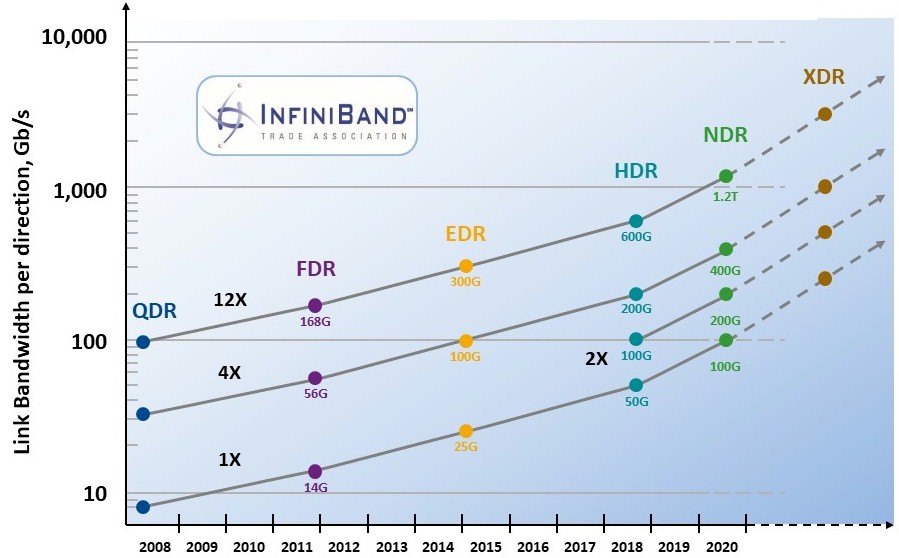

The InfiniBand Trade Association (IBTA) published a roadmap outlining 1x, 2x, 4x and 12x port widths with bandwidth reaching 600 Gb/sec in 2018, depending on the capacity per lane (either 100 Gb/sec or 125 Gb/sec) and the number of lanes per server. InfiniBand is a good fit for server interconnects over short-distance passive and active copper cables in HPC datacenters but can also be deployed over transceivers and optical cables over distances of up to 10 kilometers for inter-datacenter connections.

InfiniBand has a lot of support among the HPC community and across some hyperscalers and cloud builders, and its advantages are not limited to throughput and low latency. With the HDR flavor, InfiniBand now has the headroom for expansion that even hyperscale datacenters require, supporting maximum cluster sizes of up to 40,000 nodes, which can be extended further by InfiniBand routing. It has traditionally offered lower latency than Ethernet, while users laud the higher wire speeds, larger packet sizes, dynamic routing capabilities, and simple-to-use multipathing it provides.

Manufacturers are slugging it out to get 100 Gb/sec and 200 Gb/sec interconnects to market. Mellanox is currently shipping Enhanced Data Rate (EDR) InfiniBand components that combine four lanes of 25 Gb/sec into 100 Gb/sec of total bandwidth. The company started shipping 200 Gb/sec High Data Rate (HDR) InfiniBand last year (a change from NRZ to PAM-4 signaling is what makes the 25 Gb/sec per lane physical signaling look like 50 Gb/sec per lane) and has a faster Next Data Rate (NDR) InfiniBand also on the horizon, with native 100 Gb/sec signaling per lane. At the extreme end of Mellanox's roadmap is the Extended Data Rate (XDR) InfiniBand specification, potentially running at 1 Tb/sec across the four lanes that are typically used to make a server port, at some indeterminate point in the future. This implies 250 Gb/sec signaling per lane, and it is not yet clear what mix of native signaling, data encoding, and modulation will be used to reach this goal.
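The lane arithmetic behind these figures is simple enough to sketch in a few lines of Python. This is only an illustration: the names `LANE_RATE_GBPS` and `port_bandwidth_gbps` are made up for this example, and the per-lane rates are the effective data rates quoted above (HDR's 50 Gb/sec per lane is inferred from 200 Gb/sec spread across four lanes).

```python
# Effective per-lane data rates in Gb/sec, as quoted in the article.
# The HDR value is an inference: 200 Gb/sec divided across four lanes.
LANE_RATE_GBPS = {"EDR": 25, "HDR": 50, "NDR": 100, "XDR": 250}

# Port widths listed on the IBTA roadmap (1x, 2x, 4x and 12x).
PORT_WIDTHS = (1, 2, 4, 12)


def port_bandwidth_gbps(generation: str, lanes: int = 4) -> int:
    """Aggregate port bandwidth = per-lane rate x number of lanes."""
    if lanes not in PORT_WIDTHS:
        raise ValueError(f"unsupported port width: {lanes}x")
    return LANE_RATE_GBPS[generation] * lanes


if __name__ == "__main__":
    # Print the bandwidth of a typical four-lane server port per generation.
    for generation in LANE_RATE_GBPS:
        print(f"{generation} 4x: {port_bandwidth_gbps(generation)} Gb/sec")
```

Under these assumptions, a four-lane XDR port works out to 1,000 Gb/sec, which is the 1 Tb/sec figure on the roadmap, and a 12x HDR port works out to 600 Gb/sec.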

Ethernet Eyes 1.6 Tbit/sec

But Ethernet too is backed by concrete plans for improved connectivity. The 200 Gb/sec and 400 Gb/sec specifications were ratified by the IEEE 802.3 Ethernet Working Group late last year, and the consortium of suppliers that makes up the Ethernet Alliance is confident it will see 400 Gb/sec links deployed in hyperscale datacenters by 2020. Further specifications ramping capacity up to 800 Gb/sec and 1.6 Tb/sec connectivity could appear as soon as 2023.

Both 200 Gb/sec and 400 Gb/sec support a range of cabling specifications, using either single-mode fiber (SMF) or multi-mode fiber (MMF) based on multiple lanes and fiber strands. These should satisfy the short-range and long-range transmission requirements, backwards compatibility, and power consumption limits in most datacenters, where space and electricity are often at a premium.

The latest Ethernet specifications also moved to address the latency issues caused by the need to perform forward error correction (FEC) prior to data transmission to ensure reliability. Rather than making it optional, the 802.3bs architecture embeds Reed-Solomon FEC in the physical coding sublayer (PCS) for each rate, effectively forcing manufacturers to develop 200 Gb/sec and 400 Gb/sec extender sublayers to support the future development of other PCS sublayers that can use other types of FEC for greater efficiency at a later date.

Ethernet Gathering Momentum

Any timescale for the deployment of the latest InfiniBand or Ethernet technology in either cloud or enterprise datacenters depends on when manufacturers can get suitable components to market, and how keenly they are priced.

The momentum now appears to be very firmly behind Ethernet, however. Sales of Mellanox's InfiniBand ASICs and switches held firm from 2015 through 2018, with occasional spikes here and there, and InfiniBand showed modest growth driven by HPC, ML, and AI projects. But it was demand for the company's ESF networking components and high-performance Ethernet, where Mellanox maintains leadership in Ethernet adapters at 25 Gb/sec and above, that drove most of its revenue growth in 2018, and where it will continue to benefit from a multi-year transition to higher speeds.

Things could change, but no matter which technology wins, networks will become more intelligent. Today, InfiniBand has the lead with technologies like SMART, which accelerate not just HPC but also AI workloads. But Ethernet is also developing rapidly with SmartNIC technology and virtual switch offloads. Ethernet is set to deliver almost everything the modern hyperconverged or software-driven datacenter needs in terms of storage performance, capacity, and management over the long term, and it looks like one technology that would be difficult to bet against. In the end, the networks of the future will be both smarter and faster.
