For exascale hardware to be useful, systems software will have to be stacked up and optimized to bend that hardware to the will of applications. In many ways, this is the hardest part of reaching exascale-class systems.

According to TOP500.org’s November 2018 rankings, five of the top ten HPC systems in the world support advanced research at the United States Department of Energy (DOE). In the number one position, the aptly named “Summit” system housed at the Oak Ridge National Laboratory (ORNL) offers theoretical peak performance above 200,000 teraflops (200 petaflops), backed by 2,397,824 processor cores. That figure represents a stunning achievement.

By 2021, however, the team behind the Exascale Computing Project (ECP) foresees an exascale computing ecosystem capable of 50 times the application performance of the leading 20-petaflops systems, and five times the performance of Summit. With all that prowess on tap, exascale-level systems will be able to solve problems of greater complexity and support applications that deliver high-fidelity solutions more quickly. The applications, though, need a software stack that lets them access that capability. The new Extreme-Scale Scientific Software Stack (E4S) release represents a major step toward the ECP’s larger goal.

The key stakeholders behind the ECP’s mission are the DOE’s National Nuclear Security Administration (NNSA) and Office of Science. These government agencies seek to create increasingly powerful compute tools to tackle the massive workloads associated with complex modeling, simulation, and machine learning challenges in fields like nuclear energy production, national security, and economic analysis. The ECP team, composed of contributors from academia, private industry, and government research, nonetheless envisions a broader scope of use cases in the coming years.

Exascale Performance Empowering Discovery

Robert Wisniewski, chief software architect for extreme scale computing at Intel, is among an elite group of industry HPC experts helping bring the ECP software stack to life. “While the DOE’s needs and advocacy served to initiate the ECP effort, our community envisions the ECP having an impact across a broader scope of scientific and engineering endeavors,” said Wisniewski. “Once deployed, researchers and engineers from the labs, academia, and industry have the opportunity to apply for time on these leadership facility supercomputers. This capability helps drive scientific and engineering innovation.”

While Moore’s Law suggests that compute capabilities will surpass today’s by a significant margin in the coming years, hardware represents only one piece of a much bigger HPC puzzle. Wisniewski’s role focuses on the development of the software stack, a key ingredient in the success of exascale-class machines. According to Wisniewski, “Intel is an advocate of the ECP’s work, and I am excited to be able to contribute to the Extreme-Scale Scientific Software Stack effort.”

Realizing an achievement of this magnitude requires industry collaboration. The ECP must empower the supporting architecture, system software, applications, and experts with the technical skill sets needed to derive the greatest level of performance from modern hardware.

Converged Workloads

As Wisniewski points out, measuring system performance is not just about benchmarks such as Linpack, the linear equations software package. The charter for the exascale machines is to deliver high performance across an increasingly broad set of real applications.

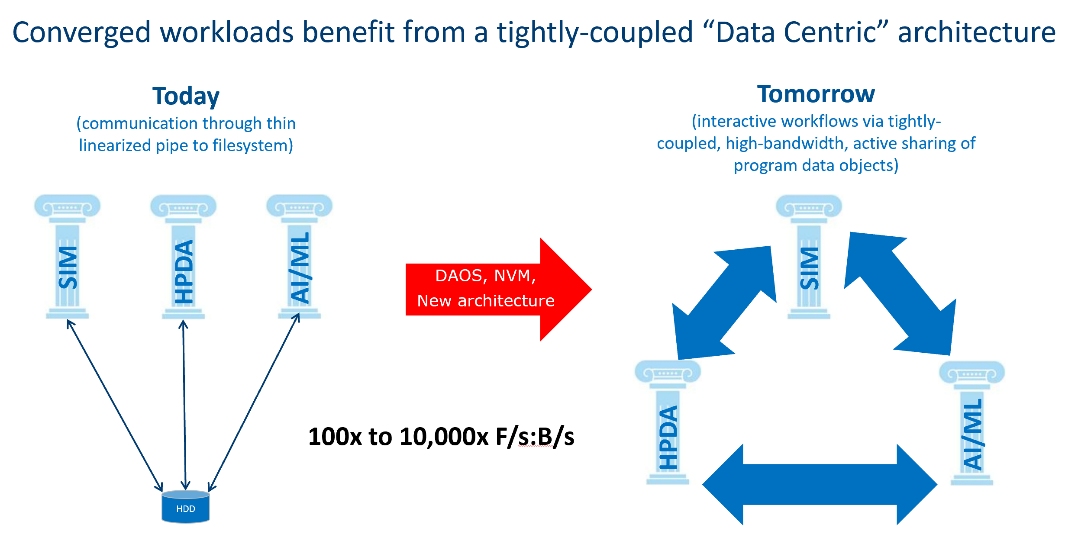

The pursuit of that performance improvement involves longer-term strategic approaches. Today, a data-centric architecture delivers insights from capabilities like high-performance data analytics (HPDA), AI, and simulation. Each of these workflows offers unique strengths, but they are more powerful when tightly integrated. Thus, a valuable direction for exascale and beyond is converging these capabilities so that they operate synergistically and in a more tightly coupled way. For example, rather than AI or HPDA runtimes operating independently and linearly on stored data sets, information could flow more freely through tighter integration of runtimes, programming models, and applications on converged infrastructure. The combined approach enables more rapid discovery of more meaningful insights. Reaching that tightly integrated state still carries a significant cost. Because the infrastructure for each of these pillars grew out of independent development, the ECP Software Technology (ST) team is taking important steps to bring the previously disparate pieces together and get them all to work together smoothly.

Elaborated Wisniewski, “Historically, the code enabling HPC offered substantial prowess for big data and classical simulation. To maximize today’s extreme-scale computing power, we recognize the incredible value which machine learning and deep learning can bring to bear on complex scientific workloads. The combined power of AI, analytics, and simulation offer potent tools for deriving more meaningful insights from data.”

ECP And OpenHPC

The ECP released the Extreme-Scale Scientific Software Stack (E4S) Release 0.1 to a warm reception at the 2018 Supercomputing Conference in Dallas. The package comprises 24 integrated components, including libraries, frameworks, and compilers, that coax higher performance from applications while simplifying the development process with common tools.

The ECP team anticipates incremental releases and feature updates at roughly a six-month cadence, giving programmers the tools to build applications that derive the greatest benefit from next-generation hardware and the underlying software stack.

According to Sandia’s Mike Heroux, ECP’s software technology director: “The integration element makes the ECP work especially important. The vast majority of the ECP software is intended for use by the broader community. A lot of our development work resides on laptops and desktop systems, so using a pre-exascale system is not necessary to run the stack and apply it against smaller workloads.”

Today, OpenHPC serves as the foundation for many of the world’s most advanced HPC systems. It has much in common with the ECP, including many of the same contributors, yet each serves a unique purpose. Wisniewski offers his perspective on ways OpenHPC and ECP complement one another. “OpenHPC plays an important role in advancing the HPC field. It targets a broad audience for moderate-sized clusters with an even broader set of HPC use case scenarios. ECP focuses on architectures optimized for capability-class, extremely demanding workloads. However, by cross-pollinating components, ECP can leverage significant existing and ongoing work from OpenHPC, and OpenHPC can benefit from increased scalability and performance by contributions from ECP. The synergistic efforts bring the value of HPC to a wider set of users, accelerating the broader adoption of HPC.”

Scaling Into The Future

The DOE’s efforts, along with those of Intel teammates and many ECP contributors, pave the way toward the future of HPC. “We are all excited about the project, and we readily encourage other HPC experts to join us in the effort of contributing to the software stack of the future,” said Wisniewski. Reflecting on the work ahead, he added, “While we have made a lot of progress, a lot of work remains. Our work is not just about achieving exascale-level computing. It is about developing a platform which can scale beyond that.”

For more information about the ECP and its Extreme-Scale Scientific Software Stack (E4S) Release, visit exascaleproject.org, and watch this short interview with Mike Heroux, software technology director for the ECP.

Rob Johnson spent much of his professional career consulting for a Fortune 25 technology company. Currently, Rob owns Fine Tuning, LLC, a strategic marketing and communications consulting company based in Portland, Oregon. A lifelong technology, audio, and gadget enthusiast, Rob also writes for TONEAudio Magazine, reviewing high-end home audio equipment.
