A few months ago, we took an in-depth look at Intel’s quantum hardware strategy—from qubits to device manufacturability and commercial viability. But without a firm software and programmability foundation, no quantum technology can thrive.

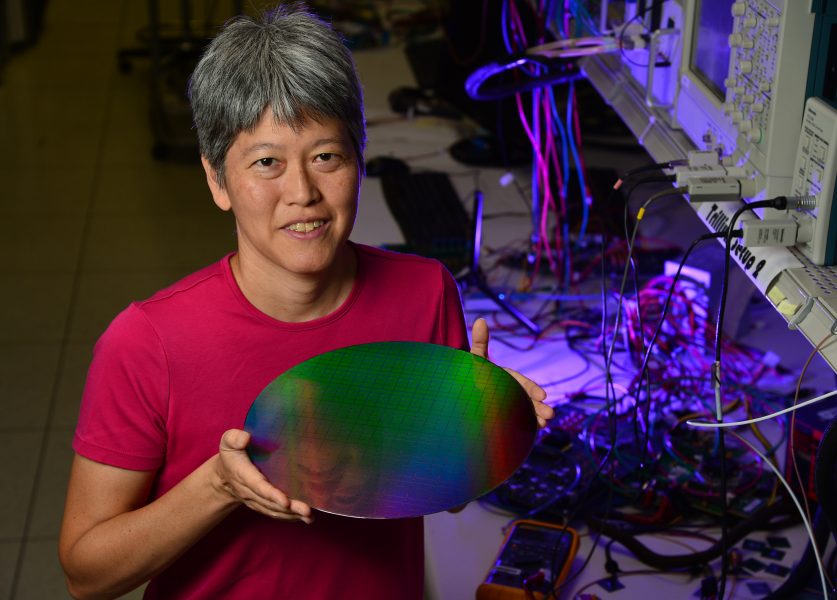

For a more rounded perspective, we also talked to Dr. Anne Matsuura, who directs quantum applications and architecture research at Intel Labs. The interview below is interesting technically, but it is also noteworthy because it sheds light on how quantum initiatives at Intel, particularly from a materials science perspective, are feeding the next generation of chip manufacturing technologies within the company.

Matsuura is originally a physicist with a focus on materials used in electronics, including high-temperature superconductors with novel magnetic properties. This area forms the burgeoning discipline of quantum materials, which holds promise for future post-Moore’s Law technologies despite some challenges in simulating such materials with classical systems.

Early quantum materials research at Intel was difficult because of the strange nature of materials that exhibit some unexpected electronic properties. “These are materials where sometimes a theory will tell you that you should have an insulator but instead you have a metal. These things do not simulate well—they actually simulate the electronic properties incorrectly on classical computers,” she explains. The goal was to run experiments to understand what happens with these materials and see if it is possible to build and use a quantum computer, even at small scale, to simulate with instead.

“The question was if we could create a quantum computer that would truly solve the holy grail problem of how high-temperature superconductors actually work. And if we can do that, can we create a material that superconducts at room temperature?” These are the types of questions that drive the work toward practical applications, especially in quantum materials, at Intel Labs under Matsuura, as we explore in our Q&A below.

TNP: What does it take from an algorithmic side to create new applications as quantum computers continue to grow in capability? What do you think is possible and what are the big algorithmic and development challenges?

Matsuura: I think the low-hanging fruit for quantum algorithms and applications is simulating other quantum systems. You see a lot of material simulation as well as chemical, catalyst and molecular simulations. It is easiest for a quantum computer, which is a quantum system, to simulate another quantum system. I have a slightly different take on the importance of algorithms to actually building a quantum computer.

My team creates these algorithms around quantum simulation of materials. What we are doing is writing small workloads for our current small qubit systems. We are interested in seeing if we can run all of the important components of a larger material science problem on our near-term small qubit system.

TNP: Can you give us an updated sense of where this stands from a hardware perspective, and what does this mean for applications research?

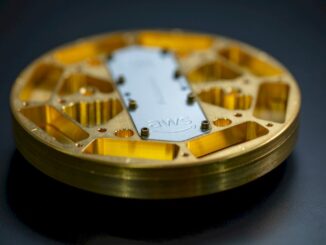

Matsuura: Right now we are running things at 5 qubits, 7 qubits but we have some algorithms for 17 qubits. What we are finding is that you can run these algorithms on the small systems of qubits. In doing so, I really think that we will be able to architect the quantum computer.

My team is half physicists and half computer architects. That is why I have an algorithm writer specifically for near-term machines, like 5 to 7 qubit systems. She has to talk to the hardware people about what kind of qubit rotations have to happen and what operations have to happen between which qubits in order to run her algorithm.

The architects in my group are interested in seeing this work because they start to understand what is important to the algorithm. How does the algorithm flow through the computer stack? Therefore, how should they design the computer stack?

TNP: Right now, most algorithms for other quantum systems are being designed to make use of 49 or 50 qubits. Are the applications themselves generally architected that way or not?

Matsuura: If you look at the algorithms that are run on 49 or 50 qubits, that is really in simulation. They are not really run on the qubits. There is some work from Rigetti where they ran a very short circuit algorithm on 19 qubits. You see people using the IBM experience for 5 or 7 qubits. All of this is good work. My team is focusing on one application area: simulation of materials. This is twofold. One is to help us to codesign and architect our quantum computer. The other is to prove to ourselves that there is an application area that will get sped up by a quantum computer.

That is a major question that has to be answered–what application area are we really certain will get sped up by quantum computers? I guess it will be simulation of other quantum systems, simulations of materials, simulation of chemical reactions. That is still an open question that needs to be answered. That is one of the reasons my team is focusing on trying to nail that down. What will the first quantum machine be bought for? Maybe simulating materials. Most likely, it will be an accelerator for some application area with a classical coprocessor.
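One way to see why 49 or 50 qubits marks a practical boundary for work done "in simulation" is to count the memory a classical machine needs for a full state vector. The sketch below assumes one double-precision complex number (16 bytes) per amplitude; the function name `statevector_bytes` is hypothetical, and real simulators often use tricks that avoid storing every amplitude.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store all 2**num_qubits amplitudes of a full state vector.

    Assumes 16 bytes per amplitude (two 64-bit floats for real and imaginary parts).
    """
    return (2 ** num_qubits) * bytes_per_amplitude


# Qubit counts mentioned in the interview.
for n in (5, 7, 17, 19, 49, 50):
    size = statevector_bytes(n)
    print(f"{n:2d} qubits: {size:,} bytes = {size / 2**40:,.6f} TiB")
```

Under that assumption, each added qubit doubles the memory: 17 qubits fit in about 2 MiB, while 50 qubits need 16 PiB.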

TNP: What problems might you be able to solve using fewer qubits then?

Matsuura: We are trying to prove to ourselves that computers down the road will be good for certain application areas. When we are doing things on 5 qubits, we are taking some component of a larger simulation and asking if we can do all of the important parts. We are not necessarily making it better on 5 qubits, we are just showing we can do it. We are doing it to try to design and architect the quantum coprocessor, which will accelerate material simulation problems, for instance. We are focusing on architecture. We are seeing how you run something that is more real, not showing speedup, but something that is a real part of an algorithm that we may want to run on a future quantum coprocessor.

My architects are able to see what sort of algorithmic flow is happening and what needs to happen to design the machine. To clarify, we are doing this with no error correction. We are running these things fast. You can do one algorithmic run and continue to run it many times in order to get a statistical answer. You will want to run it many times so you have a strong probability of having the right answer. Everything is probabilistic.
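The idea of repeating a run many times to get a statistical answer can be sketched in a few lines. This is only an illustration, not Intel's workflow: `run_once` is a hypothetical stand-in for one noisy run on hardware, and the 70 percent chance of reading the right bitstring is an invented number.

```python
import random
from collections import Counter


def run_once(rng: random.Random, p_correct: float = 0.7) -> str:
    """Hypothetical noisy run: returns the right answer '101' with probability p_correct,
    otherwise a uniformly random wrong 3-bit string."""
    if rng.random() < p_correct:
        return "101"
    return rng.choice(["000", "001", "010", "011", "100", "110", "111"])


def most_frequent_outcome(shots: int, seed: int = 0):
    """Repeat the run `shots` times and report the most common bitstring and its frequency."""
    rng = random.Random(seed)
    counts = Counter(run_once(rng) for _ in range(shots))
    outcome, hits = counts.most_common(1)[0]
    return outcome, hits / shots, counts


outcome, frequency, counts = most_frequent_outcome(shots=1000)
print(outcome, frequency)
```

A single run can return any of the eight bitstrings, but over many repetitions the right answer dominates the histogram, which is what makes a probabilistic machine usable.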

TNP: Are there any important lessons learned about that or does it make you change how you think about connecting these?

Matsuura: One thing that we had to pioneer: on the back end there are some post-selection techniques that happen. That might be true for these quantum machines. Because the results are probabilistic, you run the algorithm to get the statistical accuracy that you want. We have been developing post-selection techniques to help us discard results that are physically not possible. We are trying to figure out what the application area is that will be the first commercializable use case.
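Discarding physically impossible results after the fact is commonly called post-selection. A minimal sketch follows, assuming the physical constraint is conservation of particle number, so that every valid measurement must contain a fixed number of 1s. The interview does not say which constraints Intel uses, and the counts below are made-up illustrative data.

```python
from collections import Counter


def post_select(counts: Counter, num_particles: int):
    """Keep only bitstrings whose number of 1s equals the conserved particle number.

    Returns the filtered counts and how many shots were thrown away.
    """
    kept = Counter({bits: n for bits, n in counts.items() if bits.count("1") == num_particles})
    discarded = sum(counts.values()) - sum(kept.values())
    return kept, discarded


# Invented measurement histogram for a 4-qubit, 2-particle problem.
raw_counts = Counter({"0011": 410, "0101": 380, "1100": 100, "0111": 60, "0000": 50})
kept, discarded = post_select(raw_counts, num_particles=2)
print(dict(kept), "discarded:", discarded)
```

The cost of this approach is that discarded shots are wasted, so more repetitions are needed to reach the same statistical accuracy.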

TNP: Have you developed your own internal tool chain for working with these or are you using something such as Q# or some other pre-developed platform?

Matsuura: There are a lot of tools out there, Project Q and many other open source projects. My team is still evaluating and using elements from a lot of these tools. We are starting to see algorithms flowing through the stack. The compiler will probably have to be a hybrid between the classical and quantum viewpoints.

These are all really good tools, but you can tell by looking at a software tool whether a physicist or a computer architect wrote it. This splits my team because the physicists like the ones that they wrote better and the architects like the ones they wrote better because they are easier for them to use.

What we are coming up with as a team is that we have to use a hybrid between quantum and classical. We may end up using some open source platform, though. Again, there is a lot out there and they are very good tools. We may have to create some things that are customizable to our machine.

TNP: As you work on these algorithms and toolchains, are you using a classical computer and offloading it to the quantum system, or do you have a classical computer there, and if so, what are the challenges of getting those to talk to each other?

Matsuura: My algorithm people are talking to the hardware people directly. We have all of the components of a complete computer stack, but we don’t have it all automated yet. We will have classical components and some things will have to be offloaded to a classical computer.

TNP: How big of a challenge is it to get classical and quantum computers to work together?

Matsuura: That is a work in progress right now. Everything is in simulation now for us as far as what the complete system will look like. We have also been working on system performance simulators. We are trying to do as much as we can in simulation before having to build many different designs.

TNP: What parts of the workflow are classical and what parts are quantum? What is the ratio of classical to quantum?

Matsuura: There are hybrid algorithms where part of the algorithm is run on the classical side and part is run on the quantum side. In chemistry, a common one is called QAOA, the Quantum Approximate Optimization Algorithm. We are using that approach as a model. Up to this point, we have run everything quantum. We don’t have the full tool flow going together yet. The algorithm person is directly working with the hardware person to run things on the qubits. It is about 50/50, but it is hard to say at this point.

There are things that aren’t so important in the material science but there are things in other application areas that you wouldn’t do on a quantum computer, like basic mathematics. It depends on the algorithm. With the material science algorithm, which is really a physics simulation or quantum simulation, maybe more of the algorithm will be run on the quantum coprocessor side. It might depend on what the application area is.
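The split between a quantum side and a classical side in a hybrid algorithm can be illustrated with a toy variational loop. This is not QAOA and not Intel's tool flow. It is a sketch in which the "quantum" step is replaced by its known closed-form result for one qubit (the expectation of Z after an RY(theta) rotation of |0> is cos(theta)), and the classical step is plain gradient descent using the parameter-shift rule. All function names are hypothetical.

```python
import math


def quantum_expectation(theta: float) -> float:
    """Stand-in for the quantum coprocessor: <Z> after RY(theta) acts on |0>, i.e. cos(theta).
    On real hardware this value would be estimated from many repeated runs."""
    return math.cos(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient of the expectation obtained from two extra 'quantum' evaluations."""
    shift = math.pi / 2
    return 0.5 * (quantum_expectation(theta + shift) - quantum_expectation(theta - shift))


def hybrid_minimize(theta: float = 0.3, learning_rate: float = 0.4, steps: int = 100):
    """Classical side: gradient descent that repeatedly calls the quantum side."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


theta, energy = hybrid_minimize()
print(f"theta = {theta:.6f}, energy = {energy:.6f}")
```

In this toy, every optimizer step costs two quantum evaluations and a handful of classical arithmetic operations, which gives a feel for why the classical-to-quantum ratio depends so heavily on the algorithm.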

TNP: Once you get the results from the hybrid quantum system, are those ideally transferred back to the supercomputer for further analysis and visualization? What is the output?

Matsuura: Right now, approximately one-third of HPC’s time is used for things such as simulation of chemical reactions, materials, and nuclear physics. We are aiming to do those simulations better than HPC. It is similar to sending your batch file to HPC and they run your molecular simulation. You may also be able to run it in the future on a quantum computer coprocessor at your HPC.

Read more on Intel’s quantum progress as we have covered it over the years here.
