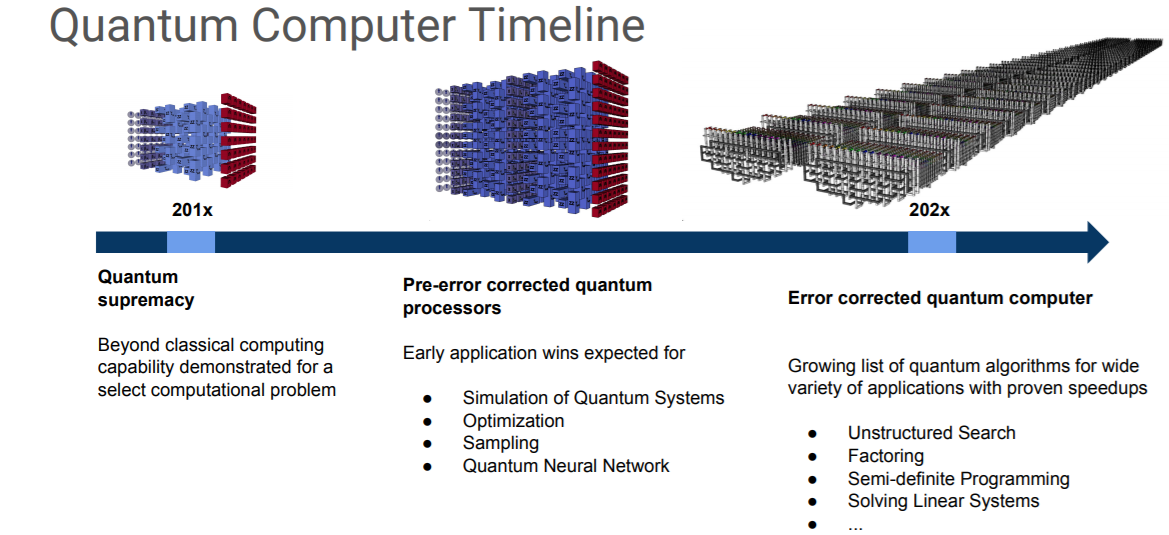

This morning at Google Next, computer architecture pioneers John Hennessy and David Patterson remarked that even though it could be revolutionary, quantum computing is still at least a decade away. Even so, the search giant, like its hyperscale competitors, is investing in future quantum chips.

From standalone quantum system makers like D-Wave to established chip and system builders like IBM, the race to secure this distant quantum future has begun in earnest. For some, driving the number of qubits higher is the way to show quantum supremacy, but for others like Google, it is far less about qubit count and more about baking in reliable error correction for universal fault-tolerant quantum computers.

We heard quite a bit about this fault-tolerance aspect at the International Supercomputing Conference (ISC) this year, when Google Cloud Technical Director Kevin Kissell described the evolution of the company’s 22-qubit “Foxtail” quantum architecture and its most recent 72-qubit “Bristlecone” design, which emphasizes fault tolerance and extended fidelity on quantum simulations.

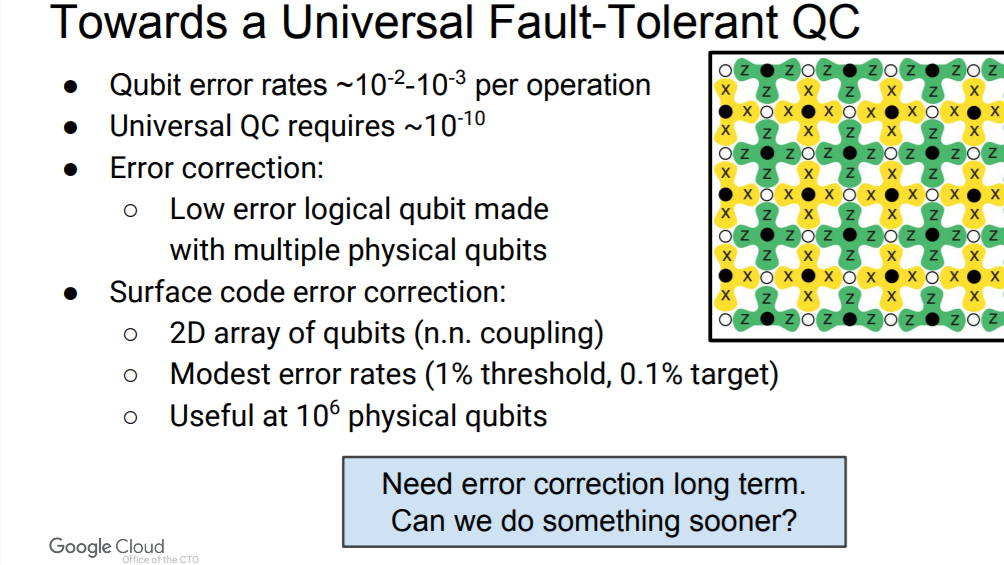

“For successful quantum computing, we do not just need a large number of qubits; this is necessary but not sufficient. What you have when operating a system can be thought of as a sort of volume in space and time: when you operate a quantum computer there is a certain number of qubits and an underlying error rate that you multiply by the number of operations you want to perform. At some point, the fidelity of those operations becomes the limiting factor in terms of the number of successive quantum steps that can be performed.”
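To make the space-time volume argument concrete, here is a minimal sketch that assumes every qubit fails independently at each step with the same probability `p`. That is a simplification for illustration, not how Google models its hardware. Under that assumption, the chance that a run over a given number of qubits and steps finishes with no error at all is `(1 - p) ** (qubits * steps)`, so the number of usable steps shrinks as the qubit count grows. The function names, the 0.001 error rate, and the 50% success target below are hypothetical values chosen for the example, not figures from Google.

```python
import math


def success_probability(p: float, qubits: int, steps: int) -> float:
    """Chance that no error occurs anywhere in a qubits-by-steps volume.

    Assumes (hypothetically) that each qubit fails independently with
    probability p on every step; real devices have correlated errors.
    """
    return (1.0 - p) ** (qubits * steps)


def max_steps(p: float, qubits: int, target: float) -> int:
    """Largest step count whose error-free probability is still >= target."""
    # Solve (1 - p) ** (qubits * steps) >= target for steps by taking logs.
    # Both logarithms are negative, so the ratio is positive.
    return math.floor(math.log(target) / (qubits * math.log(1.0 - p)))


if __name__ == "__main__":
    p = 0.001  # hypothetical per-qubit, per-step error rate
    for n in (1, 22, 72):
        print(f"{n:>2} qubits: about {max_steps(p, n, 0.5)} steps at 50% success")
```

With these made-up numbers a single qubit survives roughly 690 steps before the odds of an error-free run drop below one half, while 72 qubits get fewer than ten. That is the intuition behind the point that fidelity, not qubit count alone, ends up limiting how many successive quantum steps can be performed.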

“We are now at an interesting point where the chips we can build are not big enough for fully error corrected quantum computing, the point where a logic unit has a lifetime of several hours or even days,” Kissell explains. “It is still maybe hundreds or at most thousands of operations before decoherence. Quantum supremacy means running near-term interesting applications that are too expensive on classical machines with reliable error correction schemes that will work in the real world.”

Recall that D-Wave recently managed to run reliable quantum simulations on its own 2,000-qubit hardware, something we discussed from a capability standpoint but without much sense of how fault tolerance was handled. We will revisit that in an upcoming interview, but in general, if quantum machines are to take the place of supercomputers for applications that are too resource-hungry on those traditional architectures, their results need to be reliable. Kissell says this is the premise Google is architecting from, and it is realized in the Bristlecone architecture through an error-correcting approach called surface code error correction.

In theory this is a rather simple approach: at any point in a quantum computation, the qubits are tiled alternately as data and measurement qubits. The measurement qubits are there only to detect whether one of the data qubits has unexpectedly flipped or taken on an unintended value; because the qubits are entangled, such an error drags the neighboring measurement qubits along for the ride, which is what exposes it. Interestingly, the data and measurement qubits can move around, but the measurement qubits always arrange in a diagonal pattern.
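The tiling idea can be illustrated with a toy classical model. The sketch below is a simplification of our own, not Google’s implementation: it places data and measurement qubits on a checkerboard (so the measurement qubits line up along diagonals), tracks only bit flips as plain 0/1 values, and has each measurement qubit report the parity of its neighboring data qubits. A real surface code also checks phase errors with a second set of measurement qubits and works with quantum states rather than classical bits; the grid size and function names here are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Coord = Tuple[int, int]


def layout(size: int) -> Dict[Coord, str]:
    """Checkerboard tiling: 'D' marks a data qubit, 'M' a measurement qubit.

    Measurement qubits sit where row + col is odd, so they fall on diagonals.
    """
    return {
        (r, c): "D" if (r + c) % 2 == 0 else "M"
        for r in range(size)
        for c in range(size)
    }


def neighbors(pos: Coord, size: int) -> List[Coord]:
    """Up/down/left/right neighbors that lie inside the grid."""
    r, c = pos
    candidates = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
    return [(i, j) for i, j in candidates if 0 <= i < size and 0 <= j < size]


def syndrome(data: Dict[Coord, int], size: int) -> Set[Coord]:
    """Measurement qubits whose neighboring data bits have odd parity.

    Toy model (hypothetical): only bit flips are tracked.
    """
    flagged: Set[Coord] = set()
    for pos, kind in layout(size).items():
        if kind != "M":
            continue
        parity = 0
        for n in neighbors(pos, size):  # on a checkerboard, every neighbor of an M is a D
            parity ^= data[n]
        if parity:
            flagged.add(pos)
    return flagged


if __name__ == "__main__":
    size = 5
    # Start with every data qubit at 0: no measurement qubit fires.
    data = {pos: 0 for pos, kind in layout(size).items() if kind == "D"}
    print(sorted(syndrome(data, size)))  # []
    # Flip the central data qubit; its four measurement neighbors fire.
    data[(2, 2)] = 1
    print(sorted(syndrome(data, size)))  # [(1, 2), (2, 1), (2, 3), (3, 2)]
```

Flipping the data qubit in the middle of the 5-by-5 grid flags the four measurement qubits around it. In a real surface code, it is this pattern of flagged neighbors that lets a decoder infer where an error occurred without measuring the data qubits directly.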

Smaller sets of these diagonally patterned qubits are the basis of the 72-qubit Bristlecone architecture, which Google researchers are still in the process of firming up. “It is a new adventure every time we bring up a chip,” Kissell says, because of the unique materials used. While he did not provide any detail about the novel materials, he did share some images of what the system looks like as installed at Santa Barbara.

Google has not talked much about the software stack for interfacing with its quantum simulator on Google Cloud or with the actual system at Santa Barbara, but Kissell did say the team has been impressed with efforts like ProjectQ, even though Google has taken its own route.

As a side note, for those interested in the idea of a 50-qubit limit for existing computations, and in fault tolerance on quantum systems more generally, we recommend this excellent paper from John Preskill at Caltech.
