There are few people as visible in high performance computing programming circles as Michael Wolfe—and fewer still with level of experience. With 20 years working on PGI compilers and another 20 years before that working on languages and HPC compilers in industry, when he talks about the past, present and future of programming supercomputers, it is worthwhile to listen.

In his early days at PGI (formerly known as The Portland Group) Wolfe focused on building out the company’s suite of Fortran, C, and C++ compilers for HPC, a role that changed after Nvidia Tesla GPUs came onto the scene and required stronger hooks for the growing supercomputing set in 2007–and certainly after. Even though Wolfe (who came to Nvidia in 2013 when the GPU maker acquired PGI) sees GPU computing as a major force that has shaped HPC, he says that when it comes to programming GPU supercomputers in the next decade, the more things will change the more they will stay the same—in some ways at least.

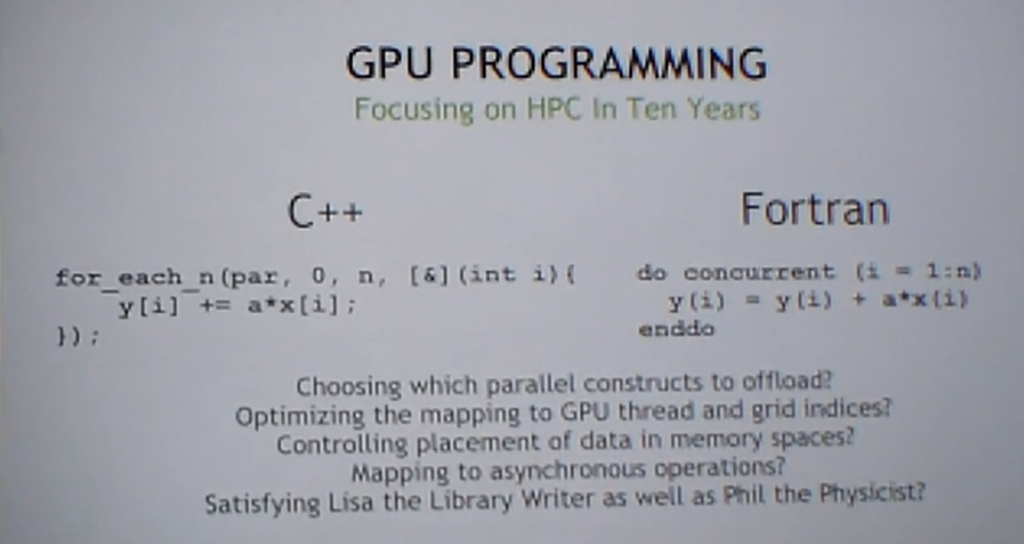

During his talk today at the annual GPU Technology Conference (GTC), Wolfe made the argument that the way we write parallel codes in HPC will not change much from how it’s done now. In short, C++ and Fortran will still dominate but for GPU accelerated supercomputing, these simpler methods will overtake others as more creativity is put into exploiting the inherent parallelism of GPU and the sequential offload capabilities of CPUs (which Wolfe says will continue to be just as relevant).

This might not be the sexy futurist answer folks were hoping to hear from Wolfe, but let’s be honest—HPC programming is about extending the lifespan of languages over many decades.

“Some, especially those who are younger, are going to be bothered by seeing Fortran here. Think about this: 30 years ago when C++ was invented, Fortran was already 30 years old. Young people then were sneering as many of you are now, saying it’s too old and needs to be retired. Now C++ is 30 and I don’t hear any sneering there—in 30 years when C++ is 60, you’ll be ecstatic that the framework you worked on still works with C++,” Wolfe told the whippersnappers in the room.

The way these languages adapt over time is going to take some creativity, as we will get to in a moment, but Wolfe says he believes that both Fortran and C++ will continue to be the future of HPC programming with GPUs with no extensions and no directives for most users—a big claim at a time when we see even sophisticated directives-based approaches like OpenMP and OpenACC working hard to keep up with the many changes in new GPU memory architectures (among other things) in architectures like the latest Volta generation GPUs.

Currently, there are several approaches to programming GPU supercomputers in terms of optimization and memory management; fully exposed models (CUDA level), fully, hidden (libraries, etc) and virtualized (directives-based). While this might change over the next ten years, Wolfe says that there are four constants that any unified C++ or Fortran-only approach to programming GPU boosted supers must provide: data/memory management, compute management, compute optimization and being able to take advantage of asynchronous operation. While these four won’t change, the architectures in the next decade will—for instance, consider Volta with half-precision and the TensorCore; this would have been something difficult to imagine ten years before, not just because of technology changes but changes in workloads that would necessitate such an architecture.

As C++ and Fortran evolve to be the projected end-all languages, there are plenty of tough problems to solve. Some just take creativity and others an overhaul in current thinking. For instance, how might such an approach handle reductions, which are currently separate operations. These can’t be addressed in the middle of a parallel operation and missing pieces like can be filled in, it will just take time and creativity, Wolfe argues.

There are even harder problems on the horizon. For instance, all procedures must be compiled for both hosts and device execution. This includes addressing things like system calls, function pointers, and language runtimes that must be fully ported to the devices. There is also the tricky issue of statically linking host and device code to the same address space with such an approach, to say nothing of the potential for dynamic linking that takes into account the GPU. Again, these are all farther horizon problems but still limiting factors for the future as Wolfe sees it (and he stresses his opinions are his own and not based on Nvidia or PGI roadmaps, which he says he is not privy to).

Since there will be a CPU in the loop for fast sequential execution, selecting which code sections to leave on the host will continue to be a problem, but with either Fortran or C++, optimizing compute schedules, data layouts, and launching asynchronous kernels fall into the “really tough” problems category. Further, spreading work across both CPU and GPU (load balancing) will get tricky, as will simply spreading work across multiple GPUs.

In short, there is no free lunch when it comes to integrating the device complexity available on unknown future GPU architectures and the needs of HPC applications. It either means pumping a lot of work into the directives-based and lower-level languages and bolstering libraries—something the community is already used to—or using a standard language base to build from and accepting all the complexities that come along with that.

While it would be interesting to see a world without directives-based approaches emerge from within Nvidia, which is closely tied to work on OpenACC, the ultimate decision will be made by developers who have decide how much pain they’re willing to live with, for how long, and to what end.

GPU is now very much essential for your computer because it is responsible for the display and it takes care of graphics related task like computer gaming, video, image, photoshop, animation etc. in the future of programming, GPU has been the great impact on the supercomputer.