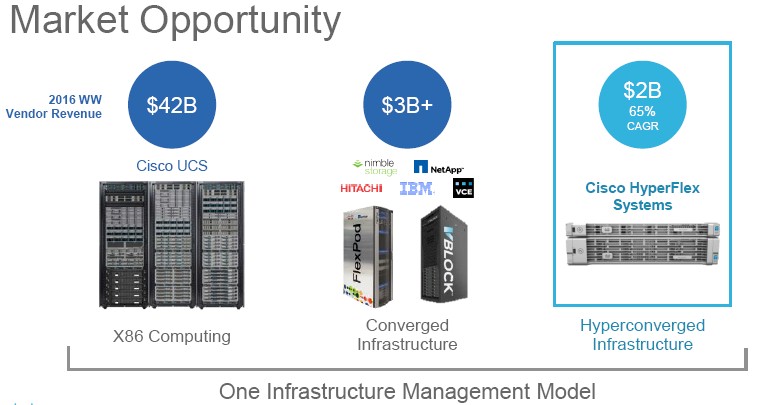

The convergence of servers and networks forced the hand of networking giant Cisco Systems back in the late 2000s, compelling it to create the Unified Computing System blade servers and their integrated networking and launch them nearly seven years ago now, upsetting the balance of power in the datacenter.

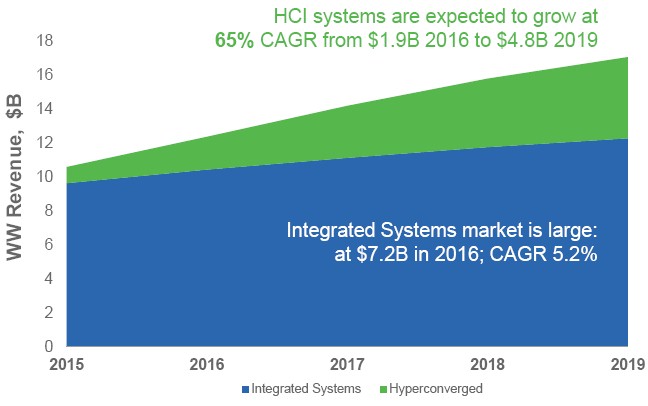

Now, Cisco is being pushed by competitive pressures and pulled by the 50,000 customers using its UCS iron into hyperconverged storage, and it aims to upset some applecarts again.

The idea with hyperconvergence is to replace expensive and unmalleable (from the point of view of customers at least) appliance-style storage arrays with more generic servers stuffed with disk and flash storage that can run distributed storage and distributed computing at the same time on the same iron.

Cisco was not a first mover when it came to hyperconvergence, any more than it has been for servers, but the company has been waiting for an opportunity to integrate and differentiate. “Our observation is that the first movers in this space really focused on two things almost exclusively,” Todd Brannon, director of product marketing for Cisco’s Unified Computing System line, explains. “First, delivering against the insatiable demand for IT simplification and second, get to market fast and meet that demand. And along the way, we believe that they took some architectural shortcuts. The most glaring is probably the file systems, and just about every hyperconverged platform out there is based on the EXT4 file system they pulled from Linux and it has roots back in Unix platforms from the 1970s and has no comprehension of the distributed, scale out environment that a hyperconverged cluster represents. Write-in-place file systems really were not intended for this type of work.”

The other shortcoming, says Brannon, is that hyperconverged platforms do not take the network into account properly, and given that these are clustered systems, the network fabric is at the heart of the hyperconverged stack.

We have observed the same thing, that for physical storage arrays that are clustered to scale out their performance, InfiniBand is by far the preferred means of doing so because of its high bandwidth and low latency, but hyperconverged storage does not seem to require it or RDMA over Converged Ethernet (RoCE), which steals the low latency memory access method from InfiniBand and grafts it onto the Ethernet protocol.

Other corners that were cut, according to Cisco, include having fixed ratios of compute and storage in appliances, which we have explained as a cause behind large enterprises not yet adopting hyperconverged products in large numbers, and requiring customers to deal with a whole new set of interfaces and management tools with the hyperconverged stack is also a problem. The reason hyperconverged vendors go that route is simple: Integrating with existing tools and processes is harder.

“Obviously Nutanix is very influential among the first movers in this space, but it is almost in the same way that Netscape was influential in the browser and web server space,” quips Brannon, who says that Cisco has been talking to customers for the past 18 to 24 months and studying this market and their needs very closely. “We think that through Nexus switching and UCS systems we have done more than anyone else to converge the datacenter, and our customers are telling us that they trust us to figure out hyperconvergence.”

To that end, Cisco is, as has been widely rumored, partnering with upstart hyperconvergence software maker Springpath, which uncloaked out of stealth mode last February as it launched its Data Platform hyperconverged product.

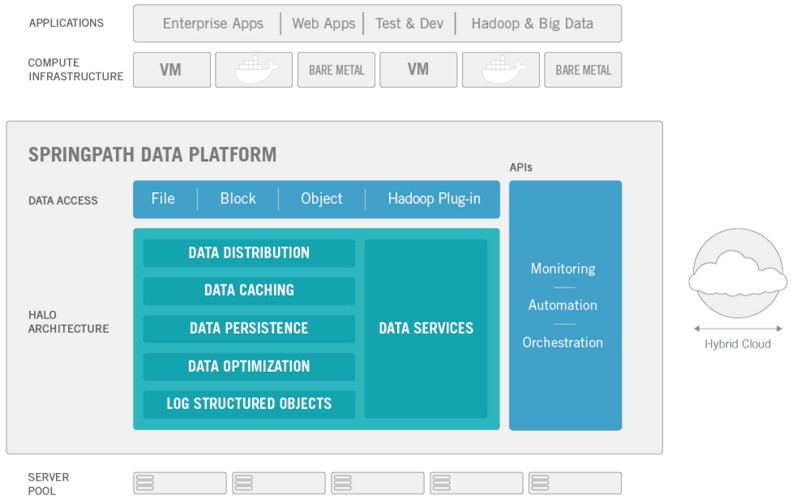

Springpath Data Platform runs atop VMware’s ESXi hypervisor. At the time, Springpath had a few dozen early adopter customers running the code, which was certified to run on servers from Cisco, Hewlett Packard Enterprise, Dell, Lenovo, and Supermicro. The company also said that it was charging a base price of $4,000 per node for its software – considerably less expensive than other hyperconverged stacks – and that it would support Microsoft Hyper-V and OpenStack/KVM atop its hyperconverged storage layer as well as Docker containers.

Deep Roots In VMware

Creating a file system is no easy task, and that is perhaps why we have so many of them, tuned up for many different workloads. Springpath was founded in 2012 by Mallik Mahalingam, who was a researcher at Intel and Hewlett Packard Labs before landing a very long gig at VMware back in 2002. While at VMware, Mahalingam was put in charge of the networking and storage I/O parts of the VMware stack and was the leader of the team that came up with the VXLAN network overlay that is popular among cloud builders for allowing multiple networks to be managed as one from the point of view of virtual machines and hypervisors. To fund the development of Data Platform, Mahalingam secured two rounds of venture funding worth $33 million from Sequoia Capital, New Enterprise Associates and Redpoint Ventures. As of the end of last year, the company had 60 employees and Cisco was looking for a hyperconvergence software partner, and that is why the networking giant has led an undisclosed third round of funding in January of this year.

Brannon says that the deal that Cisco has cut with Springpath to integrated its hyperconverged storage software with its UCS iron to create the HyperFlex platform is not exclusive, but as far as he knows Cisco is the only company that Springpath is working with on an OEM basis at the moment.

The Springpath Data Platform hyperconverged storage is based on an architecture called Hardware Agnostic Log-Structured Objects, or HALO for short. As this suggests, the storage is based on a log-structured file system, which is a very old idea that is implemented in a number of file systems. Unlike append-only file systems like the Hadoop Distributed File System, which put data at the end of the stream, the log structured file systems move the newest data to the front of the stream and try to cache as much of it as possible in cache and main memory before dumping it to persistent media. (One of the inventors of the log structured file system is Mendel Rosenblum, a professor of computer science at Stanford University and one of the founder of VMware, in fact. You can read his initial research paper on the topic, written with John Ousterhout, at this link.)

A log structured file system, says Brannon, is much better at handling inline de-duplication and compression of data. In fact, these features were built into the storage platform from the get-go, so there is no way to say precisely how much overhead they put onto the file system. De-dupe and compression run on main memory, flash memory, and disk storage, automatically and transparently. The compression also allows for variable-sized compression blocks, allowing customers to tune the performance a bit. Cisco estimates that for most enterprise applications customers should expect a 20 percent to 30 percent reduction in capacity from de-duplication and another 30 percent to 50 percent on top of that for compression with the Springpath software.

The HALO approach is also interesting in that it uniformly stripes data across all of the servers in the cluster, regardless of the node where the application using it resides. In essence, Springpath Data Platform treats the cluster like a single computer, and one of the side effects of this is that when a virtual machine is live migrated from one node to another in the cluster, there is no need to do a companion live migration of its associated data. (Clever, that bit. And considering who invented log structured file systems, it is a bit of a wonder that VMware’s stack doesn’t work this way.) A lot of hyperconverged software writes data locally to the node where the application runs and then there is a flurry of replication to copy it for resiliency. There is replication in the Springpath stack, which defaults to two copies but can be set at any level by end users.

Springpath was originally called Storvisor, with the idea being that it was creating a storage equivalent to a hyperviser, which abstracts away the differences in underlying hardware to present an idealized virtual machine in which operating systems and applications run. With the storage hypervisor like the one that Springpath has created, the central log-structured object store is layered with abstractions to look like whatever kind of storage an application needs. While the underlying Springpath storage is an object store, it has been designed to support block and file interfaces for those applications that require this as well as an object interface; a Hadoop plug-in is also available that makes it look and feel like the Hadoop Distributed File System. HALO architecture also supports bare metal access as well as the hyperconverged mode, but this is not yet available.

One key feature of the stack is called the IOVisor, and it is this driver this that allows for compute to be added independently of storage capacity in either the caching or persistence tiers. You just load IOVisor into a VMware ESXi virtual machine and it can read the data in the cluster.

The HALO architecture also uses SSDs as both read and write cache, eventually persisting warm and cold data to disks. There is a hard separation between the caching tier (memory and flash) and the persistent tier (based on disks) and that means the two can be scaled independently of each other depending on either capacity or performance needs of the overarching workloads.

You can see now why Cisco did a partnership with Springpath instead of spending a few years to write its own. What may not make sense is why Cisco did not just buy a majority stake in Springpath – or the company outright – and be done with it. With $60 billion in the bank as of its latest financial reports, the company could afford to do so, clearly.

Putting A Finger On The Scale

The one thing we do not know, and that Brannon did not know either, is what the architectural limits are for the Springpath Data Platform. Obviously it is not as easy as counting nodes, since you can scale up the compute tier, caching tier, and persistence tier independently of each other. With its HyperFlex Platform, which is based on the UCS C Series C220 and C240 rack-based servers and their integrated network fabrics, Cisco us supporting hyperconverged storage that scales from three to eight nodes. At the moment, the C Series iron is equipped with 10 Gb/sec converged Ethernet, with 40 Gb/sec networking coming shortly.

We here at The Next Platform are not impressed by such scale, not when VMware VSAN can do 64 nodes, EMC ScaleIO can do push it to 1,000 nodes, and Nutanix is claiming there are not scalability limits on its Xtreme Computing Platform. (Every architecture has a practical limit, obviously, but getting Nutanix to say a number is . . . difficult.) We think that customers want to be able to have large clusters, but they also want to limit the blast area for when things go wrong. Most enterprises start getting jumpy when a workload has 100 or 200 nodes under their control. Our point is that any enterprise-class solution has to be able to do this and leave some room for overhead. Moreover, if Google can make clusters with 5,000 and 10,000 nodes or as many as 50,000 nodes on which it distributes its workload, there should be no reason why an enterprise hyperconverged storage platform can’t scale to hundreds of nodes – and do so over distributed geographies, while we are at it. Hopefully, Springpath and other hyperconverged storage software will be able to do this at some point.

The base HyperFlex system has two different server nodes, and they can be mixed and matched in a single system.

One is based on the HyperFlex HX220c M4 server, and three of these are linked by a pair of UCS 6200 Series fabric interconnects (these are the 10 Gb/sec variants). These are 1U, two-socket rack servers based on Intel’s “Haswell” Xeon E5 v3 processors, with a pair of on-board 64 GB SD flash cards that act as boot devices for the operating system. The system has a 120 GB SSD for storing logs from the hyperconverged storage and a 480 GB SDD that is used to cache reads and writes. The persistent storage layer runs on the six 1.2 TB 10K RPM disk drives in the HX220c M4 node. The system has a single virtual interface card out to the networking (which can have multiple virtual NICs) and comes with the ESXi 6.0 hypervisor and vSphere stack pre-installed. (ESXi 5.5 is also supported, but not preinstalled.) The server memory, which is used to run compute as well as storage jobs, scales up to 768 GB.

The second machine is the HX240c M4, which is a 2U rack server with 24 drive bays and the same two-socket Xeon E5 motors. This machine has a 1.6 TB for the Springpath caching layer and room for up to 23 of the 1.2 TB 10K RPM disks for the persistent storage layer.

A base system with three of the HX220c M4 nodes and their networking, including the Springpath software but not the VMware licenses, costs $59,000. Those machines employ the eight-core Xeon E5-2360 v3 processors with 256 GB of main memory and the storage and networking hardware as outlined above. The vSphere Standard Edition license, the cheapest one available, would add another $5,970 for the three nodes, call it $65,000. On first blush this seems to be a lot less expensive than Nutanix or VSAN machines. We are working on price/performance comparisons for hyperconverged storage because frankly there is not very good data on this and we do not like when that happens.

Be the first to comment