Here at The Next Platform, we tend to focus on deep learning as it relates to hardware and systems versus algorithmic innovation, but at times, it is useful to look at the co-evolution of both code and machines over time to see what might be around the next corner.

One segment of the deep learning applications area that has generated a great deal of work is speech recognition and translation, something we’ve described in detail through efforts from Baidu, Google, and Tencent, among others. While the application itself is interesting, what is most notable is how code and systems have shifted to meet the needs of new ways of thinking about some of the hardest machine learning problems. And when we stretch back to the underpinnings of machine translation and speech recognition, IBM has some of the longest history, even if that history did not have a true deep learning element until relatively recently.

Over the 36 years that Michael Picheny, senior manager for IBM’s Watson Multimodal division (an area that focuses on language and image recognition, among other areas), has spent at IBM working on speech and language algorithms, much has changed for both the code and the systems required to push speech recognition forward. While IBM, like many others doing deep learning at scale, has landed on large-scale deployment of GPUs for neural networks, the path to that point was long and complex. Only in the last few years has the critical combination of advanced neural network models and the hardware to run them in real time and at scale become available. And this one-two punch has shifted IBM’s approach to developing and deploying speech algorithms.

Picheny says that when he joined IBM, it was the only company doing speech analysis and recognition with statistical and computational approaches; others were focused on physical modeling of the underlying processes in speech. “They were the only ones solving the problem with compute and mathematical techniques; it was the neatest thing I’d ever seen.” His early speech recognition work at IBM was done entirely offline on large mainframes, an effort that was later deployed on three separate IBM minicomputers working in parallel to achieve real-time performance, a first-of-its-kind capability. Then, in the early 1980s, came the IBM PC, which could have custom accelerators lashed on; by the 1990s, the work could be done entirely on a CPU. Speech recognition teams in Picheny’s group have now come full circle back to accelerator cards, relying on GPUs, which he says are a spot-on architecture for speech, even if some hardware limitations loom on the horizon for the next level of speech recognition using deep learning models.

Code-wise, much has changed for IBM when it comes to speech recognition. Picheny says that earlier systems were a combination of four components: a feature extractor, an acoustic model, a language model, and a speech recognition engine, or decoder. Back then, he explains, it was difficult to create an architecture that would run all of these elements efficiently, since each had its own optimizations and other features. As neural networks evolved, both internally and in the larger ecosystem (Caffe, TensorFlow, etc.), these components have been fused and merged into a master model, one that requires significant computational resources and scalability from both hardware and software.
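
To make the older four-part design concrete, here is a toy Python sketch of how such a pipeline fits together. It assumes the textbook noisy-channel formulation of statistical speech recognition, in which the decoder picks the word sequence W that maximizes log P(X | W) + log P(W) for acoustic features X. This is not IBM's code. Every function name, the log-energy features, the word templates, and the bigram probabilities are hypothetical stand-ins, and the decoder uses brute-force search rather than the beam or Viterbi search a real engine would use. The point is simply that each stage is a separate piece with its own parameters and tuning. That fragmentation is what an end-to-end neural model folds into a single network.

```python
import itertools
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical toy data: each word maps to the feature values it is expected
# to produce (a stand-in for a trained acoustic model).
TEMPLATES: Dict[str, List[float]] = {
    "recognize": [0.0, 1.0],
    "speech": [2.0, 3.0],
    "wreck": [0.0],
    "a": [1.0],
    "nice": [2.0],
    "beach": [3.0],
}

# Hypothetical bigram log-probabilities (a stand-in for a trained language model).
BIGRAMS: Dict[Tuple[str, str], float] = {
    ("<s>", "recognize"): math.log(0.5),
    ("recognize", "speech"): math.log(0.5),
    ("speech", "</s>"): math.log(0.5),
    ("<s>", "wreck"): math.log(0.1),
    ("wreck", "a"): math.log(0.5),
    ("a", "nice"): math.log(0.5),
    ("nice", "beach"): math.log(0.5),
    ("beach", "</s>"): math.log(0.5),
}


def extract_features(signal: Sequence[float], frame_len: int = 4) -> List[float]:
    """Stage 1, feature extractor: one log-energy value per non-overlapping frame."""
    feats = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        frame = signal[start:start + frame_len]
        energy = sum(x * x for x in frame) / frame_len
        feats.append(math.log(energy + 1e-9))
    return feats


def acoustic_log_likelihood(feats: Sequence[float], words: Sequence[str],
                            templates: Dict[str, List[float]]) -> float:
    """Stage 2, acoustic model: log P(X | W) with a unit-variance Gaussian per frame."""
    expected = [v for w in words for v in templates[w]]
    if len(expected) != len(feats):
        return float("-inf")  # this word sequence cannot explain this many frames
    return sum(-0.5 * (f - e) ** 2 - 0.5 * math.log(2 * math.pi)
               for f, e in zip(feats, expected))


def lm_log_prob(words: Sequence[str], bigrams: Dict[Tuple[str, str], float],
                floor: float = math.log(1e-4)) -> float:
    """Stage 3, language model: log P(W) from bigrams, with a floor for unseen pairs."""
    score, prev = 0.0, "<s>"
    for w in list(words) + ["</s>"]:
        score += bigrams.get((prev, w), floor)
        prev = w
    return score


def decode(feats: Sequence[float], templates: Dict[str, List[float]],
           bigrams: Dict[Tuple[str, str], float],
           max_words: int = 4) -> Tuple[List[str], float]:
    """Stage 4, decoder: exhaustive search for the argmax of log P(X|W) + log P(W)."""
    best: List[str] = []
    best_score = float("-inf")
    for n in range(1, max_words + 1):
        for words in itertools.product(templates, repeat=n):
            score = acoustic_log_likelihood(feats, words, templates)
            if score == float("-inf"):
                continue
            score += lm_log_prob(words, bigrams)
            if score > best_score:
                best, best_score = list(words), score
    return best, best_score


if __name__ == "__main__":
    # Build a signal whose per-frame log-energies are roughly 0, 1, 2, 3.
    signal = [math.exp(k / 2) for k in range(4) for _ in range(4)]
    feats = extract_features(signal)
    words, _ = decode(feats, TEMPLATES, BIGRAMS)
    print(words)  # ['recognize', 'speech']
```

With this toy data, the acoustic scores alone cannot separate "recognize speech" from "wreck a nice beach", because both match the features exactly. The separately built language model breaks the tie.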

“What we are seeing over time is that deep learning methods seem to be taking over more of the speech recognition functions. Bit by bit, deep learning structures and mechanisms are replacing older mechanisms that made it harder to do the heavy duty scaling. In the next couple of years, perhaps longer, we will see deep learning architectures used for all components of speech recognition—and image as well.”

He notes that, over time, it might also be possible to package these many functions into a single special-purpose chip, making them even easier to scale.

“People working in deep learning have become very agile in what they’ve learned to do. The field is moving so fast; there are advances in one framework, another in a different one, and all of the deep learning packages have their pros and cons, especially for speech… We’ve worked with all the major packages, some are better than others, but I don’t want to say which. But we also have built a good bit of our own code,” Picheny says.

“Deep learning architectures are now being used for all components of that speech processing system and merging these structures that learn everything to solve the problem as a whole. As you can imagine, it’s much easier to scale something based on a single component and a single architecture, even if that architecture is more complex, than if you have many components that need to be programmed separately. In the future, as that one architecture becomes more mature and standardized, you can see how CPUs might have some inherent assists or there will be chips with these single architectures for doing this in bulk.”

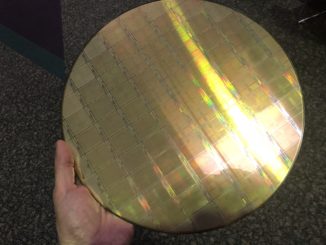

For speech, IBM has its own custom-developed neural network models that feed into Watson (which is still a nebulous thing, hardware- and software-wise; we’re still working on that story). For these models, the key drivers are computation speed and memory. As it turns out, these are also the biggest limitations, particularly on the memory front.

“GPUs are very fast but limited in memory. That’s where the bottleneck is for training on huge quantities of speech. There’s an advantage in keeping everything in local memory on the GPU versus fetching it off the chip. And there are algorithms where people are trying to combine results across multiple GPUs, which means making performance tradeoffs for that level of parallelization. What we really want are faster GPUs with more memory.”
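
Picheny does not name a specific scheme for combining results across GPUs. One common approach, and an assumption here, is synchronous data parallelism. Below is a minimal Python sketch of it, using a toy linear model and simulating each GPU as a slice of the batch. Each "device" computes a gradient on its own shard, and an all-reduce step averages those gradients so every copy of the weights takes the same update. All names are hypothetical. In practice, the averaging runs over the GPU interconnect through a collective-communication library such as NVIDIA's NCCL, and that communication is where the performance tradeoff comes in.

```python
from typing import List, Sequence, Tuple

# (feature vector, target) pair; a hypothetical stand-in for a speech training example.
Example = Tuple[List[float], float]


def mse_gradient(w: Sequence[float], batch: Sequence[Example]) -> List[float]:
    """Gradient of the mean of 0.5 * (w . x - y)^2 over the batch, for a linear model."""
    grad = [0.0] * len(w)
    for x, y in batch:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for i, xi in enumerate(x):
            grad[i] += err * xi
    return [g / len(batch) for g in grad]


def shard(batch: Sequence[Example], n_devices: int) -> List[Sequence[Example]]:
    """Split the batch evenly; equal shards keep the averaged gradient equal to the full one."""
    if len(batch) % n_devices != 0:
        raise ValueError("batch size must be divisible by the number of devices")
    size = len(batch) // n_devices
    return [batch[k * size:(k + 1) * size] for k in range(n_devices)]


def all_reduce_mean(grads: Sequence[Sequence[float]]) -> List[float]:
    """Average the per-device gradients element-wise (the communication step)."""
    return [sum(col) / len(grads) for col in zip(*grads)]


def data_parallel_step(w: Sequence[float], batch: Sequence[Example],
                       n_devices: int, lr: float) -> List[float]:
    """One synchronous SGD step: local gradients, all-reduce, identical update everywhere."""
    local = [mse_gradient(w, s) for s in shard(batch, n_devices)]
    g = all_reduce_mean(local)
    return [wi - lr * gi for wi, gi in zip(w, g)]


if __name__ == "__main__":
    batch = [([1.0, 2.0], 3.0), ([2.0, 0.0], 1.0), ([0.0, 1.0], 2.0), ([1.0, 1.0], 0.0)]
    w = [0.5, -0.5]
    print(data_parallel_step(w, batch, n_devices=1, lr=0.1))
    print(data_parallel_step(w, batch, n_devices=2, lr=0.1))  # same update, up to rounding
```

In this scheme, each device holds a full copy of the weights. It spreads the training data and activations across GPUs, but it does not raise the ceiling on model size, which is one reason more memory per GPU stays on the wish list.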

Aside from custom ASICs just for speech, we asked Picheny about other architectures that seem to be gaining traction in deep learning, beyond pure-play deep learning chips from companies like Nervana Systems (now part of Intel). One likely candidate for speech acceleration could be neuromorphic devices, and IBM has developed its own (TrueNorth). “There is a lot of work on neuromorphic architectures, but the limitation of these chips, even though they do fascinating things, is that you have to program them far differently than a GPU. And with a GPU, there are big communities of people writing special-purpose libraries. The limitation is that the people developing their algorithms don’t want a new way of programming what they’re already doing.”

FPGAs have a similar problem, Picheny says. They are more of an intermediate solution, but they are still not easy to program. He says the rich array of libraries in the GPU CUDA ecosystem is the reason deep learning will continue to be done on GPUs in this middle period, before we might see specialized chips built just for this task.

On the note above about Watson being nebulous: Picheny agrees that it is hard to pin down just how many different frameworks and models are part of Watson’s overall AI umbrella, but says this is not by design. “Everything is evolving so quickly, especially in the last two years.” He says that what Watson was in the beginning, just as with his own speech recognition algorithms and clusters, isn’t what it is now. While we might not get answers from IBM about how many components Watson encapsulates and what hardware is required to make it all work for key applications, one can imagine that Picheny’s story about merging and fusing various components into master networks with specialized functionality might ring equally true for Watson.
