With the “Skylake” Xeon E5 v5 processors not slated until the middle of next year and the “Knights Landing” Xeon Phi processors and Omni-Path interconnect still ramping after entering the HPC space a year ago, there are no blockbuster announcements coming out of Intel this year at the SC16 supercomputing conference in Salt Lake City. But there are some goodies for HPC shops that were unveiled at the event and the chip giant also set the stage for big changes in the coming year in both traditional HPC and its younger and fast-growing sibling, machine learning.

Speaking ahead of the SC16 event, Intel’s top brass in the HPC segment – and we are using the broadest term for that – talked about various kinds of compute it had in the works to goose the performance of parallel workloads.

“Intel remains vitally interested in high performance computing,” explained Charlie Wuischpard, vice president of the Scalable Data Center Solutions Group at Intel. “There is obviously a huge body of work that remains around the science codes and scientific discovery and simulation. Clearly, artificial intelligence and machine learning has become a big deal lately and is a rapidly growing workload. We have plans and are addressing that very aggressively, but we can’t leave behind all of that history that has happened before. So it is really a balancing act. We still see high performance computing as driving business innovation and scientific discovery, and it is bigger than us and it is one of the reasons we are interested in HPC.”

This is aligned, of course, to the theme of SC16, which is that HPC matters. But it is equally true that HPC is hard, and maybe a lot harder than chewing on the mountains of digital data we all generate to have machine learning algorithms come up with their own inferences and make decisions about what that data is and what event to trigger based on that. Deciding something is a lot easier, it seems, than simulating something in full fidelity, and it only takes a few seconds of contemplation to see why this is the case.

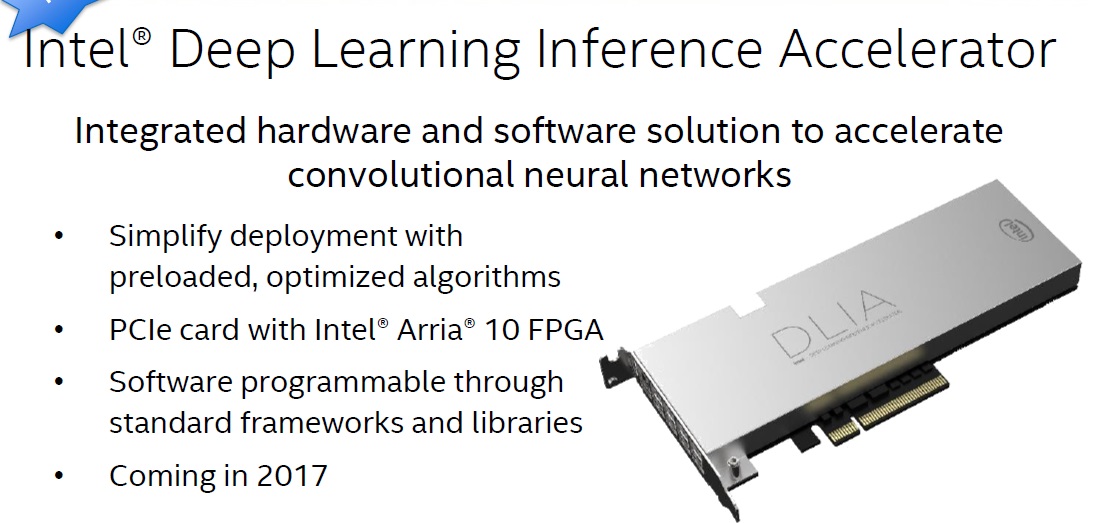

That said, kudos to the hyperscalers again for expanding the definition of HPC, first with Hadoop and distributed analytics on a massive scale and now with machine learning for image, text, voice, and video recognition techniques that will be turned back on HPC. This is one key reason why Intel paid $16.7 billion to buy FPGA maker Altera last year, and another reason why it shelled out cash – rumored to be at least $400 million, but also rumored to be a lot more than that – this summer to buy machine learning upstart Nervana Systems.

On the hardware front, Intel has two important things it unveiled for HPC shops. The first is a special version of the current “Broadwell” Xeon E5 v4 processor that is tuned and priced specifically for HPC workloads that depend on floating point oomph. The new Xeon E5-2699A v4 processor has 22 cores running at 2.4 GHz and includes a 55 MB on-chip L3 cache, and Wuischpard says that this chip will have about 4.8 percent better performance on the Linpack parallel Fortran benchmark test than the stock Xeon E5-2699 v4 processor. The Broadwell chips started shipping to selected hyperscale and HPC shops last fall – we think maybe September or October in 2015 – and were launched at the end of March this year for volume shipments. These chips are the first Xeons to use the 14 nanometer manufacturing processes from Intel, and it took a little longer than usual to work the process kinks out. In any event, the stock Xeon E5-2699 v4 part runs at a slower 2.2 GHz and costs $4,115 each when bought in trays of 1,000 units from Intel. This is a very expensive processor compared to the rest of the Xeon E5 v4 lineup, and even with deep discounts that hyperscalers (particularly those running search engines) get, it still carries a hefty premium. But the high core counts allow server consolidation, and that saves money in all kinds of ways.

The good news for HPC shops – and we think more than a few hyperscalers and cloud builders will be interested in this new chip, too – is that this slightly faster processor fits in the same 145 watt thermal envelope as the E5-2699 v4. The bad news is that it costs $4,938 per chip when bought in those 1,000-unit trays. That is a 20 percent premium for a 4.8 percent increase in floating point performance. You have to really want that extra performance. “Every little bit of performance counts, and our customers have been asking us for this,” explained Wuischpard. If you are putting in a cluster with hundreds or thousands of nodes, the numbers can work.
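To make that tradeoff concrete, here is a minimal Python sketch that works through the list prices and the Linpack uplift quoted above. The function names are ours, and the two-socket node count in `cluster_cpu_delta` is an assumption made for illustration; real deals are discounted from list price, so treat the output as a rough bound rather than actual spend.

```python
# List prices (1,000-unit trays) and the Linpack uplift quoted in the article.
STOCK_PRICE = 4115.0   # Xeon E5-2699 v4, 2.2 GHz
HPC_PRICE = 4938.0     # Xeon E5-2699A v4, 2.4 GHz
LINPACK_GAIN = 0.048   # about 4.8 percent, per Intel


def price_premium(base_price, new_price):
    """Fractional price increase of the new part over the base part."""
    return new_price / base_price - 1.0


def relative_cost_per_perf(base_price, new_price, perf_gain):
    """Cost per unit of Linpack performance for the new part,
    relative to the base part (1.0 means parity)."""
    return (new_price / base_price) / (1.0 + perf_gain)


def cluster_cpu_delta(nodes, sockets_per_node=2):
    """Extra CPU spend at list price for a cluster of the given size.
    sockets_per_node=2 is an assumption, not a figure from the article."""
    return nodes * sockets_per_node * (HPC_PRICE - STOCK_PRICE)


if __name__ == "__main__":
    premium = price_premium(STOCK_PRICE, HPC_PRICE)
    ratio = relative_cost_per_perf(STOCK_PRICE, HPC_PRICE, LINPACK_GAIN)
    print(f"price premium: {premium:.1%}")
    print(f"relative cost per unit of Linpack performance: {ratio:.3f}")
    print(f"extra list-price CPU spend, 1,000 nodes: ${cluster_cpu_delta(1000):,.0f}")
```

On these figures, each unit of Linpack performance costs roughly 14 to 15 percent more on the faster part, which is why the extra spend is easiest to justify when floating point throughput is the binding constraint.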

The other processing news relating to HPC shops is that the “Knights Landing” Xeon Phi processors, which were unveiled last June at the ISC 2016 supercomputing conference in Germany, are now shipping in volume, according to Wuischpard, after being in early ship for the past year and on the minds of the HPC community for several years before that.

“All of the early work, as we have been talking about for the past several months, has been for early ship customers who had ordered the product a few years ago in some cases,” said Wuischpard. “We have been busy installing and bringing these systems up. You will see a number of very large systems on the Top 500 list. The adoption has been fantastic. We have over 50 large scale deployments either done or under way. The thing that is also notable has been the Xeon Phi-F product, which has the integrated Omni-Path fabric on it.” That part shipped just as SC16 was starting, and none of the systems installed to date have the integrated fabric. “So we are looking at another wave of interest for people who have been waiting for this part in particular. We have been working on getting broader adoption for Xeon Phi, and you are going to see that from our partners.”

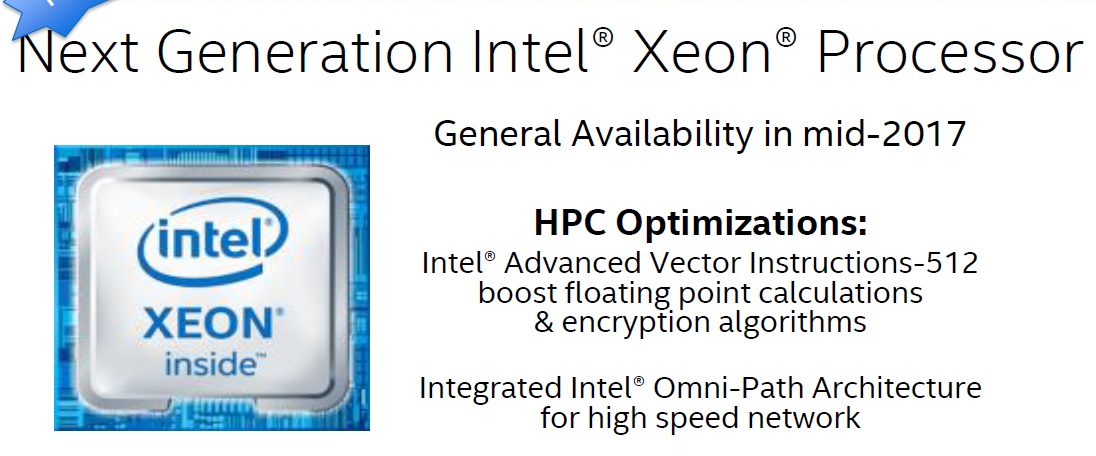

Interestingly, and related to the Knights Landing Xeon Phi in a tangential way, Intel has confirmed what we had all been expecting for years now: The future “Skylake” Xeon E5 v5 processors, due to be announced sometime in the middle of next year, will support the 512-bit Advanced Vector Extensions (AVX-512) floating point and encryption features that made their debut in the Knights Landing chips. As we told you previously, the Skylake Xeon processors are expected to have a single socket for machines that span from two to eight sockets, so in a way, there is no Xeon E5 separate from the Xeon E7 anymore; the top bin parts are expected to have 28 cores as far as we know, but there could be more latent cores on the die, which might have as many as 32 physical cores. We told you back in May 2015 that the Skylakes would support AVX-512 instructions, too, which Wuischpard formally confirmed.

Because many people have been nervous about the 14 nanometer ramp with the Broadwell Xeons and the slowing of the Moore’s Law pace of advancement, Wuischpard took some time to talk about this process and how it relates to being able to ship the faster Broadwell part for HPC customers and deliver Skylake. (Brian Krzanich, Intel’s chief executive officer, said in late October that Skylake Xeons were already sampling to “leading edge” customers, usually the key OEMs plus hyperscalers and HPC centers.)

“It is a good indication of the health of our 14 nanometer manufacturing process,” Wuischpard said. “As yields improve and the manufacturing process improves, you can just drive more performance out of the process. So if you think of Skylake as being on the same manufacturing process, that is a real promise as to what you can expect with Skylake.”

Wuischpard said that Skylake Xeons will be generally available in mid-2017, putting a stake in the ground, and reminded everyone that “this doesn’t mean we will not be shipping quite a few in volume before then.” As we have previously reported, the Skylake Xeons will have an optional integrated Omni-Path fabric, too, just like Knights Landing. The interesting thing to consider is how a Skylake will stack up against a Knights Landing.

A Knights Landing 7290 with 72 cores, with two AVX-512 units per core, runs at 1.5 GHz and delivers 3.46 teraflops in 245 watts, while the slower Knights Landing 7250 has 68 active cores that run at 1.4 GHz and that deliver 3.05 teraflops across those AVX-512 units. Assuming that a Skylake chip with 28 cores runs at maybe 2.0 GHz flat and has only one AVX-512 unit per core, it would yield maybe 900 gigaflops peak at double precision, and with two AVX-512 units per core it would deliver 1.8 teraflops or so. Knights Landing might have a performance advantage thanks to its higher core count and a memory bandwidth advantage thanks to its HMC-derived MCDRAM on-package memory, but Skylake definitely has an ease of programming advantage for those familiar with Xeons.
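The peak figures above follow from simple arithmetic, sketched here in Python. The sketch assumes double precision (eight 64-bit lanes per 512-bit vector) and counts a fused multiply-add as two floating point operations per lane per cycle; the function and dictionary names are ours. The Knights Landing inputs are the clocks and core counts cited above, while the Skylake inputs are this article's guesses, not Intel specifications.

```python
def peak_gflops(cores, ghz, vector_units,
                vector_bits=512, element_bits=64, flops_per_lane=2):
    """Theoretical peak gigaflops for a chip.

    lanes = vector width / element width (8 for AVX-512 at double precision);
    flops_per_lane=2 assumes one fused multiply-add per lane per cycle.
    """
    lanes = vector_bits // element_bits
    return cores * ghz * vector_units * lanes * flops_per_lane


# (cores, clock in GHz, AVX-512 units per core)
chips = {
    "Knights Landing 7290": (72, 1.5, 2),
    "Knights Landing 7250": (68, 1.4, 2),
    "Skylake guess, one AVX-512 unit": (28, 2.0, 1),   # speculative inputs
    "Skylake guess, two AVX-512 units": (28, 2.0, 2),  # speculative inputs
}

if __name__ == "__main__":
    for name, (cores, ghz, units) in chips.items():
        print(f"{name}: {peak_gflops(cores, ghz, units):,.0f} gigaflops peak")
```

These are theoretical peaks at the quoted clock speeds; sustained performance on real codes depends on memory bandwidth, on whether clocks hold under heavy vector load, and on how well the code vectorizes.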

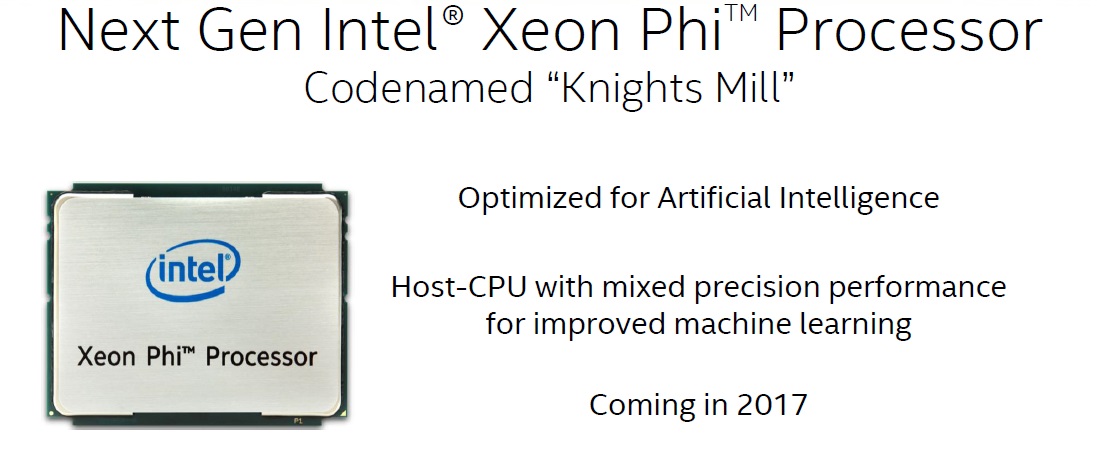

Separately, and ahead of an AI Day that Intel is hosting in San Francisco at the same time as the last day of the SC16 extravaganza, Intel’s Barry Davis, who is general manager of the Accelerated Workload Group that includes Xeon Phi chips, Altera FPGAs, and Nervana Systems chips, confirmed again that the future “Knights Mill” variant of the current Knights Landing Xeon Phi processor would support mixed precision math, as the company revealed back in August. This should mean 16-bit floating point as well as the normal 32-bit and 64-bit variants, but Intel could have baked 8-bit support in there, too.

Presumably more details will be coming later in the week on Knights Mill and an FPGA adapter card that Intel has created and paired with FPGA Verilog “code” that handles machine learning inference routines. The idea is to use Knights Mill for training neural nets and this FPGA card and its “software” to handle machine learning inference offload for applications. Intel will update that software like other pieces of enterprise software, and Davis said the card offered four times the performance per watt on inference of a CPU alternative. We will presumably hear more about the Broadwell Xeon-Arria 10 hybrid chip that has been in the works for a while now.

Still waiting to see who’s going to be the first cpu vendor to announce unum support on hw level.

Has Gustafson announced his unum 2.0 concept at sc16?

Looks promising. I would definitely wait to see what Knights Mill has to offer before going out and investing big time in an ML setup. Looks like Intel is turning up the heat here, which is great, as competition is definitely needed.

Mr. Morgan, quoting Intel 1K price is deceiving.

Whether buyer or competitor one has to know and understand Intel actual pricing.

Obviously deception is not a word in the Next Platform’s vocabulary, in my opinion the best computing report since PC Tech Journal, Byte, Personal Workstation and High Performance Computing. The reason, for me, depth of reporting, whole platform take, examining business aspects of compute and here on price assessment is where NP falters.

It would be best, when NP reports any price, to do a quick search of the broker offerings to estimate the range and the actual price.

Today 11.17.16; E5 2699 eBay range for one unit is $945 to $6500. Throwing out the sub $1000 low end which are samples, marked Confidential, Abel you’re letting them slip out again to impact the competition, and I just complained to BK, the open market non IDM certified offering mean price is $3341, minimum $1230, maximum $6500 which is the rip off IDM platform certified part. Easily one can estimate an Intel 25% volume discount on this part.

Moving to the Amazon Intel Dealer IDM Certified for their platforms, the rip off parts, mean $8637, minimum $2999, maximum price $11769. What this means is that Intel top tier dealers are more than taking their NRE consumer monopoly overcharge value.

I estimate Intel will let loose in very high volume E5 2699 v4 22c for $2543 and the 20c for $2321.

Mike Bruzzone, Camp Marketing