The computing industry is facing a number of challenges as Moore’s Law improvements in circuitry slow down, and they don’t all have to do with transistor counts and memory bandwidth and such. Another problem is that it has gotten progressively more costly to design chips at the same time that mass customization seems to be the way to provide unique processing capability specifically for precise workloads.

In recent decades, the US Defense Advanced Research Projects Agency pumped huge sums of money into designing and manufacturing gigascale, terascale, and petascale systems, but in recent years this development arm of the US military has been more concerned with efficient computing it can take onto the battlefield than with pursuing exascale systems. This change in focus is warranted, given the mission of the US military and its need for in-field computing, but it has certainly had long-term effects on investments in exascale system research and development, pretty much leaving the US Department of Energy and National Science Foundation to shoulder the burden of the next three orders of magnitude in development on their own.

The interesting bit is that DARPA doesn’t just want more efficient computing, it wants to foster faster and lower cost development of new processors, and is doing so under the $32 million Circuit Realization At Faster Timescales (CRAFT) program, which was announced in August 2015. (This funding is distinct from the pool of money that was used to invest in Rex Computing to foster its 256-core Neo massively parallel processor.) The goal of the CRAFT program is to design chips using 16 nanometer processes for under $1 million a pop, with DARPA kicking in some funds to cover the costs of making masks for the chips when the designs are done.

Adapteva, which was founded in 2008 by Andreas Olofsson, is one of the beneficiaries of the CRAFT program and used that investment and the help with the masks to crank up the number of cores on its Epiphany massively parallel chips from 64 cores on the Epiphany-IV to a whopping 1,024 cores on the Epiphany-V – and did so on the shoestring budget that DARPA mandated. Olofsson said that a mere five people worked on the Epiphany-V chip and that he did 80 percent of the work himself in adding 64-bit processing and addressing to the chip as well as scaling the cores up by 16X.

It has been a long and somewhat strange journey for Olofsson, who designed TigerSHARC digital signal processors (DSPs) at Analog Devices for a decade before striking out on his own in 2008 to build a floating point coprocessor for systems that would be ten times more power efficient than the devices on the market at the time. The Epiphany-I prototype chip had 16 minimalist RISC cores (with 35 instructions, that’s it) and was implemented in 65 nanometer processes and was working by early 2009, and after seeing what it could do, BittWare, a supplier of FPGA platforms, kicked in $1.5 million in Series A funding to help push development of the Epiphany chips along. We don’t know much about Epiphany-II, but we do know that the Epiphany-III had 16 cores running at up to 1 GHz and used a 65 nanometer process as we presume the Epiphany-II did. The Epiphany-III chip delivered 32 gigaflops of single precision (SP) floating point performance.

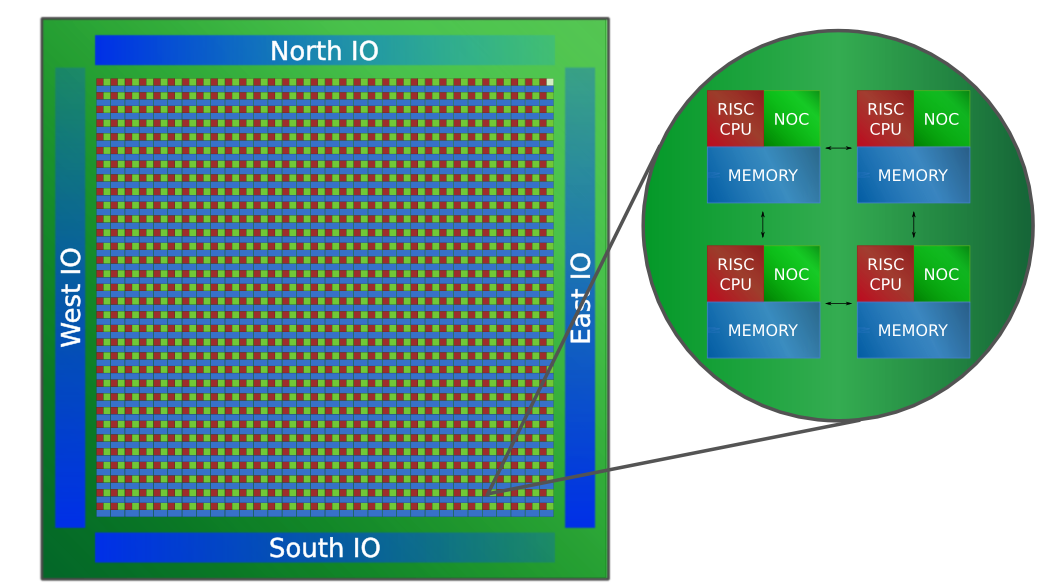

In October 2012, just after securing $3.6 million in funding from network equipment maker Ericsson and Carmel Ventures, Adapteva tried to break into the big time with its 64-core Epiphany-IV processor, using a Kickstarter program that would put system and desktop boards in the hands of individual investors. That Kickstarter program raised nearly $1 million and allowed Adapteva to put the Epiphany-IV into production using 28 nanometer processes from Globalfoundries. The Epiphany-IV chip put 64 cores running at 1 GHz on a die with 32 KB of static RAM (SRAM) per core. The secret sauce of the design was the 2D mesh network that linked the SRAMs together so any core can access any SRAM bit, with the aggregated SRAM mapped as a single address space across the cores. The Epiphany cores all have a direct memory access unit that can prefetch data from an external flash memory device, too. The Epiphany chip runs ANSI C and C++, adheres to IEEE floating point math, and uses OpenCL interfaces to offload parallel processing from a CPU; it is essentially a math coprocessor. Running at the peak of 1 GHz, the Epiphany-IV could deliver 100 gigaflops of aggregate SP floating point processing, which worked out to around 70 gigaflops per watt.
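To make the idea of a single address space over a 2D mesh a little more concrete, here is a minimal sketch. It assumes a hypothetical flat layout in which each core's 32 KB of SRAM occupies one contiguous slice of a global range and the 64 cores sit on an 8 by 8 grid. The layout and the names `locate` and `mesh_hops` are illustrative assumptions, not Adapteva's actual memory map or routing scheme.

```python
# Illustrative only: a hypothetical flat layout, not the real Epiphany memory map.
GRID_ROWS, GRID_COLS = 8, 8          # 64 cores, as on the Epiphany-IV
SRAM_PER_CORE = 32 * 1024            # 32 KB of local SRAM per core, in bytes
TOTAL_SRAM = GRID_ROWS * GRID_COLS * SRAM_PER_CORE


def locate(global_addr):
    """Map a global byte address to (row, col, local offset)."""
    if not 0 <= global_addr < TOTAL_SRAM:
        raise ValueError("address outside the aggregated SRAM")
    core_index, offset = divmod(global_addr, SRAM_PER_CORE)
    row, col = divmod(core_index, GRID_COLS)
    return row, col, offset


def mesh_hops(src, dst):
    """Minimum number of mesh hops between two cores given as (row, col)."""
    return abs(src[0] - dst[0]) + abs(src[1] - dst[1])


# The last byte of the aggregated SRAM lives on the core in the far corner.
row, col, offset = locate(TOTAL_SRAM - 1)
print(TOTAL_SRAM // 1024, "KB aggregate SRAM")
print("last byte is on core", (row, col), "at offset", offset)
print("hops from core (0, 0):", mesh_hops((0, 0), (row, col)))
```

The point of the sketch is only that any core can name any byte with one address, and that the cost of reaching it grows with distance across the grid.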

Four years ago, when the Epiphany-IV launched, Olofsson set a goal for himself to deliver a future family of Epiphany chips etched in 7 nanometer processes and delivered around 2018 – in time for exascale-class systems. This included a chip with over 1,000 cores that would deliver 2 teraflops of performance in a 2 watt power envelope and an aggregate grid of chips that would have 64,000 cores (a grid of sixteen chips with 4,096 cores each) with 1 MB of SRAM per core and deliver 100 teraflops in a 100 watt envelope, or 1 teraflops per watt.

With the Epiphany-V, which has just been unveiled, Olofsson is on the way to this ambitious goal. The existing Epiphany chips are starting to get some traction, too, and there is interest in the Epiphany-V, which has just taped out at Taiwan Semiconductor Manufacturing Co using its 16 nanometer FinFET processes.

“In general, things are pretty good,” Olofsson tells The Next Platform. “We have hit steady state and have become a sustainable company. It has taken eight years, but we have controlled our burn rate and now we are still at the early adopter stage, which we have adjusted to. Now we have academics, research labs, and DARPA using our chips, and we do very well there. But we are still waiting to break through to the mainstream.”

Companies like Tilera and Calxeda tried to break into the mainstream on massively parallel processors, the former spending $100 million on its own RISC designs and the latter spending $150 million using ARM cores. Tilera didn’t reach orbit despite its burn rate and good technology, and was eventually absorbed by EZchip and is now part of Mellanox Technologies (and is the basis of the BlueField programmable network adapter cards that it launched in June). Calxeda went bankrupt, but some of its networking lives on. Adapteva, by contrast, has raised less than $7 million in nine years, including some debt financing – and significantly, is still here, doing what it set out to do.

Cambrian Chip Explosion

Olofsson pitched DARPA on the idea of building the Epiphany-V before the CRAFT program was even started, as it turns out, and the chip was designed for under $1 million – again, not including some mask costs that were pretty substantial and picked up by DARPA and that Olofsson was not at liberty to divulge.

The idea that DARPA has – and we think it is a valid one – is that if many chip makers speed up their designs as well as make them less costly using sophisticated tools while at the same time tailoring those chips for very specific functions, the result could be a Cambrian explosion in chips, all fit for purpose and all done at a reasonable cost.

“People are seeing the end of Moore’s Law, and they are also seeing an explosion in design costs, and everybody is trying to figure out how to keep this thing going,” says Olofsson. “You can’t make 16 nanometers the exclusive domain of Qualcomm, Intel, and others. We want small design teams to have that capability as well. So, when I pitched it, it fit with some of the thinking at DARPA, and the idea was to reduce the design costs by 100X compared to the average out there in the industry.”

The core in the Epiphany-V processor is binary compatible with the four prior generations of chips, which in the aggregate have shipped to over 10,000 customers (with nearly 6,000 of those coming through the Parallella Kickstarter program from four years ago). The specifications for the Epiphany designs are open, and this is one of the reasons why the chips have been popular among academics and researchers. Other chip startups, including the deep learning ones we cover here like Nervana Systems and Wave Computing, only show off details under non-disclosure agreements, according to Olofsson.

The DARPA grant to Adapteva did not require Epiphany-V to move to 64-bit processing and memory addressing, but Olofsson figured it made sense to do it now. It took about a month – a mere month! – to upgrade the architecture from 32 bits to 64 bits. It took years and hundreds of engineers for ARMv7 to move to ARMv8, by contrast, and look at how long it took Intel way back when. (In the latter case, that was politics and marketing as much as technology.) Olofsson says that because the architecture was cleanly done from the beginning, upgrading it was no big deal. (To be fair, ARM and Xeon chips do more things and have much more legacy to carry forward.) The addition of double precision floating point means that HPC centers can now take Epiphany coprocessors seriously, and the doubling of SRAM memory to 64 KB per core, which is 64 MB total across those cores, makes it easier to run beefier applications. The 2D mesh is now 136 bits wide and has a much larger bisection bandwidth, too, given that the core count has scaled up by a factor of 16X between the Epiphany-IV and Epiphany-V generations.

Conceptually as well as literally, the Epiphany chips are a tightly coupled cluster of compute nodes that run mathematical calculations instead of full operating systems, but there is a possibility that future Epiphany chips will have their own bootable core and become a processor in their own right instead of a coprocessor. (More on that in a second.)

“The elegance of 2D, mesh-oriented architectures is really underappreciated,” Olofsson contends. “When you combine that type of architecture with a very tightly coupled design team – it is hard to imagine a more tightly coupled design team than one person – you can get very fast improvements on a design. That is what I found out over the past year. Layout designs would feed back into circuit, circuit would feed back into architecture, and I would go through these iteration cycles on a daily basis. If you look at a company like Intel or Qualcomm, when you have a thousand people on a design team, nothing happens quickly. It is just impossible. So the revolution cycle, they are just on a different timescale. So startups definitely have an advantage.”

To be fair, chip startups like Adapteva don’t have much baggage. They have a carry-on bag, while Intel has a truckload of steamer trunks.

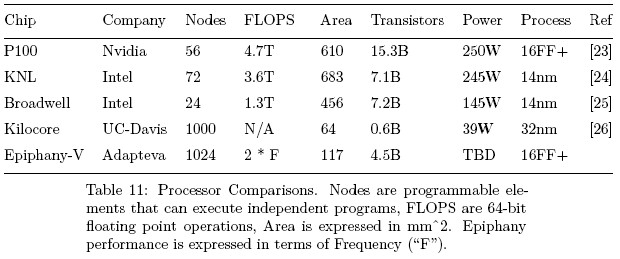

Another big challenge for the Epiphany-V was the power gating approach, which allows each individual core to be powered off and powered on as needed. What this means is that if a workload pauses, the chip will essentially burn no juice at all except enough to keep its memory in place. The Epiphany-V has 2,052 separate power domains and weighs in at 4.56 billion transistors in 117 square millimeters of chip area. By the way, this is a little bit smaller than Apple’s latest A10 “Hurricane” ARM processor for its iPhone 7s, which is 125 square millimeters but has only 3.3 billion transistors using the same TSMC process. The Epiphany-V could, in theory, be used in a smartphone, but peaks at 20 watts going full throttle with all cores on.
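For a rough sense of what those die figures imply, the transistor density of each chip can be worked out from the numbers quoted above. This is a back-of-the-envelope sketch; the dictionary layout and function name are just illustrative choices, and the densities are simple ratios rather than anything Adapteva or Apple has published.

```python
# Transistor counts and die areas as quoted in the article.
chips = {
    "Epiphany-V": {"transistors": 4.56e9, "area_mm2": 117},
    "Apple A10": {"transistors": 3.3e9, "area_mm2": 125},
}


def density_millions_per_mm2(chip):
    """Millions of transistors per square millimeter of die area."""
    return chip["transistors"] / chip["area_mm2"] / 1e6


densities = {name: density_millions_per_mm2(c) for name, c in chips.items()}
for name, d in densities.items():
    print(f"{name}: {d:.1f} million transistors per square millimeter")

ratio = densities["Epiphany-V"] / densities["Apple A10"]
print(f"Epiphany-V packs about {ratio:.2f}x the transistors per unit area")
```

On these figures the Epiphany-V comes out at roughly 39 million transistors per square millimeter against about 26 million for the A10. That is plausible for a chip dominated by regular SRAM arrays and small repeated cores, though the article does not break the area down.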

More interestingly, the 2D mesh interconnect can be extended across multiple systems using glueless memory I/O links to over 1 billion cores and over 1 PB of aggregate SRAM memory. By way of scale, a grid of 1,024 Epiphany-V chips cross linked in a 32 by 32 array would bring together just over 1 million cores. (What fun it would be to build such a monster. . . .)
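As a quick sanity check on scale, the per-chip figures quoted in this article (1,024 cores and 64 KB of SRAM per core) can be multiplied out for a 32 by 32 array of chips. The sketch below is plain arithmetic on those numbers. The constant names are illustrative, and the billion-core line only asks how many such chips that ceiling would take, not whether anyone plans to build it.

```python
import math

CORES_PER_CHIP = 1024        # Epiphany-V, per the article
SRAM_PER_CORE_KB = 64        # per the article
GRID_SIDE = 32               # a 32 by 32 array of chips

chips = GRID_SIDE * GRID_SIDE
cores = chips * CORES_PER_CHIP
sram_gb = cores * SRAM_PER_CORE_KB / (1024 * 1024)

# How many 1,024-core chips would it take to reach one billion cores?
chips_for_billion = math.ceil(1_000_000_000 / CORES_PER_CHIP)

print(f"{chips:,} chips -> {cores:,} cores, {sram_gb:.0f} GB of SRAM")
print(f"chips needed for 1 billion cores: {chips_for_billion:,}")
```

A 32 by 32 array therefore lands at about a million cores and 64 GB of SRAM. Read this way, the billion-core and petabyte figures describe how far the mesh can be extended in principle, which would take on the order of a million chips of this size.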

At the moment, the Epiphany chips do bare-metal math processing, but this seems about to change. To run Linux and have access to general purpose math libraries, runtimes, and networking stacks requires a general purpose CPU.

“Every one of our cores is a proper RISC processor, but we don’t have memory management or a sophisticated multi-tier caching system built in,” explains Olofsson. “Which means that it doesn’t run Linux very well and not with the performance that people expect. Adding a CPU host core to the chip was in the plans here, but we ran out of time. If there is a proper CPU core on there, it will be RISC-V, there is no doubt about that. I had some loose plans to do that, and it will be in our next iteration.”

RISC-V, of course, is the open source RISC instruction set architecture that was created at the University of California at Berkeley; it is gaining momentum and is a possible contender against ARM and Power in the fight to take some share away from X86 in the datacenter.

The Epiphany-V taped out over the summer, and chips will be coming back within the next four or five months, hopefully by January if all goes well. Clock speeds and final power consumption are not being disclosed, but Olofsson hints they should be in line with the Epiphany-IV chips.

Everyone wants to know what the performance of the Epiphany-V chip will be, and no one more than Olofsson himself. And he is being a bit cagey until real results are available.

Using a simulator with a 500 MHz clock speed on the 1,024 cores, Olofsson reckons the Epiphany-V can do about 8.55 gigaflops per square millimeter at double precision. He reckons that the Nvidia “Pascal” Tesla P100 accelerator chip weighs in at 7.7 gigaflops per square millimeter, and the Intel “Knights Landing” parallel processor comes in at 5.27 gigaflops per square millimeter. The top-end “Broadwell” Xeon E5 v4 chip weighs in at 2.85 gigaflops per square millimeter. If the clock speeds can be cranked up on the Epiphany-V to 1 GHz, it will have a 2.2X advantage on compute density over the Pascals and a 3.2X advantage over the Knights Landings. We will do the comparisons when the chips are back and the watts and slots are known, including the KiloCore chip out of the University of California Davis and the PEZY-SC chip from Exascaler, which was the first to break through the 1,000-core barrier two years ago.
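The 2.2X and 3.2X figures follow from doubling the simulated 500 MHz density and dividing by the competitors' numbers. The sketch below reproduces that arithmetic. It assumes, as the comparison implicitly does, that compute density scales linearly with clock speed on an unchanged die; the function and variable names are illustrative.

```python
# Double precision gigaflops per square millimeter, as quoted in the article.
EPIPHANY_V_AT_500_MHZ = 8.55
others = {
    "Tesla P100": 7.7,
    "Knights Landing": 5.27,
    "Xeon E5 v4": 2.85,
}


def scaled(density, from_mhz, to_mhz):
    """Scale compute density linearly with clock speed (an assumption)."""
    return density * to_mhz / from_mhz


epiphany_1ghz = scaled(EPIPHANY_V_AT_500_MHZ, 500, 1000)
advantage = {name: epiphany_1ghz / d for name, d in others.items()}

print(f"Epiphany-V at 1 GHz: {epiphany_1ghz:.1f} gigaflops per square millimeter")
for name, ratio in advantage.items():
    print(f"advantage over {name}: {ratio:.1f}X")
```

The same arithmetic puts the hypothetical 1 GHz part at about 6X the density of the Broadwell Xeon, a ratio the article does not state but which follows from its numbers.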

So … if the cores are very simple, maybe we can expect only one FLOP per core per clock cycle.

Hence we could expect ~ 1024*FREQ*2 (2 FMAs per clock).

So anywhere between 1000-2000 GFLOPS @ 20 watts.

That could yield an impressive 2000 / 20 ~ 100 GFLOPS/watt.

For reference: the Nvidia P4 (Pascal-based 50 watt part) runs at about 4146 GFLOPS / 50 watt ~ 83 GFLOPS/watt.

I am wondering if this beauty could handle 500-1000 docker containers…

>Cristian Vasile says: October 25, 2016 at 3:37 pm

>I am wondering if this beauty could handle 500-1000 docker containers…

No MMU, remember? This is why the earlier Epiphanys didn’t live up to expectations. This chip shares more with GPUs in compute mode than with anything else. For all those containers, Knights Landing might work since each node is (an original?) P5, with MMU and all (I think–please correct me if I’m wrong!)