For storage at scale, particularly for large scientific computing centers, burst buffers have become a hot topic for both checkpoint and application performance reasons. Major vendors in high performance computing have climbed on board and we will be seeing a new crop of big machines featuring burst buffers this year to complement the few that have already emerged.

The what, why, and how of burst buffers can be found in our interview with the inventor of the concept, Gary Grider at Los Alamos National Lab. But for centers that have already gotten the message and are looking for a boost in their storage performance, the real question is what burst buffers will yield now, and how workflows down the line will benefit.

The storage tides have shifted over the course of 32 gatherings for the International Conference on Massive Storage Systems and Technology (MSST), which is being held in Santa Clara this week. Sessions on forthcoming exascale architectures as well as approaches to storing and whipping around large enterprise data have been on the menu, and several noteworthy speakers from research and the vendor community have described what’s ahead, with particular emphasis on burst buffer technology.

As we have noted in past interviews focused on the CORAL systems and their burst buffer approaches, as well as those that will appear later this year on Los Alamos National Lab’s Trinity supercomputer, there are real storage performance benefits to be gleaned. Others, including IBM, are leading the charge on the performance modeling side to see what the addition of burst buffers could mean for machines in the 2020 timeframe.

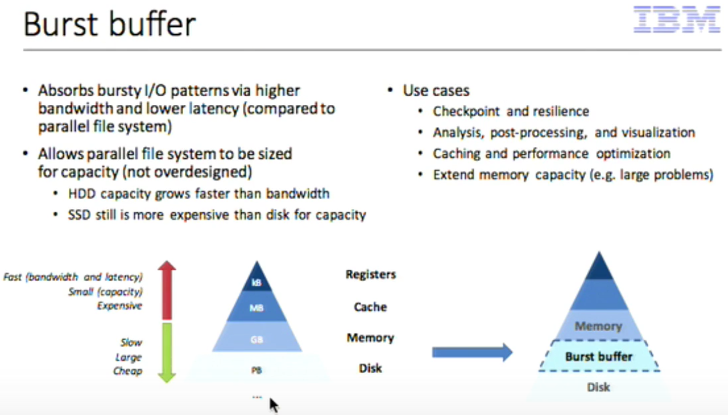

One key guidepost for these efforts is the new Alliance for Application Performance at Extreme Scale (APEX) workflows report, a dense document detailing the specific requirements for a range of scientific and data-intensive workflows. Using this report, IBM’s Yoonhoo Park, of the IBM Research systems software group, and his team have modeled what burst buffers might mean for future machines and workflows, and shared their findings at this week’s MSST meeting. When looking at the range of storage challenges on the horizon, Park says, “the biggest challenge is that current parallel file systems are not able to provide an adequate fraction of the aggregate disk bandwidth.”

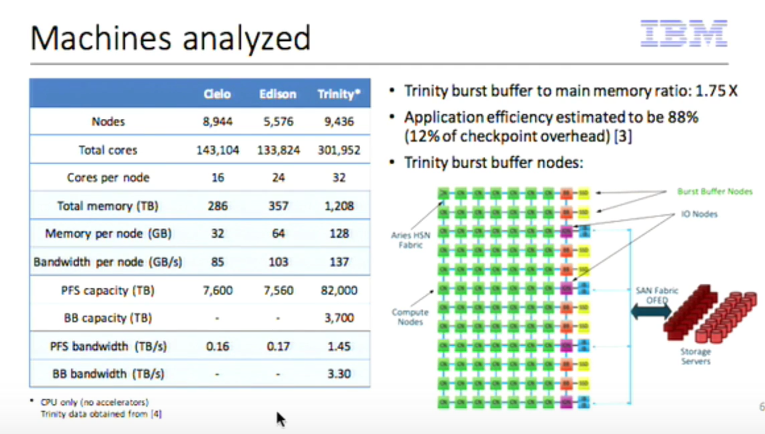

“We’ve been told we could face application checkpoints on the order of 32 petabytes. If we want to transfer that out of main memory to a burst buffer in 30 minutes, we would need bandwidth on the order of 16 terabytes per second. So, with the Trinity machine, the bandwidth to their parallel file system is on the order of 1.5 terabytes per second. So yes, we’re a long way from that.”
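Park's figures are easy to sanity-check. The following is a minimal back-of-envelope sketch, not anything from IBM's model: the function names are hypothetical, and it assumes decimal units (1 PB = 1,000 TB), which the quote does not specify. Under that assumption the 32 PB checkpoint works out to a little under 18 TB/s, in line with the "order of 16 terabytes per second" Park cites.

```python
# Back-of-envelope check of the checkpoint figures quoted above.
# Assumption: decimal units (1 PB = 1000 TB); the quote does not say which.
# Function names are illustrative only.

TB_PER_PB = 1000.0


def required_bandwidth_tb_per_s(checkpoint_pb: float, window_minutes: float) -> float:
    """Sustained bandwidth (TB/s) needed to move a checkpoint within the window."""
    return checkpoint_pb * TB_PER_PB / (window_minutes * 60.0)


def drain_time_minutes(checkpoint_pb: float, bandwidth_tb_per_s: float) -> float:
    """Minutes needed to move a checkpoint at a given sustained bandwidth."""
    return checkpoint_pb * TB_PER_PB / bandwidth_tb_per_s / 60.0


# Figures taken from the quote: 32 PB checkpoint, 30-minute window,
# roughly 1.5 TB/s to Trinity's parallel file system.
needed = required_bandwidth_tb_per_s(32, 30)
gap = needed / 1.5
hours_at_trinity_rate = drain_time_minutes(32, 1.5) / 60.0

print(f"Bandwidth needed: {needed:.1f} TB/s")                   # 17.8 TB/s
print(f"Shortfall vs. 1.5 TB/s: {gap:.1f}x")                    # 11.9x
print(f"Time at 1.5 TB/s: {hours_at_trinity_rate:.1f} hours")   # 5.9 hours
```

Put another way, at the parallel file system rate quoted for Trinity, a checkpoint of that size would take close to six hours rather than thirty minutes.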

Further, in the world of high performance computing, applications are such a mixed bag that coming up with a one-size-fits-all approach to the problem is even more complicated. I/O patterns are irregular and unpredictable, multiple processes write to the same file, and these issues, along with others specific to particular workflows, are dramatically dragging down storage system performance. However, using the APEX workflows document, IBM researchers were able to predict how the burst buffer might contribute to overall storage performance.

As seen below, there are specs for three Department of Energy machines. The burst buffer nodes are shown in orange and the compute nodes in green. As a side note, these are different burst buffer configurations than on the forthcoming CORAL machines at Livermore and Oak Ridge, where the burst buffers are on each of the nodes. The performance modeling predictions, based on the increased problem sizes anticipated by 2020, show around double the I/O performance from the parallel file system to the burst buffer and around 20X over Cielo’s parallel file system (for the same checkpointing interval).

“Disks give us a lot of capacity, which is great,” Park says, “but they don’t give us the latency or the performance. So the burst buffer is really filling the niche in between—it gives us benefits in terms of latency, even if not capacity. It fills in the memory hierarchy pyramid nicely.”

Currently, he says, IBM researchers are looking at those 2020 APEX workflow demands and deciding how burst buffers will integrate with the architectures for the Crossroads and exascale machines. But clearly, there is still a lot of work to be done to reach the I/O targets for those systems. In 2020, Trinity will be nearing the end of its useful lifetime. Crossroads is a Trinity replacement: a tri-lab computing resource for existing simulation codes and a larger resource for the ever-increasing computing requirements of the weapons program. During the FY21-25 timeframe, Crossroads will provide a large portion of the AT system resources for the NNSA ASC tri-lab simulation community: Lawrence Livermore National Laboratory (LLNL), Los Alamos National Laboratory (LANL), and Sandia National Laboratories (SNL). The goal will be to perfect the burst buffer concept and make the machine a representative example, along with the CORAL systems, of what burst buffers will contribute to overall storage system and application performance.
