When it comes to national priorities for funding leading class supercomputers, the National Nuclear Security Administration (NNSA) tends to have a strong case to make. The modeling and simulation of nuclear stockpile degradation, storage, and maintenance requires massive machines to lend a high resolution view into what happens to nuclear and other weaponry and defense-related material over time in different conditions.

This is one of those areas of HPC that is not widely discussed, although it constitutes a great deal of HPC software innovation that has taken place over the years, particularly in the desert of New Mexico at Los Alamos National Laboratory.

The entire fleet of supercomputers at Los Alamos National Laboratory (LANL), across both classified and unclassified divisions, totals between two and three petaflops, according to the center’s head of high performance computing, Gary Grider. But of course, this will change significantly when the massive Trinity machine comes online at Los Alamos in 2016, which will bring the lab’s capacity to between forty and sixty petaflops.

That pre-Trinity petaflops figure does sound rather low, especially since we have to assume there are other classified systems that offer additional capacity beyond Cielo, a 1.3 petaflops Cray-built system that handles both classified and unclassified workloads and is hitting the end of its lifespan this year (it is a midget compared to the other monoliths Cray is building for other national labs). In 2013, Los Alamos decommissioned one of its most powerful machines, the IBM Roadrunner, which in 2008 was the first system to break the petaflop barrier and provided 1.7 petaflops of peak computing power.

The $174 million Cray-made Trinity machine will be operated by the NNSA and LANL to model nuclear stockpiles and to serve a broader array of classified and unclassified scientific and technical computing projects. There is a range of applications that track a number of different scenarios across nuclear and weapons stockpiles, but even with the Cray XE6 petaflopper, Cielo, on site, LANL was hitting a limit with its codes. For instance, as Bill Archer, program director for LANL’s Advanced Simulation and Computing Program, notes, a machine like Trinity is the only class of system that could adequately tackle what is known as “The Problem” in the weapons stockpile world: a multiphysics 3D simulation that (let your imagination run wild on this horror) factors in complex models of thermonuclear burn, fission, hydrodynamics, and the photonic interactions from radiation.

Although the existing Cielo machine is far from puny, the code could only run on half the machine, and only after a three-to-four-day wait for an eight-hour run, because so many other projects were eating the machine. Aside from that more practical concern, nodes cannot go down during such a simulation, and fast interaction between nodes is critical. This meant NNSA needed to do extensive resource management to ensure reliability in advance. However, when NNSA went to run its simulations, the memory was exhausted just using half the Cielo system, and the file handling of the machine caused major data movement problems. As we’re finding out, this memory wall is a key barrier being addressed with other massive scale systems, including the Summit machine coming to Oak Ridge National Lab in 2018.

Memory was the overriding bottleneck, as it is with many codes that are hitting the limits of the supers they’re running on. The LANL team cut down on the physics side to improve accuracy and trim memory usage by 40 percent, according to Archer, but even that didn’t solve the problem. The simulation was simply too large and the memory too weak to support it.

Consider the Trinity machine, which has been designed specifically around single simulations that are capable of taking the whole of a multi-petaflops machine. It has between 2 and 3 petabytes of main memory, a 7 petabyte flash array with burst buffer, and 82 petabytes of disk. It will be interesting to follow up with Archer post-Trinity and see how much compute and memory could be left on the table before they scale the simulation to meet the capabilities of Trinity.

To be fair, it’s not just NNSA projects that run on LANL supercomputers. For instance, most recently, we learned about a partnership through LANL’s Institutional Computing Program, which provides over 400 million CPU hours each year based on a proposal system for LANL projects in industry and research that require access to large-scale computing systems.
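To put that allocation figure in perspective, here is a rough back-of-the-envelope sketch. It assumes, and this is our assumption rather than anything LANL has stated, that a "CPU hour" means one core busy for one hour, and that the 400 million hours are spread evenly across a 365-day year.

```python
# Back-of-the-envelope: how many cores would have to run nonstop all year
# to deliver 400 million CPU hours?
# Assumptions (ours, not LANL's): one "CPU hour" = one core for one hour,
# and the allocation is spread evenly over a 365-day year.

CPU_HOURS_PER_YEAR = 400_000_000   # "over 400 million", so this is a floor
HOURS_PER_YEAR = 365 * 24          # 8,760 hours

equivalent_cores = CPU_HOURS_PER_YEAR / HOURS_PER_YEAR

print(f"Roughly {equivalent_cores:,.0f} cores running around the clock")
```

Under those assumptions the program hands out the equivalent of somewhere north of 45,000 cores running flat out all year, which is a floor since the stated figure is "over" 400 million.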

Programs like this are available at most supercomputing sites, including Oak Ridge National Lab (ORNL), the National Center for Supercomputing Applications (NCSA), and many others. The projects that run on these supercomputers are often national research efforts, but also include partnerships with private companies. For instance, ORNL has worked with companies like Dow, Ford, and other major companies on their applications at scale, just as LANL is working with an oil and gas company that provides services to Shell, Chevron, and other major companies for deep sea drilling.

This most recent effort uses a publicly funded supercomputer at LANL to work with several commercial applications at large scale to improve the operational efficiency of drilling rigs for vessels at sea. Houston Offshore Engineering, using a range of CFD applications from ANSYS and others, is testing rig stability at sea using LANL supers, and hopes to pass the benefit on to its operations in service to Shell, Chevron, and other oil and gas giants that rely on its equipment for deep sea drilling.

According to Bob Tomlinson, who heads LANL’s Institutional Computing Program, the focus right now is on looking ahead to yet another new batch of machines that are being evaluated for the CTS-1 procurements. The RFP deadline for that ended just over a week ago, and they are setting about now trying to understand how applications at LANL and those like the oil and gas CFD codes will mesh with the commodity systems that comprise this series of machines. Not all of the systems in this procurement will be at LANL and despite what one can infer from an RFP called “Commodity Technology Systems” (hence the CTS), this does not mean all-Intel based machines.

What we might call “commodity” here (meaning standard X86 machines) can also mean AMD, but Tomlinson said that for his teams, it means being able to run their codes without modification. That would bar GPUs, for example, or any other kind of accelerator that requires tuning (FPGAs, DSPs, etc.). But to be fair, there are not a lot of choices out there for this type of processor beyond the obvious, so we will see what type of machines they select. The program is a tri-lab undertaking, with systems set to be placed at Sandia, Lawrence Livermore, and Los Alamos National Labs.

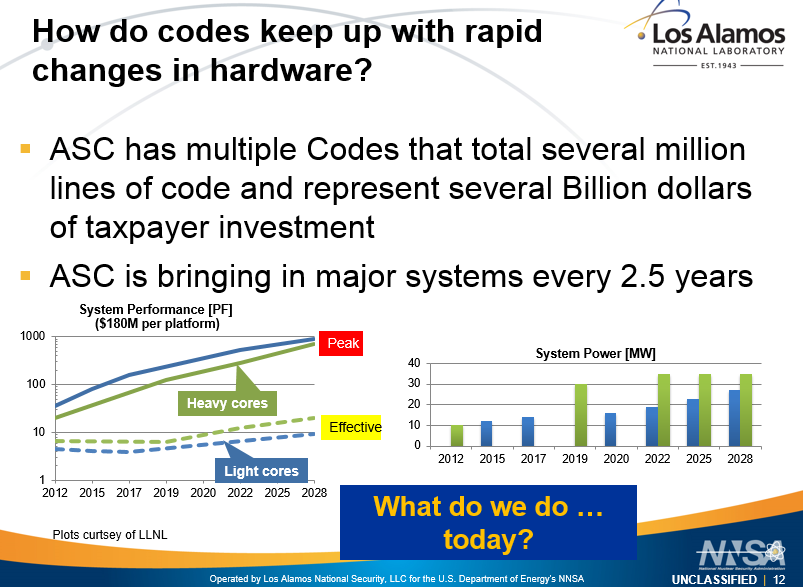

According to the original RFP, the commodity Linux cluster approach is a strategic decision “based on the observation that the commercial off-the-shelf marketplace is demand-driven by volume purchases. As such, the CTS-1 procurement is designed to maximize the purchasing power of the tri-laboratories.” They note that “by deploying a common hardware environment multiple times at all three laboratory sites over multiple government fiscal years, the time and cost associated with any one cluster is greatly reduced.” This also means that, strategically speaking, they can cut down on the number of man-hours at the labs fine tuning codes, ostensibly while they save their big developer talent for tuning for the upcoming “Knights Landing” Xeon Phi architecture, putting the novel burst buffer technology into real-world practice at scale, and the other code-specific and efficiency challenges ahead for getting the 30+ petaflops-capable Trinity (and the other machines that were part of that deal at the other two labs) running at something close to high utilization.

This effort is overseen by the Advanced Simulation and Computing Program’s committee, which supports the NNSA efforts for weapons supply modeling. The Sequoia system, which will also be retired at LLNL, is used for these purposes, particularly on the applications and software development side, while other smaller systems at Sandia, including Red Sky, also handle NNSA application efforts. Private partnerships such as the one with Houston Offshore Engineering will continue on unclassified machines at LANL.

The world might be better served were this machine to devote a significant fraction of its capability to high resolution climate simulations given the impending world-wide problems of climate change due to human induced warming.

There are actually several systems that do this already–and several Department of Energy supercomputers are part of these efforts. The modeling of nuclear stockpiles, however, is something that only happens at a few national labs. And if you read about degradation and maintenance for these stockpiles and what can go wrong if that process goes wrong, a changing climate is going to be the last of our concerns.