OpenStack was born at the nexus of high performance computing at NASA and the cloud at Rackspace Hosting, but it might be the phone companies of the world that help it go mainstream.

It is safe to say that most of us probably think that our data plans and voice services for our mobile phones are way too expensive, and as it turns out, that is exactly how our mobile phone operators feel about the racks and rows and datacenters that they have full of essentially proprietary network equipment that comprises their wired and wireless networks. And so, all of the big telcos, cable companies, and service providers are making a giant leap from specialized gear straight to virtualized software running on homegrown clouds, and it looks like the open source OpenStack cloud is becoming the de facto standard for building clouds that provide network function virtualization.

That the telcos, cable companies, and other service providers would gravitate towards an open source cloud controller to underpin the next generation of their networks is not at all a surprise. The Unix open systems revolution began in 1969 at Bell Labs, which gave us the C compiler and the Unix operating system that was written in it, and the telcos were among the first builders of large scale distributed systems. They were, in fact, the hyperscalers of their time, but were probably more on the kiloscale to be honest.

The phone companies operate slightly different kinds of compute farms and networks than the typical hyperscaler or cloud builder. They tend to be a lot more distributed, with multiple tiers of gear spreading out from central datacenters and getting closer and closer to the users in aggregation centers and points of presence, as the most local facilities are called. (We discussed this architecture of telco clouds recently with the folks at commercial OpenStack distributor Mirantis.) When you add all of the nodes up, they are deploying thousands to tens of thousands of OpenStack nodes as they virtualize their network functions. Interestingly, right now the big telcos are not so much worried about containers – they seem to be happy to get their network functions virtualized and running on generic X86 iron. Once that is accomplished, you can bet that they will be working to get the monolithic software behind those network services busted up into microservices and running in containers, but for now, they are mostly happy just getting their software virtualized and off of expensive appliances.

At the OpenStack Summit this week, the big operators were on hand, including AT&T, Verizon, China Mobile, Comcast, Time Warner Cable, and Swisscom, to name a few. AT&T and Verizon, two of the largest telcos in the world, gave out specifics of their own OpenStack implementations and showed how they were using the open source cloud controller at scale.

Sorabh Saxena, senior vice president of software development and engineering at AT&T, gave a presentation about the company’s AT&T Integrated Cloud, and said that the company expected to be able to move 75 percent of the network applications that are currently running on specialized appliance gear to OpenStack clouds by 2020. One of the reasons that AT&T wants to do this is the same one that compelled hyperscalers to build their own systems, switches, network and server operating systems, and other components, and that was so they could scale the infrastructure faster than the cost of that infrastructure.

The infrastructure scale is daunting for the mobile phone operators. Saxena said that mobile data traffic on the AT&T network grew by 150,000 percent between 2007, before the age of smartphones, and 2015, when these devices were the norm, and that the network was shuffling 114 PB of data daily across AT&T’s backbone. Worse still, the company expects that data traffic to grow by a factor of ten between now and 2020. That is not too many years away, and you can see now why AT&T and its telco peers want to shift to commodity iron and open source software to support network functions. Not only will the individual datacenters have to scale up as data volumes grow, but AT&T also has to span nearly 1,000 zones around the globe.
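To put those growth figures in annual terms, here is a minimal back-of-the-envelope sketch in Python. It assumes smooth compound growth, reads "150,000 percent" as a percentage increase (a multiplier of 1,501), and assumes that "now" is 2016, leaving four years until 2020. The function names are ours and purely illustrative; none of this comes from AT&T.

```python
def percent_increase_to_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a growth multiplier (100% -> 2x)."""
    return 1.0 + percent_increase / 100.0


def annual_growth_rate(multiplier: float, years: float) -> float:
    """Compound annual growth rate implied by a total multiplier over a span of years."""
    return multiplier ** (1.0 / years) - 1.0


# Historical growth quoted by Saxena: 150,000 percent from 2007 to 2015.
historical_multiplier = percent_increase_to_multiplier(150_000)
historical_rate = annual_growth_rate(historical_multiplier, 2015 - 2007)

# Projected growth: a factor of ten by 2020.
# ASSUMPTION: the starting year is 2016, so the span is four years.
projected_rate = annual_growth_rate(10.0, 2020 - 2016)

print(f"2007-2015 implied annual growth: {historical_rate:.0%}")
print(f"Through 2020 implied annual growth: {projected_rate:.0%}")
```

Under those assumptions, traffic grew by roughly 150 percent a year over the smartphone era and would still need to grow by a little under 80 percent a year to hit the 2020 projection.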

“The economic gravity of this reality says that we must transform our approach to building networks,” Saxena explained. “And our answer to the challenge is to transition from purpose-built network appliances to open, whitebox commodity hardware that is virtualized and controlled by AIC. We are also liberating the network functions from the same purpose-built network appliances into standalone software components and managing the full application lifecycle with both local and global controllers. Taking this approach prevents vendor lock in and allows us to have an open, flexible, modular architecture that serves the business purposes of scaling to meet the explosive growth at lower cost, increasing speed of feature delivery, and providing much greater agility.”

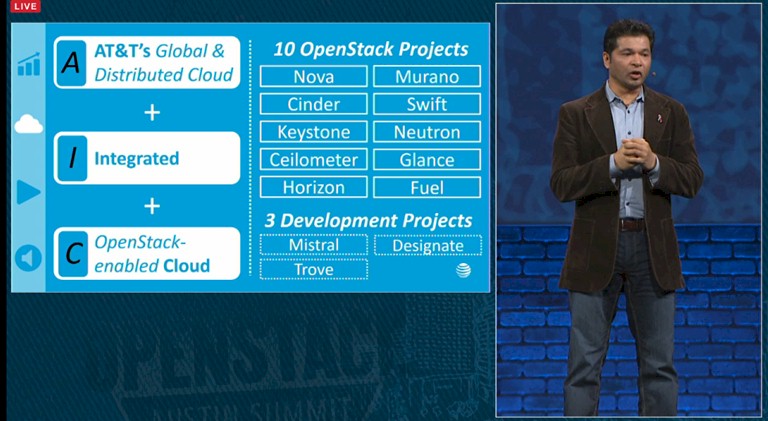

AT&T did a lot of work to modify OpenStack and extend it so it could be used as the foundation for its new global network. Here are the ten core OpenStack components that are used in AIC, and the three more that are being added to the mix later this year:

One interesting aspect of the AIC stack that AT&T has developed is that it uses the same exact code base for both enterprise workloads (which run the AT&T business) and carrier grade workloads (which run the network). Usually, the carrier grade variant of a software stack is more ruggedized and tends to evolve slower, but AT&T is essentially standardizing on the carrier grade version throughout its organization and making sure it performs well enough to do both jobs.

Saxena highlighted the Murano application catalog, which allows for the automation of onboarding of network functions across multiple AT&T zones, and Fuel, which is being used to automate the deployment of zones themselves, as being key components of the stack. (Both of these were created by Mirantis and contributed back to the community, by the way.) AT&T developed its own components as well, including a Resource Creation Gateway, which deploys applications to particular zones through OpenStack, and the Region Discovery Service, which acts as a reservation system to show the network applications running on regions on the AT&T network. Remember, there will be nearly 1,000 OpenStack clusters, so finding stuff will not be trivial, and every site will not be a cookie cutter datacenter with exactly the same hardware and applications.

But perhaps the most important thing that the AIC configuration tools do is get network engineers out of the habit of using Excel spreadsheets and cabling diagrams to manually configure the network. This is all done through a web interface, and all done virtually on the network, and the software is even being extended so AT&T’s customers can use a self-service portal to reconfigure their own network services, running on local POPs, on the fly as they need to.

To make this work, AT&T had to come up with a local controller that could run inside of a zone and integrate with the Neutron networking APIs inside of OpenStack. Then, AIC required a centralized management controller that worked at a global level and integrated all of those OpenStack clusters with the single set of ordering, tech support ticketing, and monitoring tools that the telco already had in place. This global controller is called Enhanced Global Control Orchestration Management Policy, or ECOMP for short. (It is not clear if AT&T will be open sourcing ECOMP, but probably not.)

The upshot of switching to OpenStack and NFV is that AT&T can provide these services from within its datacenter rather than putting five or six or more devices at the site of customers subscribing to its network services, and it also means that it can reconfigure the network for customers on the fly, as its mobile network requires based on load, rather than taking weeks or months to do it. The NFV approach also means that AT&T can grow its network much faster. It took ten months for AT&T to deploy its first 20 OpenStack clusters before all of this automation was created, and it just recently added 54 zones in less than two months. That is more than an order of magnitude faster, which matches the expected data growth AT&T is experiencing. The company did not say how much less costly this approach will be than using network appliances from various vendors, but it has to be pretty substantial to warrant all of this engineering.
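The order-of-magnitude claim is easy to check with the figures AT&T gave. The short Python sketch below is our own arithmetic, not anything AT&T presented; it treats "less than two months" as exactly two months, so the resulting speedup is a lower bound, and the function name is hypothetical.

```python
def clusters_per_month(clusters: int, months: float) -> float:
    """Average deployment rate over a period, in clusters per month."""
    return clusters / months


# Before the automation: the first 20 clusters took ten months.
rate_before = clusters_per_month(20, 10)

# After the automation: 54 zones in less than two months.
# ASSUMPTION: we use exactly two months, which understates the real rate.
rate_after = clusters_per_month(54, 2)

# Ratio of the two rates; the true figure is at least this large.
speedup = rate_after / rate_before

print(f"Before automation: {rate_before:.1f} clusters per month")
print(f"After automation: at least {rate_after:.1f} clusters per month")
print(f"Speedup: at least {speedup:.1f}X")
```

That works out to a jump from 2 clusters per month to at least 27, a speedup of at least 13.5X, which squares with the ten-fold traffic growth AT&T is planning for.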

Red On Red

Ahead of the summit this week in Austin, we caught wind that Verizon was also going to be discussing its NFV efforts on top of OpenStack. So we spoke to Chris Emmons, director of software defined network planning and implementation at Verizon, about its OpenStack setup.

“We have a plan over the next three years to virtualize all of the direction network elements for Verizon infrastructure for both wireless and wireline services,” Emmons said, adding that he believes this will be the largest NFV implementation in the world, running across tens of thousands of servers by the time Verizon is done. “We want our datacenters to look like everybody else’s datacenters because that is the best way to get the cost points and operational efficiencies that we are trying to get to.”

Like AT&T, Verizon will be rolling out OpenStack to its aggregation and edge sites, too, as well as in its core datacenters where the switching gets done and many services reside. Rather than roll its own OpenStack code, which Verizon is perfectly capable of doing, it has chosen Red Hat’s OpenStack Platform (at the Liberty release from last fall), and Emmons says that the company specifically wanted to go with an off-the-shelf implementation and did not want to fork the code in any way. Verizon looked at the OpenStack implementations from Canonical, Hewlett Packard Enterprise, and Mirantis as well, but chose Red Hat because of its long history of supporting Linux in commercial settings.

As you might expect, Verizon is using KVM as its hypervisor on the OpenStack cloud, and while it is keeping an eye on Mesos, Kubernetes, and other container services, for the most part the network applications have been running as monolithic Linux code for a long time and it is not yet appropriate to try to refactor these apps to run them in containers. Verizon has some homegrown code to run its wireless network, but a lot of it comes from Alcatel-Lucent and Ericsson, and in the wireline business there are a slew of appliance providers who supply that code. Just getting everything on a common hardware and software substrate will be such a big improvement that this is what Verizon – like other telcos – is focused on.

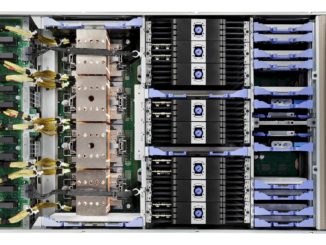

Verizon’s OpenStack is being deployed on plain vanilla PowerEdge servers from Dell at the moment, but Emmons says that the company is looking at Open Compute vendors and custom gear from Dell’s Datacenter Scalable Solutions unit or various original design manufacturers for further down the road when it is scaled up. The OpenStack clusters are implemented using a Clos network architecture (like Google, Facebook, and Microsoft use for their infrastructure), and for now Verizon is using whitebox switches from Dell, network operating systems from Big Switch Networks, and hooking them all into OpenStack through the Neutron virtual networking module.

Verizon is at the beginning of its rollout and has OpenStack clusters deployed in five datacenters and several aggregation sites now, with more to come this year and beyond until the network functions are virtualized three years hence. Emmons, like Saxena, is not able to comment on the TCO benefits of this NFV approach using OpenStack, but did confirm that it is not just about saving on capital expenses, but also about gaining operational efficiencies and being able to scale up the network and add new features and functions more quickly.
