As we have been describing here in detail, there is little end in sight to the train of exascale computing challenges ahead. The most prominent is building dense, energy-efficient systems that can provide a 20X increase in floating point capability between now and the 2020-2024 timeframe and, more important, do so at just a tick above current power limits. For instance, today’s top supercomputer, the Tianhe-2 machine in China, consumes 18 megawatts, while the goal for exascale systems is a peak power draw of 20 megawatts.

Massive power-efficient performance boosts aside, all of this effort will be useless without robust programming models that allow applications to scale and use exascale resources efficiently. Consider another figure alongside the improvements above: the Tianhe-2 system has total concurrency of around three million threads, a number that needs to jump to billions in that relatively short timeframe while still hiding latency and delivering maximum performance. The existing programming models for large-scale supercomputers may well not be able to hit these targets on their own.
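To put the figures above side by side, here is a rough back-of-the-envelope sketch. It uses only the numbers quoted in this article, plus one labeled assumption: "billions" of threads is stood in for by one billion, purely for illustration.

```python
# Figures quoted in the article. ASSUMED_TARGET_THREADS is an illustrative
# assumption standing in for "billions"; it is not a published target.
PERF_INCREASE = 20.0                    # required growth in floating point capability
CURRENT_POWER_MW = 18.0                 # Tianhe-2 power consumption
TARGET_POWER_MW = 20.0                  # exascale peak power goal
CURRENT_THREADS = 3_000_000             # Tianhe-2 total concurrency (approximate)
ASSUMED_TARGET_THREADS = 1_000_000_000  # hypothetical lower bound for "billions"


def efficiency_gain(perf_increase, current_mw, target_mw):
    """Return the required improvement in performance per watt."""
    power_growth = target_mw / current_mw
    return perf_increase / power_growth


def concurrency_gain(current_threads, target_threads):
    """Return the factor by which thread-level concurrency must grow."""
    return target_threads / current_threads


if __name__ == "__main__":
    eff = efficiency_gain(PERF_INCREASE, CURRENT_POWER_MW, TARGET_POWER_MW)
    conc = concurrency_gain(CURRENT_THREADS, ASSUMED_TARGET_THREADS)
    print(f"Required performance-per-watt improvement: about {eff:.1f}x")
    print(f"Required concurrency growth (assumed target): about {conc:.0f}x")
```

Under these assumptions, nearly all of the 20X performance gain has to come from better energy efficiency rather than a larger power budget, while the concurrency that software must manage grows by a far larger factor than the performance itself.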

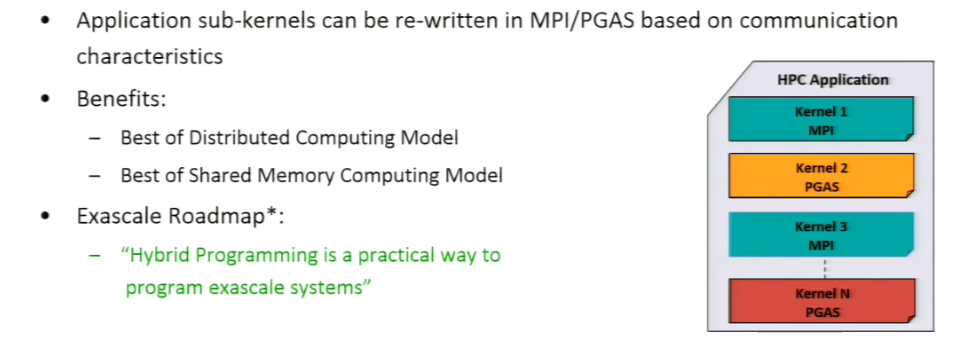

The dominant model, based on distributed memory and MPI, has risen above other approaches, including shared memory. What is appearing with increasing frequency in research on how next-generation exascale systems might be programmed, however, is the Partitioned Global Address Space (PGAS) model. The concept is similar in that it keeps the same distributed memory approach but places a logical shared memory abstraction on top. This provides simplified shared memory abstractions, so any process can read from and write to remote memory; a lightweight one-sided communication framework; and the ability to express irregular communication, which is difficult to do with MPI and will be required for exascale applications. By combining MPI and PGAS, there is a “best of both worlds” possibility that might fit the exascale bill, says DK Panda of Ohio State University.
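The idea of a logical shared address space laid over partitioned memory can be illustrated with a toy model. The sketch below is a single-process simulation, not a real PGAS runtime: the class `PartitionedGlobalArray` and its methods are hypothetical names invented for this example, the "ranks" are just Python lists, and a simple block distribution is assumed.

```python
class PartitionedGlobalArray:
    """Toy single-process model of a PGAS array with block distribution.

    Each simulated rank owns one contiguous partition, but any caller can
    address any element through a global index (a one-sided put or get).
    """

    def __init__(self, size, num_ranks):
        self.size = size
        self.num_ranks = num_ranks
        self.block = -(-size // num_ranks)  # ceiling division
        # One local partition per simulated rank; the last may be shorter.
        self.partitions = [
            [0] * max(0, min(self.block, size - rank * self.block))
            for rank in range(num_ranks)
        ]

    def owner(self, index):
        """Map a global index to (owning rank, offset within its partition)."""
        if not 0 <= index < self.size:
            raise IndexError(f"global index {index} out of range")
        return index // self.block, index % self.block

    def put(self, index, value):
        """One-sided write: the owning rank takes no explicit action."""
        rank, offset = self.owner(index)
        self.partitions[rank][offset] = value

    def get(self, index):
        """One-sided read from whichever rank owns the element."""
        rank, offset = self.owner(index)
        return self.partitions[rank][offset]

    def local_view(self, rank):
        """Return the partition that a given rank holds in its own memory."""
        return self.partitions[rank]


if __name__ == "__main__":
    array = PartitionedGlobalArray(size=10, num_ranks=4)
    # Irregular access pattern: scattered global indices, no matching receives.
    for index in (7, 0, 9, 4):
        array.put(index, index * 10)
    print(array.owner(7), array.get(7), array.local_view(2))
```

In a real PGAS language or library the partitions would live on different nodes and the put and get calls would turn into network operations, but the programmer-facing shape is similar: a global index, with no matching receive posted on the remote side.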

Although PGAS has already found adherents via a number of languages and programming interfaces (including Unified Parallel C or UPC, Co-Array Fortran or CAF, and Chapel, as well as libraries like OpenSHMEM and Global Arrays), it might be best envisioned as a programming model used in combination with MPI. This “hybrid programming model for exascale” makes sense, particularly because it will be impossible to rewrite HPC codes wholesale (especially at the half million lines of code level and up), whereas PGAS can be snapped into the code in just the areas where it is not scaling, boosting the performance of existing code. That is according to Ohio State University’s DK Panda, who gave an overview of how PGAS might fit into the future of HPC and exascale at the HPC Advisory Council Stanford Workshop last week.

Work on PGAS frameworks is not new, but it is finally getting more attention than it did in the past, even after DARPA funded work on such languages to meet the challenges of the many-petaflop systems of the time. This funding effort, under the High Productivity Computing Systems (HPCS) program, backed a number of programming efforts, including Chapel and X10, both of which are programming languages built on the PGAS model. These efforts have since been expanded and are the subject of some interesting benchmarking results against a large-scale hydrodynamics application in the UK.

In the paper describing the results of applying the PGAS languages and approaches, the authors note that because PGAS-based approaches, unlike MPI, rely on a lightweight one-sided communication model and a global memory address space, there is the opportunity to “reduce the overall memory footprint of application through the elimination of communication buffers, potentially leading to further performance advantages.” “The established method of utilizing current supercomputer architectures is based on an MPI-only approach, which utilizes a two-sided model of communication for both intra- and inter-node communication,” the authors remind. “We argue that this programming approach is starting to reach its scalability limits due to increasing nodal CPU core counts and the increased congestion caused by the number of MPI tasks involved in a large-scale distributed simulation.”

Judging by the published papers and conference presentations about PGAS, work and development peaked in 2012-2014, with PGAS frameworks being analyzed and presented at all major supercomputing events, and with major companies, including Mellanox, using such approaches as a key to their co-design architecture for current HPC and future exascale systems. It appears that these conversations are back in full force. Accordingly, we will spend time once per month looking at how the various language implementations like CAF, Chapel, X10, and UPC (and their requisite hardware and networking gear) are being deployed, with varying levels of success.

I don’t understand the dichotomy between MPI and PGAS. MPI-3 RMA is a perfectly reasonable PGAS runtime, as demonstrated by the implementations of Global Arrays/ARMCI (https://wiki.mpich.org/armci-mpi/index.php/Main_Page), OpenSHMEM (https://github.com/jeffhammond/oshmpi), and Fortran 2008 coarrays (e.g. http://www.opencoarrays.org/ and Intel Fortran) on top of it. http://scisoftdays.org/pdf/2016_slides/hammond.pdf summarizes the state of things.