Object storage is not a new technology, but it is something that many enterprises are just starting to adopt as they follow the hyperscalers and HPC centers away from the overhead and scalability limits of traditional storage and its file systems.

As one of the pioneers of commercial-grade object storage, DataDirect Networks has been selling its Web Object Scaler (WOS) product for six years. Through June of this year, customers had amassed more than 200 billion objects across their combined installed base of WOS storage arrays, and they are adding data at a rate of more than 1 billion objects per month. Object storage is a big and fast-growing part of DDN's business, and one that the company wants to push further into the enterprise.

The WOS storage arrays are set to be upgraded with the “Wolfcreek” storage server platform that DDN talked to The Next Platform about back in June. As DDN has previously divulged, Wolfcreek will also be the foundation of future hyperconverged block storage, scale-out NAS arrays, and the Infinite Memory Engine (IME) burst buffer. The Wolfcreek platform has two active-active Xeon-based storage controllers in its 4U rack enclosure and supports up to 72 SAS drives with 12 Gb/sec interfaces or 72 SATA drives, all in a 2.5-inch form factor. Wolfcreek has a PCI-Express fabric within the system and supports the NVM-Express protocol to cut the latency between the processors and main memory in the storage controllers and the storage media in the enclosure. With 2.5-inch NVMe SSDs, DDN can put two dozen SAS drives plus up to 48 NVMe drives in the enclosure, and in the IME burst buffer configuration, the Wolfcreek storage node will support the full complement of 48 NVMe SSDs. The Wolfcreek machine will also be the basis of DDN's future SFA arrays used to host Lustre and GPFS parallel file systems, which will support a mix of disk drives and SSDs, too.

Scaling Out Like A Hyperscaler

Even without the Wolfcreek hardware upgrade, DDN’s WOS object storage has truly beastly scale. A base WOS object storage cluster scales up to 256 nodes, and with a total of 7,680 drives and 46 PB across those nodes, it can house over 1 trillion objects. But that is not the end of the scalability story. WOS has a unified namespace that can span up to 32 clusters, which brings the total to 8,192 nodes with up to 245,760 drives, delivering a maximum of 32 trillion objects and a capacity of 1.47 exabytes.
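The federated totals follow from multiplying the single-cluster limits by the 32-cluster maximum. Here is a quick sketch of that arithmetic, assuming the limits scale linearly with cluster count and using decimal units (1 EB = 1,000 PB); the constant names are illustrative, not anything defined by WOS itself.

```python
# Single-cluster limits as quoted for a base WOS cluster.
NODES_PER_CLUSTER = 256
DRIVES_PER_CLUSTER = 7_680          # works out to 30 drives per node
CAPACITY_PB_PER_CLUSTER = 46
OBJECTS_PER_CLUSTER = 10**12        # "over 1 trillion" objects

MAX_CLUSTERS = 32                   # clusters one unified namespace can span


def federation_totals(clusters: int) -> dict:
    """Scale the per-cluster limits linearly across a federated namespace."""
    return {
        "nodes": clusters * NODES_PER_CLUSTER,
        "drives": clusters * DRIVES_PER_CLUSTER,
        "capacity_eb": clusters * CAPACITY_PB_PER_CLUSTER / 1000,  # decimal PB -> EB
        "objects": clusters * OBJECTS_PER_CLUSTER,
    }


totals = federation_totals(MAX_CLUSTERS)
print(totals)
```

The drive total comes to 32 × 7,680 = 245,760, and the capacity to 32 × 46 PB = 1,472 PB, or about 1.47 EB.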

That is hyperscale. Period.

For the sake of comparison, let’s look at the S3 object storage service on Amazon Web Services, the world’s largest public cloud, which held 2 trillion objects in April 2013. That was the last time AWS gave out object counts for S3, which had doubled in less than a year at that point. Depending on how usage has grown, it could be anywhere from 10 trillion to 15 trillion objects now, we estimate. (AWS began automatically deleting files for users in 2013 and stopped giving out numbers for total objects stored.) What we do know for sure is that the data rates into and out of S3 are growing exponentially, and we think that S3 is a substantial portion of the $7.3 billion annual run rate that AWS set in the second quarter.
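To see how a “doubled in less than a year” growth rate compounds, here is a back-of-the-envelope projection. It assumes steady exponential growth with a fixed doubling time, which AWS has not confirmed; the doubling times and elapsed times below are hypothetical inputs, and this is a sanity check rather than a reconstruction of how the estimate above was made.

```python
START_OBJECTS = 2e12  # S3 object count reported in April 2013


def project_objects(start: float, doubling_years: float, elapsed_years: float) -> float:
    """Project an object count forward assuming constant exponential growth."""
    return start * 2 ** (elapsed_years / doubling_years)


# Hypothetical doubling times and elapsed years since April 2013.
for doubling in (0.75, 1.0):
    for elapsed in (2.0, 2.5):
        trillions = project_objects(START_OBJECTS, doubling, elapsed) / 1e12
        print(f"doubling every {doubling} yr, {elapsed} yr later: {trillions:.1f} trillion")
```

Small changes in the assumed doubling time swing the result by several trillion objects, which is why any estimate of this kind has to be given as a range.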

Like other object storage providers, DDN has had this S3 target in its sights since development of WOS began back in 2008. WOS V1.0 came out in late 2009 and shipped to an unnamed Federal government customer, and in late 2011 the software was updated with an NFS file system overlay and a NAS gateway with a multi-site, federated namespace for managing objects. With the WOS 360 update of the software in 2013, DDN added Global ObjectAssure, a multi-site distributed data protection scheme with local data rebuilds. In June this year, the WOS 360 software was updated to a V2.0 release that added support for the OpenStack Swift object storage APIs, and the WOS arrays were given the option of a new 96-drive capacity tier (in a 5U enclosure) to hang off the WOS 7000 performance nodes, which support 60 drives in their 4U enclosure.
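Global ObjectAssure's internals are not described here, but the general idea of rebuilding lost data from surviving pieces can be illustrated with the simplest erasure code, single XOR parity. This is a generic textbook technique swapped in for illustration, not DDN's scheme, and the function names are hypothetical.

```python
from typing import List


def split_fragments(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-length fragments, zero-padding the tail."""
    frag_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(frag_len * k, b"\0")
    return [padded[i * frag_len:(i + 1) * frag_len] for i in range(k)]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def parity_fragment(fragments: List[bytes]) -> bytes:
    """XOR of all data fragments; lets any single lost fragment be recomputed."""
    parity = bytes(len(fragments[0]))
    for frag in fragments:
        parity = xor_bytes(parity, frag)
    return parity


def rebuild_fragment(fragments: List[bytes], missing: int, parity: bytes) -> bytes:
    """Recover fragment `missing` by XORing the parity with every surviving fragment."""
    recovered = parity
    for i, frag in enumerate(fragments):
        if i != missing:
            recovered = xor_bytes(recovered, frag)
    return recovered


data = b"object storage at scale"
frags = split_fragments(data, 4)
parity = parity_fragment(frags)
print(rebuild_fragment(frags, 2, parity) == frags[2])
```

In a real system each fragment and the parity would sit on different drives or sites. Single parity survives only one loss at a time, which is why production schemes use stronger codes.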

While the expected updates to WOS with the Wolfcreek platform will help drive performance and density for DDN’s object storage, the more important driver could end up being the substantial cost savings that internal object storage arrays can offer compared to cloud-based object storage.

While the big public clouds do not charge to ingest data into their clouds, they certainly do charge as that data is moved around their clouds or accessed from the outside by users or applications. It is this latter cost that anyone weighing on-premises against cloud object storage has to model very carefully for their own workloads.
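A minimal sketch of that kind of modeling follows, assuming a simple pay-per-use price sheet with free ingest, a per-GB-month storage charge, and a per-GB charge for data leaving the cloud. The rates are placeholders chosen for round numbers, not current AWS prices; real ones should be plugged in before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass
class CloudPrices:
    storage_per_gb_month: float  # placeholder rate, $ per GB stored per month
    egress_per_gb: float         # placeholder rate, $ per GB read out of the cloud


def monthly_cloud_bill(stored_gb: float, egress_gb: float, prices: CloudPrices) -> float:
    """Storage plus egress charges; ingest is treated as free."""
    return stored_gb * prices.storage_per_gb_month + egress_gb * prices.egress_per_gb


prices = CloudPrices(storage_per_gb_month=0.03, egress_per_gb=0.05)
stored = 1_000_000  # 1 PB expressed in decimal GB

# Same capacity, two access patterns: read back 10% vs. 100% of the data each month.
for label, egress in (("light reads", 100_000), ("heavy reads", 1_000_000)):
    print(f"{label}: ${monthly_cloud_bill(stored, egress, prices):,.0f} per month")
```

With these placeholder rates, the read-heavy pattern more than doubles the bill even though capacity is unchanged, which is the effect the access-pattern modeling has to capture.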

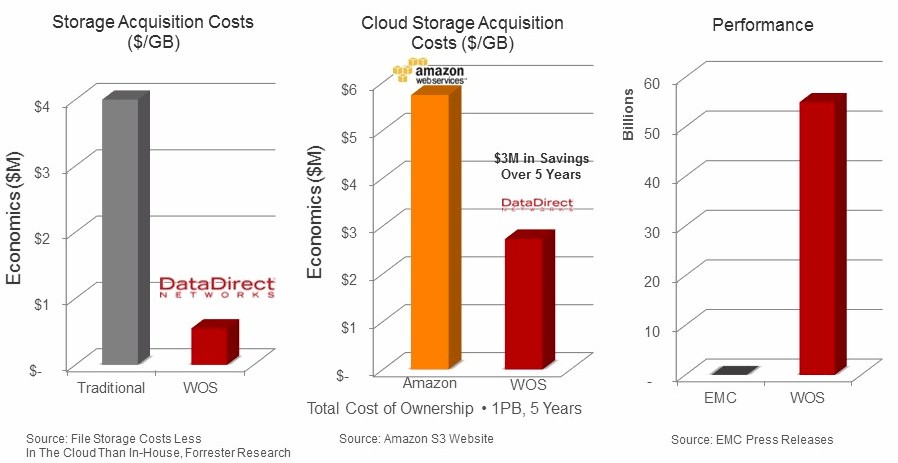

Here is a comparison chart from last year, which DDN put together:

Michael King, director of marketing at DDN, admits that this data is not perfectly up to date, but says that the ratios remain roughly the same and reminds everyone that this comparison assumed that data usage patterns on S3 are typical of those used by DDN WOS customers, meaning high data transfer rates into and out of the object storage.

For fun, King ginned up a more recent comparison for The Next Platform. Buying 1 PB of object storage from DDN on its WOS platform and supporting it for three years would cost $285,000. Using pricing on the AWS site effective this week, the same 1 PB of capacity on S3 would run $543,000 over those three years.

“There is a larger discussion to be had about cost comparison, certainly,” adds King, and we are going to take a look at the numbers in a little more detail down the road with a bunch of different object storage platforms. King elaborates a bit for now: “For example, at year four in this comparison, WOS costs $16,000 (which is just support) because all the cost of the hardware is already accounted for and Amazon costs another $181,000 because you just keep paying for the service. The above costs only account for storage and not data transfer. In the case where most of your data could be accessed locally over a LAN and less over a WAN or the Internet, this cost becomes very significant with Amazon S3 and is trivial for WOS. Even in cases where the majority of your access is over the Internet to both systems you can have significantly lower costs with WOS because Amazon is an unneeded middleman in the bandwidth business (providing extra margins) in this use case.”
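King's figures can be turned into a cumulative cost curve. This sketch assumes the $285,000 WOS cost is paid up front in year one, that WOS then costs $16,000 a year in support from year four on, and that S3 costs $181,000 every year (three years of which is the $543,000 quoted). Like King's numbers, it covers storage only, not data transfer.

```python
# Figures King quoted; the payment timing is an assumption of this sketch.
WOS_FIRST_THREE_YEARS = 285_000      # assumed paid in full in year one
WOS_SUPPORT_PER_YEAR_AFTER = 16_000  # support-only cost from year four on
S3_PER_YEAR = 181_000                # 3 x $181,000 = $543,000 over three years


def cumulative_costs(years: int):
    """Return (year, cumulative WOS cost, cumulative S3 cost) for each year."""
    rows = []
    wos = s3 = 0
    for year in range(1, years + 1):
        if year == 1:
            wos += WOS_FIRST_THREE_YEARS
        elif year > 3:
            wos += WOS_SUPPORT_PER_YEAR_AFTER
        s3 += S3_PER_YEAR
        rows.append((year, wos, s3))
    return rows


def breakeven_year(rows):
    """First year in which cumulative S3 spending reaches the WOS spending."""
    for year, wos, s3 in rows:
        if s3 >= wos:
            return year
    return None


rows = cumulative_costs(5)
for year, wos, s3 in rows:
    print(f"year {year}: WOS ${wos:,}  S3 ${s3:,}  S3 minus WOS ${s3 - wos:,}")
print("S3 overtakes WOS in year", breakeven_year(rows))
```

Under these assumptions, cumulative S3 spending passes WOS in year two, and once WOS drops to support-only costs the gap widens by $165,000 a year.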

Does Object Win Almost Everything In The End?

Object storage was invented expressly because traditional NAS and SAN storage could not scale and was far too expensive to scale even if it did. And that makes us wonder if object storage will dominate in datacenters only a few years from now.

“That is the prevailing trend in conversations,” says Laura Shepherd, director of HPC markets at DDN. “People who are generating a huge amount of data or who are planning on it are thinking of object as a de facto part of their environment in terms of cost reduction, but searchability and location independence are also key. If you add on top of object storage a unified namespace, giving you the ability to put part of it here and another part of it there, you can treat this massive, scalable storage entity as an active archive, or private or hybrid cloud for an application, or a shared environment for academic research, or have it function like content distribution. So this is what we are hearing also.”

There will be filers around for a long time yet, of course, because untold and uncounted applications require a POSIX-compliant file system to access data and need to be able to update that data in place through the file system, which typically tops out at millions of files. The trick to object storage is that it scales to trillions of objects because objects are immutable, and its storage capacity scales linearly as nodes are added. File systems scale far worse: generally speaking, the larger a file system gets, the worse it performs and the more expensive it gets.
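To make the contrast concrete, here is a toy object store with a flat namespace and write-once objects. It is purely illustrative: using a SHA-256 content hash as the object ID and hash-based node placement are assumptions for the sketch, not how WOS or S3 assign identifiers or place data. Because there is no directory tree and no in-place update, finding an object is a hash computation rather than a walk through shared metadata, which is one reason such designs can grow by adding nodes.

```python
import hashlib


class ToyObjectStore:
    """Hypothetical flat-namespace store: objects are written once, never modified."""

    def __init__(self, num_nodes: int):
        # Each "node" is just a dict here; a real system would use separate servers.
        self.nodes = [dict() for _ in range(num_nodes)]

    def _node_for(self, oid: str) -> dict:
        # Placement is a pure function of the ID, so no central directory is consulted.
        return self.nodes[int(oid, 16) % len(self.nodes)]

    def put(self, data: bytes) -> str:
        oid = hashlib.sha256(data).hexdigest()
        self._node_for(oid).setdefault(oid, data)  # existing objects are never overwritten
        return oid

    def get(self, oid: str) -> bytes:
        return self._node_for(oid)[oid]


store = ToyObjectStore(num_nodes=4)
v1 = store.put(b"quarterly report, draft 1")
# There is no update(): a changed document is simply a new object with a new ID.
v2 = store.put(b"quarterly report, draft 2")
print(v1 != v2, store.get(v1))
```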

As usual, we can look to HPCers and hyperscalers as a precursor to the possible enterprise storage future.

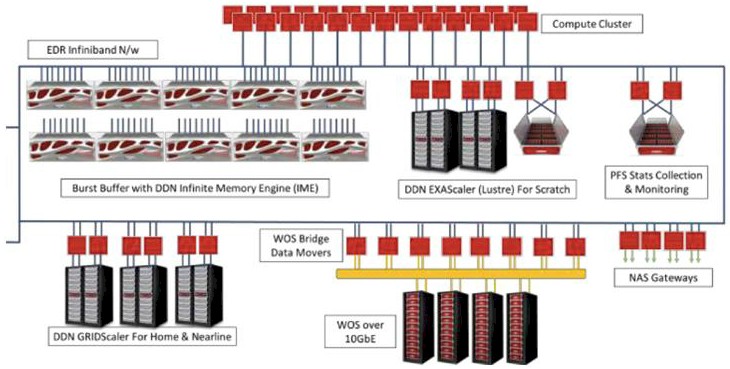

“If you look at the very highest end of our customer base, in the HPC centers, we are already seeing people designing for an environment that is not subject to the locking constraints of a POSIX-based file system,” Shepherd tells The Next Platform. “Today it is impractical to throw out the parallel file system approach. There are too many applications that need a POSIX paradigm. But there are people that have hit the limits of what we can do under a POSIX paradigm. So what we are able to do with the Infinite Memory Engine burst buffer is remove those locking constraints on the front end and on the back end have the burst buffer align the I/O and put it into a form that is acceptable to the parallel file system. The applications write the data the way they are used to. This is an interim step, but we can then take another step and say on the back end you have object storage and on the front end instead of POSIX you write to something else. You end up with a translation layer between what we do today and what we will want to do tomorrow with all of that data we are generating.”
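The I/O alignment Shepherd describes can be illustrated in miniature: accept small writes in whatever order the application issues them, then hand the back end only block-aligned chunks. This is a generic write-coalescing sketch, not IME's implementation. It assumes the writes do not overlap and together cover a contiguous region starting at offset 0, and the block size is arbitrary.

```python
def coalesce_writes(writes, block_size):
    """Reorder small (offset, bytes) writes and re-emit them as block-aligned chunks.

    Assumes non-overlapping writes that together cover [0, total) with no gaps.
    """
    buf = bytearray()
    for offset, data in sorted(writes, key=lambda w: w[0]):
        if offset != len(buf):
            raise ValueError(f"gap or overlap at offset {offset}")
        buf.extend(data)
    # Every chunk starts on a block boundary; only the last one may be short.
    return [(start, bytes(buf[start:start + block_size]))
            for start in range(0, len(buf), block_size)]


# Small writes arriving out of order, as several processes might issue them.
incoming = [(6, b"GH"), (0, b"AB"), (2, b"CDEF"), (8, b"IJKL")]
for offset, chunk in coalesce_writes(incoming, block_size=4):
    print(offset, chunk)
```

The back end sees three aligned 4-byte writes instead of four ragged, out-of-order ones; a real burst buffer does this at far larger block sizes and across many clients.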

In a sense, all of the other layers of storage, where systems actually chew on data, will be dwarfed by the object storage, which is where everything will eventually end up in this scenario. DDN has a number of different ways of interfacing its file-based products to object storage – such as a NAS gateway or a WOS bridge between object and parallel file systems – and at many customers, the ratio in the chart above will be more like a hundred racks of WOS object storage instead of the four racks shown. The WOS bridge stubs into the parallel file system and only requires these filers to be large enough to host the hottest data that applications require. (We think there could be tape libraries with object access, too.)
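A stub-based tier along the lines of the WOS bridge can be sketched as follows: files that have gone cold are copied to object storage and replaced by a stub holding the object ID, and a read of a stubbed file recalls the data from the object store. The idle-time policy, the class and function names, and the in-memory store are all hypothetical; the bridge's actual policy engine is not described here.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, List, Optional


class InMemoryObjectStore:
    """Stand-in for an object store: put() returns an ID, get() returns the bytes."""

    def __init__(self):
        self._objects: Dict[str, bytes] = {}
        self._ids = itertools.count(1)

    def put(self, data: bytes) -> str:
        oid = f"obj-{next(self._ids)}"
        self._objects[oid] = data
        return oid

    def get(self, oid: str) -> bytes:
        return self._objects[oid]


@dataclass
class FileEntry:
    path: str
    last_access_day: int
    data: Optional[bytes] = None    # present while the file lives on the file system
    stub_oid: Optional[str] = None  # set once the data has moved to object storage


def tier_cold_files(files: List[FileEntry], store: InMemoryObjectStore,
                    today: int, max_idle_days: int) -> List[str]:
    """Move files idle longer than max_idle_days to object storage, leaving stubs."""
    moved = []
    for f in files:
        if f.data is not None and today - f.last_access_day > max_idle_days:
            f.stub_oid = store.put(f.data)
            f.data = None
            moved.append(f.path)
    return moved


def read_file(f: FileEntry, store: InMemoryObjectStore) -> bytes:
    """Serve hot files locally; recall stubbed files from object storage."""
    if f.data is not None:
        return f.data
    return store.get(f.stub_oid)


store = InMemoryObjectStore()
files = [FileEntry("/scratch/run42/out.h5", last_access_day=95, data=b"hot"),
         FileEntry("/scratch/run07/out.h5", last_access_day=10, data=b"cold")]
moved = tier_cold_files(files, store, today=100, max_idle_days=30)
print(moved)
```

Only the hot file keeps its bytes on the parallel file system, which is why the filer tier can be sized for the hottest data alone.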

“Object storage is in the background, and people don’t even really know they are using object storage,” says King. “If you look at that pie chart for storage, judging from the sheer number of people who are using Amazon Web Services for their applications, let alone file sharing, that pie chart slice for object storage is at least 50 percent in my mind and is growing so fast in comparison to file storage that it will soon be 80 percent or 90 percent.”

As goes AWS on the public cloud, so will go DDN, we presume, among its own enterprise, service provider, and hyperscale customers.
