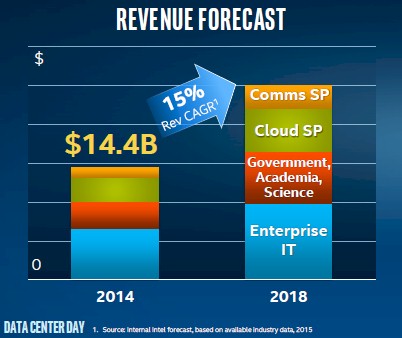

The only number you need to remember about Intel’s Data Center Group business for the next three years is this one: 15 percent. That is the compound annual growth rate that general manager Diane Bryant says the company can deliver “out through time,” although the presentations by the Data Center Group’s top brass only made comparisons between 2014 and 2018.

To deliver such growth – and consistently year after year, given the uncertainties of the global economy and the tectonic shifts taking place in the datacenters of the world – would be a truly remarkable feat. Assuming that Intel makes its numbers for 2015 and grows Data Center Group revenues by 15 percent, the company will have averaged 17.7 percent growth, with a few blips up and down from that average, and, even more significantly, it will have grown its operating income at nearly twice that rate.
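To put the 15 percent target in perspective, here is a minimal Python sketch of the compounding math. It assumes a 2014 base of about $14.4 billion, which is the total of the segment estimates we read off Intel’s unlabeled chart later in this story. The helper names `project_revenue` and `implied_cagr` are ours and purely illustrative.

```python
def project_revenue(base: float, rate: float, years: int) -> float:
    """Compound a starting revenue figure at a fixed annual growth rate."""
    return base * (1.0 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


BASE_2014 = 14.4  # $ billions; our chart-based estimate, not an Intel slide figure
CAGR = 0.15       # Bryant's target compound annual growth rate

for year in range(2014, 2019):
    print(year, round(project_revenue(BASE_2014, CAGR, year - 2014), 1))

# Cross-check: what annual rate takes $14.4B to a $25B 2018 target?
print(f"{implied_cagr(BASE_2014, 25.0, 4):.1%}")
```

Compounding from that base gives roughly $16.6 billion for 2015 and $25.2 billion for 2018, in line with the 2015 and 2018 figures discussed in this story. Going the other way, getting from $14.4 billion to $25 billion in four years takes about 14.8 percent a year.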

Bryant did not mention this statistic at a recent Data Center Day event held for Wall Street analysts, but any time a company can grow operating earnings twice as fast as revenues – and, for that matter, grow a hardware business at anything approaching 15 percent in a mature market – that company is on to something. On top of that, the company has plans to expand its total addressable market: accelerating the buildout of public and private clouds with its Cloud for All initiative, getting a bigger chunk of the datacenter networking market proper through its Omni-Path and Ethernet products, and transitioning the fleets of specialized gear used by telecommunications and service providers to Xeon and, we think, possibly hybrid Xeon-FPGA architectures.

But make no mistake about it. Bryant was clear that Intel will also need enterprises to keep upgrading their own server fleets, particularly since they account for somewhere north of 40 percent of the Data Center Group’s revenues these days. We suspect that enterprises also account for a slightly higher share of the Data Center Group’s operating income, given the volume leverage that hyperscalers and cloud builders have and that most enterprises do not.

Intel had a blip in enterprise sales in the second quarter, but the first quarter exceeded expectations, so Bryant thinks Intel can still hit its goal of boosting Data Center Group revenues by 15 percent this year. She added that China is part of the problem: Chinese customers buy a lot of four-socket and eight-socket Xeon iron and have for years, but those purchases have slowed. (The word we have heard is that many Chinese companies bought half-populated four-socket machines, leaving room to add CPUs in the future. Maybe Chinese hyperscalers are finally doing the math on this one and realizing they do not upgrade processors as much as they thought they might.) “We are keeping our eye on that, but the underlying growth drivers remain the same, and we are still confident on the year,” Bryant affirmed.

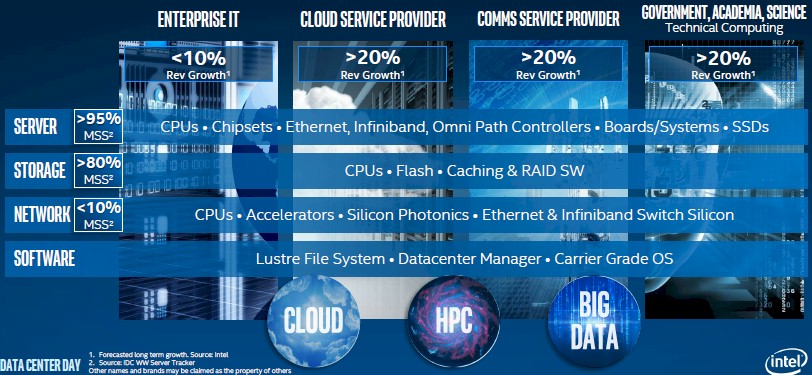

Here is how Bryant characterized Data Center Group’s current market share and growth trajectories based on product sets and markets:

Processors based on the X86 architecture account for an astounding 99.1 percent share of server shipments and 80.1 percent of revenues, according to the latest stats from box counter IDC. Intel’s chart above merely says it has more than 95 percent share, and Bryant said it was 96 percent in her presentation, which occurred just after IDC released its Q2 2015 server statistics. The point is, Intel has this one nailed, and the fact that non-X86 machines still command about 20 percent of server revenues is a testament to the staying power of the applications that run on those machines – mostly in large enterprises, mostly running big databases and the back office applications that run on them. (Not exclusively, mind you.)
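The gap between shipment share and revenue share says a lot about what non-X86 boxes cost. Here is a back-of-envelope Python sketch, assuming IDC’s two share figures are exact and ignoring the product mix within each camp; the resulting ratio is our arithmetic, not an IDC statistic.

```python
# IDC figures as quoted above (share of server shipments and of server revenues).
x86_unit_share = 0.991
x86_revenue_share = 0.801

non_x86_unit_share = 1.0 - x86_unit_share        # about 0.9 percent of boxes
non_x86_revenue_share = 1.0 - x86_revenue_share  # about 19.9 percent of dollars

# Revenue share per unit of shipment share; 1.0 would mean an average-priced box.
x86_relative_price = x86_revenue_share / x86_unit_share
non_x86_relative_price = non_x86_revenue_share / non_x86_unit_share

# How many times more revenue an average non-X86 box brings in than an X86 box.
premium = non_x86_relative_price / x86_relative_price
print(f"non-x86 share of units:   {non_x86_unit_share:.1%}")
print(f"non-x86 share of revenue: {non_x86_revenue_share:.1%}")
print(f"average non-x86 box brings in ~{premium:.0f}x an average x86 box")
```

On these numbers, an average non-X86 server generates something like 27 times the revenue of an average X86 machine, which fits the picture of big iron running big databases.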

Back in the mid-2000s, Intel’s share of processing for storage arrays was in the range of 20 percent or so, but that share has grown so fast that Intel now has more than 80 percent shipment market share for the silicon used as the controller brains in storage arrays. While many NAS and SAN storage arrays were based on Power, MIPS, or proprietary chip architectures only a decade ago, almost all of them have been ported over to Xeon chips, and the new breed of upstarts selling hybrid disk-flash or all-flash arrays use Xeons as their controllers. (There are a few exceptions, but not many.) A fairly large amount of storage capacity is also being sold on beefy servers running open source object, block, and file systems: in the latest quarter, original design manufacturers accounted for 13.3 exabytes of storage, or about 43.8 percent of the total 30.3 exabytes of capacity shipped. The Intel platform is benefiting from this shift, but again, the money does not follow the capacity. Those ODM server-storage hybrids only generated around $1 billion in revenues, and when you do the math, this ODM storage costs about one-sixth as much as storage with a brand on it.
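Here is a rough Python sketch of the “do the math” step on ODM storage, using the rounded figures quoted above. The per-gigabyte branded price is only what the one-sixth ratio implies; it is not a measured figure, and decimal capacity units are an assumption on our part.

```python
ODM_EXABYTES = 13.3         # capacity shipped by ODMs in the quarter
TOTAL_EXABYTES = 30.3       # total capacity shipped in the quarter
ODM_REVENUE = 1.0e9         # "around $1 billion" in ODM revenues
BRANDED_COST_MULTIPLE = 6   # from the "about one-sixth the cost" estimate

GB_PER_EXABYTE = 1e9        # assumes decimal units, as capacity is usually counted

odm_share = ODM_EXABYTES / TOTAL_EXABYTES
odm_cost_per_gb = ODM_REVENUE / (ODM_EXABYTES * GB_PER_EXABYTE)
# Implied, not observed: what branded storage would cost per GB at 6x the ODM price.
implied_branded_cost_per_gb = odm_cost_per_gb * BRANDED_COST_MULTIPLE

print(f"ODM share of capacity: {odm_share:.1%}")
print(f"ODM cost per GB: ${odm_cost_per_gb:.3f}")
print(f"implied branded cost per GB: ${implied_branded_cost_per_gb:.2f}")
```

With these rounded inputs the ODM share works out to about 43.9 percent, a hair off the 43.8 percent figure, presumably because of rounding in the underlying data. ODM capacity comes in at roughly 7.5 cents per gigabyte, which would put branded capacity at around 45 cents per gigabyte if the one-sixth ratio holds.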

Intel’s share of the global networking market is at 8 percent, Bryant said, and presumably this is some measure of shipments to be consistent with the other data presented for servers and storage. With such a small share, networking is the real growth opportunity for Intel, but incumbents like Cisco Systems and Hewlett-Packard, which still make their own network ASICs for some of their products, and rivals such as Broadcom and Cavium Networks (which are driving the new 25G Ethernet standards) and Mellanox Technologies (which is out in front with 100 Gb/sec Ethernet and InfiniBand products) are not going to let Intel take their business without a fight.

“We love the old days of enterprise IT, very predictable buying patterns. We have twenty years of seasonal buying patterns of enterprise IT, and so we could nail each quarter with great predictability. The cloud market, the public cloud service provider market, is much harder to predict, and they will even say they struggle to predict. They’re tuning their algorithms all the time. They’re deploying more infrastructure all the time. They’re trying to gauge how much capacity they need because it’s a big capex spend. They don’t want to spend without the demand being there. So they even have trouble anticipating what next quarter’s demand is going to be.”

Expansion in storage and networking is not limited to the corporate datacenter; it also extends to the parallel universe where service providers still use mountains of proprietary gear to handle voice, data, video, and other traffic on behalf of consumers, governments, and corporations.

It is hard to draw lines between the categories, and we all know this. “When we say the move to cloud computing, we mean that as a computing architecture,” explained Bryant. “So it is the move to cloud computing of the big public cloud service providers, enterprise moving to private clouds, the communications industry moving to a cloud-based infrastructure. So we mean it as a computing architecture that is pervasive.”

For the past year, Intel has been talking up Jevons Paradox, which basically says that the consumption of a commodity, like coal or computing, can behave elastically if it is made easier to consume. Rather than keeping their usage of the resource the same, people find other uses for it even as their use of that resource becomes more efficient. As we have pointed out before, the worldwide server base has increased by about a third in the past decade, but the amount of compute in a server has gone up by several orders of magnitude. This is more or less elastic behavior. Intel is not addressing whether this can hold forever; it wants to accelerate consumption rather than wait for cloud hardware spending to double between 2014 and 2019. Intel wants to get tens of thousands of organizations worldwide onto cloudy infrastructure sooner than that.
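Two pieces of arithmetic sit behind this paragraph, sketched here in Python. First, doubling over the five years from 2014 to 2019 works out to a specific annual rate. Second, total installed compute is the product of server count and compute per server. The 1,000x per-server factor is a hypothetical stand-in for “several orders of magnitude,” not a measured number.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# Cloud hardware spending doubling between 2014 and 2019 (five years).
print(f"doubling over 5 years = {implied_cagr(1.0, 2.0, 5):.1%} per year")

# Jevons-style arithmetic: total compute = number of servers x compute per server.
SERVER_BASE_GROWTH = 4 / 3           # "increased by about a third"
COMPUTE_PER_SERVER_GROWTH = 1_000.0  # hypothetical stand-in for "several orders of magnitude"
total_compute_growth = SERVER_BASE_GROWTH * COMPUTE_PER_SERVER_GROWTH
print(f"installed compute grew ~{total_compute_growth:.0f}x")
```

Doubling in five years is about 14.9 percent a year. And if demand for compute were inelastic, a thousandfold gain per box would have let the server base shrink; instead it grew, which is the elastic behavior Jevons described.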

Don’t get the wrong impression. The cloud (or cloud plus hyperscale, as we use those definitions here at The Next Platform) has not been a bad business for Intel. Up until now, Intel has identified the big four in the United States – Google, Amazon, Facebook, and Microsoft – and the big three in China – Baidu, Alibaba and Tencent – as the key drivers in its cloud segment. This time, Bryant said that there are more like ten biggies, with JD.com (an online retailer) and Qihoo 360 (a provider of antivirus and security software) added to the mix from China as bulk buyers of compute. The interesting bit is that on a worldwide basis, about two-thirds of cloud infrastructure based on Intel technology is going into consumer-facing clouds and the remaining third is for running enterprise-class services and raw infrastructure for their own applications. “That mix has been pretty constant over time,” Bryant said.

As you can see in the chart above, the enterprise IT sector is the laggard, and is forecast to grow at under 10 percent. The other three pillars of the Intel business – cloud service provider, comms service provider, and technical computing – are all growing at more than 20 percent, making up a lot of the difference to hit that 15 percent growth figure.

Making forecasts is a tough business, and back in 2011, when Kirk Skaugen was running the Data Center Group, the company was projecting that it would hit $20 billion in revenues by 2015. (It took Intel a decade between 2000 and 2010 to hit $10 billion in revenues for this key part of its business.) This forecast was subsequently revised by Bryant in 2013 after an enterprise spending slowdown that we think is absolutely tied to cloud computing architectures (companies buying public cloud capacity and making their own infrastructure more orchestrated and efficient). If Intel hits its 15 percent growth target for 2015, it should hit $16.6 billion in sales for Data Center Group and if all other trends persist, it should bring in $8.4 billion in operating income from these products.
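The $16.6 billion and $8.4 billion figures imply an operating margin, and the “operating income grows twice as fast as revenue” pattern mentioned earlier has a mechanical consequence for that margin. This Python sketch is a hypothetical extrapolation, assuming 15 percent revenue growth and 30 percent operating income growth continue unchanged. It is an illustration of the arithmetic, not a forecast by us or by Intel.

```python
REVENUE_2015 = 16.6           # $ billions, if the 15 percent target is hit
OPERATING_INCOME_2015 = 8.4   # $ billions, if other trends persist

margin = OPERATING_INCOME_2015 / REVENUE_2015
print(f"implied 2015 operating margin: {margin:.1%}")

# Hypothetical: operating income keeps growing at twice the revenue growth rate.
revenue_growth = 0.15
income_growth = 2 * revenue_growth

m = margin
for year in range(2016, 2022):
    # Each year the margin scales by (1 + income growth) / (1 + revenue growth).
    m *= (1 + income_growth) / (1 + revenue_growth)
    print(year, f"{m:.1%}")
```

The implied 2015 margin is about 50.6 percent. Under the 2:1 assumption the margin passes 100 percent within six years, which is simply a reminder that operating income outrunning revenue by that much is a feature of a particular stretch of time and cannot persist indefinitely.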

Intel did not put numbers on its axes, but in the chart above, enterprise was about $6.7 billion in revenues in 2014, with technical computing accounting for about $3.3 billion, cloud service providers for about $3.2 billion, and communications service providers for $1.2 billion, adding up to $14.4 billion. Looking ahead to 2018, Intel is forecasting that it will hit $25 billion in sales, with enterprise IT accounting for just under $10 billion, revenues from technical computing nearly doubling, comms service providers more than doubling, and cloud service providers growing more modestly but still up by perhaps 75 percent. (It is hard to estimate from these charts, but we tried.)
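Here is a Python sketch that checks the segment estimates above and converts the rough 2018 multipliers into annual rates. The 2018 values are our reading of an unlabeled chart: “just under $10 billion” is taken as $9.9 billion and “more than doubling” as exactly 2x, both of which are assumptions.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# 2014 segment revenues ($ billions), eyeballed from Intel's chart.
segments_2014 = {
    "enterprise": 6.7,
    "technical computing": 3.3,
    "cloud service providers": 3.2,
    "comms service providers": 1.2,
}
# Rough 2018 multipliers from the same chart (our reading, not Intel's numbers).
multipliers_2018 = {
    "enterprise": 9.9 / 6.7,          # "just under $10 billion"
    "technical computing": 2.0,       # "nearly doubling"
    "cloud service providers": 1.75,  # "up by perhaps 75 percent"
    "comms service providers": 2.0,   # "more than doubling" (treated as a lower bound)
}

total_2014 = sum(segments_2014.values())
print(f"2014 total: ${total_2014:.1f}B")

total_2018 = 0.0
for name, base in segments_2014.items():
    end = base * multipliers_2018[name]
    total_2018 += end
    print(f"{name:<24} ${end:4.1f}B  {implied_cagr(base, end, 4):5.1%}/yr")
print(f"2018 total: ${total_2018:.1f}B")
```

The eyeballed segments reproduce the $14.4 billion total, and the 2018 readings sum to roughly $24.5 billion, a bit short of $25 billion because two of the readings are treated as lower bounds. At these readings the three faster-growing segments come out at roughly 15 to 19 percent a year, so small changes in how the bars are read move the implied growth rates by several points.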

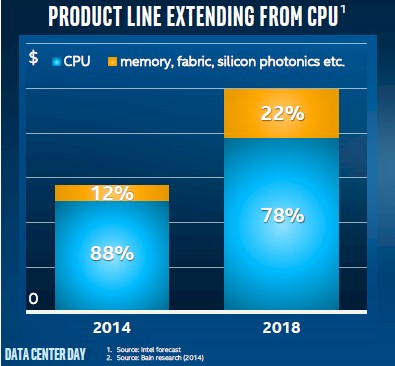

The levers that Intel will be pulling to hit its growth forecast are many. The first is to ride the transition in the Xeon product line as more and more customers forgo the basic SKUs in its lineup and move up to standard SKUs that have more features and also cost more. Compared to 2010, about 80 percent of the CPU volume sold by Intel has “moved up the stack,” with the top of the stack being the 39 custom CPUs that the company has etched for hyperscalers and system suppliers at a premium. Intel already has ten custom “Broadwell” Xeon designs, with Facebook having had input on the Xeon D processor for microservers that was revealed in March and that will begin shipping in volume shortly.

Intel will also pull the cloud lever hard to accelerate cloud transitions at the 50,000 or so large enterprises in the world. Beyond that, it aims to boost its share in the networking space, convert comms service providers to server-based Xeon gear instead of fixed-function appliances, and sell more flash and now 3D XPoint memory in servers, storage, and networking. The way Intel sees it, the advent of 3D XPoint memory alone expands its total addressable market from $37 billion for just logic chips to $49 billion including the logic and memory applicable to its markets.

While Intel will still be predominantly a vendor of processors and chipsets, the company is forecasting that by 2018 about $5.5 billion of its revenues will come from chipsets, system boards, Ethernet and Omni-Path controllers and switches, silicon photonics interconnects, and whole machines coming out of its Systems Group. The remaining $19.5 billion will come from CPUs like the Xeon E5s and E7s (although those product categories will probably not exist by then) and possibly FPGAs once it closes its $16.7 billion acquisition of Altera. (It was not clear from Bryant’s presentation if FPGAs were classified as CPU or Other.)
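The revenue mix and the addressable market figures from the last two paragraphs reduce to a few ratios, sketched here in Python. The inputs are the figures quoted above; the percentages are our arithmetic, not numbers Intel presented.

```python
REVENUE_2018 = 25.0   # $ billions, Intel's 2018 target
NON_CPU_2018 = 5.5    # chipsets, boards, Ethernet/Omni-Path, photonics, systems
CPU_2018 = REVENUE_2018 - NON_CPU_2018

TAM_LOGIC = 37.0             # $ billions, logic chips only
TAM_LOGIC_AND_MEMORY = 49.0  # $ billions, logic plus memory (3D XPoint, per Intel)

print(f"CPU revenue: ${CPU_2018:.1f}B ({CPU_2018 / REVENUE_2018:.0%} of the total)")
print(f"non-CPU revenue: {NON_CPU_2018 / REVENUE_2018:.0%} of the total")

tam_gain = TAM_LOGIC_AND_MEMORY - TAM_LOGIC
print(f"memory adds ${tam_gain:.0f}B, or {tam_gain / TAM_LOGIC:.0%} more TAM")
```

So roughly 78 percent of 2018 revenue would still come from processors, and counting memory grows the addressable market by about a third.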

Intel’s strategy involves a lot of different technologies and divisions, and we will be analyzing them since they affect many aspects of The Next Platforms that companies are building.

I’ll bet that those in the x86 camp are waiting to see what AMD’s Zen/Greenland GPU HPC/workstation APUs on an interposer are going to cost relative to Intel’s more expensive solutions before they start planning for the possibility of going to Power8-based solutions from the third-party licensees, or IBM.

The most interesting part is the competitive pressure that will come from Power8, at maybe a higher cost but a better performance/$ ratio, and from AMD’s Zen x86-based server APU SKUs, which may not match Intel’s CPU cores IPC for IPC but will cost less and be able to offer a better performance/$ ratio, coming more from a lower price point than Intel’s usual margins will allow. The IBM SKUs may cost more than Xeon but they perform better, while the third-party licensed Power8 SKUs may be more price competitive with Xeon.

AMD appears to be improving its Zen x86 IPC with SMT and other improvements, but AMD is also integrating into its announced new HPC/workstation APUs more of its HSA-aware GPU/GCN asynchronous compute features, which will make AMD’s Zen-based HPC/workstation APUs an even more attractive choice. AMD will be further refining the ACEs in its GCN/future Greenland/Arctic Islands GPUs and pairing them with its upcoming Zen CPU cores, with these APUs on an interposer implementing even more of the HSA 1.0+ feature sets, along with the new HBM, and possibly some FPGA capabilities added to those HBM stacks.

The pressure is on the incumbent to maintain its market share, and even the Xeon Ds may face more competition from new x86 designs as well as from custom ARM cores. Could AMD be baking up some custom SMT-capable ARMv8-A, GCN-accelerated competition in an ARM server APU? It appears that there may even be some 64-bit MIPS-based, HSA-inspired competition coming from the Far East, along with many licensed Power8 systems.

Well, I don’t think people are waiting to see what AMD is going to cost so much as whether it is going to perform at all or be anywhere near competitive with Intel in any performance metric. I wouldn’t get my hopes up. Bulldozer and everything that followed on that same architecture was a disaster. Why they dragged that awful architecture out for more than six years in total is really beyond me. That shows there is no strategic thinking at AMD at all.

But all of the old AMD management is gone, and more realignment of AMD’s management toward experienced product engineers is in progress as I write this post. AMD is even rumored to be getting a large equity capital type of investment to help with its longer-term capital issues. The GPU compute and HSA ability baked into the APU’s GPU, and into the discrete GPUs, is going to offer more in the way of compute than CPU cores alone. More computing is moving onto the GPU’s massive ranks of FP units, many times more FP resources than even the 18-core Xeons can devote to the task. Those APUs are becoming CPU/GPU vector processors for all sorts of workloads to be run on the ACEs of AMD’s GCN GPU cores.

The era of CPU-only for any and all compute workloads is drawing to a close, from HPC/server/workstation SKUs down to mobile device SoC SKUs, whose makers are also members of the HSA Foundation alongside and equal to AMD. One need only look at Imagination Technologies’ latest PowerVR series GPUs to see that GPGPU is already there on mobile devices too; ditto for ARM’s Mali, etc. This pathological obsession with the CPU has to be gotten over: computing is moving forward without the need for, or dependency on, just the CPU for any and all types of computational workloads. Expect AMD’s Greenland/Arctic Islands GPUs to have even more asynchronous compute abilities to run the number crunching without any CPU assistance, or with very limited CPU assistance for some limited-case computational scenarios. GPUs will be doing more of the general-purpose computation that was done only on the CPU in the past. Get over the CPU as the main provider of number-crunching ability; those days are gone for good.

Yeah, yeah, we’ve heard that APU story so many times, and so far it has zero to show for it. Actually, Intel was even ahead of AMD in integrating the GPU on the same die.

We’ve seen so many management changes at AMD over the last six years and the effect was basically zero.

AMD has to execute, not just show us PowerPoint slides, before anyone takes them seriously again.

I also disagree with your era statement. CPU vector units become larger and larger every generation and will slowly morph in the same direction; in the long run, the benefits of the GPU will shrink and shrink.

Especially if CPUs integrate large amounts of first- and second-level cache like KNL does. GPUs’ integer performance is abysmal, and out-of-order execution and memory gathering are both still very poor on GPU architectures.

I think the future for really specific, algorithm-tailored compute lies in fully customizable parts, like an FPGA directly on the CPU die, as this will outperform any GPU or APU design; those are still too general, and not every problem adapts well to those architectures.

You are talking from a gaming git’s point of view: nothing but negatives and a little too much brand loyalty. We are talking about AMD’s server/HPC APUs that will be on the interposer and come with an entirely different coherent connection fabric etched out on the interposer’s silicon. And no, Intel’s GPUs are not as fully integrated with their CPUs as AMD’s GPUs are. Does Intel’s integrated product even have full unified memory addressing between its CPU cores and GPUs yet? I certainly do not see Intel doing what AMD and Imagination Technologies are doing by adding virtualization technologies to their GPUs. You completely fail to see that Intel’s integrated graphics is rather sparsely populated with execution resources compared to AMD’s or Nvidia’s integrated products.

Intel tends to tune its graphics more for gaming workloads at the expense of other graphics and compute workloads, so for the workstation GPU market Intel’s graphics is not even considered. Even AMD’s Carrizo APUs are able to do more compute-wise than Intel’s overpriced SKUs! AMD did something very innovative starting with the Carrizo line of products: it took the high-density design libraries from its GPU lines and used them to design a low-power CPU/APU variant for mobile laptop devices. In doing this, AMD was able to get more circuit density on the already mature 28nm fabrication node and have more space available for GPU and other resources. We are talking about an APU designed for low-power products anyway, so using the higher-power, lower-density design libraries normally associated with CPU tapeouts is a bit of overkill, and even Intel has to downclock and power-gate its U series SKUs to fit the thermal envelope of mobile devices.

Intel’s high-end graphics is never going to be used on any of its SKUs that ship in a price range comparable to AMD’s or even Nvidia’s mobile SKUs. So all this grandstanding over Intel’s highest-priced graphics and gaming-only benchmarks is not going to convince those who use GPUs for other graphics or compute workloads to adopt Intel’s kit. When other graphics software and non-gaming rendering workloads are run on these devices, it’s still the AMD/Nvidia/Imagination Technologies (PowerVR) powered solutions that are the better all-around solution for graphics and HSA-style GPGPU compute.

AMD’s patent filings and exascale grant-funded R&D point to AMD including some FPGA resources on the HBM memory stacks, sandwiched between the HBM control/memory interface die at the bottom of the stack and the memory dies stacked above. So not only is AMD going to have complete HPC/workstation/server APU SKUs with complex coherent interconnect fabrics on the interposer’s substrate, but there will be FPGA compute distributed among the HBM memory stacks, right in the die stack, to interface directly with the HBM dies.

This level of integration of CPU/GPU and FPGA/other resources on AMD’s APUs is currently unique to AMD, especially the use of an interposer to host the CPU/GPU/FPGA and HBM memory on AMD’s new APUs for the server/workstation/HPC market. The silicon interposer’s ability to be etched with tens of thousands of individual traces, which could never be done with a PCB type of process, will allow much wider coherent interconnect fabrics to connect the CPUs/GPUs/FPGAs to the HBM memory and to each other, in ways that even the fastest PCI interconnects cannot. Interposer technology is going to change the way future systems are made and assembled. Those large monolithic dies with their thousands of functional blocks can now be broken up into more manageable sizes and placed on an interposer without losing the wide, fast interconnect fabrics usually associated with on-die connections, thanks to the silicon interposer’s ability to support the same density of traces that any silicon substrate naturally supports.

You are not even considering the new technology that is being introduced before your knee-jerk dismissing of AMD’s efforts.

Well, that uniqueness of AMD’s hasn’t paid off so far. Carrizo barely wins any designs on any ground, especially not in the compute and server market, where AMD is completely out of the picture, no matter what scale we’re talking about. That market so far hasn’t bought into the APU hype. If it had, design makers wouldn’t be buying expensive Nvidia GPU kit instead. And I don’t think this is going to change in the near future.

Intel is not interested in GPUs for compute, as they have a different, more flexible architecture for this called Xeon Phi. Why would they want two competing designs? It makes no sense. Only AMD pursues such a suicidal strategy.

Besides, you’re wrong: Skylake opened up the eDRAM for more general-purpose use, so I wouldn’t be surprised to see it appear on some Xeon design.

You think HBM is unique? It isn’t; KNL features similar tech.

AMD has absolutely nothing, not even on the horizon, that convinces me they will get back in the game. Actually, looking at the latest developments, the splitting of their GPU department into an independent unit paints a dark picture for future APU endeavors. It wouldn’t surprise me if they gave up on that altogether, as so far it hasn’t gained them any traction in the marketplace.

No one goes IBM or AMD.

Let me describe.

IBM’s big mistake is to do something ordinary with an unusually big group. I don’t even understand why so many companies are in there hoping to make money through the data center business, BUT I know open sourcing was a bad move for IBM and doesn’t help it make $. In addition, trust me, IBM did another thing that does NOTHING meaningful or significant for IBM: it is pushing to smaller lithography, which won’t necessarily help IBM, because Intel is certainly ahead of IBM in all aspects of processors.

IBM is not that big in the enterprise; Microsoft is. Only Americans say IBM is an enterprise company, but it is smaller.

Thanks!

IBM did not open source the Power8 ISA/IP; IBM merely went with an ARM Holdings-style licensing arrangement in opening up the Power/Power8 ISA/IP. IBM makes its money mostly NOT through the hardware sales channel but through its services channel, so having the Power8 IP/ISA out there ARM Holdings-style will allow IBM to possibly offer its services to more than just those who buy IBM-branded Power-based hardware.

IBM is out of the fabrication business and out of the billions of dollars of cost related to keeping a fab running at as close to 100% capacity as possible, or losing billions on upkeep of the fab’s facilities. The chip fab economy of scale for the Power8 tapeouts is now going to be spread across the third-party foundry business, and IBM will have access to the very same process via its GlobalFoundries contract for fab services. IBM will not incur the fab plant maintenance and other costs, while it will maintain control over all the IP involved in the Power8/Power ecosystem, and IBM will still retain its world-class chip R&D facilities and its large patent portfolio of licensable and proprietary IP.

That said, it will be more of IBM’s third-party Power8 licensees (Google, etc.) that take some of Intel’s cake, along with those who look at AMD’s future HPC/workstation APUs on an interposer, replete with their own closely integrated GPU accelerators on the interposer. It’s not merely some limited PCI type of communication between the CPU and the GPU on AMD’s HPC/workstation APU SKUs, as they will come with much wider, fully coherent fabrics etched out on the interposer’s silicon substrate! So expect that things will be accelerated on the GPU portion with very little latency relative to the current methods of interfacing the CPU with PCI card-based GPU accelerators. AMD has done something on the interposer that will definitely attract attention to these APUs for the HPC/workstation market.

Simply spouting tautologies that Intel is the “Best” solution without backing things up with server benchmarks is not going to convince those in the HPC/workstation/server market of Intel’s “omnipotence”; those businesses employ engineers, actuaries, and others who will take the time to do multiple cost/benefit analyses before decisions are made. Calling IBM out as not a business-related concern, and relating the Power8 market only in terms of IBM’s usage, is not going to convince others of Intel’s ability to deliver a competitive product. The Power8 market, like the ARM-based market, is to be counted as the sum total of its adoption across the many large and small market cap interests producing products for it. Likewise, the market caps of the companies in the ARM-based ecosystem dwarf even Intel’s so-called billions put towards R&D and otherwise. We are talking entire industries here that are worth more than any one company’s market cap. ARM is huge, Power8 is more than one company now, and things are going to be very difficult for Intel, and even Microsoft, going forward.

@ContraContraRevenue. Dreams are but dreams, aren’t they? The unfortunate truth is that INTC R&D is equal to all those OpenPower, ARM partnership, and whatever-else-they-are-called organizations combined. GOOG is not as dumb as you think. If INTC comes out with a competitive product, OpenPower membership and all that comes with it will go down the tubes as quick as I flush my toilet. Also, for the moment it appears INTC has abandoned PC R&D and it is all streamed towards Data Center products. INTC has long realized that the consumer PC is no longer growing; now it is the concrete bunkers around the globe, and they are dominating that market as they do the PC. God help all involved in that segment.

Since none of IBM/ARM/NVDA etc. are manufacturers, they need to invest an extraordinary amount of money just for the hope of breaking INTC’s hegemony. Licensing will not work there, my friend; the motto, as Jerry Sanders said, is “Real men have fabs.”

You mean Apple, Samsung, Qualcomm, AMD, ARM Holdings, and MANY others combined do not spend more on CPU/GPU R&D! You do know that the many companies of the entire ARM-based industry dwarf Intel in market cap and R&D spending! Really, Intel is not that big compared to Apple or Samsung, and throw in Qualcomm and Intel is pretty small in comparison, and that’s not even considering the OpenPower licensees or IBM, top of the world in new patent filings for over two decades thanks to its yearly R&D investments. In what confined, rarefied-air environment were you brought up, blurting out such nonsensical utterances supported by completely unsubstantiated information and backed only by maniacal claims and tautologies?

@GrayMatter. I will ask you only one question, because snipers kill with only 1 bullet. Do you understand the difference between Enterprise vs Consumer?